mikelzc1990 / Nsganetv2

Licence: apache-2.0

[ECCV2020] NSGANetV2: Evolutionary Multi-Objective Surrogate-Assisted Neural Architecture Search

NSGANetV2: Evolutionary Multi-Objective Surrogate-Assisted Neural Architecture Search [slides][arXiv]

@inproceedings{lu2020nsganetv2,
  title={{NSGANetV2}: Evolutionary Multi-Objective Surrogate-Assisted Neural Architecture Search},
  author={Zhichao Lu and Kalyanmoy Deb and Erik Goodman and Wolfgang Banzhaf and Vishnu Naresh Boddeti},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2020}
}

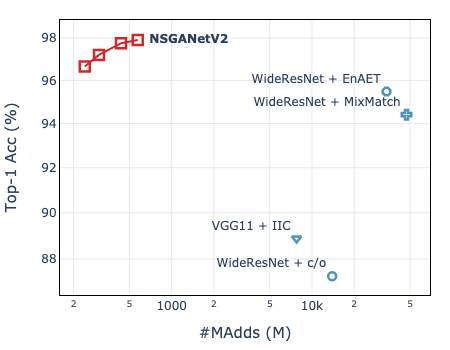

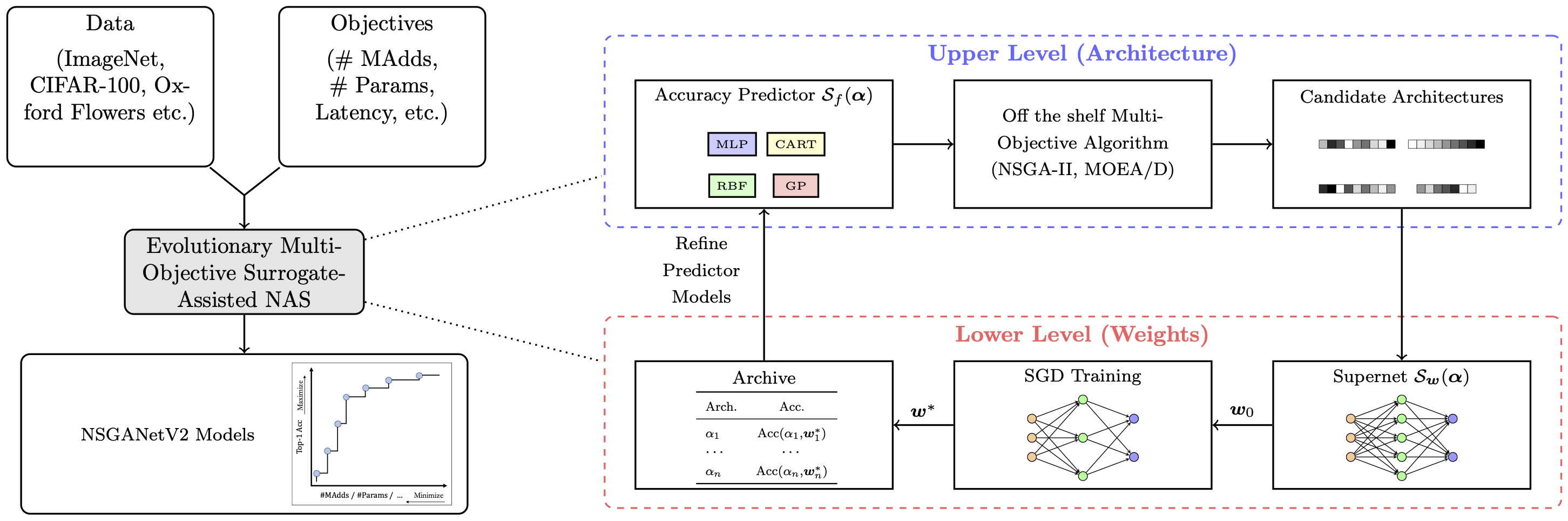

Overview

NSGANetV2 is an efficient NAS algorithm for generating task-specific models that are competitive under multiple competing objectives. It comprises two surrogates: one at the architecture level to improve sample efficiency, and one at the weights level, through a supernet, to improve gradient descent training efficiency.
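
To make "competitive under multiple competing objectives" concrete, the following is a minimal sketch of Pareto dominance for two objectives that are both minimized (for example top-1 error and FLOPs). The function names and the candidate values are hypothetical and only illustrate the concept; this is not the code used by NSGANetV2, which relies on pymoo for non-dominated sorting.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b in every objective and strictly
    better in at least one (all objectives are minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (top-1 error %, MFLOPs) pairs, made up for illustration.
candidates = [
    (24.0, 300.0),
    (22.0, 450.0),
    (25.0, 350.0),  # dominated by (24.0, 300.0)
    (20.5, 600.0),
    (22.0, 500.0),  # dominated by (22.0, 450.0)
]
front = pareto_front(candidates)
print(front)
```

The quadratic scan is fine for a handful of points; for large archives a dedicated non-dominated sorting routine is the better choice.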

Datasets

Download the datasets from the links embedded in the names. Datasets with * can be automatically downloaded.

| Dataset | Type | Train Size | Test Size | #Classes |
|---|---|---|---|---|
| ImageNet | multi-class | 1,281,167 | 50,000 | 1,000 |
| CINIC-10 | multi-class | 180,000 | 9,000 | 10 |
| CIFAR-10* | multi-class | 50,000 | 10,000 | 10 |
| CIFAR-100* | multi-class | 50,000 | 10,000 | 100 |
| STL-10* | multi-class | 5,000 | 8,000 | 10 |
| FGVC Aircraft* | fine-grained | 6,667 | 3,333 | 100 |
| DTD | fine-grained | 3,760 | 1,880 | 47 |
| Oxford-IIIT Pets | fine-grained | 3,680 | 3,369 | 37 |
| Oxford Flowers102 | fine-grained | 2,040 | 6,149 | 102 |

How to evaluate NSGANetV2 models

Download the models (net.config) and weights (net.init) from [Google Drive] or [Baidu Yun] (extraction code: 4isq).

""" NSGANetV2 pretrained models
Syntax: python validation.py \
    --dataset [imagenet/cifar10/...] --data /path/to/data \
    --model /path/to/model/config/file --pretrained /path/to/model/weights
"""

How to use MSuNAS to search

""" Bi-objective search
Syntax: python msunas.py \
    --dataset [imagenet/cifar10/...] --data /path/to/dataset/images \
    --save search-xxx \ # dir to save search results
    --sec_obj [params/flops/cpu] \ # objective (in addition to top-1 acc)
    --n_gpus 8 \ # number of available gpus
    --supernet_path /path/to/supernet/weights \
    --vld_size [10000/5000/...] \ # number of subset images from training set to guide search
    --n_epochs [0/5]
"""

- Download the pre-trained (on ImageNet) supernet from here.
- It supports searching for FLOPs, Params, and Latency as the second objective.
  - To optimize latency on your own device, you need to first construct a `look-up-table` for your own device, like this.
- Choose an appropriate `--vld_size` to guide the search, e.g. 10,000 for ImageNet, 5,000 for CIFAR-10/100.
- Set `--n_epochs` to `0` for ImageNet and `5` for all other datasets.
- See here for some examples.
- Output file structure:
  - Every architecture sampled during search has `net_x.subnet` and `net_x.stats` stored in the corresponding iteration dir.
  - A stats file, `iter_x.stats`, is generated at the end of each iteration; it stores every architecture evaluated so far in `["archive"]`, and iteration-wise statistics, e.g. hypervolume in `["hv"]` and accuracy-predictor statistics in `["surrogate"]`.
  - In case any architectures failed to evaluate during search, you may re-visit them in the `failed` sub-dir under the experiment dir.
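
The hypervolume stored per iteration measures how much of the objective space the evaluated architectures dominate, relative to a reference point. The sketch below computes it for two minimized objectives. It is an illustration only: the function name, the sample points, and the reference point are assumptions, and how the repository itself normalizes objectives or picks the reference point is not stated here.

```python
from typing import Iterable, Tuple


def hypervolume_2d(points: Iterable[Tuple[float, float]],
                   ref: Tuple[float, float]) -> float:
    """Area dominated by `points` and bounded by `ref`, with both
    objectives minimized. Points that do not improve on `ref` in
    both objectives contribute nothing."""
    inside = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_f2 = ref[1]  # lowest second objective seen so far
    for f1, f2 in inside:  # ascending in the first objective
        if f2 < best_f2:
            # New rectangle not covered by earlier points.
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


# Hypothetical normalized (error, cost) pairs; (3, 3) is dominated.
pts = [(1, 3), (2, 2), (3, 1), (3, 3)]
hv = hypervolume_2d(pts, ref=(4, 4))
print(hv)
```

A hypervolume that stops growing across iterations is a common sign that the search has stopped finding better trade-offs.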

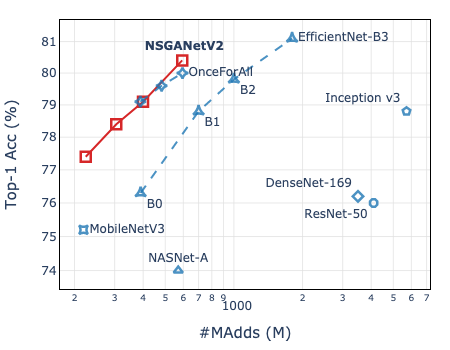

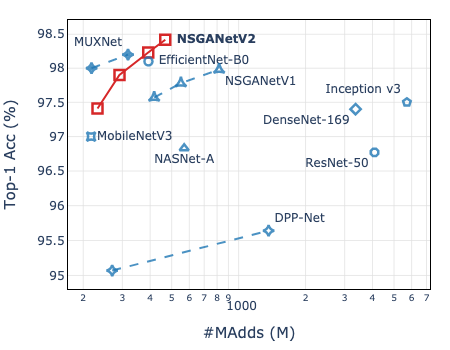

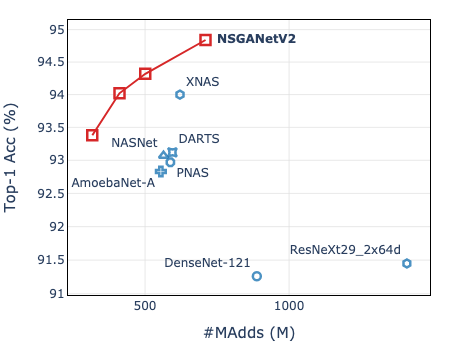

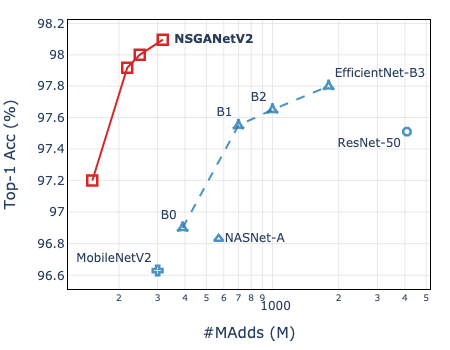

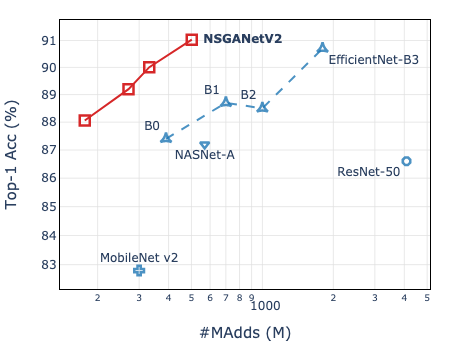

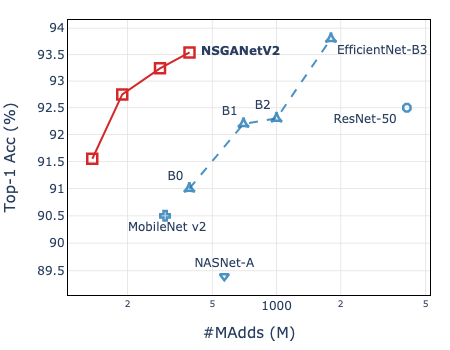

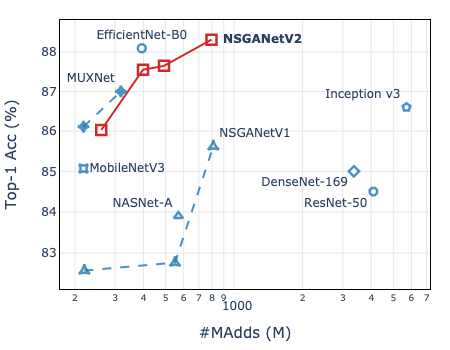

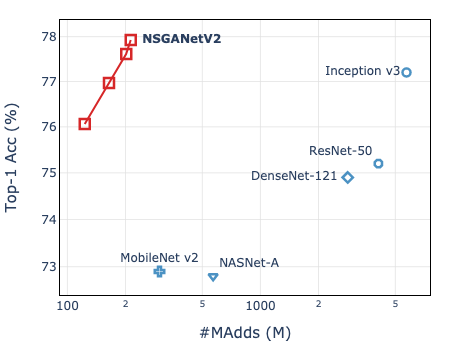

| ImageNet | CIFAR-10 |
|---|---|

How to choose architectures

Once the search is completed, you can choose suitable architectures by:

- If you have preferences, e.g. architectures with xx.x% top-1 acc. and xxxM FLOPs, pass them through `--prefer`:

""" Find architectures with objectives close to your preferences
Syntax: python post_search.py \
    -n 3 \ # number of desired architectures; the most accurate architecture will always be selected
    --save search-imagenet/final \ # path to the dir to store the selected architectures
    --expr search-imagenet/iter_30.stats \ # path to last iteration stats file in experiment dir
    --prefer top1#80+flops#150 \ # your preferences, i.e. you want an architecture with 80% top-1 acc. and 150M FLOPs
    --supernet_path /path/to/imagenet/supernet/weights
"""

- If you do not have preferences, pass `None` to argument `--prefer`; architectures will then be selected based on trade-offs.
- All selected architectures should have three files created:
  - `net.subnet`: used to sample the architecture from the supernet
  - `net.config`: configuration file that defines the full architectural components
  - `net.inherited`: the inherited weights from supernet
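
As a rough illustration of preference-based selection, the sketch below parses a preference string in the `top1#80+flops#150` form and ranks candidates by their relative distance to the preferred values. The function names, the distance measure, and the candidate numbers are assumptions made for this example; `post_search.py` may select architectures differently (for instance, it always keeps the most accurate one, which this sketch does not do).

```python
from typing import Dict, List


def parse_prefer(spec: str) -> Dict[str, float]:
    """Turn 'top1#80+flops#150' into {'top1': 80.0, 'flops': 150.0}."""
    prefs: Dict[str, float] = {}
    for item in spec.split("+"):
        name, value = item.split("#")
        prefs[name] = float(value)
    return prefs


def closest(archs: List[Dict[str, float]],
            prefs: Dict[str, float],
            n: int = 1) -> List[Dict[str, float]]:
    """Return the n candidates with the smallest sum of squared
    relative deviations from the preferred values (assumed non-zero)."""
    def distance(arch: Dict[str, float]) -> float:
        return sum(((arch[k] - v) / v) ** 2 for k, v in prefs.items())
    return sorted(archs, key=distance)[:n]


# Hypothetical candidates: top-1 accuracy in %, FLOPs in millions.
archs = [
    {"top1": 78.5, "flops": 140.0},
    {"top1": 80.2, "flops": 230.0},
    {"top1": 79.6, "flops": 155.0},
]
prefs = parse_prefer("top1#80+flops#150")
best = closest(archs, prefs, n=1)
print(best)
```

Dividing each deviation by the preferred value keeps objectives with different units (percent versus MFLOPs) on a comparable scale.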

How to validate architectures

To realize the full potential of the searched architectures, we further fine-tune from the inherited weights. The commands below assume that you have both the net.config and net.inherited files.

""" Fine-tune on ImageNet from inherited weights
Syntax: sh scripts/distributed_train.sh 8 \ # of available gpus
    /path/to/imagenet/data/ \
    --model [nsganetv2_s/nsganetv2_m/...] \ # just for naming the output dir
    --model-config /path/to/model/.config/file \
    --initial-checkpoint /path/to/model/.inherited/file \
    --img-size [192, ..., 224, ..., 256] \ # image resolution, check "r" in net.subnet
    -b 128 --sched step --epochs 450 --decay-epochs 2.4 --decay-rate .97 \
    --opt rmsproptf --opt-eps .001 -j 6 --warmup-lr 1e-6 \
    --weight-decay 1e-5 --drop 0.2 --drop-path 0.2 --model-ema --model-ema-decay 0.9999 \
    --aa rand-m9-mstd0.5 --remode pixel --reprob 0.2 --amp --lr .024 \
    --teacher /path/to/supernet/weights
"""

- Adjust learning rate as `(batch_size_per_gpu * #GPUs / 256) * 0.006` depending on your system config.
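
The learning-rate rule can be written as a small helper. This is only a sketch of the arithmetic; the function and parameter names are hypothetical and not part of the repository. With the settings from the ImageNet command (`-b 128` on 8 GPUs) it gives the `--lr .024` used there.

```python
def scaled_lr(batch_size_per_gpu: int,
              n_gpus: int,
              base_lr: float = 0.006,
              base_batch: int = 256) -> float:
    """Scale the learning rate linearly with the total batch size:
    (batch_size_per_gpu * n_gpus / base_batch) * base_lr."""
    total_batch = batch_size_per_gpu * n_gpus
    return total_batch / base_batch * base_lr


# 128 images per GPU on 8 GPUs is a total batch of 1024, i.e. 4x the base.
lr_8_gpus = scaled_lr(128, 8)
# The same per-GPU batch on 4 GPUs halves the learning rate.
lr_4_gpus = scaled_lr(128, 4)
print(lr_8_gpus, lr_4_gpus)
```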

""" Fine-tune on CIFAR-10 from inherited weights

Syntax: python train_cifar.py \
    --data /path/to/CIFAR-10/data/ \
    --model [nsganetv2_s/nsganetv2_m/...] \ # just for naming the output dir
    --model-config /path/to/model/.config/file \
    --img-size [192, ..., 224, ..., 256] \ # image resolution, check "r" in net.subnet
    --drop 0.2 --drop-path 0.2 \
    --cutout --autoaugment --save
"""

More Use Cases (coming soon)

- [ ] With a different supernet (search space).

- [ ] NASBench 101/201.

- [ ] Architecture visualization.

Requirements

- Python 3.7

- Cython 0.29 (optional; makes `non_dominated_sorting` faster in pymoo)

- PyTorch 1.5.1

- pymoo 0.4.1

- torchprofile 0.0.1 (for FLOPs calculation)

- OnceForAll 0.0.4 (lower level supernet)

- timm 0.1.30

- pySOT 0.2.3 (RBF surrogate model)

- pydacefit 1.0.1 (GP surrogate model)
