JosephKJ / Owod

Towards Open World Object Detection

Accepted to CVPR 2021 as an ORAL paper

arXiv: https://arxiv.org/abs/2103.02603

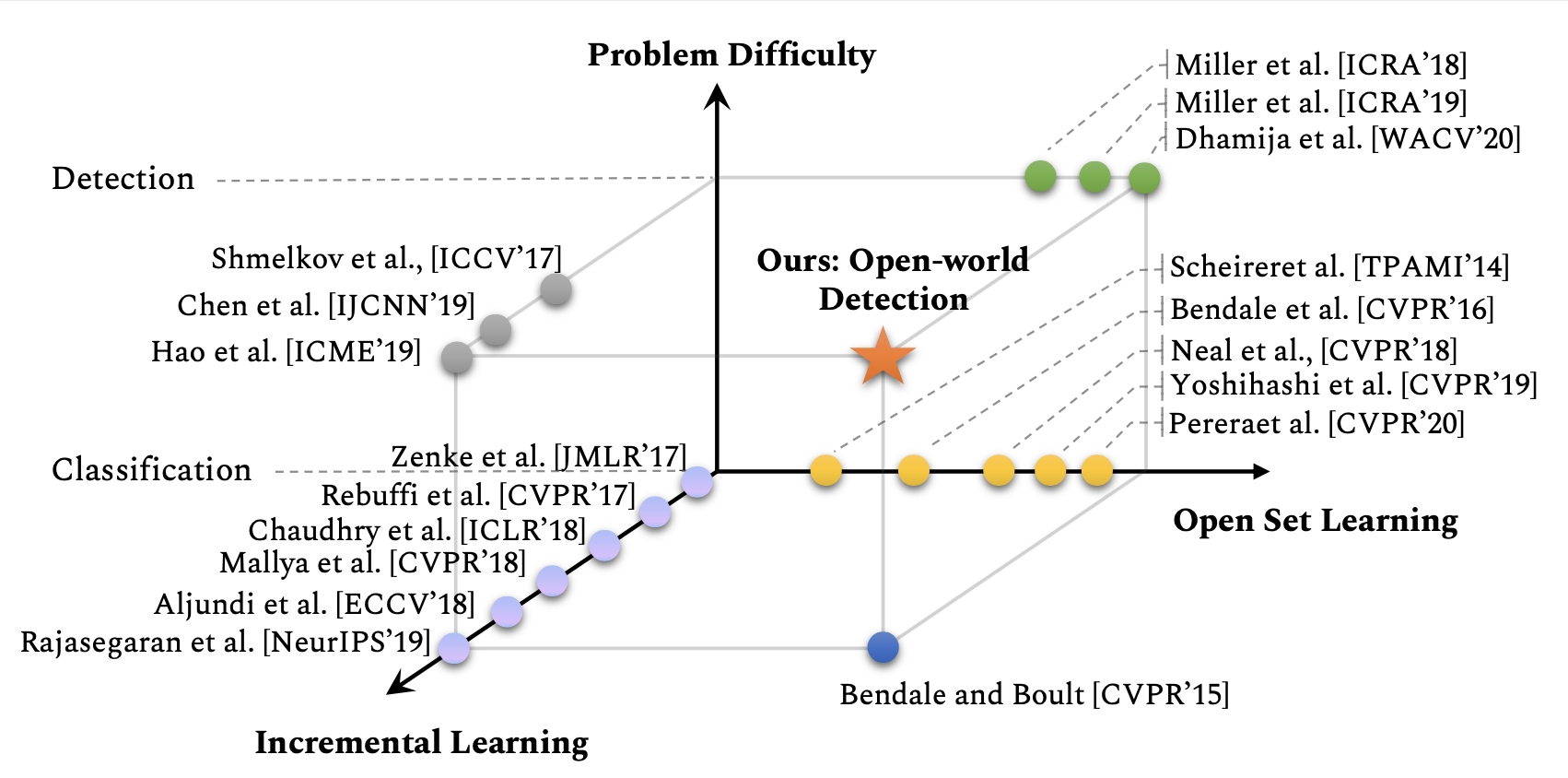

The figure shows how our newly formulated Open World Object Detection setting relates to existing settings.

Abstract

Humans have a natural instinct to identify unknown object instances in their environments. The intrinsic curiosity about these unknown instances aids in learning about them, when the corresponding knowledge is eventually available. This motivates us to propose a novel computer vision problem called: Open World Object Detection, where a model is tasked to:

- Identify objects that have not been introduced to it as 'unknown', without explicit supervision to do so, and

- Incrementally learn these identified unknown categories without forgetting previously learned classes, when the corresponding labels are progressively received.

We formulate the problem, introduce a strong evaluation protocol and provide a novel solution, which we call ORE: Open World Object Detector, based on contrastive clustering and energy based unknown identification. Our experimental evaluation and ablation studies analyse the efficacy of ORE in achieving Open World objectives. As an interesting by-product, we find that identifying and characterising unknown instances helps to reduce confusion in an incremental object detection setting, where we achieve state-of-the-art performance, with no extra methodological effort. We hope that our work will attract further research into this newly identified, yet crucial research direction.
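To make "energy based unknown identification" more concrete, here is a minimal sketch. It assumes the commonly used free-energy score over classification logits and a fixed threshold; the function names, the threshold value, and the thresholding rule itself are illustrative assumptions, not the ORE implementation, whose decision rule may differ.

```python
import math


def free_energy(logits, temperature=1.0):
    """Free energy E = -T * log(sum_i exp(logit_i / T)) of one detection.

    Assumption: lower (more negative) energy suggests a known class,
    higher energy suggests an unknown object.
    """
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    log_sum_exp = peak + math.log(sum(math.exp(s - peak) for s in scaled))
    return -temperature * log_sum_exp


def label_with_unknown(logits, class_names, energy_threshold, temperature=1.0):
    """Return the best known class, or "unknown" if the energy is too high.

    `energy_threshold` is a hypothetical hyperparameter chosen for this sketch.
    """
    if free_energy(logits, temperature) > energy_threshold:
        return "unknown"
    best = max(range(len(logits)), key=lambda i: logits[i])
    return class_names[best]


if __name__ == "__main__":
    names = ["person", "dog", "cat"]
    print(label_with_unknown([10.0, 0.0, 0.0], names, energy_threshold=-5.0))
    print(label_with_unknown([0.1, 0.0, 0.1], names, energy_threshold=-5.0))
```

A confident detection has one dominant logit and therefore a strongly negative energy, while a detection with flat logits stays close to zero and falls on the "unknown" side of the threshold.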

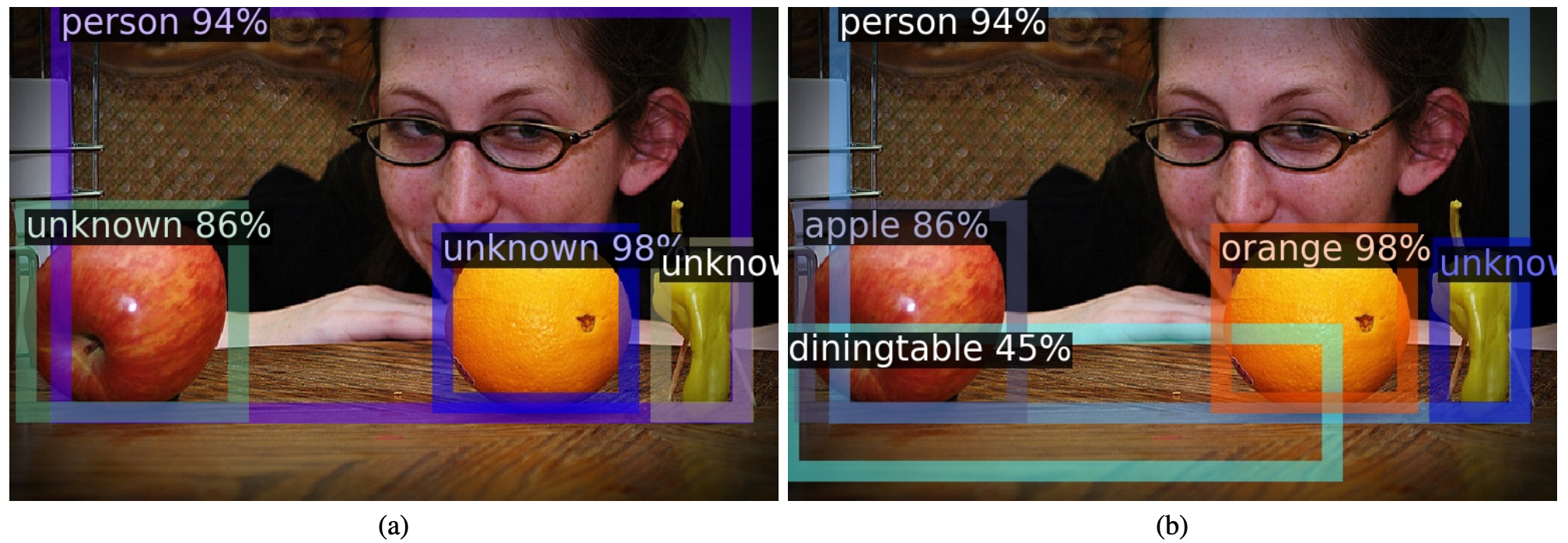

A sample qualitative result

Sub-figure (a) shows the result produced by our method after learning a few classes, which do not include apple and orange. The model identifies these instances and correctly labels them as unknown. Later, when the model is taught to detect apple and orange, these instances are labelled correctly, as seen in sub-figure (b), without forgetting how to detect person. An instance of a class that has still not been introduced remains, and the model successfully detects it as unknown.

Installation

See INSTALL.md.

Quick Start

Some cleanup of the code is still pending, such as removing local paths. We will update these shortly.

Data split and trained models: Google Drive

All config files can be found in: configs/OWOD

Sample command on a 4 GPU machine:

python tools/train_net.py --num-gpus 4 --config-file <Change to the appropriate config file> SOLVER.IMS_PER_BATCH 4 SOLVER.BASE_LR 0.005
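If you train with a different batch size, the command needs matching solver overrides. The helper below is a small sketch that assembles the same command and scales the learning rate linearly with the batch size, using the sample values above (batch size 4, learning rate 0.005) as the reference point. The linear scaling rule, the function name `build_train_command`, and the config path in the usage example are assumptions made for illustration, not something this repository prescribes.

```python
import shlex


def build_train_command(config_file, num_gpus, ims_per_batch,
                        ref_batch=4, ref_lr=0.005):
    """Build a tools/train_net.py command line as a single string.

    Assumption: the learning rate is scaled linearly with the batch size,
    relative to the reference values taken from the sample command.
    """
    base_lr = ref_lr * ims_per_batch / ref_batch
    args = [
        "python", "tools/train_net.py",
        "--num-gpus", str(num_gpus),
        "--config-file", config_file,
        "SOLVER.IMS_PER_BATCH", str(ims_per_batch),
        "SOLVER.BASE_LR", f"{base_lr:g}",
    ]
    # Quote each argument so paths with spaces survive a shell.
    return " ".join(shlex.quote(arg) for arg in args)


if __name__ == "__main__":
    # "path/to/config.yaml" is a placeholder; pick a file from configs/OWOD.
    print(build_train_command("path/to/config.yaml", num_gpus=8, ims_per_batch=8))
```

Whether linear scaling is appropriate for a given config is something to verify empirically; the sample command above remains the reference setting.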

Acknowledgement

Our code base is built on top of the Detectron2 library.

Citation

If you use our work in your research, please cite us:

@inproceedings{joseph2021open,
  title={Towards Open World Object Detection},
  author={K J Joseph and Salman Khan and Fahad Shahbaz Khan and Vineeth N Balasubramanian},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2021)},
  eprint={2103.02603},
  archivePrefix={arXiv},
  year={2021}
}