fwilliams / Point Cloud Utils

Licence: gpl-2.0

A Python library for common tasks on 3D point clouds

Stars: ✭ 281

Programming Languages

python


Projects that are alternatives of or similar to Point Cloud Utils

Polylidar

Polylidar3D - Fast polygon extraction from 3D Data

Stars: ✭ 106 (-62.28%)

Mutual labels: point-cloud, mesh, geometry

Meshlab

The open source mesh processing system

Stars: ✭ 2,619 (+832.03%)

Mutual labels: point-cloud, mesh

Openmvs

open Multi-View Stereo reconstruction library

Stars: ✭ 1,842 (+555.52%)

Mutual labels: point-cloud, mesh

classy blocks

Python classes for easier creation of OpenFOAM's blockMesh dictionaries.

Stars: ✭ 53 (-81.14%)

Mutual labels: geometry, mesh

Kinectfusionapp

Sample implementation of an application using KinectFusionLib

Stars: ✭ 69 (-75.44%)

Mutual labels: point-cloud, mesh

Point2Mesh

Meshing Point Clouds with Predicted Intrinsic-Extrinsic Ratio Guidance (ECCV2020)

Stars: ✭ 61 (-78.29%)

Mutual labels: point-cloud, mesh

Easy3d

A lightweight, easy-to-use, and efficient C++ library for processing and rendering 3D data

Stars: ✭ 383 (+36.3%)

Mutual labels: point-cloud, mesh

pcc geo cnn

Learning Convolutional Transforms for Point Cloud Geometry Compression

Stars: ✭ 44 (-84.34%)

Mutual labels: geometry, point-cloud

Kinectfusionlib

Implementation of the KinectFusion approach in modern C++14 and CUDA

Stars: ✭ 261 (-7.12%)

Mutual labels: point-cloud, mesh

volumentations

Augmentation package for 3d data based on albumentations

Stars: ✭ 26 (-90.75%)

Mutual labels: point-cloud, mesh

Sopgi

A small VEX raytracer for SideFX Houdini with photon mapping global illumination and full recursive reflections and refractions

Stars: ✭ 55 (-80.43%)

Mutual labels: point-cloud, geometry

3d Machine Learning

A resource repository for 3D machine learning

Stars: ✭ 7,405 (+2535.23%)

Mutual labels: point-cloud, mesh

pymadcad

Simple yet powerful CAD (Computer Aided Design) library, written with Python.

Stars: ✭ 63 (-77.58%)

Mutual labels: geometry, mesh

Draco

Draco is a library for compressing and decompressing 3D geometric meshes and point clouds. It is intended to improve the storage and transmission of 3D graphics.

Stars: ✭ 4,611 (+1540.93%)

Mutual labels: point-cloud, mesh

Cgal

The public CGAL repository, see the README below

Stars: ✭ 2,825 (+905.34%)

Mutual labels: point-cloud, geometry

Hole fixer

Demo implementation of smoothly filling holes in 3D meshes using surface fairing

Stars: ✭ 165 (-41.28%)

Mutual labels: mesh, geometry

Matgeom

Matlab geometry toolbox for 2D/3D geometric computing

Stars: ✭ 168 (-40.21%)

Mutual labels: mesh, geometry

pcc geo cnn v2

Improved Deep Point Cloud Geometry Compression

Stars: ✭ 55 (-80.43%)

Mutual labels: geometry, point-cloud

TriangleMeshDistance

Header only, single file, simple and efficient C++11 library to compute the signed distance function (SDF) to a triangle mesh

Stars: ✭ 55 (-80.43%)

Mutual labels: geometry, mesh

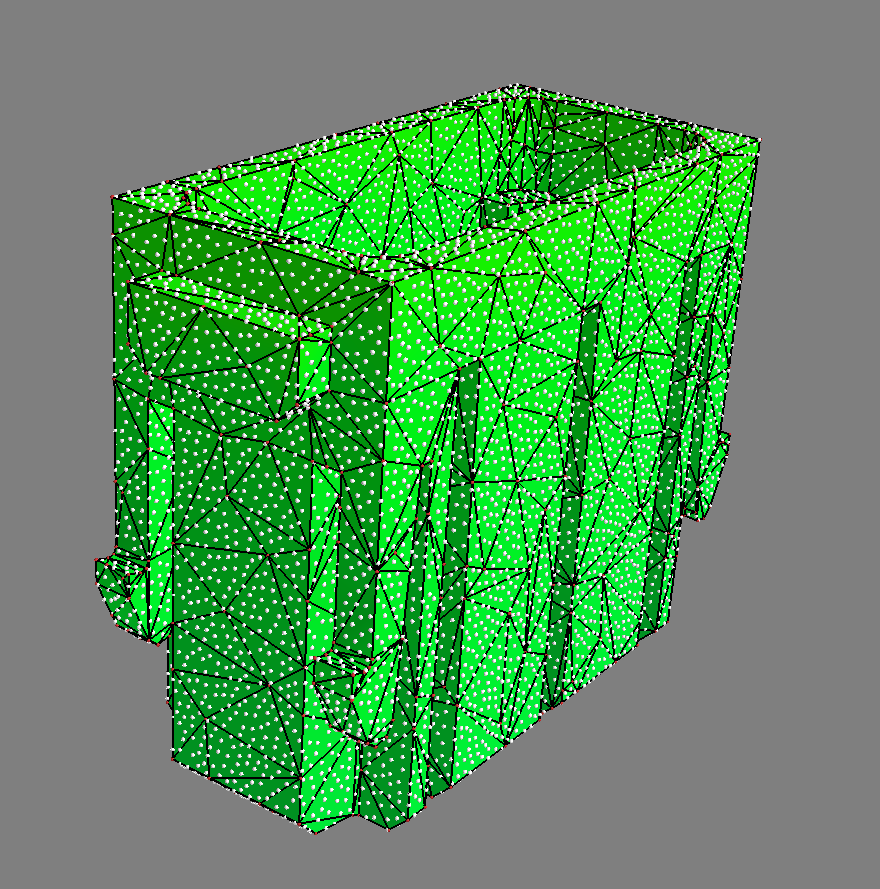

Point Cloud Utils (pcu) - A Python library for common tasks on 3D point clouds

Point Cloud Utils (pcu) is a utility library providing the following functionality:

- A series of algorithms for generating point samples on meshes:

  - Poisson-disk sampling of a mesh based on "Parallel Poisson Disk Sampling with Spectrum Analysis on Surface"

  - Sampling a mesh with Lloyd's algorithm

  - Monte Carlo sampling on a mesh

- Normal estimation from point clouds

- Very fast nearest-neighbor queries between pairs of point clouds (based on nanoflann)

- Hausdorff distances between point clouds

- Chamfer distances between point clouds

- Approximate Wasserstein distances between point clouds using the Sinkhorn method

- Pairwise distances between point clouds

- Utility functions for reading and writing common mesh formats (OBJ, OFF, PLY)

Installation Instructions

With conda (recommended)

Simply run:

conda install -c conda-forge point_cloud_utils

With pip (not recommended)

pip install git+https://github.com/fwilliams/point-cloud-utils

The following dependencies are required to install with pip:

- A C++ compiler supporting C++14 or later

- CMake 3.2 or later.

- git

Examples

Poisson-Disk-Sampling

Generate 10,000 Poisson-disk samples on a mesh:

import point_cloud_utils as pcu
import numpy as np
# v is an nv by 3 NumPy array of vertices
# f is an nf by 3 NumPy array of face indices into v
# n is an nv by 3 NumPy array of vertex normals
v, f, n, _ = pcu.read_ply("my_model.ply")
# Generate 10000 samples on the mesh with Poisson-disk sampling
# f_i are the face indices of each sample and bc are the barycentric coordinates of each sample within its face
f_i, bc = pcu.sample_mesh_poisson_disk(v, f, n, 10000)
# Use the face indices and barycentric coordinates to compute sample positions and normals.
# v[f[f_i]] has shape (num_samples, 3, 3), so bc needs a trailing axis to weight each of the 3 corners
v_poisson = (v[f[f_i]] * bc[:, :, np.newaxis]).sum(1)
n_poisson = (n[f[f_i]] * bc[:, :, np.newaxis]).sum(1)
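To see why the barycentric weights need a trailing axis, here is a minimal NumPy-only sketch (pcu is not required) on a hypothetical one-triangle mesh. The face indices `f_i` and barycentric coordinates `bc` are made up for illustration rather than produced by `sample_mesh_poisson_disk`.

```python
import numpy as np

# A hypothetical mesh: one right triangle in the z = 0 plane
v = np.array([[0.0, 0.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])
f = np.array([[0, 1, 2]])

# Hypothetical samples, all on face 0: a corner, an edge midpoint, and the centroid
f_i = np.array([0, 0, 0])
bc = np.array([[1.0, 0.0, 0.0],
               [0.0, 0.5, 0.5],
               [1 / 3, 1 / 3, 1 / 3]])

corners = v[f[f_i]]                          # shape (num_samples, 3 corners, 3 coords)
weights = bc[:, :, np.newaxis]               # shape (num_samples, 3 corners, 1)
positions = (corners * weights).sum(axis=1)  # weighted sum over the 3 corners

# bc[:, np.newaxis] has shape (num_samples, 1, 3), so it scales the x/y/z
# coordinates instead of the corners and yields different points
wrong = (corners * bc[:, np.newaxis]).sum(axis=1)
```

The rows of `positions` come out as the first corner, the midpoint of the edge between the other two corners, and the centroid, which is what the barycentric coordinates describe.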

Generate Poisson-disk samples on a mesh separated by approximately 0.01 times the length of the bounding box diagonal:

import point_cloud_utils as pcu
import numpy as np
# v is an nv by 3 NumPy array of vertices
# f is an nf by 3 NumPy array of face indices into v
# n is an nv by 3 NumPy array of vertex normals
v, f, n, _ = pcu.read_ply("my_model.ply")
# Compute the length of the bounding box diagonal
bbox = np.max(v, axis=0) - np.min(v, axis=0)
bbox_diag = np.linalg.norm(bbox)
# Generate samples separated by approximately 0.01 times the bounding box diagonal.
# Passing -1 as the sample count lets the radius determine how many samples are generated.
# f_i are the face indices of each sample and bc are the barycentric coordinates of each sample within its face
f_i, bc = pcu.sample_mesh_poisson_disk(v, f, n, -1, radius=0.01 * bbox_diag)
# Use the face indices and barycentric coordinates to compute sample positions and normals
v_poisson = (v[f[f_i]] * bc[:, :, np.newaxis]).sum(1)
n_poisson = (n[f[f_i]] * bc[:, :, np.newaxis]).sum(1)
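pcu implements the parallel algorithm cited in the feature list; the minimum-separation property it targets is easier to see in naive "dart throwing". The sketch below is a different and much slower technique (rejection sampling in the unit square, not on a mesh), and `dart_throw_poisson_disk` is a hypothetical helper, not part of pcu. It reuses the README's convention of expressing the radius as a fraction of the bounding box diagonal.

```python
import numpy as np

def dart_throw_poisson_disk(radius, num_attempts=2000, seed=0):
    """Naive Poisson-disk sampling in the unit square by rejection.

    A random candidate is kept only if it lies at least `radius` away from
    every previously accepted point.
    """
    rng = np.random.default_rng(seed)
    points = np.empty((0, 2))
    for _ in range(num_attempts):
        candidate = rng.random(2)
        if points.shape[0] == 0 or np.min(np.linalg.norm(points - candidate, axis=1)) >= radius:
            points = np.vstack([points, candidate])
    return points

# Radius as a fraction of the bounding box diagonal (here, of the unit square)
bbox_diag = np.linalg.norm(np.array([1.0, 1.0]))
radius = 0.05 * bbox_diag
samples = dart_throw_poisson_disk(radius)

# Check the Poisson-disk property: every pair of samples is at least `radius` apart
diffs = samples[:, np.newaxis, :] - samples[np.newaxis, :, :]
dists = np.linalg.norm(diffs, axis=-1)
np.fill_diagonal(dists, np.inf)  # ignore each point's zero distance to itself
```

Dart throwing slows down sharply as the domain fills up, which is one reason practical libraries use smarter schemes.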

Monte-Carlo Sampling on a mesh

import point_cloud_utils as pcu
import numpy as np
# v is an nv by 3 NumPy array of vertices
# f is an nf by 3 NumPy array of face indices into v
# n is an nv by 3 NumPy array of vertex normals
v, f, n, _ = pcu.read_ply("my_model.ply")
# Generate very dense random samples on the mesh (v, f, n): 40 times as many samples as vertices
# f_idx are the face indices of each sample and bc are the barycentric coordinates of each sample within its face
f_idx, bc = pcu.sample_mesh_random(v, f, num_samples=v.shape[0] * 40)
# Use the face indices and barycentric coordinates to compute sample positions and normals
v_dense = (v[f[f_idx]] * bc[:, :, np.newaxis]).sum(1)
n_dense = (n[f[f_idx]] * bc[:, :, np.newaxis]).sum(1)
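Uniform Monte Carlo sampling of a mesh is commonly done in two steps: pick a face with probability proportional to its area, then pick a uniformly distributed point inside that triangle. The sketch below shows that standard technique in plain NumPy; it returns the same kind of `(f_idx, bc)` pair as the example above, but `sample_mesh_uniform` is a hypothetical helper and is not claimed to be how `pcu.sample_mesh_random` is implemented.

```python
import numpy as np

def sample_mesh_uniform(v, f, num_samples, seed=0):
    """Area-weighted random sampling of a triangle mesh.

    Returns face indices and barycentric coordinates for each sample.
    """
    rng = np.random.default_rng(seed)
    # Triangle areas from the cross product of two edge vectors
    e1 = v[f[:, 1]] - v[f[:, 0]]
    e2 = v[f[:, 2]] - v[f[:, 0]]
    areas = 0.5 * np.linalg.norm(np.cross(e1, e2), axis=1)
    # Choose faces with probability proportional to their area
    f_idx = rng.choice(len(f), size=num_samples, p=areas / areas.sum())
    # Uniform barycentric coordinates via the square-root trick
    r1 = np.sqrt(rng.random(num_samples))
    r2 = rng.random(num_samples)
    bc = np.stack([1.0 - r1, r1 * (1.0 - r2), r1 * r2], axis=1)
    return f_idx, bc

# Hypothetical two-triangle mesh with face areas 0.5 and 1.5
v = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0],
              [4.0, 0.0, 0.0], [1.0, 1.0, 0.0]])
f = np.array([[0, 1, 2], [1, 3, 4]])
f_idx, bc = sample_mesh_uniform(v, f, num_samples=20000)
points = (v[f[f_idx]] * bc[:, :, np.newaxis]).sum(1)
frac_on_large_face = np.mean(f_idx == 1)  # expected to be close to 1.5 / 2.0 = 0.75
```

Without the square root on `r1`, samples would cluster near the first corner of each triangle instead of being spread uniformly.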

Lloyd Relaxation

import point_cloud_utils as pcu
# v is an nv by 3 NumPy array of vertices
# f is an nf by 3 NumPy array of face indices into v
v, f, _, _ = pcu.read_ply("my_model.ply")
# Generate 1000 points on the mesh with Lloyd's algorithm
samples = pcu.sample_mesh_lloyd(v, f, 1000)
# Generate 100 points on the unit square with Lloyd's algorithm
samples_2d = pcu.lloyd_2d(100)
# Generate 100 points on the unit cube with Lloyd's algorithm
samples_3d = pcu.lloyd_3d(100)
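Lloyd's algorithm alternates two steps: assign the domain to the nearest site (Voronoi cells), then move each site to the centroid of its cell. The sketch below approximates the unit square with many random "density" points, which turns each iteration into a k-means step; exact Voronoi cells are what a real implementation would more likely use. `approx_lloyd_2d` is a hypothetical helper, not pcu's `lloyd_2d`.

```python
import numpy as np

def approx_lloyd_2d(num_sites, num_iters=30, num_density=10000, seed=0):
    """Approximate Lloyd relaxation of points in the unit square."""
    rng = np.random.default_rng(seed)
    sites = rng.random((num_sites, 2))
    density = rng.random((num_density, 2))  # dense samples standing in for the unit square
    for _ in range(num_iters):
        # Assign each density sample to its nearest site (a discrete Voronoi cell)
        d2 = ((density[:, np.newaxis, :] - sites[np.newaxis, :, :]) ** 2).sum(-1)
        owner = d2.argmin(axis=1)
        # Move each site to the centroid of its cell; leave it in place if the cell is empty
        for k in range(num_sites):
            members = density[owner == k]
            if len(members) > 0:
                sites[k] = members.mean(axis=0)
    return sites

samples_2d = approx_lloyd_2d(100)
```

Because each centroid is an average of points inside the square, the relaxed sites never leave the unit square.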

Estimating Normals From a Point Cloud

import point_cloud_utils as pcu
# v is an nv by 3 NumPy array of vertices
v, _, _, _ = pcu.read_ply("my_model.ply")
# Estimate a normal at each point (row of v) using its 16 nearest neighbors
n = pcu.estimate_point_cloud_normals(v, k=16)
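A common way to estimate a normal from k nearest neighbors is principal component analysis: fit a plane to the neighborhood and take the direction of least variance. The following is a brute-force NumPy sketch of that technique, assuming a small point cloud; `estimate_normals_pca` is a hypothetical helper, and pcu may use a different estimator or orientation strategy.

```python
import numpy as np

def estimate_normals_pca(points, k=16):
    """Estimate a unit normal per point from the covariance of its k nearest neighbors."""
    # Brute-force squared distances: O(n^2) memory, fine only for small clouds
    d2 = ((points[:, np.newaxis, :] - points[np.newaxis, :, :]) ** 2).sum(-1)
    knn = np.argsort(d2, axis=1)[:, :k]           # neighbor indices (includes the point itself)
    neighbors = points[knn]                       # shape (n, k, 3)
    centered = neighbors - neighbors.mean(axis=1, keepdims=True)
    cov = np.einsum("nki,nkj->nij", centered, centered) / k
    # eigh sorts eigenvalues in ascending order, so eigenvector 0 is the
    # direction of least variance, i.e. the normal of the best-fit plane
    _, eigvecs = np.linalg.eigh(cov)
    return eigvecs[:, :, 0]

# Points on the plane z = 0 should get normals of (0, 0, +1) or (0, 0, -1)
rng = np.random.default_rng(0)
pts = np.column_stack([rng.random(200), rng.random(200), np.zeros(200)])
n = estimate_normals_pca(pts, k=16)
```

PCA only determines a normal up to sign; consistently orienting normals needs an extra step.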

Approximate Wasserstein (Sinkhorn) Distance Between Point-Clouds

import point_cloud_utils as pcu
import numpy as np
# a and b are arrays where each row contains a point
# Note that the point sets can have different sizes (e.g. [100, 3], [111, 3])
a = np.random.rand(100, 3)
b = np.random.rand(100, 3)
# M is a 100x100 array where each entry (i, j) is the squared distance between points a[i, :] and b[j, :]
M = pcu.pairwise_distances(a, b)
# w_a and w_b are the masses assigned to each point. In this case each point is weighted equally.
w_a = np.ones(a.shape[0])
w_b = np.ones(b.shape[0])
# P is the transport matrix between a and b. eps is a regularization parameter: smaller values give a
# better approximation of the true Wasserstein distance at the expense of slower convergence
P = pcu.sinkhorn(w_a, w_b, M, eps=1e-3)
# To get the distance as a single number, compute the Frobenius inner product <M, P>
sinkhorn_dist = (M*P).sum()
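The Sinkhorn method solves entropy-regularized optimal transport by alternately rescaling the rows and columns of the kernel exp(-M / eps) until the transport matrix matches the two sets of masses. Below is a minimal NumPy sketch of the classic Sinkhorn-Knopp iteration; `sinkhorn_sketch` is a hypothetical helper, not pcu's implementation. It assumes the masses are normalized to sum to 1 and uses a fairly large `eps`, because this plain version underflows for tiny values such as the 1e-3 above (stabilized log-domain variants exist for that case).

```python
import numpy as np

def sinkhorn_sketch(w_a, w_b, M, eps, num_iters=2000):
    """Entropy-regularized optimal transport via Sinkhorn-Knopp scaling.

    Returns a transport matrix P whose row sums equal w_a and whose
    column sums approximately equal w_b.
    """
    K = np.exp(-M / eps)       # Gibbs kernel; underflows when eps is much smaller than M
    u = np.ones_like(w_a)
    for _ in range(num_iters):
        v = w_b / (K.T @ u)    # rescale to match the column marginals
        u = w_a / (K @ v)      # rescale to match the row marginals
    return u[:, np.newaxis] * K * v[np.newaxis, :]

rng = np.random.default_rng(0)
a = rng.random((100, 3))
b = rng.random((111, 3))
M = ((a[:, np.newaxis, :] - b[np.newaxis, :, :]) ** 2).sum(-1)  # squared distances
w_a = np.full(len(a), 1.0 / len(a))  # uniform probability masses
w_b = np.full(len(b), 1.0 / len(b))
P = sinkhorn_sketch(w_a, w_b, M, eps=0.1)
sinkhorn_dist = (M * P).sum()        # Frobenius inner product <M, P>
```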

Batched Version:

import point_cloud_utils as pcu
import numpy as np
# a and b each contain 10 batches, each of which contains 100 points of dimension 3,
# i.e. a[i, :, :] is the i^th point set, which contains 100 points
# Note that the point sets can have different sizes (e.g. [10, 100, 3], [10, 111, 3])
a = np.random.rand(10, 100, 3)
b = np.random.rand(10, 100, 3)
# M is a 10x100x100 array where each entry (k, i, j) is the squared distance between points a[k, i, :] and b[k, j, :]
M = pcu.pairwise_distances(a, b)
# w_a and w_b are the masses assigned to each point. In this case each point is weighted equally.
w_a = np.ones(a.shape[:2])
w_b = np.ones(b.shape[:2])
# P is the transport matrix between a and b. eps is a regularization parameter: smaller values give a
# better approximation of the true Wasserstein distance at the expense of slower convergence
P = pcu.sinkhorn(w_a, w_b, M, eps=1e-3)
# To get the distance as a single number, compute the Frobenius inner product <M, P>
sinkhorn_dist = (M*P).sum()
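The batched distance matrix described in the comments above can be reproduced with NumPy broadcasting, which is a handy way to sanity-check shapes. This sketch assumes `pcu.pairwise_distances` follows the squared-distance definition stated in those comments; `batched_pairwise_sq_dists` is a hypothetical helper. It also computes the same matrix with the expansion ||x||² + ||y||² - 2x·y, which avoids materializing the 4-D difference array.

```python
import numpy as np

def batched_pairwise_sq_dists(a, b):
    """Squared Euclidean distances between every pair of points in each batch.

    a has shape (B, n, d) and b has shape (B, m, d); the result has shape
    (B, n, m) with entry [k, i, j] = ||a[k, i] - b[k, j]||^2.
    """
    # (B, n, 1, d) - (B, 1, m, d) broadcasts to (B, n, m, d)
    diff = a[:, :, np.newaxis, :] - b[:, np.newaxis, :, :]
    return (diff ** 2).sum(axis=-1)

rng = np.random.default_rng(0)
a = rng.random((10, 100, 3))
b = rng.random((10, 111, 3))
M = batched_pairwise_sq_dists(a, b)

# Memory-friendlier equivalent: ||x||^2 + ||y||^2 - 2 x.y
M_alt = ((a ** 2).sum(-1)[:, :, np.newaxis]
         + (b ** 2).sum(-1)[:, np.newaxis, :]
         - 2.0 * np.einsum("bnd,bmd->bnm", a, b))
```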

Chamfer Distance Between Point-Clouds

import point_cloud_utils as pcu
import numpy as np
# a and b are arrays where each row contains a point
# Note that the point sets can have different sizes (e.g. [100, 3], [111, 3])
a = np.random.rand(100, 3)
b = np.random.rand(100, 3)
chamfer_dist = pcu.chamfer(a, b)
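The Chamfer distance compares two point sets through nearest neighbors in both directions. Definitions vary (sum versus mean, squared versus unsquared distances), so the brute-force sketch below uses one common convention, the mean squared nearest-neighbor distance from a to b plus that from b to a, and is not guaranteed to match pcu's normalization. `chamfer_sketch` is a hypothetical helper.

```python
import numpy as np

def chamfer_sketch(a, b):
    """Symmetric Chamfer distance using mean squared nearest-neighbor distances."""
    d2 = ((a[:, np.newaxis, :] - b[np.newaxis, :, :]) ** 2).sum(-1)  # shape (len(a), len(b))
    # Row minima: each point of a to its closest point in b; column minima: the reverse
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()

rng = np.random.default_rng(0)
a = rng.random((100, 3))
b = rng.random((111, 3))
chamfer_dist = chamfer_sketch(a, b)
```

Taking both directions makes the measure symmetric; a one-directional version would ignore points of b that are far from everything in a.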

Batched Version:

import point_cloud_utils as pcu
import numpy as np
# a and b each contain 10 batches, each of which contains 100 points of dimension 3,
# i.e. a[i, :, :] is the i^th point set, which contains 100 points
# Note that the point sets can have different sizes (e.g. [10, 100, 3], [10, 111, 3])
a = np.random.rand(10, 100, 3)
b = np.random.rand(10, 100, 3)
chamfer_dist = pcu.chamfer(a, b)

Nearest-Neighbors and Hausdorff Distances Between Point-Clouds

import point_cloud_utils as pcu
import numpy as np
# Generate two random point sets
a = np.random.rand(1000, 3)
b = np.random.rand(500, 3)
# dists_a_to_b has shape (a.shape[0],) and contains the shortest squared distance
# between each point in a and the points in b
# corrs_a_to_b has shape (a.shape[0],) and contains the index into b of the
# closest point to each point in a
dists_a_to_b, corrs_a_to_b = pcu.point_cloud_distance(a, b)
# Compute each one-sided squared Hausdorff distance
hausdorff_a_to_b = pcu.hausdorff(a, b)
hausdorff_b_to_a = pcu.hausdorff(b, a)
# Take the max of the one-sided squared distances to get the two-sided (squared) Hausdorff distance
hausdorff_dist = max(hausdorff_a_to_b, hausdorff_b_to_a)
# Find the indices of the pair of points with the largest shortest distance from b to a
hausdorff_b_to_a, idx_b, idx_a = pcu.hausdorff(b, a, return_index=True)
assert np.abs(np.sum((a[idx_a] - b[idx_b])**2) - hausdorff_b_to_a) < 1e-5, "These values should be almost equal"
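The one-sided Hausdorff distance is simply the largest of the nearest-neighbor distances, so it can be sketched on top of a brute-force nearest-neighbor query. The NumPy version below follows the conventions in the comments above (squared distances; indices into the first argument, then the second) and is only practical for small clouds, unlike the nanoflann-backed queries in pcu. `nearest_neighbors` and `one_sided_hausdorff_sq` are hypothetical helpers.

```python
import numpy as np

def nearest_neighbors(a, b):
    """For each point in a: squared distance to, and index of, its closest point in b."""
    d2 = ((a[:, np.newaxis, :] - b[np.newaxis, :, :]) ** 2).sum(-1)
    corrs = d2.argmin(axis=1)
    return d2[np.arange(len(a)), corrs], corrs

def one_sided_hausdorff_sq(a, b):
    """Largest squared nearest-neighbor distance from a to b, with the indices realizing it."""
    dists, corrs = nearest_neighbors(a, b)
    idx_a = int(dists.argmax())
    return dists[idx_a], idx_a, int(corrs[idx_a])

rng = np.random.default_rng(0)
a = rng.random((1000, 3))
b = rng.random((500, 3))
h_ab, _, _ = one_sided_hausdorff_sq(a, b)
h_ba, idx_b, idx_a = one_sided_hausdorff_sq(b, a)
hausdorff_dist = max(h_ab, h_ba)  # two-sided (squared) Hausdorff distance
```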
