denghuichao / Proxy Pool

A proxy IP pool service for web crawlers; other crawler programs can obtain proxies from it through a REST API.

Stars: ✭ 91

Programming Languages

java

68154 projects - #9 most used programming language

Projects that are alternatives of or similar to Proxy Pool

Ok ip proxy pool

🍿 Crawler proxy IP pool (proxy pool), Python 🍟 A reasonably OK IP proxy pool

Stars: ✭ 196 (+115.38%)

Mutual labels: crawler, proxypool

Spoon

🥄 A package for building specific Proxy Pool for different Sites.

Stars: ✭ 173 (+90.11%)

Mutual labels: crawler, proxypool

Proxybroker

Proxy [Finder | Checker | Server]. HTTP(S) & SOCKS 🎭

Stars: ✭ 2,767 (+2940.66%)

Mutual labels: crawler, proxypool

Fooproxy

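A robust, efficient, score-based, target-specific IP proxy pool plus API service. You can plug in your own collectors to crawl proxy IPs, and it builds a separate database of working proxies for each of your crawler's target websites. Supports MongoDB 4.0 and uses Python 3.7 (Scored IP proxy pool; customised proxy data crawlers can be added at any time)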

Stars: ✭ 195 (+114.29%)

Mutual labels: crawler, proxypool

Python3Webcrawler

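🌈 Python 3 web crawling in practice: QQ Music songs, JD.com (京东) product information, Fang.com (房天下), cracking Youdao Translate, building a proxy pool, Douban Books, Baidu Images, cracking NetEase login, simulated QR-code login for Bilibili, 小鹅通, 荔枝微课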

Stars: ✭ 208 (+128.57%)

Mutual labels: crawler, proxypool

Swiftlinkpreview

It makes a preview from a URL, grabbing all the information such as title, relevant texts and images.

Stars: ✭ 1,216 (+1236.26%)

Mutual labels: crawler

Python Testing Crawler

A crawler for automated functional testing of a web application

Stars: ✭ 68 (-25.27%)

Mutual labels: crawler

Geziyor

Geziyor, a fast web crawling & scraping framework for Go. Supports JS rendering.

Stars: ✭ 1,246 (+1269.23%)

Mutual labels: crawler

Acm Statistics

An online tool (crawler) to analyze users' performance in online judges (coding competition websites). Supported OJ: POJ, HDU, ZOJ, HYSBZ, CodeForces, UVA, ICPC Live Archive, FZU, SPOJ, Timus (URAL), LeetCode_CN, CSU, LibreOJ, 洛谷, 牛客OJ, Lutece (UESTC), AtCoder, AIZU, CodeChef, El Judge, BNUOJ, Codewars, UOJ, NBUT, 51Nod, DMOJ, VJudge

Stars: ✭ 83 (-8.79%)

Mutual labels: crawler

Goscraper

Golang pkg to quickly return a preview of a webpage (title/description/images)

Stars: ✭ 72 (-20.88%)

Mutual labels: crawler

Wombat

Lightweight Ruby web crawler/scraper with an elegant DSL which extracts structured data from pages.

Stars: ✭ 1,220 (+1240.66%)

Mutual labels: crawler

Taiwan News Crawlers

Scrapy-based Crawlers for news of Taiwan

Stars: ✭ 83 (-8.79%)

Mutual labels: crawler

Webb

Python: An all-in-one Web Crawler, Web Parser and Web Scraping library!

Stars: ✭ 77 (-15.38%)

Mutual labels: crawler

Is Google

Verify that a request is from Google crawlers using Google's DNS verification steps

Stars: ✭ 82 (-9.89%)

Mutual labels: crawler

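proxy-pool: a proxy IP pool

Introduction

I have been experimenting with web crawlers recently. Many websites have anti-crawler mechanisms that ban IPs whose request rate is too high, so I went looking for free proxies online. In practice these proxies turned out to be of poor quality, and many of them did not work at all, which led to the idea of building a proxy pool. The program actively collects proxy IPs, periodically verifies that they are still usable, and exposes an interface through which crawlers can obtain working proxies.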

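Proxy IP sources

Many websites offer proxy IPs. Some are free, while the better-quality ones are paid. This project collects free proxy IPs only. It currently crawls proxy IPs from goubanjia, kuaidaili, 66IP, and xicidaili. Only the first 10 pages of each site are crawled, because the proxies listed on later pages are almost never usable.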

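Availability guarantee

Most of the crawled IPs do not work. To make sure crawlers receive reasonably high-quality IPs, each proxy has to be verified for availability and deleted once it is found to be unusable.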
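The validate-then-delete step could be sketched as follows. This is a minimal illustration, not the project's actual code: the class `ProxyValidatorSketch`, its method names, the `host:port` string format, and the choice of an HTTP 200 response as the success criterion are all assumptions.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.InetSocketAddress;
import java.net.Proxy;
import java.net.URI;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical sketch of "verify, then delete"; names and criteria are assumptions. */
class ProxyValidatorSketch {

    /** Returns true if a GET to testUrl through host:port answers 200 within the timeout. */
    static boolean isUsable(String host, int port, String testUrl, int timeoutMillis) {
        Proxy proxy = new Proxy(Proxy.Type.HTTP, new InetSocketAddress(host, port));
        HttpURLConnection conn = null;
        try {
            conn = (HttpURLConnection) URI.create(testUrl).toURL().openConnection(proxy);
            conn.setConnectTimeout(timeoutMillis);
            conn.setReadTimeout(timeoutMillis);
            conn.setRequestMethod("GET");
            return conn.getResponseCode() == 200;
        } catch (IOException e) {
            return false; // any I/O failure (timeout, refused connection) counts as unusable
        } finally {
            if (conn != null) {
                conn.disconnect();
            }
        }
    }

    /** Removes every entry that fails the check and returns how many were removed. */
    static int removeUnusable(Set<String> pool, Predicate<String> check) {
        int before = pool.size();
        pool.removeIf(check.negate());
        return before - pool.size();
    }
}
```

Passing the check as a `Predicate` keeps the pruning logic independent of the network, so it can be exercised with a stub; in real use the predicate would split each `host:port` entry and call `isUsable`.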

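Interface

To make it easy for clients (crawlers) to obtain proxies, the interface is exposed as a service. Clients fetch proxies through a REST API and, when they find that a proxy no longer works, call the API themselves to delete it.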

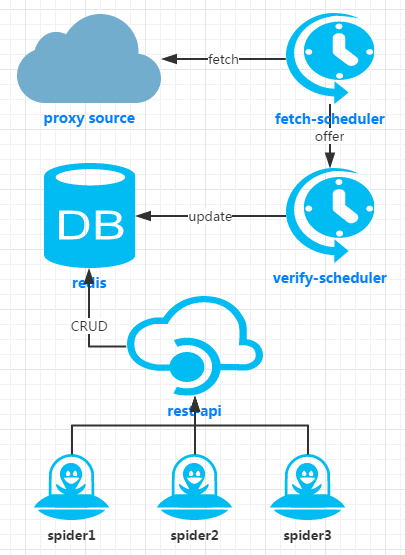

proxy-pool design

The project is divided into the following modules:

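- fetcher: crawlers that collect proxy IPs from the web. There are currently four of them, GoubanjiaFetcher, KuaiDailiFetcher, Www66IPFetcher, and XichiDailiFetcher, one for each of the four source sites. To collect IPs from other sites, add a corresponding implementation under this module.

- schedule: scheduled tasks that periodically crawl the latest IPs from the web and periodically verify the availability of the proxies already stored.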

- db: storage; usable proxies are currently kept in Redis

- boot: the project's startup class

- api: the REST API implementation

- utils: assorted utility classes
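The relationship between the fetcher and schedule modules might look roughly like the sketch below. The interface `FetcherSketch`, the class `FetchSchedulerSketch`, and the `host:port` string representation are hypothetical and are not taken from the project's source; the real fetchers may expose a different contract.

```java
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

/** Hypothetical contract for one site-specific crawler; the project's real interface may differ. */
interface FetcherSketch {
    List<String> fetch() throws Exception; // each entry is assumed to be "host:port"
}

class FetchSchedulerSketch {

    /** Runs every fetcher, skips the ones that fail, and de-duplicates the results. */
    static Set<String> collect(List<FetcherSketch> fetchers) {
        Set<String> found = new LinkedHashSet<>();
        for (FetcherSketch fetcher : fetchers) {
            try {
                found.addAll(fetcher.fetch());
            } catch (Exception e) {
                // One broken source site should not stop the other fetchers.
            }
        }
        return found;
    }

    /**
     * Re-collects at a fixed interval and merges the results into the pool.
     * The pool must be a thread-safe set, since it is updated from the scheduler thread.
     */
    static ScheduledExecutorService schedule(List<FetcherSketch> fetchers,
                                             Set<String> pool,
                                             long periodMinutes) {
        ScheduledExecutorService executor = Executors.newSingleThreadScheduledExecutor();
        executor.scheduleAtFixedRate(
                () -> pool.addAll(collect(fetchers)), 0, periodMinutes, TimeUnit.MINUTES);
        return executor; // the caller is responsible for calling shutdown()
    }
}
```

Under this design, supporting a new source site only means adding one more `FetcherSketch` implementation to the list; the scheduling code stays unchanged.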

Deployment

The project is built on Spring Boot; package it as a jar and run the jar.

Usage

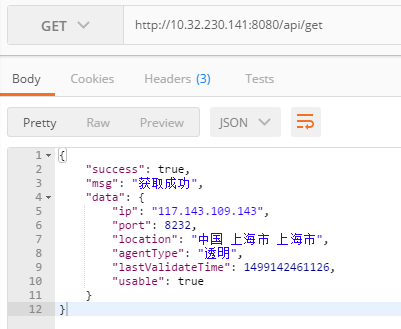

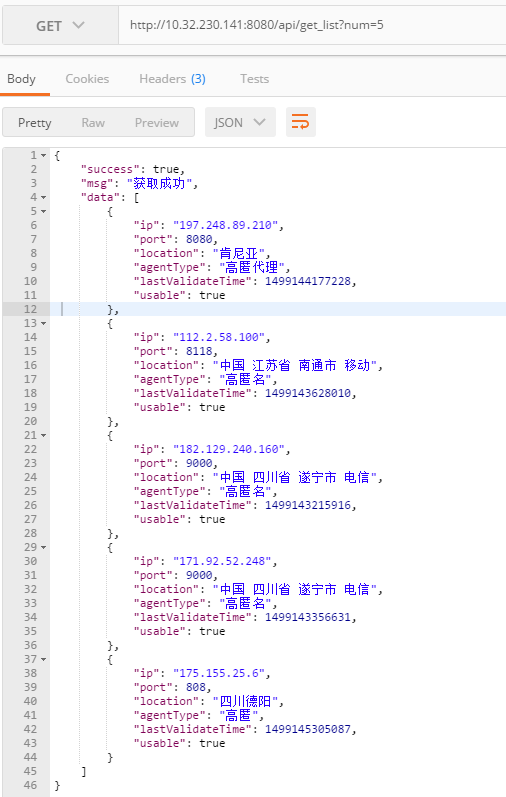

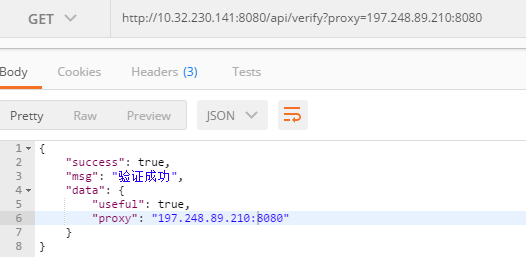

启动程序即可通过浏览器获取代理ip,目前支持以下操作:

- get 获取一个可用代理

- get_list 获取多个可用代理

- verify 验证代理可用性

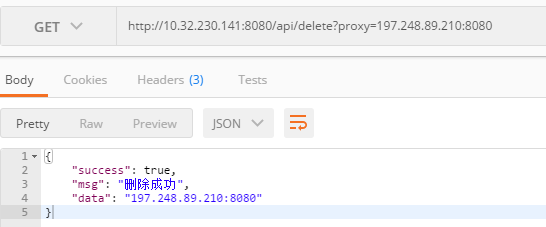

- delete 删除不可用代理
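A crawler could call these operations with the JDK's built-in HTTP client (Java 11+), roughly as sketched here. Only the `/api/get_list?num=...` path appears in this document; the class name `ProxyPoolClientSketch`, the `/api/delete` path, and its `proxy` query parameter are assumptions made for illustration and should be checked against the actual API.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.time.Duration;

/** Hypothetical client sketch; only the get_list path is confirmed by the README. */
class ProxyPoolClientSketch {
    private final String baseUrl;
    private final HttpClient http =
            HttpClient.newBuilder().connectTimeout(Duration.ofSeconds(5)).build();

    ProxyPoolClientSketch(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    /** Builds the get_list URL shown in the README's test address. */
    URI getListUri(int num) {
        return URI.create(baseUrl + "/api/get_list?num=" + num);
    }

    /** ASSUMPTION: the path and the "proxy" parameter name are guesses, not documented. */
    URI deleteUri(String proxy) {
        String encoded = URLEncoder.encode(proxy, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/api/delete?proxy=" + encoded);
    }

    /** Sends a GET request and returns the raw response body. */
    String fetch(URI uri) throws IOException, InterruptedException {
        HttpRequest request =
                HttpRequest.newBuilder(uri).timeout(Duration.ofSeconds(10)).GET().build();
        return http.send(request, HttpResponse.BodyHandlers.ofString()).body();
    }
}
```

For example, `new ProxyPoolClientSketch("http://localhost:8080")` (the host and port are placeholders) followed by `fetch(getListUri(5))` would request five proxies; the response format is not specified here, so the body is returned as a plain string.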

Test URL

http://hcdeng.pp.ngrok.cc/api/get_list?num=5

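Closing notes

My abilities are limited, so the code is fairly rough and proxy-pool's features are incomplete; corrections are welcome. If you find the project helpful, please give it a star~~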
