NyanKiyoshi / Pytest Django Queries

pytest-django-queries

Generate performance reports from your Django database performance tests (inspired by coverage.py).

Usage

Install pytest-django-queries, write your pytest tests, and either mark each test whose queries should be counted with the count_queries marker or request the count_queries fixture.

Note: to use the latest development build, use pip install --pre pytest-django-queries

import pytest

# Model stands for any of your Django models.

@pytest.mark.count_queries
def test_query_performances():
    list(Model.objects.all())  # list() forces the lazy QuerySet to run

# Or...
def test_another_query_performances(count_queries):
    list(Model.objects.all())

Each test file and/or package is treated as a category, and the tests inside a category make up its data. See Visualising Results for more details.
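To make the grouping concrete, here is a minimal sketch of how per-test query counts could be collected into categories keyed by module. The `group_by_category` helper and the `(module, test_name, query_count)` tuples are hypothetical illustrations, not the plugin's actual data model.

```python
from collections import defaultdict

def group_by_category(results):
    """Group (module, test_name, query_count) tuples by module (the "category")."""
    categories = defaultdict(dict)
    for module, test_name, query_count in results:
        categories[module][test_name] = query_count
    return dict(categories)

# Hypothetical data, shaped like the sample table in Visualising Results.
results = [
    ("module1", "test1", 0),
    ("module1", "test2", 1),
    ("module2", "test1", 123),
]
grouped = group_by_category(results)
```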

You will find the full documentation here.

Recommendation when Using Fixtures

Some fixtures generate queries that you don't want counted in the results. The same applies when you use the pytest-django plugin alongside pytest-django-queries, which also adds unwanted queries to your results.

To avoid this, make count_queries the last fixture to execute.

At the same time, you may want to use pytest markers to separate the query-counting tests from other tests. In that case, tell the marker not to inject the count_queries fixture into your test automatically, like this:

import pytest

@pytest.mark.count_queries(autouse=False)
def test_retrieve_main_menu(fixture_making_queries, count_queries):
    pass

Notice the usage of the keyword argument autouse=False and the count_queries fixture

being placed last.
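Why the ordering matters can be illustrated without Django. The sketch below uses the standard library's `sqlite3` module and its `set_trace_callback` hook as a stand-in for query counting. The names `run_test`, `start_counting`, and `fixture_making_queries` are hypothetical, and this is not how pytest-django-queries is implemented; it only shows that statements issued before counting starts are not recorded.

```python
import sqlite3

def run_test(count_last):
    """Return how many SQL statements the counter records for one simulated test."""
    # isolation_level=None keeps sqlite3 from issuing implicit BEGIN statements.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    seen = []

    def start_counting():
        # Stand-in for count_queries: record every statement from now on.
        conn.set_trace_callback(seen.append)

    def fixture_making_queries():
        # Stand-in for a fixture whose setup issues queries of its own.
        conn.execute("CREATE TABLE menu (name TEXT)")
        conn.execute("INSERT INTO menu VALUES ('main')")

    # Fixtures are set up in order; only statements issued after
    # start_counting() has run are recorded.
    setup = [fixture_making_queries, start_counting]
    if not count_last:
        setup.reverse()
    for fixture in setup:
        fixture()

    conn.execute("SELECT name FROM menu").fetchall()  # the test body
    recorded = len(seen)
    conn.close()
    return recorded

only_test_queries = run_test(count_last=True)
with_fixture_queries = run_test(count_last=False)
```

With the counter set up last, only the statement from the test body is recorded. With the counter set up first, the fixture's statements are recorded as well.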

Using pytest-django alongside of pytest-django-queries

We recommend the following when using pytest-django:

import pytest

@pytest.mark.django_db
@pytest.mark.count_queries(autouse=False)
def test_retrieve_main_menu(any_fixture, other_fixture, count_queries):
    pass

Integrating with GitHub

TBA.

Testing Locally

Install pytest-django-queries with pip and run your tests using pytest. A report is then generated in your current working directory, in a file called .pytest-queries.

Note: to override the save path, pass the --django-db-bench PATH option to pytest.

Visualising Results

You can generate a table from the test results by using the show command:

django-queries show

You will get something like this to represent the results:

+---------+--------------------------------------+
| Module  |                Tests                 |
+---------+--------------------------------------+
| module1 | +-----------+---------+------------+ |
|         | | Test Name | Queries | Duplicated | |
|         | +-----------+---------+------------+ |
|         | |   test1   |    0    |     0      | |
|         | +-----------+---------+------------+ |
|         | |   test2   |    1    |     0      | |
|         | +-----------+---------+------------+ |
+---------+--------------------------------------+
| module2 | +-----------+---------+------------+ |
|         | | Test Name | Queries | Duplicated | |
|         | +-----------+---------+------------+ |
|         | |   test1   |   123   |     0      | |
|         | +-----------+---------+------------+ |
+---------+--------------------------------------+
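The table does not spell out how the Duplicated column is computed. One plausible reading, sketched below as an assumption rather than the plugin's actual logic, is that a query counts as duplicated each time it repeats an identical SQL statement already executed in the same test. The `summarize` helper and the SQL strings are hypothetical.

```python
from collections import Counter

def summarize(statements):
    """Return (total, duplicated) for a list of SQL strings.

    Assumption: a statement is "duplicated" each time it repeats an
    earlier, identical statement.
    """
    occurrences = Counter(statements)
    duplicated = sum(count - 1 for count in occurrences.values())
    return len(statements), duplicated

total, duplicated = summarize([
    "SELECT * FROM menu",
    "SELECT * FROM menu_item WHERE menu_id = 1",
    "SELECT * FROM menu",
])
```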

Exporting the Results (HTML)

For a nicer presentation, use the html command to export the results as HTML.

django-queries html

It will generate something like this.

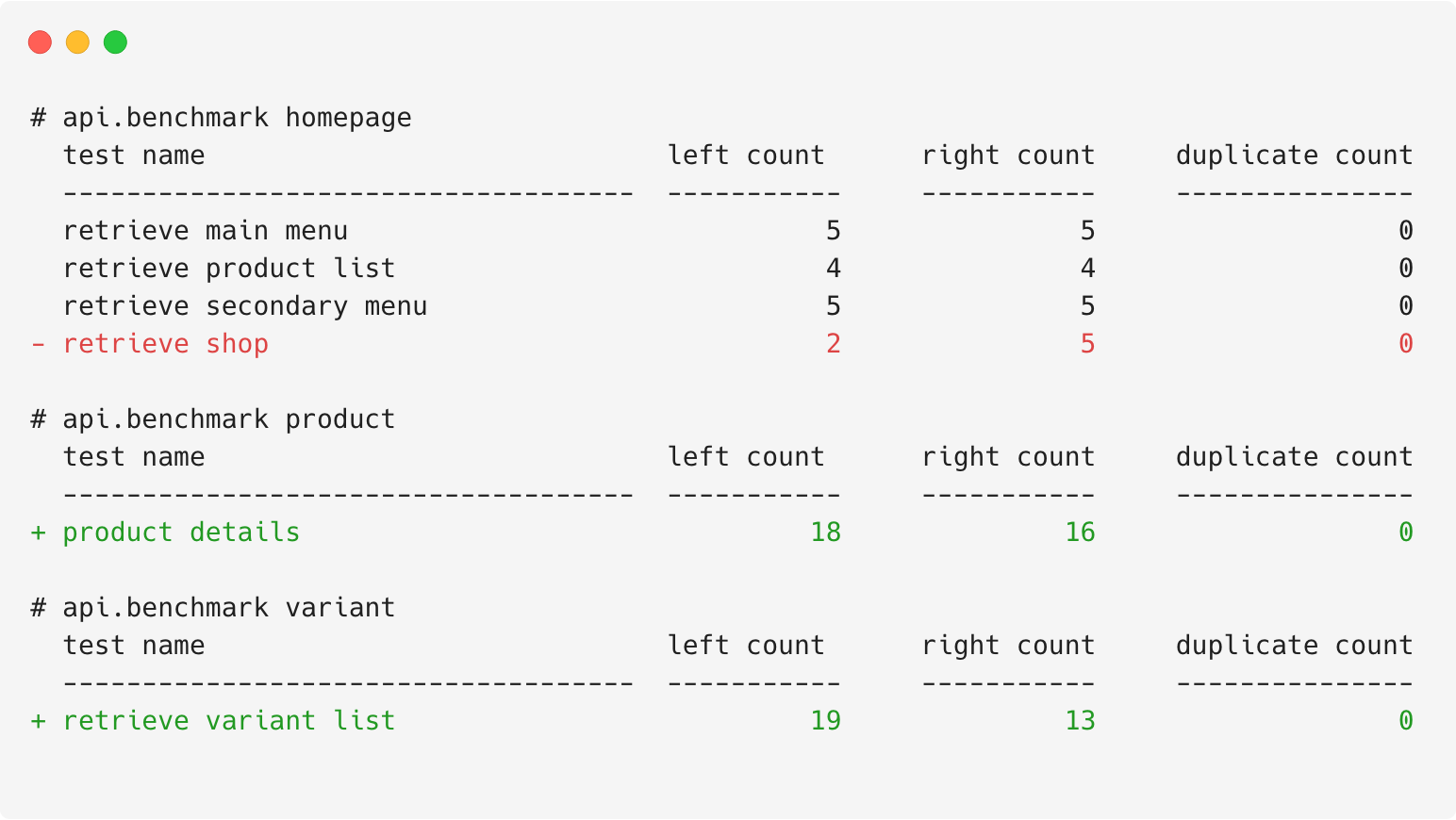

Comparing Results

After running your tests, run django-queries backup (which optionally takes a path), then rerun the tests. You can then run django-queries diff to compare the results of the two runs.
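Conceptually, a diff compares the per-test query counts of two runs. The sketch below shows that idea on plain dictionaries. The `diff_counts` helper and the dictionary layout are hypothetical, since the report file format is not described here, and the real diff command may present more information.

```python
def diff_counts(before, after):
    """Map each test name to (old, new) for tests whose query count changed."""
    changes = {}
    for name in sorted(set(before) | set(after)):
        old, new = before.get(name), after.get(name)
        if old != new:
            # None marks a test that is missing from one of the two runs.
            changes[name] = (old, new)
    return changes

before = {"test1": 0, "test2": 1}
after = {"test1": 0, "test2": 3, "test3": 2}
changes = diff_counts(before, after)
```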

Development

First, clone the project locally. Then install it with the command below.

./setup.py develop

After that, install the development and testing requirements with the command below.

pip install -e .[test]