cplusx / Qatm

Licence: other

Code for Quality-Aware Template Matching for Deep Learning

QATM: Quality-Aware Template Matching for Deep Learning

This is the official repo for the QATM DNN layer (CVPR 2019). For method details, please refer to:

@InProceedings{Cheng_2019_CVPR,
  author = {Cheng, Jiaxin and Wu, Yue and AbdAlmageed, Wael and Natarajan, Premkumar},
  title = {QATM: Quality-Aware Template Matching for Deep Learning},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2019}
}

Overview

What is QATM?

QATM is an algorithmic DNN layer that implements the idea of template matching with learnable parameters.

How does QATM work?

QATM learns similarity scores that reflect the (soft) repetitiveness of a pattern. Empirically, matching a background patch in QATM produces a much lower score than matching a foreground patch.

Below is the table of ideal matching scores:

| Matching Cases | Likelihood(s\|t) | Likelihood(t\|s) | QATM Score(s,t) |
|---|---|---|---|
| 1-to-1 | 1 | 1 | 1 |
| 1-to-N | 1 | 1/N | 1/N |
| M-to-1 | 1/M | 1 | 1/M |
| M-to-N | 1/M | 1/N | 1/MN |
| Not Matching | 1/\|\|S\|\| | 1/\|\|T\|\| | ~0 |

where

- s and t are patterns in the source and template images, respectively.
- ||S|| and ||T|| are the cardinalities of the pattern sets in the source and template images.
- See the paper for more details.
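The ideal scores in the table can be reproduced numerically. The following is a minimal NumPy sketch, assuming each likelihood is a softmax over cosine similarities scaled by a temperature `alpha`, and that the QATM score is the product of the two likelihoods. The function names, the value `alpha=25.0`, and the one-hot toy features are illustrative assumptions and are not taken from this repository's code.

```python
import numpy as np


def softmax(x, axis):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def qatm_scores(template_feats, source_feats, alpha=25.0):
    """Return an (n_t, n_s) matrix of QATM-style scores.

    Assumption: Likelihood(t|s) and Likelihood(s|t) are softmaxes of
    alpha * cosine similarity, taken over templates and sources respectively.
    """
    t = template_feats / np.linalg.norm(template_feats, axis=1, keepdims=True)
    s = source_feats / np.linalg.norm(source_feats, axis=1, keepdims=True)
    rho = t @ s.T                                  # cosine similarities
    lik_t_given_s = softmax(alpha * rho, axis=0)   # normalise over templates
    lik_s_given_t = softmax(alpha * rho, axis=1)   # normalise over sources
    return lik_t_given_s * lik_s_given_t


# Toy M-to-N case: the same pattern appears M times in the source set and
# N times in the template set; all other patterns are mutually orthogonal.
eye = np.eye(6)
M, N = 2, 3
source = np.stack([eye[0]] * M + [eye[1], eye[2]])     # ||S|| = 4
template = np.stack([eye[0]] * N + [eye[3], eye[4]])   # ||T|| = 5

scores = qatm_scores(template, source)
print(scores[0, 0])     # matched pair: approximately 1/(M*N)
print(scores[-1, -1])   # unmatched pair: 1/(||S|| * ||T||)
```

With real CNN features the similarities are rarely exactly 0 or 1, so the scores only approximate these ideal values, and the unmatched score approaches 0 only as the pattern sets grow.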

QATM Applications

Classic Template Matching

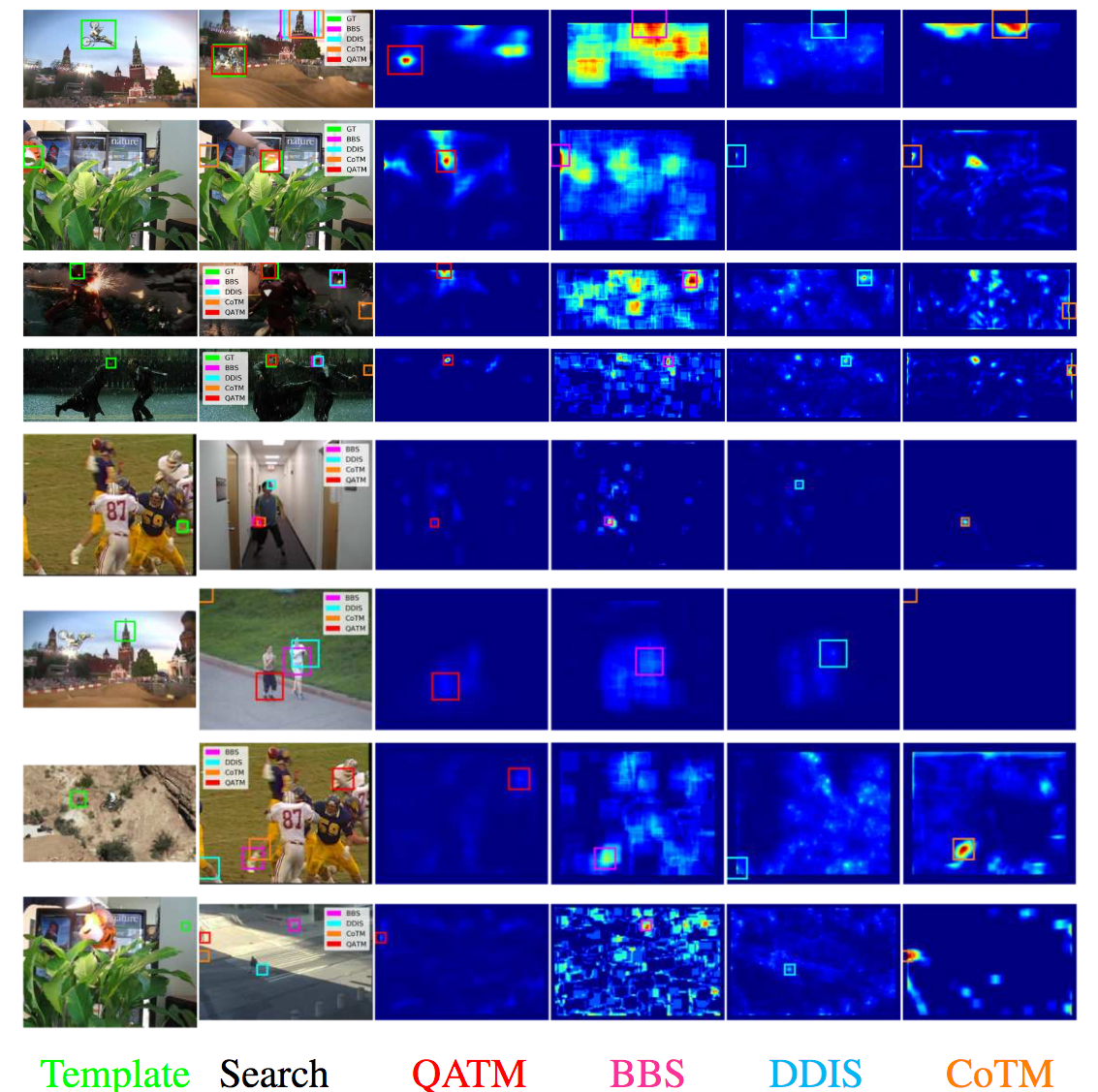

Figure 1: Qualitative template matching performance. Columns from left to right are: the template frame, the target search frame with predicted bounding boxes overlaid (different colors indicate different methods), and the response maps of QATM, BBS, DDIS, and CoTM, respectively. Rows from top to bottom: the top four are positive samples from OTB, while the bottom four are negative samples from MOTB. Best viewed in color and zoom-in mode. See the paper for detailed explanations.


Deep Image-to-GPS Matching (Image-to-Panorama Matching)

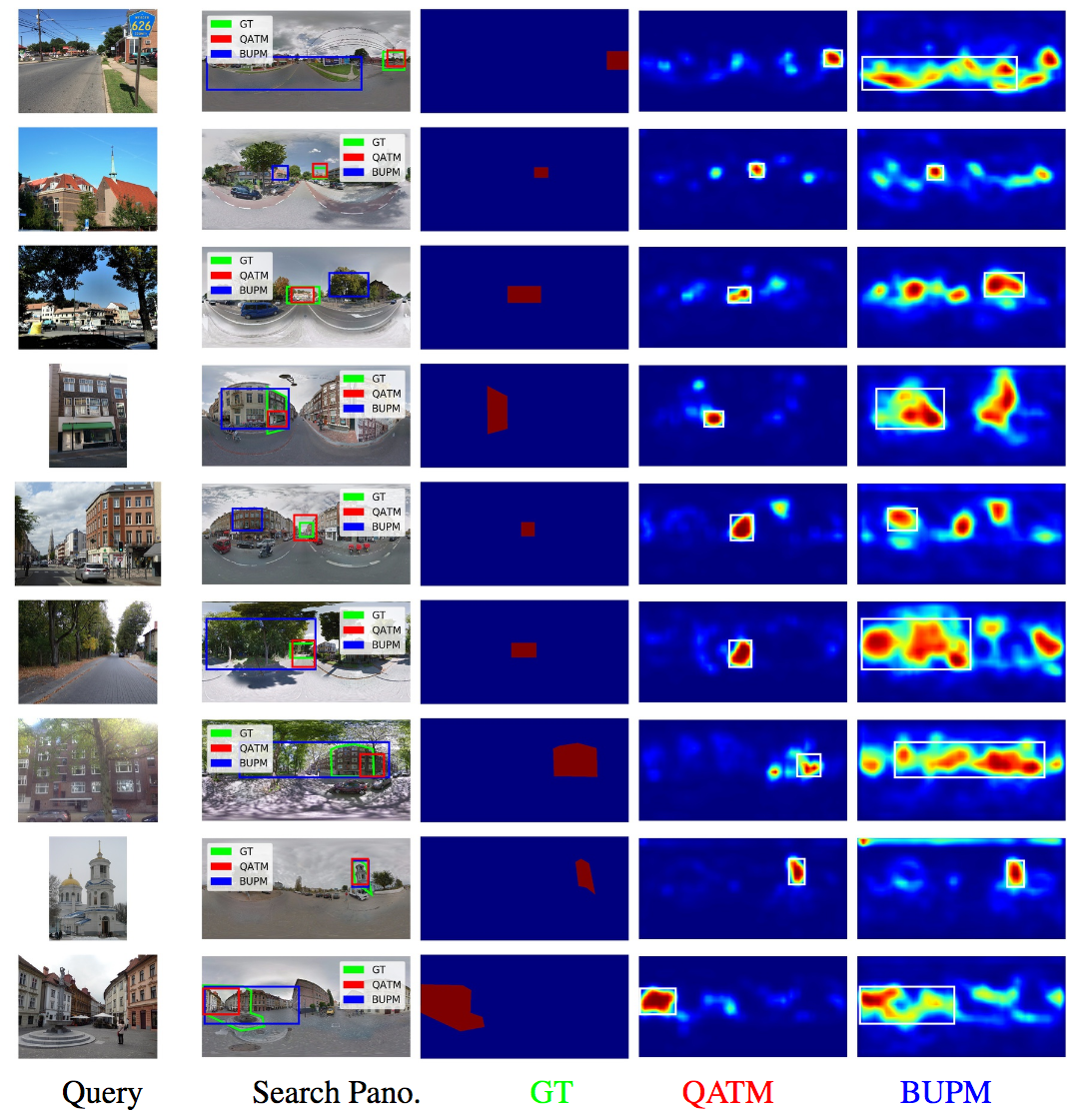

Figure 2: Qualitative image-to-GPS results. Columns from left to right are: the query image, the reference panorama image with predicted bounding boxes overlaid (GT, the proposed QATM, and the baseline BUPM), and the response maps of the ground truth mask, QATM-improved, and baseline, respectively. Best viewed in color and zoom-in mode. See the paper for detailed explanations.


Deep Semantic Image Alignment

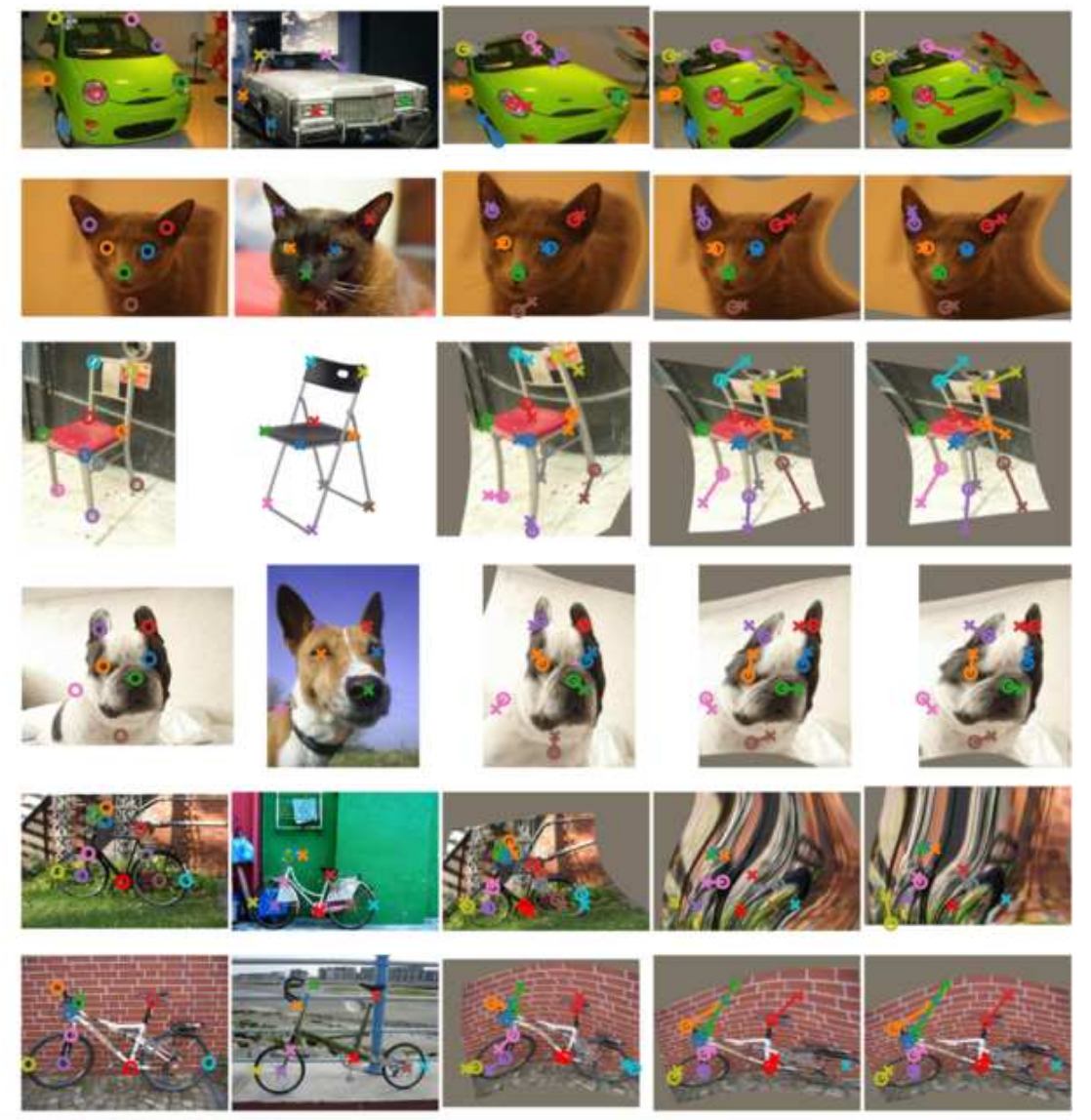

Figure 3: Qualitative results on the PF-PASCAL dataset. Columns from left to right represent the source image, the target image, and the transform results of QATM, GeoCNN, and Weakly-supervised SA. Circles and crosses indicate key points on the source and target images. Best viewed in color and zoom-in mode. See the paper for detailed explanations.


Others

See git repository https://github.com/kamata1729/QATM_pytorch.git

Dependencies

- These are the dependencies used in our experiments. The exact versions are not required, but later versions are preferred.
- keras=2.2.4
- tensorflow=1.9.0
- opencv=4.0.0 (opencv>=3.1.0 should also work)
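To compare a local environment against the versions listed above, a small check like the following may help. It is only a sketch: the helper names are hypothetical, and the pip distribution name `opencv-python` is an assumption (the list above only says `opencv`, which is the conda package name).

```python
from importlib import metadata

# Versions listed in this README; "opencv-python" is an assumed pip name.
LISTED = {"keras": "2.2.4", "tensorflow": "1.9.0", "opencv-python": "4.0.0"}


def parse_version(text):
    """Turn '4.0.0' into (4, 0, 0); non-numeric parts are dropped."""
    return tuple(int(p) for p in text.split(".")[:3] if p.isdigit())


def report(listed=LISTED):
    """Map each package to (listed version, installed version or None)."""
    result = {}
    for name, wanted in listed.items():
        try:
            found = metadata.version(name)
        except metadata.PackageNotFoundError:
            found = None  # package is not installed in this environment
        result[name] = (wanted, found)
    return result


for name, (wanted, found) in report().items():
    if found is None:
        status = "not installed"
    elif parse_version(found) >= parse_version(wanted):
        status = "same or later"
    else:
        status = "older than listed"
    print(f"{name}: listed {wanted}, installed {found} ({status})")
```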

Demo Code

Run one sample

Run OTB dataset in paper

For implementations in other DNN libraries
