
RisingStack bootcamp

General

- Always handle errors (at the right place; sometimes it's better to let them propagate)
- Write unit tests for everything
- Use `winston` for logging
- Use `joi` for schema validation (you can validate anything from environment variables to the body of an HTTP request)
- Leverage `lodash` and `lodash/fp` functions
- Avoid callbacks, use `util.promisify` to convert them
- Read the You Don't Know JS books
- Use the latest and greatest ES features
- Follow the clean coding guidelines
- Enjoy! :)
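As an illustration of the `util.promisify` advice, this sketch wraps a made-up error-first callback function (`legacyAdd` is hypothetical, standing in for any callback-based API) so that it can be used with `async`/`await`:

```js
'use strict'

const util = require('util')

// Hypothetical callback-style API following the Node.js error-first convention
function legacyAdd(a, b, callback) {
  setImmediate(() => {
    if (typeof a !== 'number' || typeof b !== 'number') {
      callback(new TypeError('both arguments must be numbers'))
      return
    }
    callback(null, a + b)
  })
}

// util.promisify returns a function that resolves with the callback's result
// and rejects with the callback's error
const add = util.promisify(legacyAdd)

async function main() {
  const sum = await add(2, 3)
  console.log(sum) // prints 5

  try {
    await add(2, 'three')
  } catch (err) {
    console.error(err.message) // prints "both arguments must be numbers"
  }
}

module.exports = { add, main }
```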

Installation

Install Node 8 and the latest npm.

For this, use nvm, the Node version manager.

```bash
$ nvm install 8
# optional: set it as default
$ nvm alias default 8
# install latest npm
$ npm install -g npm
```

Install PostgreSQL on your system

Preferably with Homebrew.

```bash
$ brew install postgresql
# create a database
$ createdb risingstack_bootcamp
```

You should also install a visual tool for PostgreSQL, pick one:

Install Redis on your system

Preferably with Homebrew.

```bash
$ brew install redis
```

Install project dependencies

You only need to install them once: the packages needed for all of the steps are included.

```bash
$ npm install
```

Set up your development environment

If you already have a favorite editor or IDE, you can skip this step.

- Download Visual Studio Code

- Install the following extensions:

- Read the Node.js tutorial

Steps

1. Create a simple web application and make the test pass

Tasks:

- [ ] Create a `GET` endpoint `/hello` returning `Hello Node.js!` in the response body, use the middleware of the `koa-router` package
- [ ] Use the `PORT` environment variable to set the port, make it required
- [ ] Make the tests pass (`npm run test-web`)
- [ ] Run the application (e.g. `PORT=3000 npm start`) and check that it fails when `PORT` is not provided
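One way to make `PORT` required is to validate the environment once at startup and fail fast. The sketch below uses a hand-written check in place of the `joi` schema recommended above, so it runs without dependencies; the `loadConfig` name and the returned shape are assumptions.

```js
'use strict'

// Hypothetical config loader: validates the environment once, at startup.
// In the bootcamp you would express the same rules with a joi schema.
function loadConfig(env) {
  if (env.PORT === undefined || env.PORT === '') {
    throw new Error('PORT environment variable is required')
  }

  const port = Number(env.PORT)
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`PORT must be an integer between 1 and 65535, got "${env.PORT}"`)
  }

  return { port }
}

module.exports = loadConfig
```

With something like this in place, `web/index.js` could call `loadConfig(process.env)` before starting the server, so that `npm start` without `PORT` stops with a clear error instead of starting on an unexpected port.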

Readings:

- 12 factor - Config
- Node `process`
- Koa web framework
- Koa router
- Mocha test framework
- Chai assertion library

2. Create a model for the GitHub API

In this step you will implement two functions, wrappers for the GitHub API. You will use them to get information from GitHub later.

Tasks:

- [ ] `searchRepositories(query)`: should search for repositories given certain programming languages and/or keywords
  - The `query` function parameter is an `Object` of key-value pairs of the request query parameters (e.g. `{ q: 'language:javascript' }`, defaults to `{}`)
  - It returns a `Promise` of the HTTP response without modification
- [ ] `getContributors(repository, query)`: should get the contributors list with additions, deletions, and commit counts (statistics)
  - The `repository` function parameter is a `String` of the repository full name, including the owner (e.g. `RisingStack/cache`)
  - The `query` function parameter is an `Object` of key-value pairs of the request query parameters (defaults to `{}`)
  - It returns a `Promise` of the HTTP response without modification
- [ ] Write unit tests for each function, use `nock` to intercept HTTP calls to the GitHub API endpoints
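A minimal sketch of the two wrappers, assuming Node's built-in `https` module in place of `request-promise-native` and a hypothetical `createGithubModel(get)` factory whose transport can be swapped out in tests (with `nock` you would keep the default transport and intercept the requests instead). The endpoints are GitHub API v3's `GET /search/repositories` and `GET /repos/:owner/:repo/stats/contributors`; resolving with the parsed JSON body is how this sketch interprets "the HTTP response without modification".

```js
'use strict'

const https = require('https')
const querystring = require('querystring')
const { URL } = require('url')

const API_URL = 'https://api.github.com'

// Minimal transport: GET a URL and resolve with the parsed JSON body
function getJson(url) {
  const { hostname, pathname, search } = new URL(url)
  return new Promise((resolve, reject) => {
    const req = https.get({
      hostname,
      path: pathname + search,
      // GitHub rejects API requests without a User-Agent header
      headers: { 'User-Agent': 'risingstack-bootcamp', Accept: 'application/vnd.github.v3+json' }
    }, (res) => {
      let body = ''
      res.setEncoding('utf8')
      res.on('data', (chunk) => { body += chunk })
      res.on('end', () => {
        if (res.statusCode >= 400) {
          reject(new Error(`GitHub API responded with ${res.statusCode}`))
          return
        }
        try {
          resolve(JSON.parse(body))
        } catch (err) {
          reject(err)
        }
      })
    })
    req.on('error', reject)
  })
}

// Appends the query string only when there are query parameters
function buildUrl(path, query) {
  const qs = querystring.stringify(query)
  return qs ? `${API_URL}${path}?${qs}` : `${API_URL}${path}`
}

// Hypothetical factory: `get` is injectable so the functions can be exercised without the network
function createGithubModel(get = getJson) {
  function searchRepositories(query = {}) {
    return get(buildUrl('/search/repositories', query))
  }

  function getContributors(repository, query = {}) {
    return get(buildUrl(`/repos/${repository}/stats/contributors`, query))
  }

  return { searchRepositories, getContributors }
}

module.exports = createGithubModel
```

Keep in mind that GitHub can answer the statistics endpoint with `202 Accepted` while it is still computing the data; the caller may need to retry later, and this sketch does not handle that case.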

Readings:

- GitHub API v3
- `request` & `request-promise-native` packages
- `nock` for mocking endpoints

Extra:

- Use the GitHub API v4 (GraphQL API) instead

3. Implement the database models

In this step you will create the database tables, where the data will be stored, using migrations.

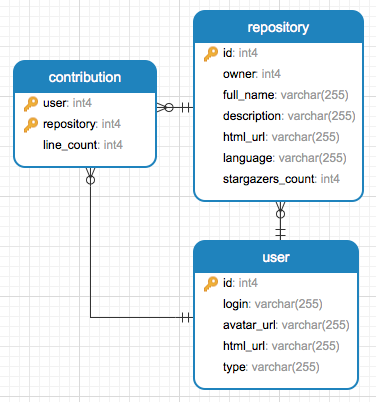

Your model should look like this:

It consists of 3 tables: user, repository, contribution. Rows in the repository table have foreign keys to a record in the user table, owner. The contribution table is managing many-to-many relationship between the user and repository tables with foreign keys.
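As an illustration of the many-to-many relationship described above, a `3-create-contribution.js` migration could declare the two foreign keys and a composite primary key along these lines. It is a sketch: the column types, the `CASCADE` delete rule and the composite key are assumptions, not requirements from the bootcamp.

```js
'use strict'

const tableName = 'contribution'

function up(knex) {
  return knex.schema.createTable(tableName, (table) => {
    // foreign keys to the two tables joined by this one
    table.integer('user').notNullable().references('id').inTable('user').onDelete('CASCADE')
    table.integer('repository').notNullable().references('id').inTable('repository').onDelete('CASCADE')
    table.integer('line_count').notNullable().defaultTo(0)
    // one row per (user, repository) pair; this also gives an
    // insert-or-replace query a conflict target
    table.primary(['user', 'repository'])
  })
}

function down(knex) {
  return knex.schema.dropTableIfExists(tableName)
}

module.exports = { up, down }
```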

Tasks:

- [ ] Edit the config and specify the `migrations` field in the knex initialization `Object`, for example: `{ client: 'pg', connection: '...', migrations: { directory: path.join(__dirname, './migrations') } }`
- [ ] Create one migration file per table (e.g. `1-create-user.js`, `2-create-repository.js`, `3-create-contribution.js`) with the following skeleton:
  - The `up` method has the logic for the migration, `down` is for reverting it
  - The migrations are executed in transactions
  - The files are executed in alphabetical order

```js
'use strict'

const tableName = '...'

function up(knex) {
  return knex.schema.createTable(tableName, (table) => {
    // your code goes here
  })
}

function down(knex) {
  return knex.schema.dropTableIfExists(tableName)
}

module.exports = { up, down }
```

- [ ] Add a `migrate-db` script to the scripts in `package.json`, then edit `scripts/migrate-db.js` to add the migration call. Finally, run your migration script to create the tables:

```bash
$ npm run migrate-db -- --local
```
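For example, `1-create-user.js` could fill in the skeleton above like this. The columns mirror the `User.insert({ id, login, avatar_url, html_url, type })` helper from step 4; the column types, and using GitHub's own user `id` as the primary key, are assumptions.

```js
'use strict'

const tableName = 'user'

function up(knex) {
  return knex.schema.createTable(tableName, (table) => {
    table.integer('id').primary() // GitHub's user id, so no auto-increment
    table.string('login').notNullable()
    table.string('avatar_url')
    table.string('html_url')
    table.string('type')
  })
}

function down(knex) {
  return knex.schema.dropTableIfExists(tableName)
}

module.exports = { up, down }
```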

Readings:

- `knex` SQL query builder
- `knex` migrations API
- npm scripts

4. Implement helper functions for the database models

In this step you will implement and test helper functions for inserting, changing and reading data from the database.

Tasks:

- [ ] Implement the user model:
  - `User.insert({ id, login, avatar_url, html_url, type })`
    - Validate the parameters
  - `User.read({ id, login })`
    - Validate the parameters
    - One is required: `id` or `login`
- [ ] Implement the repository model:
  - `Repository.insert({ id, owner, full_name, description, html_url, language, stargazers_count })`
    - Validate the parameters
    - `description` and `language` can be empty `String`s
  - `Repository.read({ id, full_name })`
    - Validate the parameters
    - One is required: `id` or `full_name`
    - Return the owner as well, as an object (join the tables and reorganize the fields)
- [ ] Implement the contribution model:
  - `Contribution.insert({ repository, user, line_count })`
    - Validate the parameters
  - `Contribution.insertOrReplace({ repository, user, line_count })`
    - Validate the parameters
    - Use a raw query and the `ON CONFLICT` SQL expression
  - `Contribution.read({ user: { id, login }, repository: { id, full_name } })`
    - Validate the parameters
    - The function parameter should be an `Object` that contains a `user` field, a `repository` field, or both. If only the user is provided, then all the contributions of that user will be resolved. If only the repository is provided, then all the users who contributed to that repository will be resolved. If both are provided, then it will match the contribution of a particular user to a particular repository.
    - The function resolves to an `Array` of contributions (when both a user and a repository identifier are passed, it will have only one element)
    - Return the repository and the user as well, as objects (this requirement is just for the sake of making up a problem; when you actually need this function, you will most likely already have the whole user or repository `Object`):

      ```
      { line_count: 10, user: { id: 1, login: 'coconut', ... }, repository: { id: 1, full_name: 'risingstack/repo', ... } }
      ```

    - Use a single SQL query
    - When you join the tables, there will be conflicting column names (`id`, `html_url`). Use the `as` keyword when selecting columns (e.g. `repository.id as repository_id`) to avoid this
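As a starting point for `Contribution.insertOrReplace`, here is a sketch using `knex.raw` with positional `?` bindings. It assumes `db` is a knex instance, that `repository` and `user` are integer ids, and that the `contribution` table has a primary key or unique constraint on the (`repository`, `user`) pair, which `ON CONFLICT` needs as its conflict target. `createContributionModel` is a hypothetical name, and the hand-written checks stand in for a `joi` schema.

```js
'use strict'

function createContributionModel(db) {
  async function insertOrReplace({ repository, user, line_count } = {}) {
    // hand-written checks standing in for a joi schema
    if (!Number.isInteger(repository) || !Number.isInteger(user)) {
      throw new TypeError('repository and user must be integer ids')
    }
    if (!Number.isInteger(line_count)) {
      throw new TypeError('line_count must be an integer')
    }

    // "user" is double-quoted because it is a reserved keyword in PostgreSQL;
    // the ? placeholders are bound by knex, so values are never concatenated into SQL
    return db.raw(`
      INSERT INTO contribution (repository, "user", line_count)
      VALUES (?, ?, ?)
      ON CONFLICT (repository, "user") DO UPDATE SET line_count = EXCLUDED.line_count
      RETURNING *
    `, [repository, user, line_count])
  }

  return { insertOrReplace }
}

module.exports = createContributionModel
```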

Notes:

- `user` is a reserved keyword in PG, so use double quotes wherever you reference the table in a raw query
- You can get the columns of a table by querying `information_schema.columns`, which can be useful to select fields dynamically when joining tables, e.g.:

```sql
SELECT column_name FROM information_schema.columns WHERE table_name='contribution';
```
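One way to meet the `Contribution.read` requirements with a single SQL query is sketched below: the join selects aliased columns (`user_id`, `repository_full_name`, ...) and a small helper nests them back into `user` and `repository` objects. The selected columns and the `createContributionReader` and `nestByPrefix` names are assumptions, and the code relies on knex's `raw()` resolving to the `pg` result object (with a `rows` array) when the `pg` client is used.

```js
'use strict'

// "user" is quoted because it is reserved in PostgreSQL; the aliases avoid id / html_url clashes
const SELECT_CONTRIBUTIONS = `
  SELECT contribution.line_count,
    "user".id AS user_id, "user".login AS user_login, "user".html_url AS user_html_url,
    repository.id AS repository_id, repository.full_name AS repository_full_name,
    repository.html_url AS repository_html_url
  FROM contribution
  JOIN "user" ON "user".id = contribution."user"
  JOIN repository ON repository.id = contribution.repository`

// Turns { line_count, user_id, user_login, ... } into { line_count, user: { id, login, ... } }
function nestByPrefix(row, prefixes) {
  const result = {}
  prefixes.forEach((prefix) => { result[prefix] = {} })
  Object.keys(row).forEach((column) => {
    const prefix = prefixes.find((p) => column.startsWith(`${p}_`))
    if (prefix) {
      result[prefix][column.slice(prefix.length + 1)] = row[column]
    } else {
      result[column] = row[column]
    }
  })
  return result
}

function createContributionReader(db) {
  async function read({ user, repository } = {}) {
    const conditions = []
    const bindings = []
    // column names come from this fixed list only; values are always bound
    const addCondition = (column, value) => {
      if (value !== undefined) {
        conditions.push(`${column} = ?`)
        bindings.push(value)
      }
    }

    if (user) {
      addCondition('"user".id', user.id)
      addCondition('"user".login', user.login)
    }
    if (repository) {
      addCondition('repository.id', repository.id)
      addCondition('repository.full_name', repository.full_name)
    }
    if (conditions.length === 0) {
      throw new TypeError('a user (id or login) and/or a repository (id or full_name) is required')
    }

    const result = await db.raw(`${SELECT_CONTRIBUTIONS} WHERE ${conditions.join(' AND ')}`, bindings)
    return result.rows.map((row) => nestByPrefix(row, ['user', 'repository']))
  }

  return { read }
}

module.exports = { createContributionReader, nestByPrefix }
```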

5. Create a worker process

In this step you will implement another process of the application, the worker. We will trigger a request to collect the contributions for repositories based on some query. The trigger handler will send messages to another channel, whose handler is responsible for fetching the repositories. The third channel is used to fetch and save the contributions.

Make a drawing of the message flow, it will help you a lot!
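The fan-out at the start of this flow can be sketched as a plain function. In the sketch below, `publish(channel, message)` is a hypothetical stand-in for whatever publishing helper your redis model exposes (assumed to return a `Promise`), the checks are hand-written rather than a `joi` schema, and the `repository` channel name and the page count come from the trigger handler task below.

```js
'use strict'

// GitHub search exposes only the first 1000 results: 10 pages of 100
const PAGE_COUNT = 10

// Hypothetical trigger handler factory; `publish` is injected so the
// handler does not depend on a particular Redis client
function createTriggerHandler(publish) {
  return async function onTrigger(message) {
    if (!message || typeof message.query !== 'string' || message.date === undefined) {
      throw new TypeError('a trigger message needs a date and a query string')
    }

    const { date, query } = message
    const pages = Array.from({ length: PAGE_COUNT }, (_, index) => index + 1)

    // one message per search result page, all carrying the same date and query
    await Promise.all(pages.map((page) => publish('repository', { date, query, page })))
    return pages.length
  }
}

module.exports = createTriggerHandler
```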

Tasks:

- [ ] Start Redis locally
- [ ] Implement the contributions handler:
  - The responsibility of the contributions handler is to fetch the contributions of a repository from the GitHub API and to save the contributors and their line counts to the database
  - Validate the `message`, it has two fields: `date` and `repository` (with `id` and `full_name` fields)
  - Get the contributions from the GitHub API (use your models created in step 2)
  - Count all the lines currently in the repository per user (use `lodash` and `Array` functions)
  - Save the users to the database, don't fail if the user already exists (use your models created in step 3)
  - Save the contributions to the database, insert or replace (use your models created in step 3)
- [ ] Implement the repository handler:
  - Validate the `message`, it has three fields: `date`, `query` and `page`
  - Get the repositories from the GitHub API (use your models created in step 2) with the `q`, `page` and `per_page` (set to 100) query parameters
  - Modify the response to a format which is close to the database models (try to use `lodash/fp`)
  - Save the owner to the database, don't fail if the user already exists (use your models created in step 3)
  - Save the repository to the database, don't fail if the repository already exists (use your models created in step 3)
  - Publish a message to the `contributions` channel with the same `date`
- [ ] Implement the trigger handler:
  - The responsibility of the trigger handler is to send 10 messages to the `repository` collect channel implemented above: 10, because GitHub only gives access to the first 1000 search results (10 * page size of 100)
  - Validate the `message`, it has two fields: `date` and `query`
- [ ] We would like to make our first search and data collection from GitHub.
  - For this, create a `trigger.js` file in the `scripts` folder. It should be a simple run-once Node script which publishes a message to the `trigger` channel with the query passed in as an environment variable (`TRIGGER_QUERY`), then exits. It should have the same `--local`, `-L` flag as the `migrate-db` script, but for setting the `REDIS_URI`.
  - Add a `trigger` field to the scripts in `package.json` that calls your `trigger.js` script.
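The data-shaping parts of the contributions and repository handlers can be written as pure functions, which keeps them easy to unit test. The sketch below uses plain `Array` methods where the tasks suggest `lodash`/`lodash/fp`; the field names follow the GitHub API v3 responses, and computing the line count as additions minus deletions is an assumption about what "lines currently in the repository" means.

```js
'use strict'

// Picks the columns used by User.insert from a GitHub account object
function toUser(account) {
  return {
    id: account.id,
    login: account.login,
    avatar_url: account.avatar_url,
    html_url: account.html_url,
    type: account.type
  }
}

// Contributions handler: /stats/contributors returns [{ author, total, weeks: [{ w, a, d, c }] }],
// where a = additions and d = deletions for that week
function countLinesPerUser(stats) {
  return stats
    .filter((entry) => entry.author) // defensive assumption: skip entries without an author
    .map((entry) => ({
      user: toUser(entry.author),
      line_count: entry.weeks.reduce((sum, week) => sum + week.a - week.d, 0)
    }))
}

// Repository handler: reshapes search results into owner + repository records;
// GitHub may return null for description and language, the models expect strings
function toRepositoryRecords(searchResponse) {
  return searchResponse.items.map((item) => ({
    owner: toUser(item.owner),
    repository: {
      id: item.id,
      owner: item.owner.id,
      full_name: item.full_name,
      description: item.description || '',
      html_url: item.html_url,
      language: item.language || '',
      stargazers_count: item.stargazers_count
    }
  }))
}

module.exports = { countLinesPerUser, toRepositoryRecords }
```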

Readings:

6. Implement a REST API

In this step you will add a few routes to the existing web application to trigger a data crawl and to expose the collected data.

Tasks:

- [ ] The database requirements changed in the meantime: create a new migration (call it `4-add-indexes.js`) and add indexes to `user.login` and `repository.full_name` (use `knex.schema.alterTable`)
- [ ] Implement the `POST /api/v1/trigger` route. The body contains an object with a string `query` field; use this query to send a message to the corresponding Redis channel. Return `201` when it was successful
- [ ] Implement the `GET /api/v1/repository/:id` and `GET /api/v1/repository/:owner/:name` endpoints
- [ ] Implement the `GET /api/v1/repository/:id/contributions` and `GET /api/v1/repository/:owner/:name/contributions` endpoints
- [ ] Create a middleware (`requestLogger({ level = 'silly' })`) that logs the following, and add it to your server:
  - The method and original URL of the request
  - Request headers (except `authorization` and `cookie`) and body
  - The request duration in `ms`
  - Response headers (except `authorization` and `cookie`) and body
  - Response status code (the log level depends on it: `error` for server errors, `warn` for client errors)
- [ ] Document your API using Apiary's Blueprint format (edit `API_DOCUMENTATION.apib`)
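The `POST /api/v1/trigger` handler boils down to validate, publish, respond. This sketch writes it as a plain Koa-style `async (ctx) => {}` handler; `publish(channel, message)` is a hypothetical redis-model helper, `ctx.request.body` assumes a body parser such as `koa-bodyparser` has populated it, the inline check stands in for the validator middleware, and the `400` response body is an assumption.

```js
'use strict'

// Hypothetical handler for POST /api/v1/trigger
function createTriggerRoute(publish) {
  return async function triggerRoute(ctx) {
    const body = ctx.request.body || {}
    if (typeof body.query !== 'string' || body.query.trim() === '') {
      ctx.status = 400
      ctx.body = { message: 'body.query must be a non-empty string' }
      return
    }

    // same message shape the worker's trigger handler validates: date and query
    await publish('trigger', { date: new Date().toISOString(), query: body.query })
    ctx.status = 201
  }
}

module.exports = createTriggerRoute
```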

Notes:

- Make use of `koa-compose` and the validator middleware:

```js
compose([
  middleware.validator({
    params: paramsSchema,
    query: querySchema,
    body: bodySchema
  }),
  // additional middleware
])
```
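A sketch of `requestLogger` as an ordinary Koa middleware (`async (ctx, next) => {}`). The `logger` option is an assumption added for testability and is expected to expose winston's `log(level, message, meta)` method; the request body is only available if a body parser has populated `ctx.request.body`. It could then be registered with `app.use(requestLogger({ logger }))`.

```js
'use strict'

const SENSITIVE_HEADERS = ['authorization', 'cookie']

// Copies the headers, leaving out the ones that must not be logged
function omitSensitiveHeaders(headers = {}) {
  const result = {}
  Object.keys(headers).forEach((name) => {
    if (!SENSITIVE_HEADERS.includes(name.toLowerCase())) {
      result[name] = headers[name]
    }
  })
  return result
}

// error for server errors, warn for client errors, the configured level otherwise
function levelForStatus(status, defaultLevel) {
  if (status >= 500) return 'error'
  if (status >= 400) return 'warn'
  return defaultLevel
}

function requestLogger({ level = 'silly', logger } = {}) {
  return async function requestLoggerMiddleware(ctx, next) {
    const start = Date.now()
    let status
    try {
      await next()
      status = ctx.status
    } catch (err) {
      // the error is rethrown and turned into a response upstream; log its likely status
      status = err.status || 500
      throw err
    } finally {
      logger.log(levelForStatus(status, level), `${ctx.method} ${ctx.originalUrl}`, {
        durationMs: Date.now() - start,
        request: { headers: omitSensitiveHeaders(ctx.request.headers), body: ctx.request.body },
        response: { status, headers: omitSensitiveHeaders(ctx.response.headers), body: ctx.response.body }
      })
    }
  }
}

module.exports = requestLogger
```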

Readings:

7. Prepare your service for production

In this step you will add some features that are required to run your application in a production environment.

Tasks:

- [ ] Listen on the `SIGTERM` signal in `web/index.js`.
  - Create a function called `gracefulShutdown`
  - Use koa's `.callback()` function to create an `http` server (look for `http.createServer`) and convert `server.close` with `util.promisify`
  - Close the server and destroy the database and redis connections (add a `destroy` function to the redis model, which calls `disconnect` on both redis clients and returns a `Promise`)
  - Log the error and exit the process with code `1` if something fails
  - Exit the process with code `0` if everything is closed successfully
- [ ] Implement the same for the worker process
- [ ] Add a health check endpoint for the web server
  - Add a `healthCheck` function to the database model, use the `PG_HEALTH_CHECK_TIMEOUT` environment variable to set the query timeout (set default to `2000` ms)
  - Add a `healthCheck` function to the redis model
  - Implement the `GET /healthz` endpoint, return `200` with JSON body `{ "status": "ok" }` when everything is healthy, `500` if any of the database or redis connections are not healthy and `503` if the process got the `SIGTERM` signal
- [ ] Create an HTTP server and add a similar health check endpoint for the worker process
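The production tasks above can be sketched with Node's built-in `util` module and a few injectable pieces. In this sketch, `server` would come from `http.createServer(app.callback())`, `resources` stands for the database and redis models (anything with a `destroy()` method returning a `Promise`, which a knex instance has), `exit` is injectable so the logic can be tested, and the database timeout is enforced with `Promise.race` instead of a driver-level query timeout, so a slow query is abandoned rather than cancelled. The function names and the non-`200` response bodies are assumptions.

```js
'use strict'

const util = require('util')

// Rejects if `promise` does not settle within `ms` milliseconds.
// The underlying operation is not cancelled, only abandoned.
function withTimeout(promise, ms) {
  let timer
  const timeout = new Promise((resolve, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${ms} ms`)), ms)
  })
  return Promise.race([promise, timeout]).then(
    (value) => {
      clearTimeout(timer)
      return value
    },
    (err) => {
      clearTimeout(timer)
      throw err
    }
  )
}

// Hypothetical database healthCheck: `db` is assumed to be a knex instance
function createDbHealthCheck(db, env = process.env) {
  const timeout = Number(env.PG_HEALTH_CHECK_TIMEOUT) || 2000
  return () => withTimeout(db.raw('SELECT 1'), timeout)
}

// Hypothetical GET /healthz handler; `checks` are functions returning Promises
function createHealthz({ checks, isShuttingDown }) {
  return async function healthz(ctx) {
    if (isShuttingDown()) {
      ctx.status = 503
      ctx.body = { status: 'shutting down' }
      return
    }
    try {
      await Promise.all(checks.map((check) => check()))
      ctx.status = 200
      ctx.body = { status: 'ok' }
    } catch (err) {
      ctx.status = 500
      ctx.body = { status: 'error' }
    }
  }
}

// Hypothetical gracefulShutdown factory for an http.Server and a list of resources
function createGracefulShutdown({ server, resources = [], logger = console, exit = (code) => process.exit(code) }) {
  const closeServer = util.promisify(server.close.bind(server))
  let shuttingDown = false

  async function gracefulShutdown() {
    shuttingDown = true // from now on the /healthz handler answers 503
    try {
      await closeServer()
      await Promise.all(resources.map((resource) => resource.destroy()))
    } catch (err) {
      logger.error('graceful shutdown failed', err)
      exit(1)
      return
    }
    exit(0)
  }

  return { gracefulShutdown, isShuttingDown: () => shuttingDown }
}

module.exports = { withTimeout, createDbHealthCheck, createHealthz, createGracefulShutdown }
```

Wiring it up could then look like `process.once('SIGTERM', gracefulShutdown)`, with `isShuttingDown` passed to the `/healthz` handler and the database and redis `healthCheck` functions passed as `checks`.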

Readings: