meng-tang / Rloss


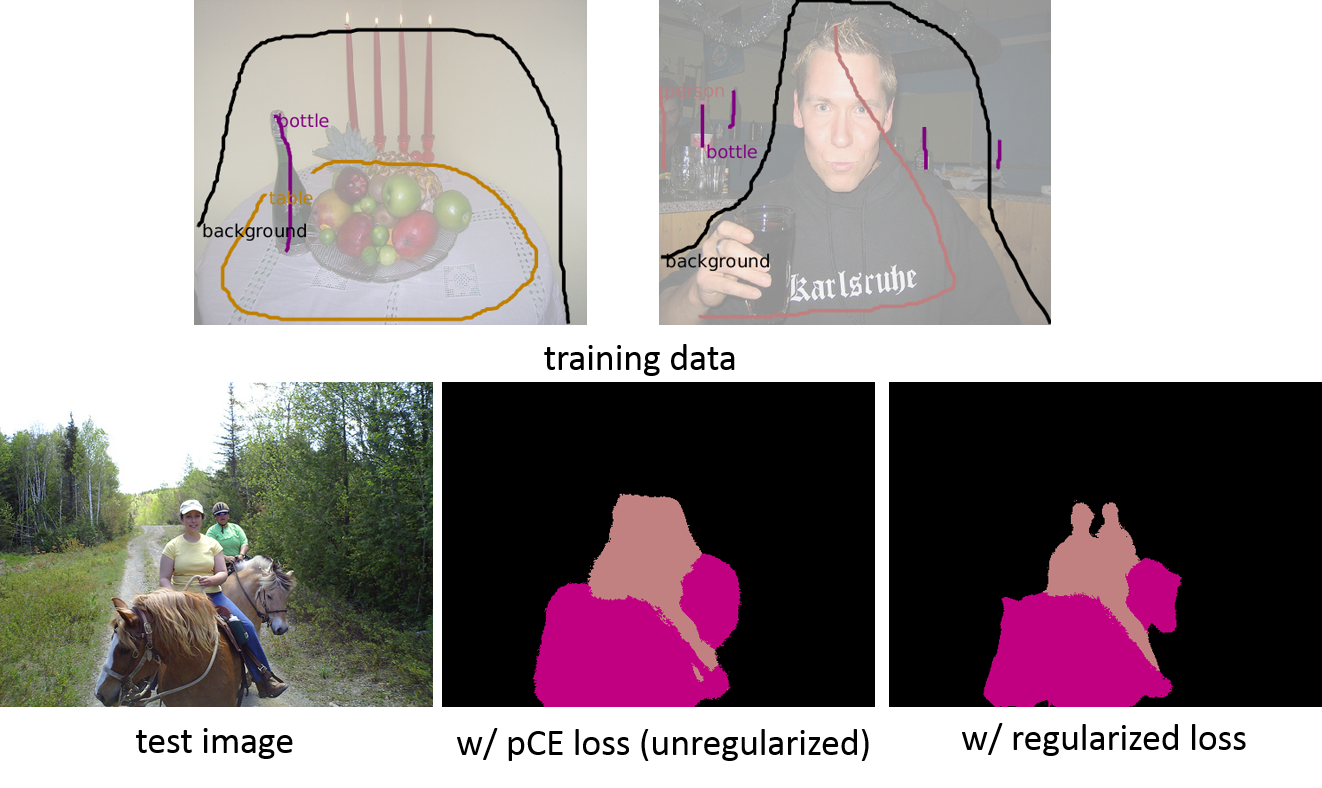

Regularized Losses (rloss) for Weakly-supervised CNN Segmentation

(Caffe and PyTorch)

To train CNNs for semantic segmentation with weak supervision (e.g., scribbles), we propose a regularized loss framework. The loss has two parts: a partial cross-entropy (pCE) loss over the scribbles and a regularization loss, e.g., DenseCRF.
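A minimal sketch of how the two parts combine. It assumes softmax probabilities stored as plain Python lists, and it assumes unlabeled (non-scribble) pixels carry the ignore label 255, a common PASCAL VOC convention that may not match this repository. `partial_cross_entropy`, `regularized_loss`, and `reg_weight` are hypothetical names, not the repository's API. The cross-entropy is averaged over scribble pixels only, while the regularizer sees every pixel.

```python
import math

# Assumption (hypothetical convention): pixels without a scribble label carry 255.
IGNORE_LABEL = 255


def partial_cross_entropy(probs, labels, ignore_label=IGNORE_LABEL):
    """Mean cross-entropy over scribble-labeled pixels only.

    probs:  per-pixel class probability vectors (e.g. softmax outputs).
    labels: per-pixel class index, or ignore_label for unlabeled pixels.
    """
    total, count = 0.0, 0
    for p, y in zip(probs, labels):
        if y == ignore_label:
            continue  # unlabeled pixels contribute nothing to pCE
        total -= math.log(p[y])
        count += 1
    return total / count if count else 0.0


def regularized_loss(probs, labels, reg_loss, reg_weight):
    """pCE on scribbles plus a weighted regularizer evaluated on all pixels."""
    return partial_cross_entropy(probs, labels) + reg_weight * reg_loss(probs)
```

For example, with `probs = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]` and `labels = [0, 1, 255]`, only the first two pixels enter the pCE term, while a regularizer passed as `reg_loss` still receives all three.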

If you use the code here, please cite the following paper.

"On Regularized Losses for Weakly-supervised CNN Segmentation", Meng Tang, Federico Perazzi, Abdelaziz Djelouah, Ismail Ben Ayed, Christopher Schroers, Yuri Boykov. In European Conference on Computer Vision (ECCV), Munich, Germany, September 2018.

DenseCRF loss

To add the DenseCRF loss to a CNN, include the following loss layer. It takes two bottom blobs: the first is the RGB image and the second is the soft segmentation (per-pixel class distributions). Gradients are propagated only to the segmentation, not to the image. The bandwidths of the Gaussian kernel must be specified for XY (bi_xy_std) and RGB (bi_rgb_std).

```
layer {
  bottom: "image"
  bottom: "segmentation"
  propagate_down: false
  propagate_down: true
  top: "densecrf_loss"
  name: "densecrf_loss"
  type: "DenseCRFLoss"
  loss_weight: ${DENSECRF_LOSS_WEIGHT}
  densecrf_loss_param {
    bi_xy_std: 100
    bi_rgb_std: 15
  }
}
```
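To make the role of bi_xy_std and bi_rgb_std concrete, here is a naive sketch of a DenseCRF loss. It assumes the relaxed Potts form sum_k S_k^T W (1 - S_k) with a bilateral Gaussian affinity W, which is our reading of the loss rather than a transcription of the layer. It builds W explicitly over all pixel pairs, which costs O(N^2) and only works for toy inputs; the Caffe layer instead uses fast high-dimensional Gaussian filtering, and its exact scaling may differ. The function names are hypothetical.

```python
import math


def bilateral_affinity(pixels, bi_xy_std, bi_rgb_std):
    """Dense Gaussian affinity W[i][j] over all pixel pairs (naive, O(N^2)).

    pixels: list of (x, y, (r, g, b)) tuples.
    Self-pairs are left at 0 in this sketch.
    """
    n = len(pixels)
    W = [[0.0] * n for _ in range(n)]
    for i, (xi, yi, ci) in enumerate(pixels):
        for j, (xj, yj, cj) in enumerate(pixels):
            if i == j:
                continue
            d_xy = (xi - xj) ** 2 + (yi - yj) ** 2
            d_rgb = sum((a - b) ** 2 for a, b in zip(ci, cj))
            W[i][j] = math.exp(-d_xy / (2 * bi_xy_std ** 2)
                               - d_rgb / (2 * bi_rgb_std ** 2))
    return W


def densecrf_loss(W, seg):
    """Relaxed Potts energy: sum over classes k of S_k^T W (1 - S_k).

    seg: per-pixel class probability vectors, each summing to 1.
    """
    n, num_classes = len(seg), len(seg[0])
    loss = 0.0
    for k in range(num_classes):
        for i in range(n):
            for j in range(n):
                loss += seg[i][k] * W[i][j] * (1.0 - seg[j][k])
    return loss
```

Two adjacent, identically colored pixels with the same hard label give zero loss; assigning them different labels costs 2·W[0][1]. The loss therefore rewards label agreement between pixels that are close in both position and color, with the two bandwidths controlling what counts as "close".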

The implementation of this loss layer is in:

- deeplab/src/caffe/layers/densecrf_loss_layer.cpp

- deeplab/include/caffe/layers/densecrf_loss_layer.hpp

These depend on fast high-dimensional Gaussian filtering in:

- deeplab/include/caffe/util/filterrgbxy.hpp

- deeplab/src/caffe/util/filterrgbxy.cpp

- deeplab/include/caffe/util/permutohedral.hpp

This implementation runs on the CPU and supports multi-core parallelization; to enable it, build with -fopenmp (see deeplab/Makefile). Some examples visualizing the gradients of the DenseCRF loss are in exper/visualization. To generate the visualizations yourself, run the script exper/visualize_densecrf_gradient.py.
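The gradients visualized in exper/visualization can be understood from a short derivation. It assumes the relaxed Potts form of the DenseCRF loss for soft segmentations $S^k \in [0,1]^N$ (one vector per class $k$, summing to one at every pixel) and a symmetric bilateral affinity $W$, with $p_i$ the position and $I_i$ the RGB color of pixel $i$, $\sigma_{xy}$ = bi_xy_std and $\sigma_{rgb}$ = bi_rgb_std. This is our reading of the loss, not a transcription of the layer's code.

$$
W_{ij} = \exp\left(-\frac{\lVert p_i - p_j\rVert^2}{2\,\sigma_{xy}^2} - \frac{\lVert I_i - I_j\rVert^2}{2\,\sigma_{rgb}^2}\right),
\qquad
R(S) = \sum_k S^{k\top} W \left(\mathbf{1} - S^k\right)
$$

$$
\frac{\partial R}{\partial S^k} = W\left(\mathbf{1} - S^k\right) - W^{\top} S^k = W\mathbf{1} - 2\,W S^k
$$

Because $\sum_k S^k = \mathbf{1}$, on the simplex $R(S) = \mathbf{1}^\top W \mathbf{1} - \sum_k S^{k\top} W S^k$, so up to the class-independent term $W\mathbf{1}$ the gradient is $-2\,W S^k$. Each product $W S^k$ is one bilateral Gaussian filtering of the class-$k$ map, which is why fast high-dimensional filtering matters here.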

How to train

An example training script is given in exper/run_pascal_scribble.sh. Training proceeds in two phases. First, we train with the partial cross-entropy loss only, which gives an mIOU of ~55.8% on the VOC12 val set:

- exper/pascal_scribble/config/deeplab_largeFOV/solver.prototxt

- exper/pascal_scribble/config/deeplab_largeFOV/train.prototxt

Then we fine-tune the network with an additional regularization loss, e.g., the DenseCRF loss, which boosts mIOU to ~62.3% on the val set:

- exper/pascal_scribble/config/deeplab_largeFOV/solverwithdensecrfloss.prototxt

- exper/pascal_scribble/config/deeplab_largeFOV/trainwithdensecrfloss.prototxt
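The DenseCRF loss layer definition leaves loss_weight as the shell-style placeholder ${DENSECRF_LOSS_WEIGHT}, which has to be replaced with a number before Caffe can parse the prototxt. One way to do that substitution is Python's `string.Template`, which uses the same `${NAME}` syntax. This is a sketch only: `render_prototxt` is a hypothetical helper, the weight in the usage example is a placeholder value rather than a recommended setting, and exper/run_pascal_scribble.sh may fill the placeholder by a different mechanism.

```python
from string import Template

# Fragment of the DenseCRF loss layer with the ${DENSECRF_LOSS_WEIGHT} placeholder.
LAYER_TEMPLATE = """layer {
  name: "densecrf_loss"
  type: "DenseCRFLoss"
  loss_weight: ${DENSECRF_LOSS_WEIGHT}
  densecrf_loss_param {
    bi_xy_std: 100
    bi_rgb_std: 15
  }
}
"""


def render_prototxt(template_text, densecrf_loss_weight):
    """Fill ${DENSECRF_LOSS_WEIGHT}; substitute() raises KeyError for any unfilled placeholder."""
    return Template(template_text).substitute(
        DENSECRF_LOSS_WEIGHT=densecrf_loss_weight)
```

Calling `render_prototxt(LAYER_TEMPLATE, "1e-9")` returns the same layer with `loss_weight: 1e-9`. Using `substitute` rather than `safe_substitute` makes a forgotten placeholder fail loudly instead of reaching Caffe.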

Our loss can be used with any network. For example, training the stronger deeplab_msc_largeFOV network gives ~63.2% mIOU on the val set, almost as good as with full supervision (64.1%):

- exper/pascal_scribble/config/deeplab_msc_largeFOV/solverwithdensecrfloss.prototxt

- exper/pascal_scribble/config/deeplab_msc_largeFOV/trainwithdensecrfloss.prototxt

| network | weak supervision (~3% pixels labeled), (partial) cross-entropy loss | weak supervision (~3% pixels labeled), w/ DenseCRF loss | full supervision |
| --- | --- | --- | --- |
| Deeplab_largeFOV | 55.8% | 62.3% | 63.0% |
| Deeplab_Msc_largeFOV | n/a | 63.2% | 64.1% |
| Deeplab_VGG16 | 60.7% | 64.7% | 68.8% |
| Deeplab_ResNet101 | 69.5% | 73.0% | 75.6% |

Table 1: mIOU on PASCAL VOC2012 val set
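How close weak supervision gets to full supervision can be checked with simple arithmetic on Table 1. This sketch copies the table's numbers and computes, per network, the gain from adding the DenseCRF loss, the remaining gap to full supervision (both in mIOU points), and the weakly-supervised result as a percentage of the fully-supervised one. The helper name `summarize` is hypothetical.

```python
# mIOU (%) on PASCAL VOC2012 val from Table 1: (pCE only, pCE + DenseCRF, full).
# None marks the "n/a" entry.
TABLE_1 = {
    "Deeplab_largeFOV": (55.8, 62.3, 63.0),
    "Deeplab_Msc_largeFOV": (None, 63.2, 64.1),
    "Deeplab_VGG16": (60.7, 64.7, 68.8),
    "Deeplab_ResNet101": (69.5, 73.0, 75.6),
}


def summarize(pce, with_crf, full):
    """Return (gain from DenseCRF loss, gap to full supervision, % of full mIOU)."""
    gain = None if pce is None else round(with_crf - pce, 1)
    gap = round(full - with_crf, 1)
    ratio = round(100.0 * with_crf / full, 1)
    return gain, gap, ratio


for name, row in TABLE_1.items():
    print(name, summarize(*row))
```

For Deeplab_largeFOV this gives a gain of 6.5 points, a gap of 0.7 points, and 98.9% of the fully-supervised mIOU; for Deeplab_Msc_largeFOV, a gap of 0.9 points and 98.6%.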

Trained models

The trained models for various networks with unregularized or regularized losses are released here.

Other Regularized Losses

In principle, our framework allows any differentiable regularization loss for segmentation, e.g., the normalized cut clustering criterion and size constraints:

- "Normalized Cut Loss for Weakly-supervised CNN Segmentation", Meng Tang, Abdelaziz Djelouah, Federico Perazzi, Yuri Boykov, Christopher Schroers. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, USA, June 2018.

- "Size-constraint loss for weakly supervised CNN segmentation", Hoel Kervadec, Jose Dolz, Meng Tang, Eric Granger, Yuri Boykov, Ismail Ben Ayed. In International Conference on Medical Imaging with Deep Learning (MIDL), Amsterdam, Netherlands, July 2018.
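As a sketch of how other regularizers plug into the same framework, here are toy versions of the two cited losses, operating on a list-based soft segmentation and a dense affinity matrix W. Assumptions: the normalized cut term follows the relaxed form sum_k S_k^T W (1 - S_k) / (d^T S_k) with degree vector d = W1, and the size term is a generic quadratic penalty on the soft class size outside bounds [lower, upper]. The exact formulations in the cited papers (for example, normalization or how the bounds are chosen) may differ, and all names here are hypothetical.

```python
def normalized_cut_loss(W, seg):
    """Relaxed normalized cut: sum over k of S_k^T W (1 - S_k) / (d^T S_k), d = W 1."""
    n = len(seg)
    degree = [sum(row) for row in W]  # d_i = sum_j W[i][j]
    loss = 0.0
    for k in range(len(seg[0])):
        cut = sum(seg[i][k] * W[i][j] * (1.0 - seg[j][k])
                  for i in range(n) for j in range(n))
        assoc = sum(degree[i] * seg[i][k] for i in range(n))
        if assoc > 0:  # skip classes with no (soft) mass in this sketch
            loss += cut / assoc
    return loss


def size_penalty(seg, k, lower, upper):
    """Quadratic penalty when the soft size of class k falls outside [lower, upper]."""
    size = sum(p[k] for p in seg)
    if size < lower:
        return (size - lower) ** 2
    if size > upper:
        return (size - upper) ** 2
    return 0.0
```

Both functions are differentiable in the soft segmentation almost everywhere, which is the property the framework needs; either could be added to the pCE loss with its own weight.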

PyTorch and TensorFlow

The original implementation used for the published articles is in Caffe. We also release a PyTorch implementation (see the pytorch folder). A TensorFlow version is under development. We will also try other state-of-the-art network backbones with regularized losses and include them in this repository.