ZhengPeng7 / Sanet Keras

SANet-Keras

An unofficial implementation of SANet (Scale Aggregation Network) for crowd counting in Keras with the TensorFlow backend.

Paper: Scale Aggregation Network for Accurate and Efficient Crowd Counting (ECCV 2018).

Current results:

On ShanghaiTech Part B:

Still far from the performance reported in the original paper (MAE 8.4).

| MAE | MSE | MAPE | Mean DM Distance |
|---|---|---|---|
| 13.727 | 22.726 | 11.9 | 27.065 |
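For reference, the count metrics in the table can be computed from per-image predicted and ground-truth counts roughly as in the NumPy sketch below. It assumes that "MSE" follows the usual crowd-counting convention (the root of the mean squared count error) and that MAPE is given in percent; neither is confirmed by this repo. The function name `count_metrics` is hypothetical, and Mean DM Distance is left out because its exact definition is not given here.

```python
import numpy as np


def count_metrics(pred_counts, gt_counts):
    """Return (MAE, MSE, MAPE) for per-image crowd counts.

    Assumptions (not confirmed by this repo): "MSE" is the root of the
    mean squared count error, as is conventional in crowd-counting papers,
    and MAPE is reported in percent.
    """
    pred = np.asarray(pred_counts, dtype=np.float64)
    gt = np.asarray(gt_counts, dtype=np.float64)
    err = pred - gt
    mae = np.mean(np.abs(err))
    mse = np.sqrt(np.mean(err ** 2))          # conventionally an RMSE
    mape = np.mean(np.abs(err) / gt) * 100.0  # assumes every gt count > 0
    return mae, mse, mape


if __name__ == "__main__":
    # Two hypothetical images: predicted counts vs. ground-truth counts.
    print(count_metrics([10, 20], [8, 25]))
```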

Dataset:

- ShanghaiTech dataset: Dropbox or Baidu Disk.

Training Parameters:

- Loss = ssim_loss + L2

- Optimizer = Adam(lr=1e-4)

- Data augmentation: horizontal flipping.

- Patches: none. The whole image is fed to the network, and the output density map (DM) has the same shape.

- Instance normalization: there are no IN layers at present, because the network with IN layers is very hard to train and the IN layers did not improve the network in my experiments.

- Output zeros: the density map output may collapse to all zeros in over 95% of random initializations. I tried the initialization method from the original paper, but it did not work. In the past, when this happened, I simply restarted the kernel and re-ran the training. Now I train the different modules (1-5) separately for the first several epochs to get relatively reasonable weights, which greatly decreases the probability of the zero-output phenomenon. If you have any other questions, feel free to contact me.

- Weights: the best weights were obtained in the 251st epoch (of 300 epochs in total). Here are the loss records:

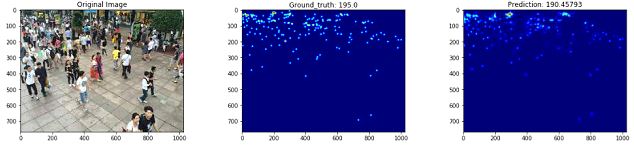
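Because the network maps a whole image to a density map of the same shape, the horizontal flip has to be applied to the image and its density map together so that they stay aligned. A minimal NumPy sketch, assuming images are `(H, W, C)` arrays, density maps are `(H, W)` arrays and a flip probability of 0.5; the function name `random_hflip` and the probability are hypothetical, not taken from this repo:

```python
import numpy as np


def random_hflip(image, density, rng, p=0.5):
    """Flip an (H, W, C) image and its (H, W) density map left-right together."""
    if rng.random() < p:
        # Reverse the width axis (axis 1) of both arrays so they stay aligned.
        image = image[:, ::-1]
        density = density[:, ::-1]
    return image, density


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    img = np.arange(12, dtype=np.float32).reshape(2, 3, 2)
    dm = np.array([[0.0, 0.2, 0.8], [0.0, 0.0, 1.0]], dtype=np.float32)
    flipped_img, flipped_dm = random_hflip(img, dm, rng, p=1.0)
    # Flipping moves density around but does not change the total count.
    print(np.isclose(flipped_dm.sum(), dm.sum()))
```

Since the count is the integral of the density map, a flip leaves the ground-truth count unchanged, which is why it is a safe augmentation for crowd counting.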

Prediction example:

Run:

- Download the dataset;

- Data generation: run `generate_datasets.ipynb`;

- Run `main.ipynb` to train the model and run the test.
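As a rough illustration of what density-map generation usually involves (not a copy of `generate_datasets.ipynb`), the sketch below places a normalized 2D Gaussian at each annotated head position, so that the map sums to the head count. The fixed `sigma`, the function name `density_map` and the `(x, y)` annotation format are assumptions; the notebook may use a different kernel or kernel size.

```python
import numpy as np


def density_map(points, height, width, sigma=4.0):
    """Build a (height, width) density map from (x, y) head annotations.

    Each head contributes a Gaussian normalized to sum to 1 over the image,
    so the map sums to the number of annotated heads. `sigma` is a
    hypothetical fixed kernel width, not a value taken from this repo.
    Cost is O(heads x pixels): fine for a sketch, slow for dense scenes.
    """
    ys, xs = np.mgrid[0:height, 0:width]
    dm = np.zeros((height, width), dtype=np.float64)
    for x, y in points:
        g = np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2.0 * sigma ** 2))
        dm += g / g.sum()  # renormalize so heads near the border still count as 1
    return dm


if __name__ == "__main__":
    heads = [(10.5, 12.0), (30.0, 5.0), (0.0, 0.0)]  # hypothetical annotations
    dm = density_map(heads, height=32, width=48)
    print(dm.shape, round(float(dm.sum()), 6))
```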

Abstract:

- Network = encoder + decoder; the model plot is here:

| Network | Encoder | Decoder |
|---|---|---|
| Composition | Scale aggregation module | conv2dTranspose |
| Usage | Extract multi-scale features | Generate a high-resolution density map |
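To make the encoder column concrete, here is a framework-agnostic NumPy sketch of one simplified scale aggregation module: parallel convolutions with different kernel sizes over the same input, concatenated along the channel axis so that the block sees several receptive-field sizes at once. The kernel sizes 1, 3, 5 and 7 are assumed to follow the SANet paper; the branch width, the ReLU, the random (untrained) weights and the function names `conv2d_same` and `scale_aggregation` are hypothetical and for illustration only. In Keras, each branch would typically be a `Conv2D(..., padding="same")` layer merged with `Concatenate()`, and the decoder would upsample with `Conv2DTranspose`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv2d_same(x, w, b):
    """Naive 'same'-padded 2D convolution (cross-correlation, as in Keras).

    x: (H, W, C_in), w: (k, k, C_in, C_out) with odd k, b: (C_out,).
    """
    pad = w.shape[0] // 2
    xp = np.pad(x, ((pad, pad), (pad, pad), (0, 0)))
    # windows[h, w, c, i, j] == xp[h + i, w + j, c]; shape (H, W, C_in, k, k)
    windows = sliding_window_view(xp, w.shape[:2], axis=(0, 1))
    return np.einsum("hwcij,ijco->hwo", windows, w) + b


def scale_aggregation(x, branch_channels, rng, kernel_sizes=(1, 3, 5, 7)):
    """Simplified scale aggregation module with random (untrained) weights.

    Each branch convolves the same input with a different kernel size;
    the ReLU outputs are concatenated along the channel axis.
    """
    c_in = x.shape[-1]
    outputs = []
    for k in kernel_sizes:
        w = 0.1 * rng.standard_normal((k, k, c_in, branch_channels))
        b = np.zeros(branch_channels)
        outputs.append(np.maximum(conv2d_same(x, w, b), 0.0))  # ReLU
    return np.concatenate(outputs, axis=-1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.standard_normal((16, 20, 3))  # hypothetical (H, W, C) feature map
    y = scale_aggregation(x, branch_channels=8, rng=rng)
    print(y.shape)  # → (16, 20, 32): spatial size kept, 4 branches x 8 channels
```

Because every branch uses "same" padding, the branches can be concatenated directly; downsampling in the encoder and upsampling in the decoder are handled by separate layers.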

Loss:
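Following the training parameters (Loss = ssim_loss + L2), a combined loss on 2D density maps can be sketched in NumPy as below. This sketch assumes an SSIM computed over uniform `window x window` local windows with the standard constants K1 = 0.01 and K2 = 0.03, an unweighted sum of the two terms, and the hypothetical names `ssim_map` and `combined_loss`; the repo's Keras loss may use a Gaussian window, a different `data_range` or a weighting between the terms.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _local_mean(x, window):
    # Mean over every window x window patch ("valid" positions only).
    return sliding_window_view(x, (window, window)).mean(axis=(-2, -1))


def ssim_map(pred, gt, window=7, data_range=1.0):
    """Local SSIM between two 2D density maps using uniform windows."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_p, mu_g = _local_mean(pred, window), _local_mean(gt, window)
    var_p = _local_mean(pred * pred, window) - mu_p ** 2
    var_g = _local_mean(gt * gt, window) - mu_g ** 2
    cov = _local_mean(pred * gt, window) - mu_p * mu_g
    num = (2 * mu_p * mu_g + c1) * (2 * cov + c2)
    den = (mu_p ** 2 + mu_g ** 2 + c1) * (var_p + var_g + c2)
    return num / den


def combined_loss(pred, gt, window=7, data_range=1.0):
    """L2 term (mean squared error) plus SSIM loss (1 - mean local SSIM)."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    l2 = np.mean((pred - gt) ** 2)
    ssim_loss = 1.0 - ssim_map(pred, gt, window, data_range).mean()
    return l2 + ssim_loss
```

The L2 term matches per-pixel density values, while the SSIM term penalizes differences in local structure (mean, contrast and correlation within each window), which a pixel-wise loss alone does not capture.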

Normalization layer:

- Eases the training process;

- Reduces the 'statistic shift problem'.
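Instance normalization standardizes each channel of each sample over its spatial dimensions only, so its statistics do not depend on the rest of the batch. A minimal NumPy sketch of the forward pass for `(N, H, W, C)` tensors (channels-last, the Keras default); `gamma`, `beta` and `eps` are the usual learnable per-channel scale, shift and numerical stabilizer, and the function name `instance_norm` is hypothetical:

```python
import numpy as np


def instance_norm(x, gamma=None, beta=None, eps=1e-5):
    """Normalize each (sample, channel) slice of an (N, H, W, C) array over H and W."""
    mean = x.mean(axis=(1, 2), keepdims=True)  # shape (N, 1, 1, C)
    var = x.var(axis=(1, 2), keepdims=True)
    y = (x - mean) / np.sqrt(var + eps)
    if gamma is not None:
        y = y * gamma  # per-channel scale, broadcast over (N, H, W)
    if beta is not None:
        y = y + beta   # per-channel shift
    return y
```

Unlike batch normalization, nothing here is averaged across samples, so the same statistics are computed at training and test time regardless of batch size.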