miztiik / Serverless S3 To Elasticsearch Ingester

Licence: MIT

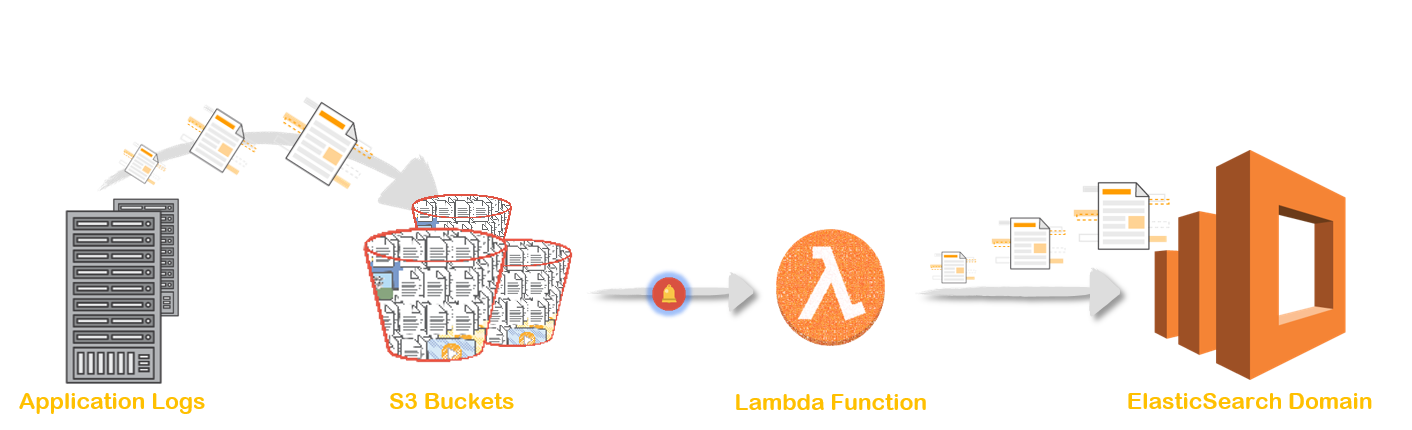

AWS Lambda function to ingest application logs from S3 Buckets into ElasticSearch for indexing


Serverless S3 To Elasticsearch Ingester

We can load streaming data (say, application logs) into an Amazon Elasticsearch Service domain from many different sources. Native services like Kinesis and CloudWatch have built-in support for pushing data to ES, but services like S3 and DynamoDB need a Lambda function to ingest data into ES.
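As a rough illustration of what the Lambda side has to do with a log file, the sketch below turns raw log lines into a request body in the Elasticsearch `_bulk` format (one action line followed by one document line per entry). This is not the code from the repo: the function name `build_bulk_payload`, the index name `app-logs`, and the `message` field are assumptions made for the example, and omitting a document type assumes a 7.x-style domain.

```python
import json
from typing import Iterable


def build_bulk_payload(lines: Iterable[str], index_name: str) -> str:
    """Turn raw log lines into an Elasticsearch _bulk request body (NDJSON)."""
    parts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines in the log file
        # Action line: index the next document into index_name (hypothetical name).
        parts.append(json.dumps({"index": {"_index": index_name}}))
        # Document line: the "message" field is an assumption for this sketch.
        parts.append(json.dumps({"message": line}))
    if not parts:
        return ""
    # The bulk format expects every line, including the last, to end with a newline.
    return "\n".join(parts) + "\n"


payload = build_bulk_payload(["GET /index.html 200", "", "POST /login 302"], "app-logs")
print(payload)
```

A body like this would typically be sent to the domain's `_bulk` endpoint with a signed request; the signing and HTTP call are left out here so the sketch stays dependency-free.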

Follow this article on YouTube

Prerequisites

- S3 Bucket - BucketName: s3-log-dest

  - You will have to create your own bucket and use that name in the instructions

- Amazon Elasticsearch Domain (Get help here)

- Amazon Linux with AWS CLI profile configured (S3 Full Access required)

- Create IAM Role - s3-to-es-ingestor-bot (Get help here)

  - Attach the following managed permissions - AWSLambdaExecute
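For the IAM role, the sketch below shows the pieces such a role usually needs: a trust policy that lets the Lambda service assume it, plus the ARN of the AWSLambdaExecute managed policy. It only builds and prints the JSON; the variable names are assumptions for illustration, and creating the role itself (in the console, CLI, or an SDK) is left out.

```python
import json

# Role name taken from the prerequisites above.
ROLE_NAME = "s3-to-es-ingestor-bot"

# AWS managed policy named in the prerequisites.
MANAGED_POLICY_ARN = "arn:aws:iam::aws:policy/AWSLambdaExecute"

# Trust policy allowing the Lambda service to assume the role.
LAMBDA_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

trust_policy_json = json.dumps(LAMBDA_TRUST_POLICY, indent=2)
print(f"Role: {ROLE_NAME}")
print(f"Attach: {MANAGED_POLICY_ARN}")
print(trust_policy_json)
```

If the role is created programmatically, this JSON string is what would go in as the role's assume-role policy document, with the managed policy attached afterwards.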

Creating the Lambda Deployment Package

Log in to the Linux machine and execute the commands below:

# Install dependencies

yum -y install python3-pip zip

pip3 install virtualenv

# Prepare the log ingestor virtual environment

mkdir -p /var/s3-to-es && cd /var/s3-to-es

virtualenv /var/s3-to-es

cd /var/s3-to-es && source bin/activate

pip3 install requests_aws4auth -t .

pip3 install requests -t .

pip3 freeze > requirements.txt

# Copy the ingester code (s3-to-es.py) from the repo into this directory

# Update your ES endpoint (not the Kibana URL) on line 27 of s3-to-es.py

# Set the file permission to execute mode

chmod 754 s3-to-es.py

# Package the lambda runtime

zip -r /var/s3-to-es.zip *

# Send the package to S3 Bucket

# aws s3 cp /var/s3-to-es.zip s3://YOUR-BUCKET-NAME/log-ingester/

aws s3 cp /var/s3-to-es.zip s3://s3-log-dest/log-ingester/
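For readers who prefer to build the package from Python instead of the `zip` command, the packaging step can be sketched with the standard library as below. This is an illustrative equivalent of `zip -r`, not part of the repo; the helper name `zip_directory` is an assumption. As with the shell command, files are stored relative to the source directory so that `s3-to-es.py` ends up at the root of the archive, which is where Lambda looks for the handler module.

```python
import os
import zipfile


def zip_directory(src_dir: str, zip_path: str) -> list:
    """Recursively add every file under src_dir to zip_path; return the stored names."""
    names = []
    zip_abs = os.path.abspath(zip_path)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(src_dir):
            for name in sorted(files):
                full = os.path.join(root, name)
                if os.path.abspath(full) == zip_abs:
                    continue  # never add the archive to itself
                # Store paths relative to src_dir so the handler sits at the zip root.
                arcname = os.path.relpath(full, src_dir)
                zf.write(full, arcname)
                names.append(arcname)
    return names
```

With the paths used above, a call would look like `zip_directory("/var/s3-to-es", "/var/s3-to-es.zip")`.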

Configure Lambda Function

- For Handler, type s3-to-es.lambda_handler. This setting tells Lambda the file (s3-to-es.py) and method (lambda_handler) that it should execute after a trigger.

- For Code entry type, choose "Choose a file from Amazon S3", and update the URL in the field below.

- Choose Save.

- If you are running ES inside a VPC, make sure your Lambda runs in the same VPC and can reach your ES domain. Otherwise, Lambda cannot ingest data into ES.

- Set the resource and time limits based on the size of your log files (e.g. ~1 minute).

- Save
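To make the handler setting concrete, here is a minimal sketch of the shape a `lambda_handler` for S3 events could take. It is not the repo's `s3-to-es.py`: the helper `extract_s3_objects` and the return value are assumptions, and the actual download and ES ingestion are replaced by a print. It relies only on the S3 event layout, where each record carries the bucket name and a URL-encoded object key.

```python
from urllib.parse import unquote_plus


def extract_s3_objects(event: dict) -> list:
    """Return (bucket, key) pairs for every S3 record in a Lambda event."""
    objects = []
    for record in event.get("Records", []):
        s3_info = record.get("s3")
        if not s3_info:
            continue  # ignore records that are not S3 notifications
        bucket = s3_info["bucket"]["name"]
        # Object keys arrive URL-encoded in the event (e.g. spaces as '+').
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    """Entry point named by the Handler setting (file.method)."""
    objects = extract_s3_objects(event)
    for bucket, key in objects:
        # Placeholder: a real ingester would fetch the object and send it to ES here.
        print(f"Would ingest s3://{bucket}/{key}")
    return {"processed": len(objects)}


# Hand-built sample event for local experimentation.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "s3-log-dest"}, "object": {"key": "logs/app+server.log"}}}
    ]
}
result = lambda_handler(sample_event, None)
```

Calling the handler locally with a hand-built event like `sample_event` is a cheap way to check the parsing before uploading the package.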

Setup S3 Event Triggers to Lambda Function

We want the code to execute whenever a log file arrives in an S3 bucket:

- Choose S3.

- Choose your bucket.

- For Event type, choose PUT.

- For Prefix, type logs/.

- For Filter pattern, type .txt or .log.

- Select Enable trigger.

- Choose Add.
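The effect of the prefix and filter settings can be sketched as a simple key check: only objects whose key starts with the prefix and ends with one of the suffixes fire the function. The function name `trigger_matches` is an assumption for illustration, and this only mimics the matching rule locally; it does not configure anything in AWS.

```python
def trigger_matches(key: str, prefix: str = "logs/", suffixes=(".txt", ".log")) -> bool:
    """Return True if an object key would pass the prefix and suffix filters."""
    return key.startswith(prefix) and key.endswith(tuple(suffixes))


# A few sample keys to show which uploads would invoke the function.
for sample_key in ["logs/app.log", "logs/2019/app.txt", "data/app.log", "logs/app.json"]:
    print(sample_key, trigger_matches(sample_key))
```

Note that a single trigger generally takes one suffix, so covering both .txt and .log may mean adding the trigger twice, once per extension.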

Test the function

- Upload an object to S3

- Log in to the Kibana dashboard or the ES Head plugin to check the newly created index and logs
