wy1iu / Spherenet

Deep Hyperspherical Learning

By Weiyang Liu, Yan-Ming Zhang, Xingguo Li, Zhiding Yu, Bo Dai, Tuo Zhao, Le Song

License

SphereNet is released under the MIT License (refer to the LICENSE file for details).

Updates

- [x] SphereNet: a neural network that learns on hyperspheres

- [x] SphereResNet: an adaptation of SphereConv to residual networks

Introduction

The repository contains an example TensorFlow implementation of SphereNets. SphereNets were introduced in the NIPS 2017 paper "Deep Hyperspherical Learning" (arXiv). SphereNets converge faster and more stably than their CNN counterparts, while yielding comparable or even better classification accuracy.

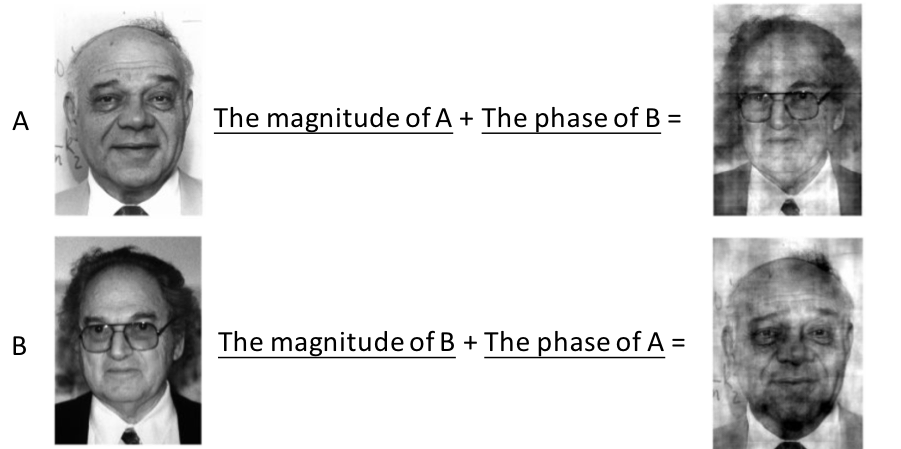

Hyperspherical learning is inspired by an interesting observation about the 2D Fourier transform. From the image below, we can see that magnitude information is not crucial for recognizing the identity, whereas phase information is very important for recognition. By dropping the magnitude information, SphereNets reduce the learning space and therefore converge faster. Hyperspherical learning provides a new framework for improving convolutional neural networks.

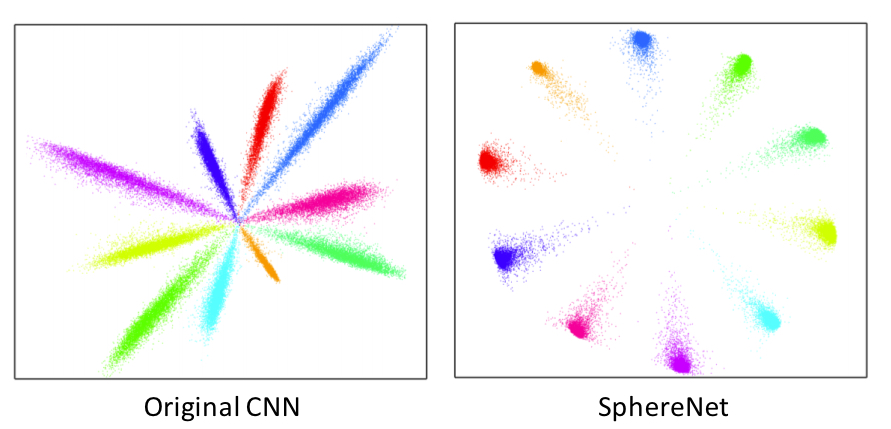

The features learned by SphereNets are also very interesting. The 2D features that SphereNets learn on MNIST are more compact and have a larger margin between classes. As the feature visualization shows, the local behavior of convolutions can lead to dramatically different final features, even when they are supervised by the same standard softmax loss. Hyperspherical learning provides a new perspective on convolutions and deep feature learning.

In addition, hyperspherical learning leads to a well-performing normalization technique, SphereNorm. In our implementation, SphereNorm can essentially be viewed as the SphereConv operator.

Citation

If you find our work useful in your research, please consider citing:

    @inproceedings{liu2017deep,
      title={Deep Hyperspherical Learning},
      author={Liu, Weiyang and Zhang, Yan-Ming and Li, Xingguo and Yu, Zhiding and Dai, Bo and Zhao, Tuo and Song, Le},
      booktitle={Advances in Neural Information Processing Systems},
      pages={3953--3963},
      year={2017}
    }

Requirements

- Python 2.7

- TensorFlow (tested on version 1.01)

- numpy

Usage

Part 1: Setup

- Clone the repository and download the training set.

      git clone https://github.com/wy1iu/SphereNet.git
      cd SphereNet
      ./dataset_setup.sh

Part 2: Train Baseline/SphereNets

- We use '$SPHERENET_ROOT' to denote the directory path of this repository.

- To train the baseline model, open $SPHERENET_ROOT/baseline/train_baseline.py and assign an available GPU. The default hyperparameters are exactly the same as for SphereNets.

      cd $SPHERENET_ROOT/baseline
      python train_baseline.py

- To train the SphereNet, open $SPHERENET_ROOT/train_spherenet.py and assign an available GPU.

      cd $SPHERENET_ROOT
      python train_spherenet.py
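The steps above ask you to assign a GPU by editing the training script. How the scripts select a device is not shown here, so the following is only a sketch of one common approach: restricting the visible devices through the `CUDA_VISIBLE_DEVICES` environment variable before TensorFlow is imported. The helper name `select_gpu` is hypothetical and does not come from this repository.

```python
import os


def select_gpu(gpu_id):
    """Expose a single GPU to CUDA-based libraries that are loaded afterwards.

    Hypothetical helper for illustration; the training scripts in this
    repository may assign the GPU in a different way.
    """
    os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu_id)
    return os.environ["CUDA_VISIBLE_DEVICES"]


# Call this before `import tensorflow`, otherwise the setting may be ignored.
select_gpu(0)
```

The same effect can be had from the shell by prefixing the training command with `CUDA_VISIBLE_DEVICES=0`.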

Part 3: Train Baseline/SphereResNets

- We use '$SPHERENET_ROOT' to denote the directory path of this repository.

- To train the baseline ResNet-32 model, open $SPHERENET_ROOT/sphere_resnet/baseline/train_baseline.py and assign an available GPU. The default hyperparameters are exactly the same as for SphereNets.

      cd $SPHERENET_ROOT/sphere_resnet/baseline
      python train_baseline.py

- To train the SphereResNet-32 (the default setting is Linear SphereConv with the standard softmax loss), open $SPHERENET_ROOT/sphere_resnet/train_sphere_resnet.py and assign an available GPU.

      cd $SPHERENET_ROOT/sphere_resnet
      python train_sphere_resnet.py

Configuration

The default setting of SphereNet is Cosine SphereConv + Standard Softmax Loss. To change the type of SphereConv, open spherenet.py and change the norm variable.

- If norm is set to none, the network uses the original convolution and becomes a standard CNN.

- If norm is set to linear, the SphereNet uses linear SphereConv.

- If norm is set to cosine, the SphereNet uses cosine SphereConv.

- If norm is set to sigmoid, the SphereNet uses sigmoid SphereConv.

- If norm is set to lr_sigmoid, the SphereNet uses learnable sigmoid SphereConv.
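To make the difference between these settings concrete, here is a minimal sketch of what each option computes for a single filter `w` and a single input patch `x`. It assumes the angular functions as defined in the paper: a linear map from the angle to [-1, 1], the cosine of the angle, and a rescaled sigmoid with curvature `k`. It is not the code in spherenet.py; the function names and the value `k=0.3` are assumptions made for illustration. The lr_sigmoid option would use the same sigmoid form with `k` learned during training.

```python
import math


def angle(w, x):
    """Angle in [0, pi] between two non-zero vectors."""
    dot = sum(wi * xi for wi, xi in zip(w, x))
    norm_w = math.sqrt(sum(wi * wi for wi in w))
    norm_x = math.sqrt(sum(xi * xi for xi in x))
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / (norm_w * norm_x)))
    return math.acos(cos_theta)


def sphere_conv_response(w, x, norm="cosine", k=0.3):
    """Response of one filter on one patch under the given `norm` setting."""
    if norm == "none":
        # Original convolution: plain inner product.
        return sum(wi * xi for wi, xi in zip(w, x))
    theta = angle(w, x)
    if norm == "linear":
        # Maps theta = 0 to 1 and theta = pi to -1.
        return -2.0 / math.pi * theta + 1.0
    if norm == "cosine":
        return math.cos(theta)
    if norm == "sigmoid":
        c = math.exp(-math.pi / (2.0 * k))
        e = math.exp(theta / k - math.pi / (2.0 * k))
        return (1.0 + c) / (1.0 - c) * (1.0 - e) / (1.0 + e)
    raise ValueError("unknown norm: %s" % norm)
```

All three SphereConv variants depend only on the angle between `w` and `x`, so rescaling either vector leaves the response unchanged, whereas the `none` setting scales with both magnitudes.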

The w_norm variable can also be changed similarly in order to use the weight-normalized softmax loss (combined with different SphereConv). By setting w_norm to none, we will use the standard softmax loss.
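As a rough illustration of the weight-normalized softmax loss, the sketch below rescales each class weight vector to unit length before computing the logits, so that the logit of class j becomes ||x|| cos(theta_j), and then applies the usual softmax cross-entropy. This covers only the cosine case under that assumption; the other w_norm options would replace the cosine with a different angular function. The function names are hypothetical and are not taken from the repository.

```python
import math


def w_norm_logits(weights, x):
    """Logits computed with every class weight vector rescaled to unit norm."""
    logits = []
    for w in weights:
        norm_w = math.sqrt(sum(wi * wi for wi in w))
        logits.append(sum(wi * xi for wi, xi in zip(w, x)) / norm_w)
    return logits


def softmax_cross_entropy(logits, label):
    """Standard softmax cross-entropy for one sample, computed stably."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum - logits[label]


# Two classes, one 2D feature vector; the scale of each weight row is irrelevant.
logits = w_norm_logits([[2.0, 0.0], [0.0, 5.0]], [3.0, 4.0])
loss = softmax_cross_entropy(logits, label=1)
```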

Some examples of setting these two variables are provided in the examples/ folder.

Results

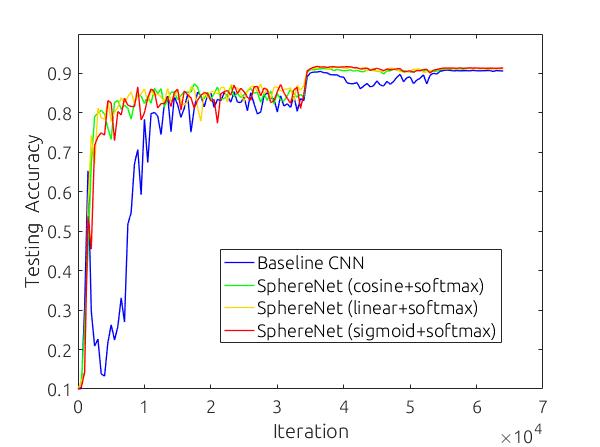

Part 1: Convergence

The convergence curves for baseline CNN and several types of SphereNets are given as follows.

Part 2: Best testing accuracy on CIFAR-10 (SphereNet-9)

- Baseline (standard CNN with standard softmax loss): 90.86%

- SphereNet with cosine SphereConv and standard softmax loss: 91.31%

- SphereNet with linear SphereConv and standard softmax loss: 91.65%

- SphereNet with sigmoid SphereConv and standard softmax loss: 91.81%

- SphereNet with learnable sigmoid SphereConv and standard softmax loss: 91.66%

- SphereNet with cosine SphereConv and weight-normalized softmax loss: 91.44%

Part 3: Best testing accuracy on CIFAR-10+ (SphereResNet-32)

- Baseline (standard ResNet-32 with standard softmax loss): 93.09%

- SphereResNet-32 with linear SphereConv and standard softmax loss: 94.68%

Part 4: Training log (SphereNet-9)

- Baseline: here.

- SphereNet with cosine SphereConv and standard softmax loss: here.

- SphereNet with linear SphereConv and standard softmax loss: here.

- SphereNet with sigmoid SphereConv and standard softmax loss: here.

- SphereNet with learnable sigmoid SphereConv and standard softmax loss: here.

- SphereNet with cosine SphereConv and weight-normalized softmax loss: here.

Part 5: Training log (SphereResNet-32)

Notes

- Empirically, SphereNets gain more accuracy as the number of filters grows. If the number of filters is very small, SphereNets may yield slightly worse accuracy but can still converge much faster.

- SphereConv may be useful for RNNs and deep Q-learning, where better convergence can help.

- By adding rescaling factors to SphereConv and making them learnable, so that SphereNorm can degrade to the original convolution, we obtain a new normalization technique, SphereNorm. SphereNorm does not conflict with BatchNorm and can be used either with or without it.

- We also developed an improved SphereNet in our latest CVPR 2018 paper, which works better than this version. The paper and code will be released soon.

Third-party re-implementation

- TensorFlow: code by unixpickle.