mli0603 / Stereo Transformer


STereo TRansformer (STTR)

This is the official repo for our work Revisiting Stereo Depth Estimation From a Sequence-to-Sequence Perspective with Transformers.

Fine-tuned result on a street scene:

Generalization to the medical domain when trained only on synthetic data:

If you find our work relevant, please cite:

@article{li2020revisiting,
  title={Revisiting Stereo Depth Estimation From a Sequence-to-Sequence Perspective with Transformers},
  author={Li, Zhaoshuo and Liu, Xingtong and Creighton, Francis X and Taylor, Russell H and Unberath, Mathias},
  journal={arXiv preprint arXiv:2011.02910},
  year={2020}
}

Update

- 2021.01.13: 🔥🔥🔥 STTR-light 🔥🔥🔥 is released! The network is now ~4x faster and ~3x more memory efficient, with performance comparable to the original. This also enables inference/training on higher-resolution images. The benchmark results can be found in Expected Result. Use the `sttr-light` branch for the new model.
- 2020.11.05: First code and arXiv release.

Table of Contents

- Introduction

- Dependencies

- Pre-trained Models

- Folder Structure

- Usage

- Expected Result

- Common Q&A

- License

- Contributing

- Acknowledgement

Introduction

Benefits of STTR

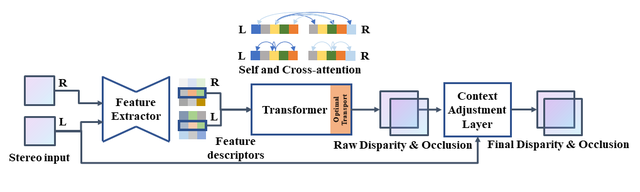

STereo TRansformer (STTR) revisits stereo depth estimation from a sequence-to-sequence perspective. The network combines a conventional CNN feature extractor with a Transformer, which captures long-range relationships. STTR relaxes the limitations of prior stereo depth estimation networks in three aspects:

- The disparity range naturally scales with image resolution, so there is no need to set a range manually.

- Occlusion is handled explicitly.

- A uniqueness constraint is imposed.
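To make the three points above concrete, here is a minimal NumPy sketch of matching a single rectified scanline. It assumes per-pixel feature vectors for one left row and one right row, and it uses entropy-regularized optimal transport (Sinkhorn iterations with an extra "dustbin" row and column) as one way to softly impose uniqueness and let pixels opt out as occluded. The function names, the temperature, and the dustbin score are illustrative assumptions, not the repo's implementation. Because every right pixel at or left of `x` is a candidate, the searchable disparity range equals the row width instead of a preset maximum.

```python
import numpy as np


def log_sinkhorn(scores, dustbin_score=0.0, n_iters=200):
    """Entropy-regularized optimal transport with an extra "dustbin" row and column.

    scores: (H, W) log-domain matching scores between H left and W right pixels.
    Every real pixel carries one unit of mass; each dustbin absorbs whatever is
    left unmatched, which lets a pixel opt out of matching (e.g. occlusion).
    Returns the (H + 1, W + 1) transport plan.
    """
    H, W = scores.shape
    aug = np.full((H + 1, W + 1), dustbin_score, dtype=float)
    aug[:H, :W] = scores
    log_a = np.log(np.r_[np.ones(H), W])  # row marginals: left pixels + dustbin
    log_b = np.log(np.r_[np.ones(W), H])  # column marginals: right pixels + dustbin
    log_u = np.zeros(H + 1)
    log_v = np.zeros(W + 1)
    for _ in range(n_iters):
        # Alternately rescale rows and columns so the plan matches both marginals.
        log_u = log_a - np.logaddexp.reduce(aug + log_v[None, :], axis=1)
        log_v = log_b - np.logaddexp.reduce(aug + log_u[:, None], axis=0)
    return np.exp(aug + log_u[:, None] + log_v[None, :])


def match_scanline(feat_left, feat_right, temperature=0.1):
    """Match one rectified row; returns (disparity, occluded), each of shape (W,).

    feat_left, feat_right: (W, C) per-pixel features of the same row.
    """
    W = feat_left.shape[0]
    x = np.arange(W)
    scores = feat_left @ feat_right.T / temperature
    # Left pixel x can only match right pixel x' <= x (non-negative disparity),
    # so the candidate set, and hence the disparity range, grows with the width.
    scores = np.where(x[None, :] > x[:, None], -1e9, scores)
    plan = log_sinkhorn(scores)
    match = plan[:W].argmax(axis=1)  # index W is the dustbin column
    occluded = match == W
    disparity = np.where(occluded, 0, x - match)  # occluded pixels get disparity 0
    return disparity, occluded
```

With a right row that is the left row shifted by 3 px, the three left-border pixels have no partner and fall into the dustbin, while every other pixel is assigned disparity 3. The dustbin is what keeps the uniqueness constraint satisfiable: without it, the border pixels would be forced to steal a match from another pixel.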

STTR performs comparably to prior work with refinement on Scene Flow and KITTI 2015. STTR also generalizes to KITTI 2015, Middlebury 2014 and SCARED when trained only on synthetic data.

Working Theory

Attention

Two types of attention mechanisms are used: self-attention and cross-attention. Self-attention uses context within the same image, while cross-attention uses context across the two images. The attention shrinks from global context to local context as the layers go deeper. In a large textureless area, attention tends to keep attending to dominant features such as edges, which helps STTR resolve ambiguity.
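As a rough illustration of the two attention types, the NumPy sketch below uses plain single-head scaled dot-product attention with residual connections. Learned projections, multiple heads and LayerNorm are omitted for brevity, and the self-then-cross ordering in `attention_layer` (a hypothetical helper) is only one possible arrangement, not necessarily the repo's. Self-attention takes queries, keys and values from the same image row; cross-attention takes queries from one image and keys/values from the other.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(query, key, value):
    """Single-head scaled dot-product attention.

    query: (N, C); key, value: (M, C). Returns (output (N, C), weights (N, M)).
    """
    scale = 1.0 / np.sqrt(query.shape[-1])
    weights = softmax(query @ key.T * scale, axis=-1)
    return weights @ value, weights


def attention_layer(left, right):
    """Self-attention within each image, then cross-attention between them.

    left, right: (W, C) features of the same epipolar line in each image.
    """
    left = left + attention(left, left, left)[0]        # context within the left image
    right = right + attention(right, right, right)[0]   # context within the right image
    left = left + attention(left, right, right)[0]      # left queries attend to the right image
    right = right + attention(right, left, left)[0]     # right queries attend to the left image
    return left, right
```

Each row of `weights` is a probability distribution over the attended pixels, which is what lets a pixel in a textureless region borrow evidence from a distant edge.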

Relative Positional Encoding

We find that attention based only on image content is not enough. Therefore, we opt for relative positional encoding to provide positional information. This allows STTR to use the relative distance from a featureless pixel to a dominant pixel (such as an edge) to resolve ambiguity. In the following example, STTR starts to texture the center of the table using relative distance, so stripes parallel to the edges start to show.
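A simple way to see why relative position helps is to add a bias indexed by the offset `i - j` to every attention logit. The sketch below is an assumption-laden illustration of that idea (a relative-position bias table), not STTR's exact encoding; `relative_bias`, `attention_with_relative_position` and the bias `table` are hypothetical names. Because the bias depends only on the offset, pixels in a textureless row can still attend differently from one another.

```python
import numpy as np


def relative_bias(width, table):
    """Look up a bias for every (query i, key j) pair from the offset i - j.

    table: shape (2 * width - 1,); entry k holds the bias for offset k - (width - 1).
    """
    i = np.arange(width)[:, None]
    j = np.arange(width)[None, :]
    return table[i - j + width - 1]


def attention_with_relative_position(query, key, value, table):
    """Scaled dot-product attention whose logits also depend on i - j.

    Assumes query and key cover the same row, so both have `width` entries.
    """
    width, channels = query.shape
    logits = query @ key.T / np.sqrt(channels) + relative_bias(width, table)
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ value, weights


# A textureless row: every pixel has identical features.
W = 5
feats = np.ones((W, 3))
table = np.zeros(2 * W - 1)
table[W - 1] = 10.0  # strongly favor offset 0
_, weights = attention_with_relative_position(feats, feats, feats, table)
```

Without the bias term the content logits of this uniform row are all equal, so every pixel would receive identical, uniform attention weights; with the bias, each pixel's attention peaks at its own position.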

Dependencies

We recommend the following steps to set up your environment:

- Create your Python virtual environment by

  `conda create --name sttr python=3.6  # create a virtual environment called "sttr" with Python 3.6`

  (Any Python 3 version >= 3.6 should work.)

- Install PyTorch 1.5.1: please follow the link here.
  - Other versions of PyTorch may have problems during evaluation; see Issue #8 for more details.

- Other third-party packages: you can use pip to install the dependencies by

  `pip install -r requirements.txt`

- (Optional) Install Nvidia apex: we use apex for mixed precision training to accelerate training. To install, please follow the instructions here.
  - You can remove the apex dependency if
    - you have more powerful GPUs, or
    - you don't need to run the training script.
  - Note: We tried to use the native mixed precision training from the official PyTorch implementation. However, it currently does not seem to support gradient checkpointing. We will post an update if this is resolved.
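To confirm that an environment matches the steps above, a small check script can help. This is a hypothetical helper, not part of the repo: it compares version strings against the recommended Python >= 3.6 and PyTorch 1.5.1, and reports whether the optional apex package is importable.

```python
import importlib.util
import re
import sys


def version_tuple(version):
    """'1.5.1+cu101' -> (1, 5, 1); a local build suffix after '+' is ignored."""
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)  # keep leading digits, e.g. '0a0' -> 0
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check_environment(torch_version=None):
    """Return a list of warnings; an empty list means everything looks fine."""
    warnings = []
    if sys.version_info < (3, 6):
        warnings.append("Python >= 3.6 is recommended")
    if torch_version is None:
        try:
            import torch
            torch_version = torch.__version__
        except ImportError:
            warnings.append("PyTorch is not installed")
    if torch_version is not None and version_tuple(torch_version)[:3] != (1, 5, 1):
        warnings.append(f"PyTorch {torch_version} found; 1.5.1 is recommended (see Issue #8)")
    if importlib.util.find_spec("apex") is None:
        warnings.append("apex not found (only needed for mixed precision training)")
    return warnings


if __name__ == "__main__":
    for message in check_environment():
        print("WARNING:", message)
```

Passing `torch_version` explicitly makes the function easy to test without PyTorch installed; when it is omitted, the installed `torch.__version__` is used.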

Pre-trained Models

You can download the pretrained model from the following links:

| Models | Link |
|---|---|
| sceneflow_pretrained_model.pth.tar | Download link |
| kitti_finetuned_model.pth.tar | Download link |
| 🔥sttr_light_sceneflow_pretrained_model.pth.tar🔥 | Download link |

Folder Structure

Code Structure

stereo-transformer
    |_ dataset (dataloader)
    |_ module (network modules, including loss)
    |_ utilities (training, evaluation, inference, logger etc.)

Data Structure

Please see the `sample_data` folder for details. We keep the original folder structure from each dataset's official site. If you change the structure, make sure to modify the dataloader accordingly.

- Note: We only provide one sample of each dataset to run the code. We do not own the copyright of, or claim credit for, any of the data.

KITTI 2015

KITTI_2015
    |_ training
        |_ disp_occ_0 (disparity including occluded region)
        |_ image_2 (left image)
        |_ image_3 (right image)
    |_ testing
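For reference, a hypothetical helper that pairs files in the KITTI 2015 layout above could look like the following. It assumes the standard KITTI 2015 naming, where the frame with ground truth is `<id>_10.png` in `image_2`, `image_3` and `disp_occ_0`, and the standard disparity encoding (16-bit PNG, disparity = value / 256, 0 = no ground truth). The function names and return formats are illustrative and not the repo's dataloader; image decoding itself is left to whatever PNG library you use.

```python
from pathlib import Path

import numpy as np


def list_kitti_samples(root):
    """Return (left, right, disparity) path triplets found under KITTI_2015/training."""
    training = Path(root) / "training"
    samples = []
    for left in sorted((training / "image_2").glob("*_10.png")):
        right = training / "image_3" / left.name
        disparity = training / "disp_occ_0" / left.name
        if right.exists() and disparity.exists():  # skip incomplete samples
            samples.append((left, right, disparity))
    return samples


def decode_kitti_disparity(raw):
    """Convert a decoded 16-bit KITTI disparity PNG to (disparity in px, valid mask)."""
    raw = np.asarray(raw, dtype=np.uint16)
    disparity = raw.astype(np.float32) / 256.0
    valid = raw > 0  # 0 marks pixels without ground truth
    return disparity, valid
```

Only `_10` frames are listed because the `_11` frames in `image_2`/`image_3` have no matching disparity map.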

MIDDLEBURY_2014

MIDDLEBURY_2014
    |_ trainingQ
        |_ Motorcycle (scene name)
            |_ disp0GT.pfm (left disparity)
            |_ disp1GT.pfm (right disparity)
            |_ im0.png (left image)
            |_ im1.png (right image)
            |_ mask0nocc.png (left occlusion)
            |_ mask1nocc.png (right occlusion)
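The Middlebury ground truth (`disp0GT.pfm`, `disp1GT.pfm`) is stored in the PFM format. A minimal standalone reader, sketched here rather than taken from the repo's loader, parses the three-line text header (`Pf` for one channel or `PF` for three, then width and height, then a scale whose sign gives the byte order) and flips the rows, since PFM stores scanlines bottom to top. The helper `middlebury_disparity` is hypothetical and assumes, as in the Middlebury 2014 release, that unknown disparities are stored as infinity.

```python
import numpy as np


def read_pfm(path):
    """Read a PFM image and return (data as float32, scale), rows ordered top to bottom."""
    with open(path, "rb") as f:
        header = f.readline().strip()
        if header == b"Pf":
            channels = 1
        elif header == b"PF":
            channels = 3
        else:
            raise ValueError(f"not a PFM file: {header!r}")
        width, height = (int(v) for v in f.readline().split())
        scale = float(f.readline().strip())
        dtype = "<f4" if scale < 0 else ">f4"  # negative scale means little-endian
        count = width * height * channels
        data = np.frombuffer(f.read(4 * count), dtype=dtype, count=count)
    shape = (height, width) if channels == 1 else (height, width, 3)
    data = np.flipud(data.reshape(shape)).astype(np.float32)  # bottom row is stored first
    return data, abs(scale)


def middlebury_disparity(path):
    """Return (disparity with unknown pixels set to 0, mask of known pixels)."""
    disparity, _ = read_pfm(path)
    valid = np.isfinite(disparity)  # assumption: unknown disparity is stored as inf
    return np.where(valid, disparity, 0.0), valid
```

The `astype` call also converts big-endian files to the machine's native byte order and returns a writable copy.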

SCARED

SCARED
    |_ train
        |_ disparity (disparity including occluded region)
        |_ left (left image)
        |_ occlusion (left occlusion)
        |_ right (right image)

Usage

Colab/Notebook Example

If you don't have a GPU, you can use Google Colab:

If you have a GPU and want to run locally:

- Download the pretrained model using the links in Pre-trained Models.
  - Note: The pretrained model is assumed to be in the `stereo-transformer` folder.

- An example of how to run inference is given in the file `inference_example.ipynb`.

Terminal Example

- Download the pretrained model using the links in Pre-trained Models.

- Run pretraining by `sh scripts/pretrain.sh`
  - Note: please set the `--dataset_directory` argument in the `.sh` file to where the Scene Flow data is stored, i.e. replace `PATH_TO_SCENEFLOW`.

- Run fine-tuning on KITTI by `sh scripts/kitti_finetune.sh`
  - Note: please set the `--dataset_directory` argument in the `.sh` file to where the KITTI data is stored, i.e. replace `PATH_TO_KITTI`.
  - Note: the pretrained model is assumed to be in the `stereo-transformer` folder.

- Run evaluation on the provided KITTI example by `sh scripts/kitti_toy_eval.sh`
  - Note: the pretrained model is assumed to be in the `stereo-transformer` folder.

Expected Result

The results of STTR may vary slightly between trials, but they should be approximately the same as in the tables below.

Expected results of STTR (sceneflow_pretrained_model.pth.tar) and STTR-light (sttr_light_sceneflow_pretrained_model.pth.tar).

| | Sceneflow | | Sceneflow (disp<192) | |
|---|---|---|---|---|
| | 3px Error | EPE | 3px Error | EPE |
| PSMNet | 3.31 | 1.25 | 2.87 | 0.95 |
| GA-Net | 2.09 | 0.89 | 1.57 | 0.48 |
| GwcNet | 2.19 | 0.97 | 1.60 | 0.48 |
| Bi3D | 1.92 | 1.16 | 1.46 | 0.54 |
| STTR | 1.26 | 0.45 | 1.13 | 0.42 |
| STTR-light | 1.44 (+0.18) | 0.51 (+0.06) | 1.43 (+0.30) | 0.48 (+0.06) |

| | KITTI 2015 | | Middlebury-Q | | Middlebury-H | | SCARED | |
|---|---|---|---|---|---|---|---|---|
| | 3px Error | EPE | 3px Error | EPE | 3px Error | EPE | 3px Error | EPE |
| PSMNet | 27.79 | 6.56 | 12.96 | 3.05 | 27.71 | 8.65 | 9.19 | 1.56 |
| GA-Net | 10.62 | 1.67 | 8.40 | 1.79 | 18.54 | 5.77 | 8.88 | 1.50 |
| GwcNet | 12.60 | 2.21 | 8.60 | 1.89 | 17.46 | 5.93 | 12.37 | 1.89 |
| Bi3D | 7.28 | 1.68 | 10.90 | 2.40 | OOM | OOM | 8.91 | 1.52 |
| STTR | 6.73 | 1.50 | 6.19 | 2.33 | OOM | OOM | 8.00 | 1.47 |
| STTR-light | 7.06 (+0.33) | 1.58 (+0.08) | 5.90 (-0.29) | 1.61 (-0.72) | 9.38 | 2.87 | 8.42 (+0.43) | 1.49 (+0.02) |

Expected results of kitti_finetuned_model.pth.tar:

| Dataset | 3px Error | EPE |
|---|---|---|
| KITTI 2015 training | 0.79 | 0.41 |
| KITTI 2015 testing | 2.07 | N/A |
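The two metrics in these tables are commonly defined as follows: EPE (end-point error) is the mean absolute disparity error in pixels, and the 3px error is the percentage of evaluated pixels whose absolute error exceeds 3 px. The sketch below computes both over a boolean mask. Which pixels are evaluated (for example, whether occluded pixels are excluded) is an assumption here and may differ from the repo's evaluation code; also note that KITTI's official D1 metric additionally requires the error to exceed 5% of the true disparity, which this sketch does not apply.

```python
import numpy as np


def disparity_metrics(pred, gt, mask=None, threshold=3.0):
    """Return (EPE in px, percentage of evaluated pixels with error > threshold px)."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)
    if mask is None:
        # Assumption: 0 or non-finite values mark pixels without ground truth.
        mask = np.isfinite(gt) & (gt > 0)
    mask = np.asarray(mask, dtype=bool)
    error = np.abs(pred - gt)[mask]
    if error.size == 0:
        return float("nan"), float("nan")
    epe = float(error.mean())
    px_error = float((error > threshold).mean() * 100.0)
    return epe, px_error


gt = np.array([10.0, 20.0, 30.0, 0.0])    # the last pixel has no ground truth
pred = np.array([11.0, 24.0, 30.0, 5.0])
epe, px3 = disparity_metrics(pred, gt)    # errors on valid pixels: 1, 4, 0
```

Here the EPE is 5/3 ≈ 1.67 px and one of the three valid pixels exceeds 3 px, giving a 3px error of about 33.3%.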

Common Q&A

- What are occluded regions?

  "Occlusion" refers to pixels in the left image that do not have a corresponding match in the right image. Because objects in the right image are shifted to the left compared to the left image, pixels in the following two regions generally do not have a match:
  - At the left border of the left image
  - At the left border of foreground objects

- Why are there black patches with value 0 in the predicted disparity?

  The disparities of occluded regions are set to 0.

- Why do you read the disparity map including occluded areas for KITTI during training?

  We use random cropping as a form of augmentation, so the occluded regions need to be recomputed. The code for computing occluded areas can be found in `dataset/preprocess.py`.

- How can the feature map visualization in Figure 4 of the paper be reproduced?

  The feature map is taken after the first LayerNorm in the Transformer. We use PCA trained on the first and third layers to reduce the dimensionality to 3.

- How can the feature distribution visualization in Figure 6 of the paper be reproduced?

  The feature distribution visualization is done via UMAP, which is trained on Scene Flow feature maps only. The feature map is again taken after the first LayerNorm in the Transformer.
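The occlusion rule from the first answer can be sketched with a simple left-to-right projection. This is an illustrative NumPy version under stated assumptions, not the code in `dataset/preprocess.py`: each left pixel `x` with disparity `d` lands at `x - d` (rounded) in the right image; it is marked occluded if that position falls outside the image, or if another left pixel with a larger disparity (closer to the camera) lands on the same right pixel. The function name is hypothetical.

```python
import numpy as np


def occlusion_from_disparity(disp_left):
    """Mark left pixels that have no match in the right image.

    disp_left: (H, W) left disparity map in pixels.
    Returns a boolean (H, W) mask, True where the pixel is occluded.
    """
    height, width = disp_left.shape
    occluded = np.zeros((height, width), dtype=bool)
    for y in range(height):
        target = np.round(np.arange(width) - disp_left[y]).astype(int)
        occluded[y] = (target < 0) | (target >= width)  # projects outside the right image
        best = np.full(width, -np.inf)  # largest disparity seen so far per right pixel
        owner = np.full(width, -1)      # left pixel currently claiming that right pixel
        for x in range(width):
            t = target[x]
            if occluded[y, x]:
                continue
            if disp_left[y, x] > best[t]:
                if owner[t] >= 0:
                    occluded[y, owner[t]] = True  # hidden behind a closer pixel
                best[t] = disp_left[y, x]
                owner[t] = x
            else:
                occluded[y, x] = True
    return occluded


# Background at disparity 1 with a foreground object (disparity 3) at x = 4, 5.
disp = np.array([[1, 1, 1, 1, 3, 3, 1, 1]], dtype=float)
occ = occlusion_from_disparity(disp)
disp_masked = np.where(occ, 0.0, disp)  # occluded disparities set to 0
```

In this example pixel 0 is occluded by the left image border, and pixels 2 and 3, just left of the foreground object, are occluded because the object covers their projections, matching the two regions listed in the first answer.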

License

This project is under the Apache 2.0 license. Please see LICENSE for more information.

Contributing

We try our best to make our work easy to transfer. If you see any issues, feel free to fork the repo and open a pull request.

Acknowledgement

Special thanks to the authors of SuperGlue, PSMNet and DETR for open-sourcing their code.