TedEval: A Fair Evaluation Metric for Scene Text Detectors

Official Python 3 implementation of TedEval | paper | slides

Chae Young Lee, Youngmin Baek, and Hwalsuk Lee.

Clova AI Research, NAVER Corp.

Overview

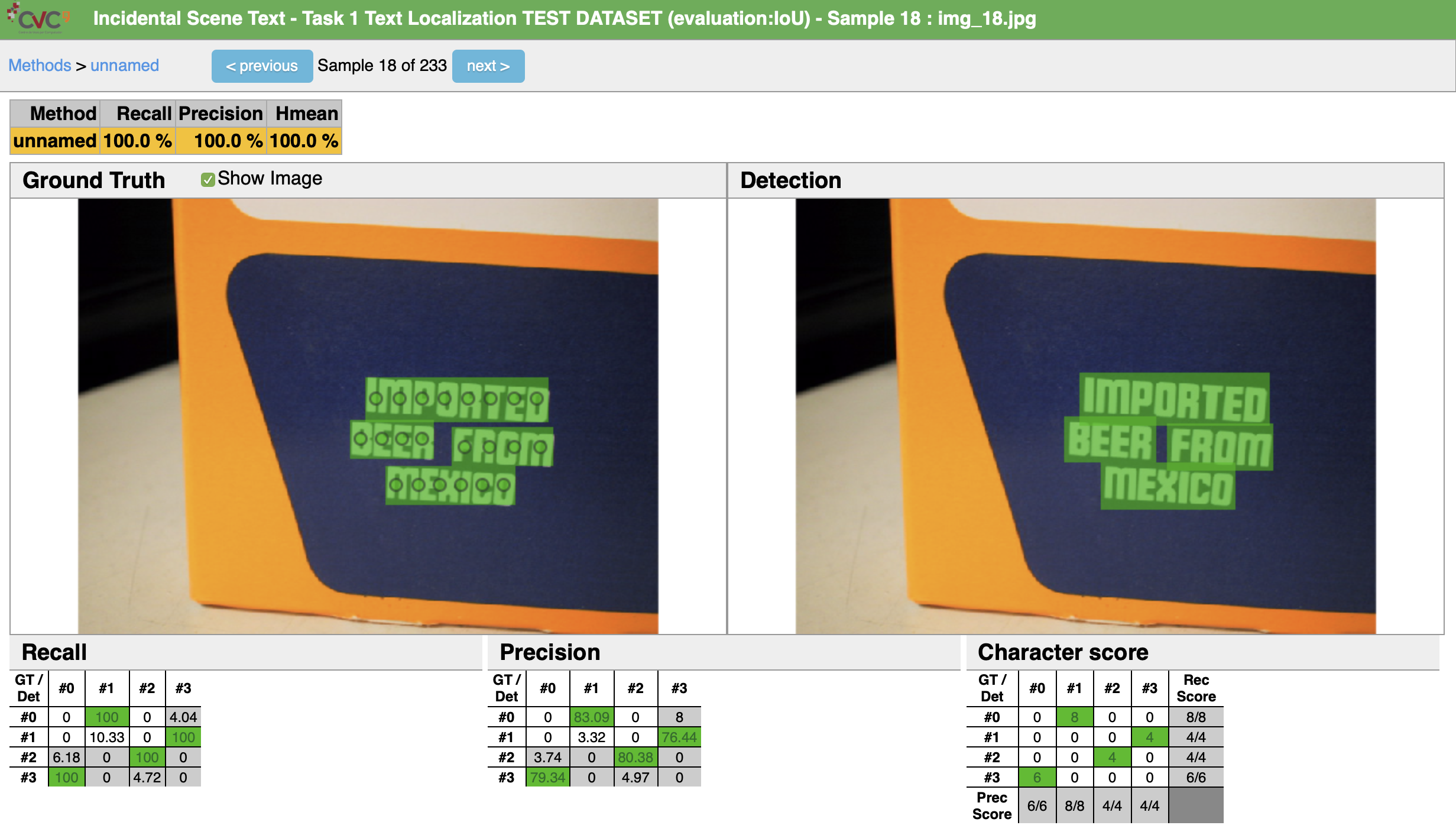

We propose TedEval, a new evaluation metric for scene text detectors. By combining an instance-level matching policy with a separate character-level scoring policy, TedEval addresses the drawbacks of previous metrics such as IoU and DetEval. This code is based on the official ICDAR15 evaluation code.

Methodology

1. Matching Policy

- Non-exclusively gathers all possible matches: not only one-to-one but also one-to-many and many-to-one.

- The thresholds for both area recall and area precision are set to 0.4.

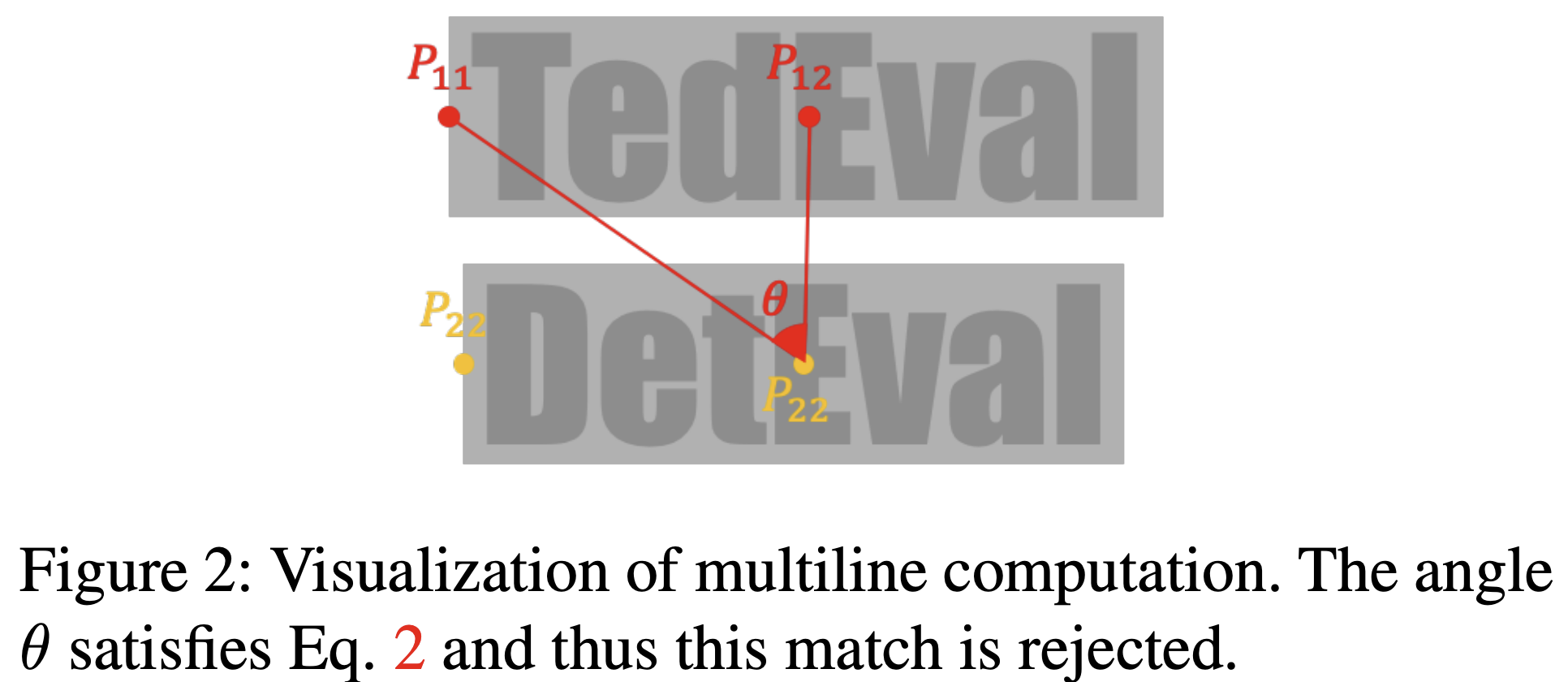

- A multiline match is identified and rejected when |min(theta, 180 - theta)| > 45 degrees, where theta is the angle shown in Fig. 2.
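The two numeric checks above can be sketched as follows. This is a minimal illustration, not the code in `script.py`: it assumes axis-aligned boxes instead of polygons, takes area recall as intersection over GT area and area precision as intersection over detection area, treats the thresholds as inclusive, and uses hypothetical function names.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)

AREA_RECALL_THRESHOLD = 0.4
AREA_PRECISION_THRESHOLD = 0.4
MULTILINE_ANGLE_THRESHOLD = 45.0  # degrees


def area(box: Box) -> float:
    """Area of an axis-aligned box; degenerate boxes have zero area."""
    xmin, ymin, xmax, ymax = box
    return max(0.0, xmax - xmin) * max(0.0, ymax - ymin)


def intersection_area(a: Box, b: Box) -> float:
    """Area of the overlap between two axis-aligned boxes."""
    overlap = (max(a[0], b[0]), max(a[1], b[1]), min(a[2], b[2]), min(a[3], b[3]))
    return area(overlap)


def is_candidate_match(gt: Box, det: Box) -> bool:
    """True when both area recall and area precision reach the thresholds."""
    gt_area, det_area = area(gt), area(det)
    if gt_area == 0 or det_area == 0:
        return False
    inter = intersection_area(gt, det)
    area_recall = inter / gt_area        # how much of the GT box is covered
    area_precision = inter / det_area    # how much of the detection is on the GT box
    return (area_recall >= AREA_RECALL_THRESHOLD
            and area_precision >= AREA_PRECISION_THRESHOLD)


def is_multiline(theta_degrees: float) -> bool:
    """Multiline test from the rule above; theta is assumed to be in degrees."""
    return abs(min(theta_degrees, 180.0 - theta_degrees)) > MULTILINE_ANGLE_THRESHOLD
```

Because matches are gathered non-exclusively, a check like `is_candidate_match` would be applied to every GT/detection pair rather than stopping at the first hit.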

2. Scoring Policy

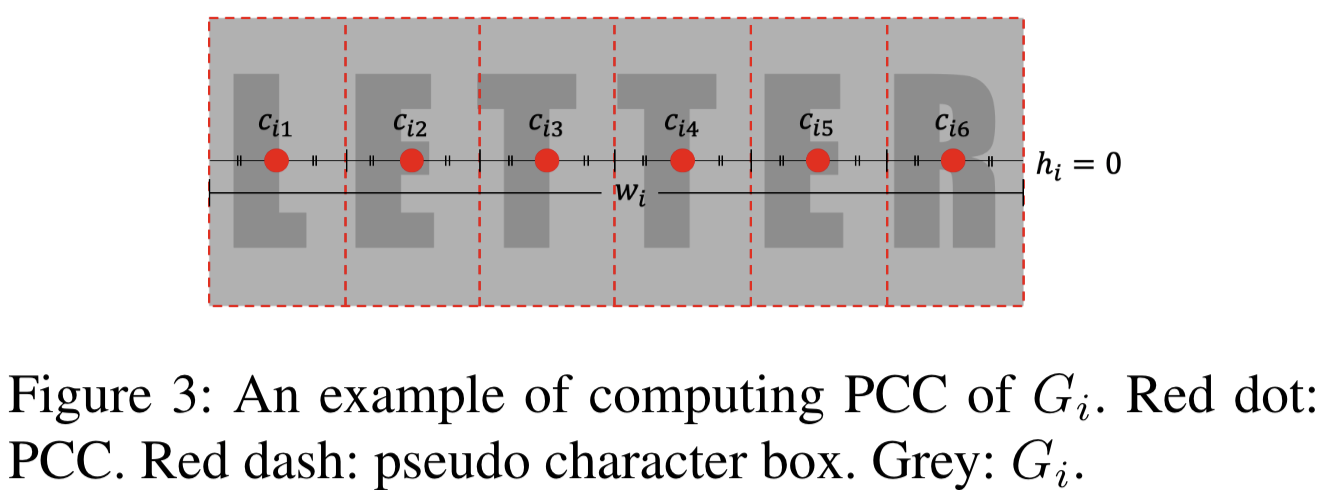

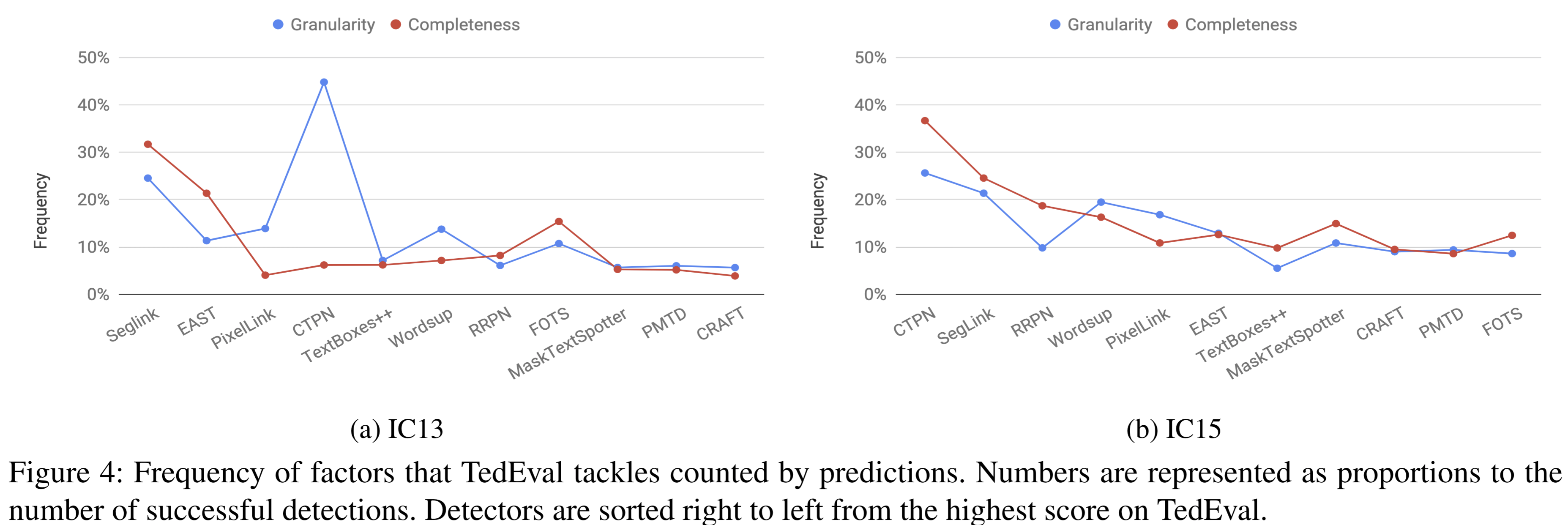

We compute Pseudo Character Center (PCC) from word-level bounding boxes and penalize matches when PCCs are missing or overlapping.
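A rough sketch of the PCC idea is shown below. It is an approximation under stated assumptions, not the implementation in `script.py`: the word box is axis-aligned, the text is horizontal, characters are evenly spaced, and both function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)
Point = Tuple[float, float]


def pseudo_character_centers(word_box: Box, num_chars: int) -> List[Point]:
    """Place one pseudo center per character, evenly spaced along the box midline."""
    if num_chars <= 0:
        return []
    xmin, ymin, xmax, ymax = word_box
    step = (xmax - xmin) / num_chars
    center_y = (ymin + ymax) / 2
    return [(xmin + (i + 0.5) * step, center_y) for i in range(num_chars)]


def fraction_of_centers_inside(centers: List[Point], det_box: Box) -> float:
    """Share of pseudo centers that fall inside a detection box."""
    if not centers:
        return 0.0
    xmin, ymin, xmax, ymax = det_box
    inside = sum(1 for x, y in centers if xmin <= x <= xmax and ymin <= y <= ymax)
    return inside / len(centers)
```

For a four-character word, a detection that covers only the left half of the word box contains two of the four centers, so a character-level score would count that match as partial rather than full.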

Sample Evaluation

Experiments

We evaluated state-of-the-art scene text detectors with TedEval on two benchmark datasets: ICDAR 2013 Focused Scene Text (IC13) and ICDAR 2015 Incidental Scene Text (IC15). Detectors are listed in order of publication date.

ICDAR 2013

| Detector | Date (YY/MM/DD) | Recall (%) | Precision (%) | H-mean (%) |
|---|---|---|---|---|
| CTPN | 16/09/12 | 82.1 | 92.7 | 87.6 |
| RRPN | 17/03/03 | 89.0 | 94.2 | 91.6 |
| SegLink | 17/03/19 | 65.6 | 74.9 | 70.0 |
| EAST | 17/04/11 | 77.7 | 87.1 | 82.5 |
| WordSup | 17/08/22 | 87.5 | 92.2 | 90.2 |
| PixelLink | 18/01/04 | 84.0 | 87.2 | 86.1 |
| FOTS | 18/01/05 | 91.5 | 93.0 | 92.6 |
| TextBoxes++ | 18/01/09 | 87.4 | 92.3 | 90.0 |
| MaskTextSpotter | 18/07/06 | 90.2 | 95.4 | 92.9 |
| PMTD | 19/03/28 | 94.0 | 95.2 | 94.7 |
| CRAFT | 19/04/03 | 93.6 | 96.5 | 95.1 |

ICDAR 2015

| Detector | Date (YY/MM/DD) | Recall (%) | Precision (%) | H-mean (%) |
|---|---|---|---|---|
| CTPN | 16/09/12 | 85.0 | 81.1 | 67.8 |
| RRPN | 17/03/03 | 79.5 | 85.9 | 82.6 |
| SegLink | 17/03/19 | 77.1 | 83.9 | 80.6 |
| EAST | 17/04/11 | 82.5 | 90.0 | 86.3 |
| WordSup | 17/08/22 | 83.2 | 87.1 | 85.2 |
| PixelLink | 18/01/04 | 85.7 | 86.1 | 86.0 |
| FOTS | 18/01/05 | 89.0 | 93.4 | 91.2 |
| TextBoxes++ | 18/01/09 | 82.4 | 90.8 | 86.5 |
| MaskTextSpotter | 18/07/06 | 82.5 | 91.8 | 86.9 |
| PMTD | 19/03/28 | 89.2 | 92.8 | 91.0 |
| CRAFT | 19/04/03 | 88.5 | 93.1 | 90.9 |

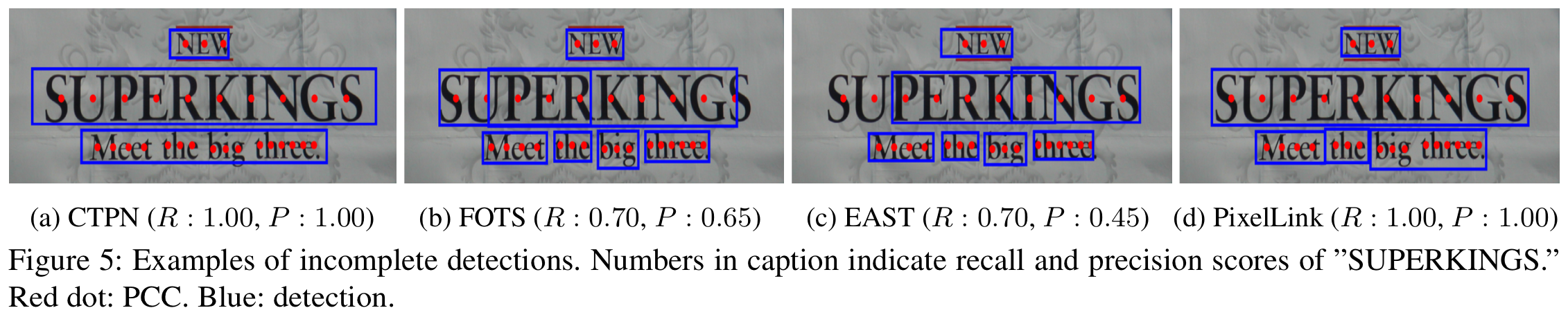

Frequency

Getting Started

Clone repository

    git clone https://github.com/clovaai/TedEval.git

Requirements

- Python 3

- Python 3.x packages: Polygon, Bottle, Pillow

Install the packages with pip:

    pip3 install Polygon3 bottle Pillow

Supported Annotation Type

- LTRB(xmin, ymin, xmax, ymax)

- QUAD(x1, y1, x2, y2, x3, y3, x4, y4)
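The two annotation types describe the same kind of region, and an LTRB box can always be written as a QUAD. The sketch below assumes the QUAD points run clockwise from the top-left corner; the helper name is hypothetical and not part of `script.py`.

```python
from typing import Tuple


def ltrb_to_quad(xmin: int, ymin: int, xmax: int, ymax: int) -> Tuple[int, ...]:
    """Expand an LTRB box into 8 QUAD values, clockwise from the top-left corner."""
    return (
        xmin, ymin,  # top-left
        xmax, ymin,  # top-right
        xmax, ymax,  # bottom-right
        xmin, ymax,  # bottom-left
    )
```

The reverse conversion is lossy in general, since a rotated or skewed QUAD can only be approximated by its axis-aligned bounding box.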

Evaluation

Prepare data

The ground truth and the result data should be text files, one per image sample. Each filename must contain `img_{number}`, where the number identifies the image sample.
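The naming rule can be checked before zipping with a small helper like the one below. It is a sketch only: the function name is hypothetical, and the regular expression is an assumption based on the rule stated above, so the pattern used inside `script.py` may be stricter.

```python
import re
from typing import Optional

# Assumed pattern: "img_" followed by one or more digits, anywhere in the filename.
SAMPLE_ID_PATTERN = re.compile(r"img_(\d+)")


def sample_id(filename: str) -> Optional[int]:
    """Return the image number encoded in a filename, or None if it is missing."""
    match = SAMPLE_ID_PATTERN.search(filename)
    return int(match.group(1)) if match else None
```

A ground truth file and a result file belong to the same image when `sample_id` returns the same number for both, for example `gt_img_38.txt` and `res_img_38.txt`.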

    # gt/gt_img_38.txt
    644,101,932,113,932,168,643,156,[email protected]
    477,138,487,139,488,149,477,148,###
    344,131,398,130,398,149,344,149,###
    1195,148,1277,138,1277,177,1194,187,###
    23,270,128,267,128,282,23,284,###

    # result/res_img_38.txt
    644,101,932,113,932,168,643,156,{Transcription},{Confidence}
    477,138,487,139,488,149,477,148
    344,131,398,130,398,149,344,149
    1195,148,1277,138,1277,177,1194,187
    23,270,128,267,128,282,23,284
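To make the line layout concrete, here is a small parser for QUAD lines. It is a sketch, not the loader in `script.py`: the type and function names are hypothetical, and it assumes that `###` marks a "do not care" region, as it does in ICDAR 2015 ground truth.

```python
from typing import List, NamedTuple, Optional, Tuple


class QuadAnnotation(NamedTuple):
    points: List[Tuple[int, int]]   # four (x, y) corner points
    transcription: Optional[str]    # None when the line has coordinates only
    dont_care: bool                 # True when the transcription is "###"


def parse_quad_line(line: str) -> QuadAnnotation:
    """Parse 'x1,y1,x2,y2,x3,y3,x4,y4[,transcription]' into a QuadAnnotation."""
    # Split at most 8 times so commas inside the transcription are preserved.
    parts = line.strip().split(",", 8)
    if len(parts) < 8:
        raise ValueError(f"expected at least 8 coordinates, got {len(parts)} fields")
    coords = [int(value) for value in parts[:8]]
    points = list(zip(coords[0::2], coords[1::2]))
    transcription = parts[8] if len(parts) > 8 else None
    return QuadAnnotation(points, transcription, transcription == "###")
```

For a result line that carries both `{Transcription}` and `{Confidence}`, the trailing text would need one more split to separate the two fields; that step is left out here.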

Compress these text files.

    zip gt.zip gt/*

    zip result.zip result/*

Refer to gt/result.zip and gt/gt_*.zip for examples.
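Where the `zip` command is not available, the same archives can be built with Python's standard `zipfile` module. The sketch below mirrors `zip gt.zip gt/*` by storing each file under its directory name; the function name is hypothetical, and whether the evaluation script expects this exact archive layout is an assumption to verify against the bundled example archives.

```python
import zipfile
from pathlib import Path
from typing import List


def zip_directory_files(src_dir: str, zip_path: str) -> List[str]:
    """Zip every regular file directly inside src_dir; return the archive names."""
    src = Path(src_dir)
    names = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(p for p in src.iterdir() if p.is_file()):
            # Keep the directory prefix, as `zip gt.zip gt/*` does.
            arcname = f"{src.name}/{path.name}"
            archive.write(path, arcname=arcname)
            names.append(arcname)
    return names
```

Calling `zip_directory_files("gt", "gt.zip")` and `zip_directory_files("result", "result.zip")` produces the two archives named in the commands above.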

Run stand-alone evaluation

    python script.py -g=gt/gt.zip -s=result/result.zip

- Specify the paths of the GT and submission files with the flags `-g` and `-s`, respectively.

- The QUAD annotation type is used by default. To switch between {QUAD, LTRB}, add `-p='{"LTRB" = False}'` to the command or directly modify the `default_evaluation_params()` function in `script.py`.

- If there are transcription or confidence values in your submission file, add `-p='{"CONFIDENCES" = True}'` or `-p='{"TRANSCRIPTION" = True}'`.

Run Visualizer

    python web.py

- Place the zip files of the dataset's images and GTs, named `images.zip` and `gt.zip` respectively, in the `gt` directory.

- Create an empty directory named `output`. This is where the DB, submission files, and result files will be created.

- You can change the host and port number in the final line of `web.py`.

The file structure should then be:

    .
    ├── gt
    │   ├── gt.zip
    │   └── images.zip
    ├── output   # empty dir
    ├── script.py
    ├── web.py
    ├── README.md
    └── ...

Citation

    @article{lee2019tedeval,
      title={TedEval: A Fair Evaluation Metric for Scene Text Detectors},
      author={Lee, Chae Young and Baek, Youngmin and Lee, Hwalsuk},
      journal={arXiv preprint arXiv:1907.01227},
      year={2019}
    }

Contact us

We welcome any feedback on our metric. Please contact the authors via {cylee7133, youngmin.baek, hwalsuk.lee}@gmail.com. If you find errors in the code, open an issue and we will get back to you.

License

Copyright (c) 2019-present NAVER Corp.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in

all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

THE SOFTWARE.