HLearning / Unet_keras

Licence: mit

Unet_keras: U-Net for image semantic segmentation, implemented in Keras

Stars: ✭ 312

Programming Languages

python


Projects that are alternatives to or similar to Unet keras

Railroad and Obstacle detection

This program detects and identifies obstacles on railways. If the program detects an obstacle for which the train must stop, it gives a warning sign. The program also estimates how risky an obstacle is, i.e. whether it is negligible or not. We provide many models for detecting railways and obstacles.

Stars: ✭ 14 (-95.51%)

Mutual labels: unet

Open Solution Mapping Challenge

Open solution to the Mapping Challenge 🌎

Stars: ✭ 291 (-6.73%)

Mutual labels: unet

CFUN

Combining Faster R-CNN and U-net for efficient medical image segmentation

Stars: ✭ 109 (-65.06%)

Mutual labels: unet

PyTorch-UNet

PyTorch Implementation for Segmentation and Saliency Prediction

Stars: ✭ 21 (-93.27%)

Mutual labels: unet

onnx tensorrt project

Supports Yolov5(4.0)/Yolov5(5.0)/YoloR/YoloX/Yolov4/Yolov3/CenterNet/CenterFace/RetinaFace/Classify/Unet. Converts darknet/libtorch/pytorch/mxnet models to ONNX and then to TensorRT.

Stars: ✭ 145 (-53.53%)

Mutual labels: unet

Segmentation models.pytorch

Segmentation models with pretrained backbones. PyTorch.

Stars: ✭ 4,584 (+1369.23%)

Mutual labels: unet

TensorFlow-Advanced-Segmentation-Models

A Python Library for High-Level Semantic Segmentation Models based on TensorFlow and Keras with pretrained backbones.

Stars: ✭ 64 (-79.49%)

Mutual labels: unet

Pytorch Saltnet

Kaggle | 9th place single model solution for TGS Salt Identification Challenge

Stars: ✭ 270 (-13.46%)

Mutual labels: unet

Depth estimation

Deep learning model to estimate the depth of an image.

Stars: ✭ 62 (-80.13%)

Mutual labels: unet

DDUnet-Modified-Unet-for-WMH-with-Dense-Dilate

WMH segmentation with unet, dilated_unet, and ideas from DenseNet

Stars: ✭ 23 (-92.63%)

Mutual labels: unet

ResUNetPlusPlus

Official code for ResUNetplusplus for medical image segmentation (TensorFlow implementation) (IEEE ISM)

Stars: ✭ 69 (-77.88%)

Mutual labels: unet

pytorch-Deep-Steganography

core code for High-Capacity Convolutional Video Steganography with Temporal Residual Modeling

Stars: ✭ 31 (-90.06%)

Mutual labels: unet

Segmentation models

Segmentation models with pretrained backbones. Keras and TensorFlow Keras.

Stars: ✭ 3,575 (+1045.83%)

Mutual labels: unet

uformer-pytorch

Implementation of Uformer, Attention-based Unet, in Pytorch

Stars: ✭ 54 (-82.69%)

Mutual labels: unet

Unet Zoo

A collection of UNet and hybrid architectures in PyTorch for 2D and 3D Biomedical Image segmentation

Stars: ✭ 302 (-3.21%)

Mutual labels: unet

Human Segmentation Pytorch

Human segmentation models, training/inference code, and trained weights, implemented in PyTorch

Stars: ✭ 289 (-7.37%)

Mutual labels: unet

unet

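U-Net is mainly used for semantic segmentation. This repository is a cell edge detection example, and the dataset is fairly simple. The U-Net architecture is named for its resemblance to the letter "U".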

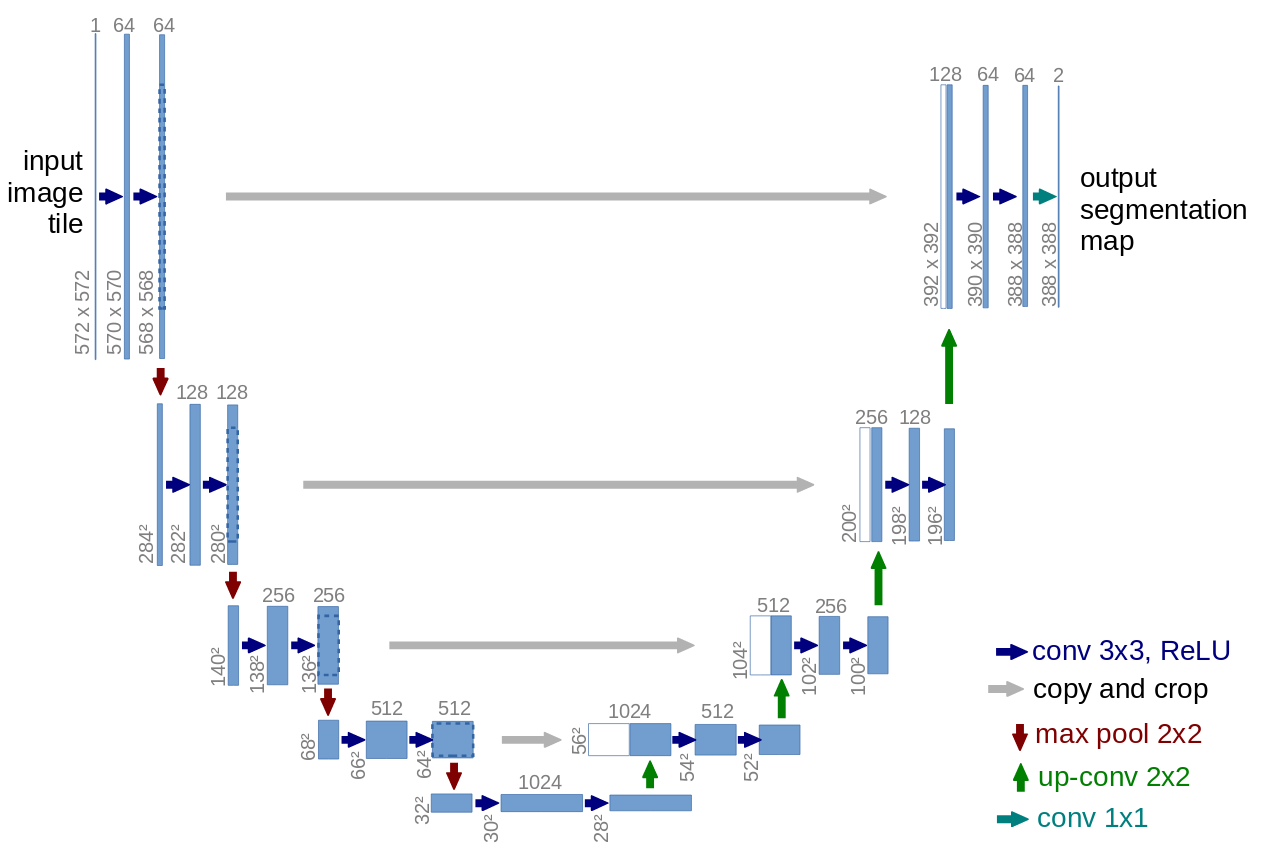

There is a paper on U-Net, *U-Net: Convolutional Networks for Biomedical Image Segmentation*; the network architecture given in the paper is as follows:

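About this network: the input is 572×572×1 and the output is 388×388×2, so the sizes differ. This is mainly because the paper uses unpadded convolutions, so every convolution shrinks the feature map, and the copy-and-crop step crops the encoder feature maps as well.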
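The size arithmetic can be checked with a short trace. This is a sketch assuming the layout described in the paper: four levels, each with two unpadded 3×3 convolutions (each removes 2 pixels) followed by 2×2 max pooling, two more convolutions at the bottom, and on the way up a 2×2 up-convolution that doubles the size followed by two unpadded convolutions. The function `unet_output_size` is a hypothetical helper, not part of this repository.

```python
def unet_output_size(input_size, depth=4, convs_per_level=2, kernel=3):
    """Trace the spatial width through an unpadded U-Net (as in the paper)."""
    shrink = convs_per_level * (kernel - 1)  # two 3x3 'valid' convs remove 4 pixels
    size = input_size
    # Contracting path: convolutions, then a 2x2 max pool that halves the width.
    for _ in range(depth):
        size -= shrink
        if size % 2:
            raise ValueError(f"width {size} is odd and cannot be pooled cleanly")
        size //= 2
    # Bottleneck: two more unpadded convolutions.
    size -= shrink
    # Expanding path: 2x2 up-convolution doubles the width, then two convolutions.
    for _ in range(depth):
        size = size * 2 - shrink
    return size


print(unet_output_size(572))  # 388
```

Along the way the first encoder level produces a 568×568 map while the last up-convolution yields 392×392, which is why the encoder map has to be cropped before the two are concatenated.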

The network I designed here does not reproduce the network in the figure above exactly; instead, it is built on the VGG architecture.

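Looking at the figure above, the first half of U-Net repeats a pattern of two convolution layers followed by one pooling layer, which is very similar to the first half of VGG16. So in my implementation I reuse the first 10 convolutional layers of VGG16 (blocks 1 to 4, up to `block4_pool`).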
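To make "the first 10 layers" concrete, this sketch lists the VGG16 convolution layers up to `block4_pool`, using the layer names Keras gives VGG16 (`block1_conv1`, `block1_conv2`, and so on) and assuming a 256×256 input. The table `VGG16_BLOCKS` and the helper `encoder_layers` are illustrative only, not part of the repository.

```python
# (block name, number of 3x3 conv layers, filters) for VGG16.
VGG16_BLOCKS = [
    ("block1", 2, 64),
    ("block2", 2, 128),
    ("block3", 3, 256),
    ("block4", 3, 512),
    ("block5", 3, 512),
]


def encoder_layers(last_block="block4", input_size=256):
    """List (conv layer name, width, filters) up to last_block, plus the pooled width.

    VGG16 convolutions use 'same' padding, so they keep the width;
    the 2x2 max pool at the end of each block halves it.
    """
    convs, size = [], input_size
    for name, n_convs, filters in VGG16_BLOCKS:
        for i in range(1, n_convs + 1):
            convs.append((f"{name}_conv{i}", size, filters))
        size //= 2  # the block's max-pooling layer
        if name == last_block:
            break
    return convs, size


convs, pooled = encoder_layers()
print(len(convs))  # 10
print(convs[-1])   # ('block4_conv3', 32, 512)
print(pooled)      # 16
```

The skip connections in the network below tap `block1_conv2`, `block2_conv2`, `block3_conv3`, and `block4_conv3`, i.e. the last convolution of each of these four blocks.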

Network design

```python
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Concatenate, Conv2D, Dropout, UpSampling2D
from tensorflow.keras.models import Model


# The original takes `self` (it is a method of a class); it is shown here
# as a standalone function so that it can be called directly.
def vgg10_unet(input_shape=(256, 256, 3), weights='imagenet'):
    # Encoder: VGG16 without its classifier; only block1 to block4 end up in the model.
    vgg16_model = VGG16(input_shape=input_shape, weights=weights, include_top=False)
    block4_pool = vgg16_model.get_layer('block4_pool').output

    # Bottleneck: two 3x3 convolutions with 1024 filters, then dropout.
    block5_conv1 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block4_pool)
    block5_conv2 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block5_conv1)
    block5_drop = Dropout(0.5)(block5_conv2)

    # Decoder level 1: upsample 2x, 2x2 conv, concatenate (channels-last, axis=3) with block4_conv3.
    block6_up = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(
        UpSampling2D(size=(2, 2))(block5_drop))
    block6_merge = Concatenate(axis=3)([vgg16_model.get_layer('block4_conv3').output, block6_up])
    block6_conv1 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block6_merge)
    block6_conv2 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block6_conv1)
    block6_conv3 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block6_conv2)

    # Decoder level 2: skip connection from block3_conv3.
    block7_up = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')(
        UpSampling2D(size=(2, 2))(block6_conv3))
    block7_merge = Concatenate(axis=3)([vgg16_model.get_layer('block3_conv3').output, block7_up])
    block7_conv1 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block7_merge)
    block7_conv2 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block7_conv1)
    block7_conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block7_conv2)

    # Decoder level 3: skip connection from block2_conv2.
    block8_up = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')(
        UpSampling2D(size=(2, 2))(block7_conv3))
    block8_merge = Concatenate(axis=3)([vgg16_model.get_layer('block2_conv2').output, block8_up])
    block8_conv1 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block8_merge)
    block8_conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block8_conv1)

    # Decoder level 4: skip connection from block1_conv2, back at the input resolution.
    block9_up = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')(
        UpSampling2D(size=(2, 2))(block8_conv2))
    block9_merge = Concatenate(axis=3)([vgg16_model.get_layer('block1_conv2').output, block9_up])
    block9_conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block9_merge)
    block9_conv2 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block9_conv1)

    # Head: one more 3x3 conv, then a 1x1 conv to 2 output channels with sigmoid.
    block10_conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(block9_conv2)
    block10_conv2 = Conv2D(2, 1, activation='sigmoid')(block10_conv1)

    model = Model(inputs=vgg16_model.input, outputs=block10_conv2)
    return model
```
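Because every convolution in `vgg10_unet` uses `padding='same'`, no cropping is needed: each `UpSampling2D` doubles the width so that it matches the VGG16 feature map it is concatenated with, and the output keeps the input's 256×256 size (unlike the 572 to 388 shrinkage in the paper). The sketch below checks this bookkeeping in plain Python for the default `input_shape=(256, 256, 3)`; the names `SKIPS`, `UP_FILTERS`, and `trace_decoder` are hypothetical and only mirror the layer sizes in the code above.

```python
# Skip connections used by vgg10_unet: (VGG16 layer, width, channels) for a
# 256x256 input. VGG16 convs use 'same' padding; each pooling halves the width.
SKIPS = [
    ("block4_conv3", 32, 512),
    ("block3_conv3", 64, 256),
    ("block2_conv2", 128, 128),
    ("block1_conv2", 256, 64),
]
# Filters of the 2x2 conv applied after each UpSampling2D (block6_up to block9_up).
UP_FILTERS = [512, 256, 128, 64]


def trace_decoder(bottleneck_width=16):
    """Return (skip layer, width, merged channels) per decoder level and the final width."""
    width = bottleneck_width  # block4_pool: 256 / 2**4 = 16
    merged = []
    for (skip_name, skip_width, skip_channels), up_filters in zip(SKIPS, UP_FILTERS):
        width *= 2  # UpSampling2D(size=(2, 2)); the 'same' 2x2 conv keeps the width
        if width != skip_width:
            raise ValueError(f"{skip_name} is {skip_width} wide, upsampled map is {width}")
        merged.append((skip_name, width, skip_channels + up_filters))
    # The remaining 3x3 and 1x1 convs also use 'same' padding: width unchanged.
    return merged, width


merged, out_width = trace_decoder()
print(merged[0])  # ('block4_conv3', 32, 1024)
print(out_width)  # 256
```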

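The advantage of this design is that, because the first half is a VGG network, its weights can be initialized from a pretrained VGG model when training. You can also freeze these layers during training and train only the second half.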
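A minimal sketch of the freezing option, assuming TensorFlow 2.x Keras (`tensorflow.keras`). Keras names the VGG16 layers `block1_conv1`, `block1_pool`, and so on, while the decoder layers in `vgg10_unet` are created without explicit names and get default names such as `conv2d`, `up_sampling2d`, or `concatenate` (with a numeric suffix), so a name-prefix filter freezes exactly the VGG part. The helper `freeze_vgg_encoder` is hypothetical, and the small stand-in model (a 64×64 input with `weights=None` to avoid downloading ImageNet weights) only keeps the example light; in practice you would pass the model returned by `vgg10_unet`.

```python
from tensorflow.keras.applications import VGG16
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.models import Model


def freeze_vgg_encoder(model):
    """Hypothetical helper: freeze every layer that came from VGG16 ('block*' names)."""
    for layer in model.layers:
        if layer.name.startswith('block'):
            layer.trainable = False
    return model


# Stand-in model: VGG16 encoder up to block4_pool plus one new, trainable conv layer.
# weights=None avoids a download; use weights='imagenet' to get pretrained encoder weights.
vgg = VGG16(input_shape=(64, 64, 3), weights=None, include_top=False)
head = Conv2D(2, 1, activation='sigmoid')(vgg.get_layer('block4_pool').output)
model = freeze_vgg_encoder(Model(inputs=vgg.input, outputs=head))

# Compile after changing `trainable` so that training respects the frozen layers.
model.compile(optimizer='adam', loss='binary_crossentropy')
print(len(model.trainable_weights))  # 2: the head's kernel and bias
```

To fine-tune the whole network later, set `layer.trainable = True` on those layers and compile again.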

Test results

Acknowledgements

While learning, I referred to this project: https://github.com/zhixuhao/unet

Paper cited: U-Net: Convolutional Networks for Biomedical Image Segmentation

If this project helped you, please give it a star. Thanks!
