chaitanya100100 / Vae For Image Generation

Licence: MIT

Implementation of a variational autoencoder (VAE) generative model in Keras for image generation, with latent space visualization, on the MNIST and CIFAR10 datasets

Stars: ✭ 87

Programming Languages

python


Projects that are alternatives to or similar to Vae For Image Generation

soft-intro-vae-pytorch

[CVPR 2021 Oral] Official PyTorch implementation of Soft-IntroVAE from the paper "Soft-IntroVAE: Analyzing and Improving Introspective Variational Autoencoders"

Stars: ✭ 170 (+95.4%)

Mutual labels: vae, image-generation, variational-autoencoder

Tensorflow Generative Model Collections

Collection of generative models in Tensorflow

Stars: ✭ 3,785 (+4250.57%)

Mutual labels: generative-model, vae, variational-autoencoder

Vae protein function

Protein function prediction using a variational autoencoder

Stars: ✭ 57 (-34.48%)

Mutual labels: generative-model, vae, variational-autoencoder

Awesome Vaes

A curated list of awesome work on VAEs, disentanglement, representation learning, and generative models.

Stars: ✭ 418 (+380.46%)

Mutual labels: generative-model, vae, variational-autoencoder

srVAE

VAE with RealNVP prior and Super-Resolution VAE in PyTorch. Code release for https://arxiv.org/abs/2006.05218.

Stars: ✭ 56 (-35.63%)

Mutual labels: generative-model, vae, variational-autoencoder

Deepnude An Image To Image Technology

DeepNude's algorithm and research on the theory and practice of general image generation, in particular with generative adversarial networks (GANs), including pix2pix, CycleGAN, UGATIT, DCGAN, SinGAN, ALAE, mGANprior, StarGAN-v2 and VAE models (TensorFlow 2 implementation).

Stars: ✭ 4,029 (+4531.03%)

Mutual labels: vae, image-generation

Disentangling Vae

Experiments for understanding disentanglement in VAE latent representations

Stars: ✭ 398 (+357.47%)

Mutual labels: vae, variational-autoencoder

Generating Devanagari Using Draw

PyTorch implementation of DRAW: A Recurrent Neural Network For Image Generation trained on Devanagari dataset.

Stars: ✭ 82 (-5.75%)

Mutual labels: generative-model, image-generation

Generative Models

Collection of generative models, e.g. GAN, VAE in Pytorch and Tensorflow.

Stars: ✭ 6,701 (+7602.3%)

Mutual labels: generative-model, vae

Neuraldialog Cvae

TensorFlow implementation of the Knowledge-Guided CVAE for dialog generation (ACL 2017), released by Tiancheng Zhao (Tony) from the Dialog Research Center, LTI, CMU

Stars: ✭ 279 (+220.69%)

Mutual labels: generative-model, variational-autoencoder

Tensorflow Mnist Vae

Tensorflow implementation of variational auto-encoder for MNIST

Stars: ✭ 422 (+385.06%)

Mutual labels: vae, variational-autoencoder

Attend infer repeat

A TensorFlow implementation of Attend, Infer, Repeat

Stars: ✭ 82 (-5.75%)

Mutual labels: generative-model, vae

Pytorch Rl

This repository contains model-free deep reinforcement learning algorithms implemented in Pytorch

Stars: ✭ 394 (+352.87%)

Mutual labels: vae, variational-autoencoder

Simple Variational Autoencoder

A VAE written entirely in Numpy/Cupy

Stars: ✭ 20 (-77.01%)

Mutual labels: generative-model, variational-autoencoder

Texturize

🤖🖌️ Generate photo-realistic textures based on source images. Remix, remake, mashup! Useful if you want to create variations on a theme or elaborate on an existing texture.

Stars: ✭ 366 (+320.69%)

Mutual labels: generative-model, image-generation

Sentence Vae

PyTorch Re-Implementation of "Generating Sentences from a Continuous Space" by Bowman et al 2015 https://arxiv.org/abs/1511.06349

Stars: ✭ 462 (+431.03%)

Mutual labels: generative-model, vae

Variational Autoencoder

PyTorch implementation of "Auto-Encoding Variational Bayes"

Stars: ✭ 25 (-71.26%)

Mutual labels: vae, variational-autoencoder

S Vae Pytorch

Pytorch implementation of Hyperspherical Variational Auto-Encoders

Stars: ✭ 255 (+193.1%)

Mutual labels: vae, variational-autoencoder

Generative models tutorial with demo

Generative Models Tutorial with Demo: Bayesian Classifier Sampling, Variational Auto Encoder (VAE), Generative Adversarial Networks (GANs), Popular GANs Architectures, Auto-Regressive Models, Important Generative Model Papers, Courses, etc.

Stars: ✭ 276 (+217.24%)

Mutual labels: generative-model, variational-autoencoder

VAE for Image Generation

Variational autoencoder: Keras implementation on the MNIST and CIFAR10 datasets

Dependencies

- keras

- tensorflow / theano (the current implementation targets TensorFlow; it can be used with Theano after a few code changes)

- numpy, matplotlib, scipy
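A minimal sketch of what switching backends could involve, assuming multi-backend Keras 2.x, where the `KERAS_BACKEND` environment variable overrides the backend configured in `~/.keras/keras.json`. The repository does not list the code changes needed for Theano; a likely one (an assumption) is image axis order, since Theano conventionally uses channels-first arrays while TensorFlow uses channels-last. The helper `hwc_to_chw` is hypothetical.

```python
import os

# Must be set before `import keras`: multi-backend Keras 2.x reads it at import time.
os.environ["KERAS_BACKEND"] = "theano"

def hwc_to_chw(image):
    """Hypothetical helper: convert a nested-list image from
    (height, width, channels) to (channels, height, width) order."""
    height, width, channels = len(image), len(image[0]), len(image[0][0])
    return [[[image[h][w][c] for w in range(width)] for h in range(height)]
            for c in range(channels)]

# A 2x2 RGB image in channels-last order.
img = [[[1, 2, 3], [4, 5, 6]],
       [[7, 8, 9], [10, 11, 12]]]
chw = hwc_to_chw(img)
print(chw[0])  # red channel: [[1, 4], [7, 10]]
```

With NumPy arrays the same reordering is a single `array.transpose(2, 0, 1)`.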

Implementation Details

The code is heavily inspired by the Keras VAE examples.

(The source files contain some code duplication.)

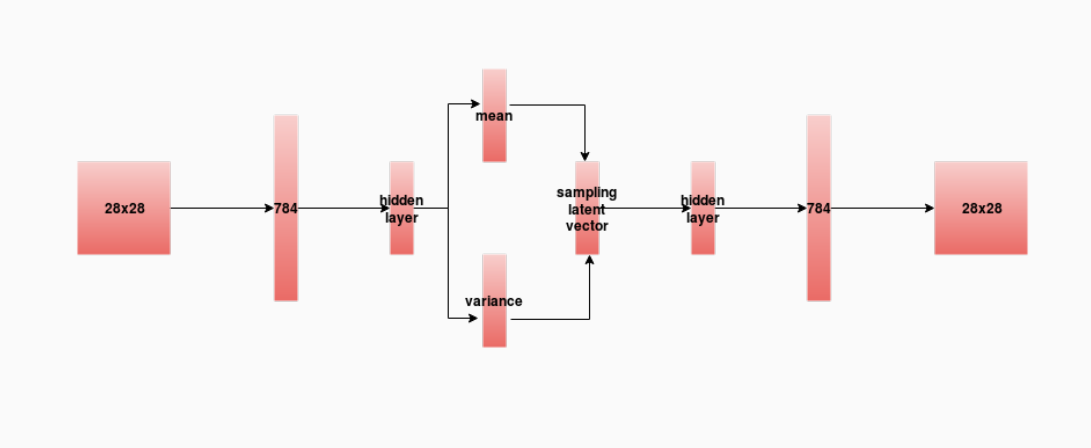

MNIST

- images are flattened so that they are treated as 1D vectors

- both the encoder and the decoder are plain fully connected (dense) neural networks

| network architecture |
|---|
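What makes this dense model a VAE rather than a plain autoencoder is the reparameterization step (sampling z = μ + σ·ε from the encoder outputs) and the loss (reconstruction cross-entropy plus the KL divergence from the encoder's Gaussian to the standard normal prior). The sketch below writes both in plain Python for a single flattened image, assuming the formulation of the classic Keras VAE example the code is based on (encoder outputs named `z_mean` and `z_log_var`, pixel values in [0, 1]); it is an illustration, not the repository's code.

```python
import math
import random

def sample_z(z_mean, z_log_var, rng=random):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, 1),
    where sigma = exp(0.5 * log_var)."""
    return [mu + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for mu, lv in zip(z_mean, z_log_var)]

def kl_divergence(z_mean, z_log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(mu ** 2 + math.exp(lv) - lv - 1
                     for mu, lv in zip(z_mean, z_log_var))

def reconstruction_loss(x, x_decoded, eps=1e-7):
    """Binary cross-entropy summed over all pixels of a flattened image."""
    total = 0.0
    for target, pred in zip(x, x_decoded):
        pred = min(max(pred, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= target * math.log(pred) + (1 - target) * math.log(1.0 - pred)
    return total

def vae_loss(x, x_decoded, z_mean, z_log_var):
    """Per-example VAE loss: reconstruction term plus KL term."""
    return reconstruction_loss(x, x_decoded) + kl_divergence(z_mean, z_log_var)
```

In the Keras example, the sampling step is wrapped in a `Lambda` layer so that gradients flow through `z_mean` and `z_log_var` while the randomness stays in `eps`.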

- it trains the VAE model according to the hyperparameters defined in

- the entire VAE model, the encoder and the decoder are stored as Keras models in the directory as `ld_<latent_dim>_id_<intermediate_dim>_e_<epochs>_<vae/encoder/decoder>.h5`, where `<latent_dim>` is the number of latent dimensions, `<intermediate_dim>` is the number of neurons in the hidden layer and `<epochs>` is the number of training epochs

- after training, the saved model can be used to analyse the latent distribution and to generate new images

- it also stores the training history in `ld_<latent_dim>_id_<intermediate_dim>_e_<epochs>_history.pkl`

- it works only for a 2-dimensional latent space

- it loads the trained model according to the hyperparameters defined in `mnist_params.py`

- it displays the latent space distribution and then generates images from latent variables entered by the user (see the code; it is almost self-explanatory)
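The naming scheme for the saved model and history files can be reproduced with a small helper. The functions `model_path` and `history_path`, the `models_dir` argument and the example values (`latent_dim=2`, `intermediate_dim=256`, `epochs=50`) are hypothetical; the sketch only builds the file names described in this section and, assuming the `.pkl` file is written with `pickle`, round-trips a history dictionary shaped like Keras's `History.history`.

```python
import os
import pickle
import tempfile

def model_path(models_dir, latent_dim, intermediate_dim, epochs, part):
    """Hypothetical: path of a saved model; `part` is 'vae', 'encoder' or 'decoder'."""
    if part not in ("vae", "encoder", "decoder"):
        raise ValueError("part must be 'vae', 'encoder' or 'decoder'")
    name = f"ld_{latent_dim}_id_{intermediate_dim}_e_{epochs}_{part}.h5"
    return os.path.join(models_dir, name)

def history_path(models_dir, latent_dim, intermediate_dim, epochs):
    """Hypothetical: path of the pickled training history."""
    name = f"ld_{latent_dim}_id_{intermediate_dim}_e_{epochs}_history.pkl"
    return os.path.join(models_dir, name)

# Dummy values with the same structure as keras History.history (metric -> per-epoch list).
history = {"loss": [3.0, 2.0, 1.0], "val_loss": [3.5, 2.5, 1.5]}

with tempfile.TemporaryDirectory() as tmp:
    path = history_path(tmp, latent_dim=2, intermediate_dim=256, epochs=50)
    with open(path, "wb") as f:
        pickle.dump(history, f)
    with open(path, "rb") as f:
        restored = pickle.load(f)
```

The `.h5` paths would be passed to `keras.models.load_model` to restore the VAE, encoder or decoder.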

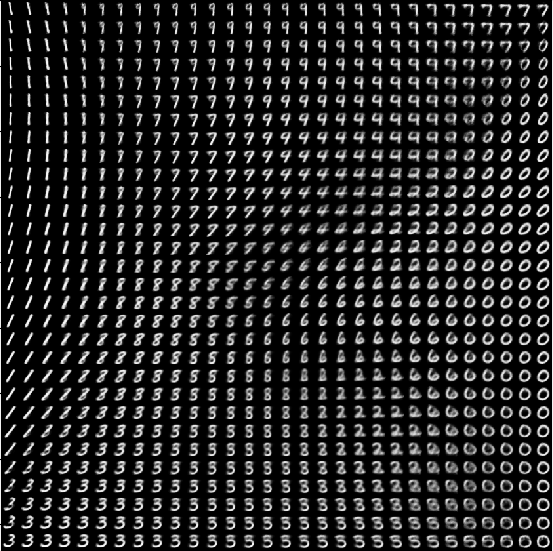

- it can also generate images from latent vectors randomly sampled from the 2D latent space (comment out the user-input lines) and display them in a grid

- it is the same as `mnist_2d_latent_space_and_generate.py`, but for a 3D latent space

- it loads the trained model according to the hyperparameters defined in `mnist_params.py`

- if the latent space is either 2D or 3D, it displays it

- it displays a grid of images generated from randomly sampled latent vectors
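The "uniform sampling" and "random sampling" grids in the results differ only in how latent vectors are chosen. One common approach, used in the Keras VAE example, spaces points evenly in probability and maps them through the inverse CDF of the standard normal prior, so the grid covers the region where the prior has mass; random sampling draws z ~ N(0, I). The sketch below uses only the standard library (`statistics.NormalDist`, Python 3.8+); the function names and the 0.05 to 0.95 range are assumptions, and each vector would be fed to the decoder (for example via `decoder.predict`) to produce one tile of the image grid.

```python
import itertools
import random
from statistics import NormalDist

def uniform_latent_grid(n, dims=2, low=0.05, high=0.95):
    """n**dims latent vectors on a grid that is evenly spaced in probability,
    mapped to N(0, 1) coordinates with the inverse CDF (requires n >= 2)."""
    probs = [low + (high - low) * i / (n - 1) for i in range(n)]
    axis = [NormalDist().inv_cdf(p) for p in probs]
    return [list(point) for point in itertools.product(axis, repeat=dims)]

def random_latent_vectors(count, dims=2, seed=None):
    """`count` latent vectors drawn from the standard normal prior."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dims)] for _ in range(count)]

grid_2d = uniform_latent_grid(15)          # 225 vectors for a 15x15 image grid
grid_3d = uniform_latent_grid(5, dims=3)   # 125 vectors for a 3D latent space
samples = random_latent_vectors(100, dims=3, seed=0)
```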

Results

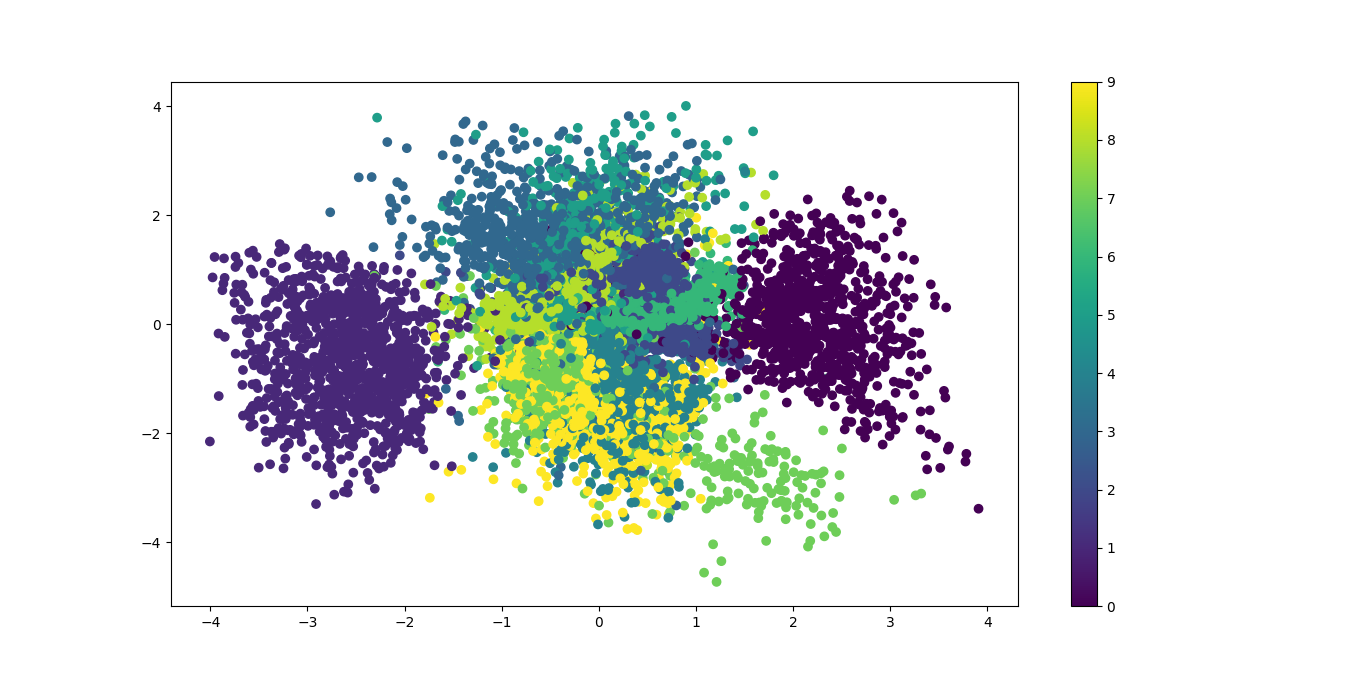

2D latent space

| latent space | uniform sampling |
|---|---|

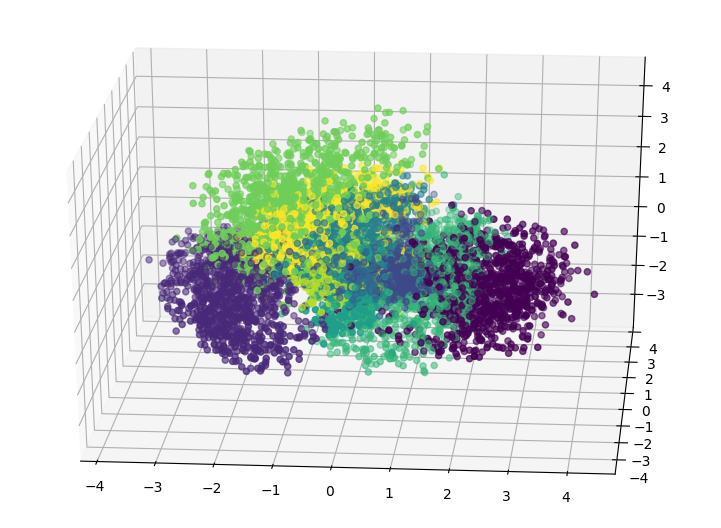

3D latent space

3D latent space results

| uniform sampling | random sampling |
|---|---|

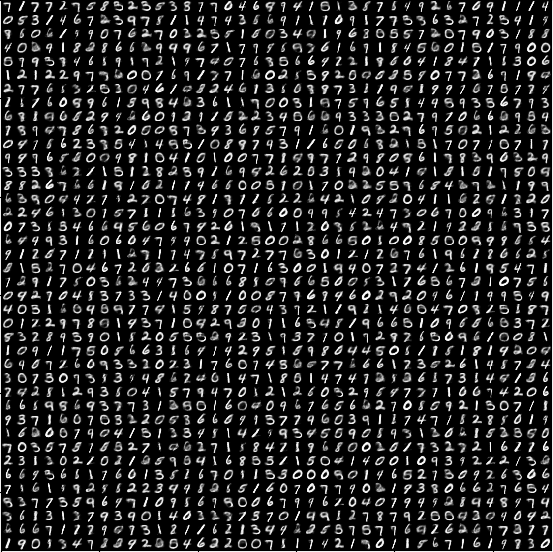

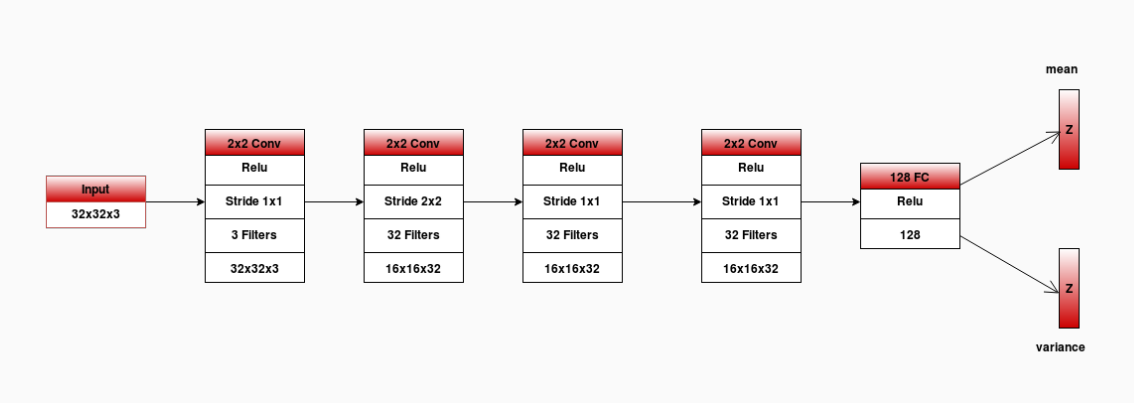

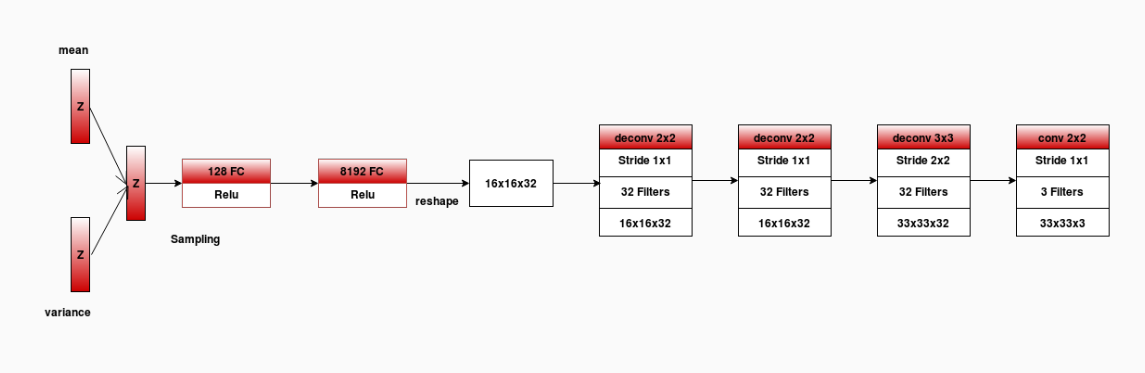

CIFAR10

- images are treated as 2D inputs (they are not flattened)

- the encoder is a convolutional neural network and the decoder is a deconvolutional (transposed convolution) network

- the network architectures of the encoder and decoder are as follows

| encoder |
|---|

| decoder |
|---|


The implementation structure is the same as for the MNIST files.
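A generic aid for reading convolutional encoder/decoder architectures: the standard output-size formulas for a strided convolution and for a transposed ("deconvolution") layer, traced for a 32×32 CIFAR10 input. The layer settings (kernel 3, stride 2, padding 1, output padding 1) are illustrative assumptions, not this repository's hyperparameters; with Keras `padding="same"`, a stride-2 transposed convolution likewise doubles the spatial size.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one axis."""
    return (size + 2 * padding - kernel) // stride + 1

def deconv_out(size, kernel, stride=1, padding=0, output_padding=0):
    """Spatial output size of a transposed convolution along one axis."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

# Hypothetical settings: each encoder conv halves the size (32 -> 16 -> 8),
# each decoder transposed conv doubles it back (8 -> 16 -> 32).
enc = [32]
for _ in range(2):
    enc.append(conv_out(enc[-1], kernel=3, stride=2, padding=1))

dec = [enc[-1]]
for _ in range(2):
    dec.append(deconv_out(dec[-1], kernel=3, stride=2, padding=1, output_padding=1))

print(enc, dec)  # [32, 16, 8] [8, 16, 32]
```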

Results: 16 latent dimensions

| 25 epochs | 50 epochs | 75 epochs |
|---|---|---|

| 600 epochs |
|---|

CALTECH101

- `caltech101_<sz>_train.py` and `caltech101_<sz>_generate.py` (where `sz` is the size of the input image; training was done for two sizes, 92×92 and 128×128) are the same as the CIFAR10 files

- as the images are large, more computational power is needed to train the model

- results obtained with limited training are qualitatively poor

- in the `dataset` directory, a script is provided to preprocess the dataset
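To put the extra computational cost in numbers: the input dimensionality, and with it the work done by the first convolution and the size of the decoder's output, grows with the square of the image side. The arithmetic below compares the two Caltech101 sizes with CIFAR10, assuming 3-channel RGB inputs for all of them; `input_dim` is a hypothetical helper.

```python
def input_dim(side, channels=3):
    """Number of values in one side x side image with `channels` channels."""
    return side * side * channels

cifar = input_dim(32)  # 3072 values per CIFAR10 image
for side in (92, 128):
    n = input_dim(side)
    print(f"{side}x{side}: {n} values, {n / cifar:.1f}x CIFAR10")
# 92x92: 25392 values, 8.3x CIFAR10
# 128x128: 49152 values, 16.0x CIFAR10
```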

Note that the project description data, including the texts, logos, images, and/or trademarks, for each open source project belongs to its rightful owner.
