Weakly- and Semi-Supervised Panoptic Segmentation

by Qizhu Li*, Anurag Arnab*, Philip H.S. Torr

This repository demonstrates the weakly supervised ground truth generation scheme presented in our paper Weakly- and Semi-Supervised Panoptic Segmentation, published at ECCV 2018. The code has been cleaned up and refactored, and should reproduce the results presented in the paper.

For details, please refer to our paper. See the Downloads section for all the additional data we release.

* Equal first authorship

Introduction

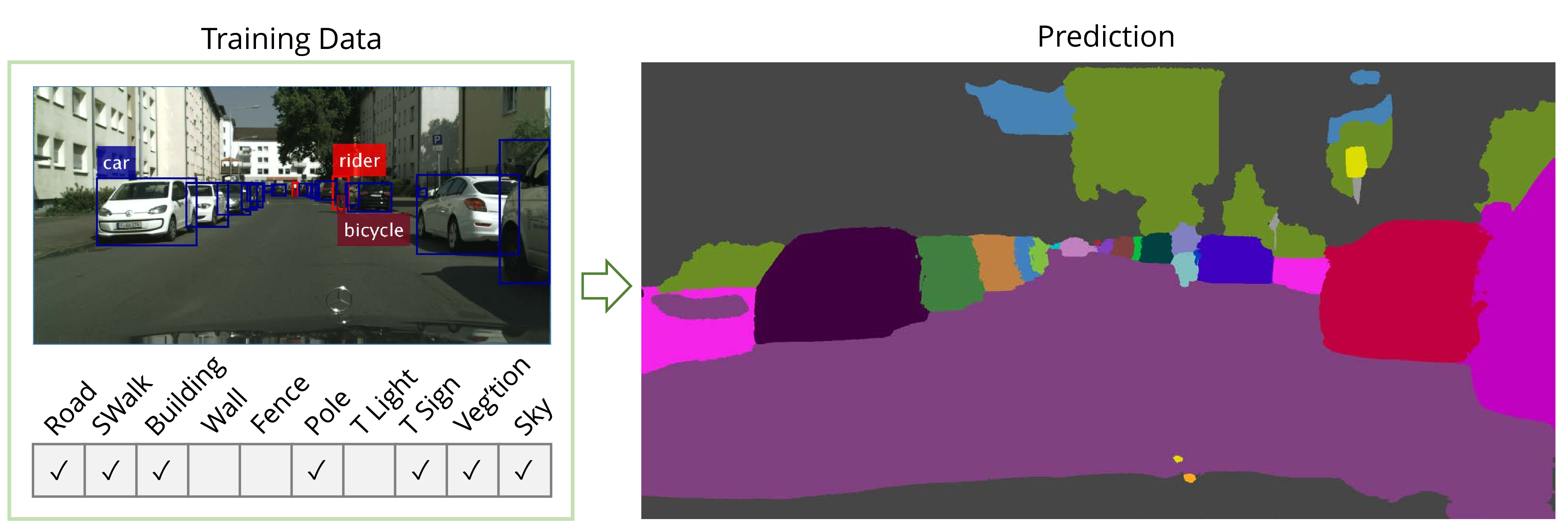

In our weakly-supervised panoptic segmentation experiments, our models are supervised by 1) image-level tags and 2) bounding boxes, as shown in the figure above. We used image-level tags as supervision for "stuff" classes which do not have a defined extent and cannot be described well by tight bounding boxes. For "thing" classes, we used bounding boxes as our weak supervision. This code release clarifies the implementation details of the method presented in the paper.

Iterative ground truth generation

For readers' convenience, we will give an outline of the proposed iterative ground truth generation pipeline, and provide demos for some of the key steps.

1. We train a multi-class classifier for all classes to obtain rough localisation cues. As it is not possible to fit an entire Cityscapes image (1024x2048) into a network due to GPU memory constraints, we took 15 fixed 400x500 crops per training image and derived their classification ground truth accordingly; these crops are used to train the multi-class classifier. From the trained classifier, we extract the Class Activation Maps (CAMs) using Grad-CAM, which, unlike the original CAM, is agnostic to network architecture.
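To make the cropping concrete, here is a minimal Python sketch. The text does not say where the 15 crops are placed or how their tags are derived, so the 3 x 5 evenly spaced grid, the function names `fixed_crop_boxes` and `crop_tags`, and the use of a trainId label map with ignore id 255 are all assumptions for illustration, not the released implementation.

```python
import numpy as np

CROP_H, CROP_W = 400, 500    # crop size stated in the text
GRID_ROWS, GRID_COLS = 3, 5  # assumed layout: 3 x 5 = 15 crops


def fixed_crop_boxes(img_h=1024, img_w=2048):
    """Return 15 (top, left, bottom, right) boxes on an evenly spaced grid."""
    tops = np.linspace(0, img_h - CROP_H, GRID_ROWS).round().astype(int)
    lefts = np.linspace(0, img_w - CROP_W, GRID_COLS).round().astype(int)
    return [(int(t), int(l), int(t) + CROP_H, int(l) + CROP_W)
            for t in tops for l in lefts]


def crop_tags(label_map, box, ignore_label=255):
    """Image-level tags of one crop: the sorted class ids present in it."""
    top, left, bottom, right = box
    ids = np.unique(label_map[top:bottom, left:right])
    return sorted(int(i) for i in ids if i != ignore_label)


# Toy label map: class 0 on the left half, class 13 in the bottom-right corner.
labels = np.full((1024, 2048), 255, dtype=np.uint8)
labels[:, :1024] = 0
labels[600:, 1500:] = 13

boxes = fixed_crop_boxes()
tags_per_crop = [crop_tags(labels, box) for box in boxes]
```

Each entry of `tags_per_crop` would then serve as the multi-label classification target for the corresponding crop.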

2. In parallel, we extract bounding box annotations from Cityscapes ground truth files, and then run MCG (a segment-proposal algorithm) and Grabcut (a classic foreground segmentation technique given a bounding-box prior) on the training images to generate foreground masks inside each annotated bounding box. The MCG and Grabcut masks are then merged: only regions where both agree receive the predicted label; all other regions are assigned an "ignore" label.

- The extracted bounding boxes (saved in .mat format) can be downloaded here. Alternatively, we also provide a demo script demo_instanceTrainId_to_dets.m and a batch script batch_instanceTrainId_to_dets.m for you to make them yourself. The demo is self-contained; however, before running the batch script, make sure to:

  1. Download the official Cityscapes scripts repository;

  2. Inside the above repository, navigate to cityscapesscripts/preparation and run

     python createTrainIdInstanceImgs.py

     This command requires the environment variable CITYSCAPES_DATASET=path/to/your/cityscapes/data/folder to be set. These two steps produce the *_instanceTrainIds.png files required by our batch script;

  3. Navigate back to this repository, and place/symlink your gtFine and gtCoarse folders inside the data/Cityscapes/ folder so that they are visible to our batch script.

- Please see here for details on MCG.

- We use the OpenCV implementation of Grabcut in our experiments.

- The merged M&G masks we produced are available for download here.
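The consensus rule for merging MCG and Grabcut masks can be sketched in a few lines of Python. This is only an illustration under stated assumptions: "agreement" is taken to mean that both methods mark a pixel as foreground, the ignore id 255 and the function name `merge_mcg_grabcut` are hypothetical, and overlapping boxes are simply overwritten in order. The masks we actually used are the ones provided for download.

```python
import numpy as np

IGNORE = 255  # assumed id of the "ignore" label


def merge_mcg_grabcut(mcg_fg, grabcut_fg, box, class_id, canvas):
    """Label pixels inside `box` where both foreground masks agree.

    mcg_fg, grabcut_fg: boolean HxW foreground masks.
    box: (top, left, bottom, right) of the annotated bounding box.
    Pixels inside the box without consensus are set to IGNORE.
    """
    top, left, bottom, right = box
    agree = mcg_fg[top:bottom, left:right] & grabcut_fg[top:bottom, left:right]
    canvas[top:bottom, left:right] = np.where(agree, class_id, IGNORE)
    return canvas


# Toy example: two partially overlapping foreground masks in one box.
h, w = 6, 8
mcg = np.zeros((h, w), dtype=bool)
mcg[1:5, 2:6] = True
grabcut = np.zeros((h, w), dtype=bool)
grabcut[2:5, 3:7] = True

canvas = np.full((h, w), IGNORE, dtype=np.uint8)
merged = merge_mcg_grabcut(mcg, grabcut, box=(1, 2, 5, 7), class_id=13, canvas=canvas)
```

Keeping only the intersection favours precision over recall, which suits labels that will be refined in later training iterations.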

3. The CAMs (step 1) and M&G masks (step 2) are merged to produce the ground truth needed to kick off iterative training. To see a demo of merging, navigate to the root folder of this repo in MATLAB and run:

demo_merge_cam_mandg;

When post-processing network predictions of images from the Cityscapes train_extra split, make sure to use the following settings:

opts.run_apply_bbox_prior = false;
opts.run_check_image_level_tags = false;
opts.save_ins = false;

These settings are needed because the coarse annotation provided on the train_extra split trades off recall for precision, leading to inaccurate bounding box coordinates and frequent false negatives. This also applies to step 5.

- The results from merging CAMs with M&G masks can be downloaded here.

4. Using the generated ground truth, weakly-supervised models can be trained in the same way as a fully-supervised model. When the training loss converges, we make dense predictions using the model and also save the prediction scores.

- An example of dense prediction made by a weakly-supervised model is included at results/pred_sem_raw/, and an example of the corresponding prediction scores is provided at results/pred_flat_feat/.

5. The prediction and prediction scores (and optionally, the M&G masks) are used to generate the ground truth labels for the next stage of iterative training. To see a demo of iterative ground truth generation, navigate to the root folder of this repo in MATLAB and run:

demo_make_iterative_gt;

The generated semantic and instance ground truth labels are saved at results/pred_sem_clean and results/pred_ins_clean respectively.

Please refer to scripts/get_opts.m for the options available. To reproduce the results presented in the paper, use the default setting, and set opts.run_merge_with_mcg_and_grabcut to false after five iterations of training, as the weakly supervised model by then produces better quality segmentation of "thing" classes than the original M&G masks.

6. Repeat steps 4 and 5 until the training loss no longer decreases.
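The overall control flow of the iterative scheme can be summarised with the following Python sketch. It is not part of the release: `train`, `predict` and `make_gt` are hypothetical callables standing in for network training, dense prediction and the ground truth generation script, and the stopping tolerance `tol` is an assumption. The sketch also takes one reading of "after five iterations", namely that M&G merging is switched off from the fifth ground truth generation onwards.

```python
def iterate_ground_truth(train, predict, make_gt, init_gt, max_iters=20, tol=1e-3):
    """Alternate training and ground truth regeneration until the loss plateaus."""
    gt, prev_loss, history = init_gt, float("inf"), []
    for it in range(1, max_iters + 1):
        model, loss = train(gt)              # train on current labels
        history.append(loss)
        if prev_loss - loss < tol:           # loss no longer decreases: stop
            break
        preds, scores = predict(model)       # dense predictions and their scores
        use_mandg = it < 5                   # drop M&G merging after five iterations
        gt = make_gt(preds, scores, merge_with_mcg_and_grabcut=use_mandg)
        prev_loss = loss
    return gt, history


# Dummy stand-ins so the control flow can be exercised without a network.
fake_losses = iter([1.0, 0.6, 0.4, 0.3, 0.25, 0.22, 0.2199, 0.2199])
mandg_flags = []


def fake_train(gt):
    return "model", next(fake_losses)


def fake_predict(model):
    return "preds", "scores"


def fake_make_gt(preds, scores, merge_with_mcg_and_grabcut):
    mandg_flags.append(merge_with_mcg_and_grabcut)
    return "gt"


final_gt, loss_history = iterate_ground_truth(
    fake_train, fake_predict, fake_make_gt, init_gt="cam_plus_mandg")
```

In practice each call to `train` is a full training run, so the loop is usually driven by hand rather than by a single script.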

Downloads

- Image crops and tags for training multi-class classifier:

  - Images

  - Ground truth tags

  - Lists

  - Semantic labels (provided for convenience; not to be used in training)

- CAMs:

- Extracted Cityscapes bounding boxes (.mat format):

- Merged MCG&Grabcut masks:

- CAMs merged with MCG&Grabcut masks:

Note that due to the file size limit set by BaiduYun, some of the larger files had to be split into several chunks in order to be uploaded. These files are named filename.zip.part##, where filename is the original file name excluding the extension, and ## is a two-digit part index. After you have downloaded all the parts, cd to the folder where they are saved, and use the following command to join them back together:

cat filename.zip.part* > filename.zip

The joining operation may take several minutes, depending on file size.

The above does not apply to files downloaded from Dropbox.
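On systems where cat is not available, the split BaiduYun parts can be joined with a short Python script instead. This is a minimal sketch, not part of the release; the function name `join_parts` is hypothetical, and it relies on the two-digit part index so that sorting the file names alphabetically gives the correct order.

```python
import shutil
import tempfile
from pathlib import Path


def join_parts(folder, filename):
    """Concatenate filename.zip.part## files in `folder` into filename.zip."""
    folder = Path(folder)
    parts = sorted(folder.glob(f"{filename}.zip.part*"))  # part00, part01, ...
    if not parts:
        raise FileNotFoundError(f"no parts found for {filename}.zip in {folder}")
    out_path = folder / f"{filename}.zip"
    with open(out_path, "wb") as dst:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, dst)  # stream to avoid loading whole parts
    return out_path


# Small self-check with dummy parts in a temporary folder.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "demo.zip.part01").write_bytes(b"cd")
    (Path(tmp) / "demo.zip.part00").write_bytes(b"ab")
    joined_bytes = join_parts(tmp, "demo").read_bytes()
```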

Reference

If you find the code helpful in your research, please cite our paper:

@InProceedings{Li_2018_ECCV,
  author = {Li, Qizhu and Arnab, Anurag and Torr, Philip H.S.},
  title = {Weakly- and Semi-Supervised Panoptic Segmentation},
  booktitle = {The European Conference on Computer Vision (ECCV)},
  month = {September},
  year = {2018}
}

Questions

Please contact Qizhu Li [email protected] and Anurag Arnab [email protected] for enquiries, issues, and suggestions.