amirgholami / Zeroq


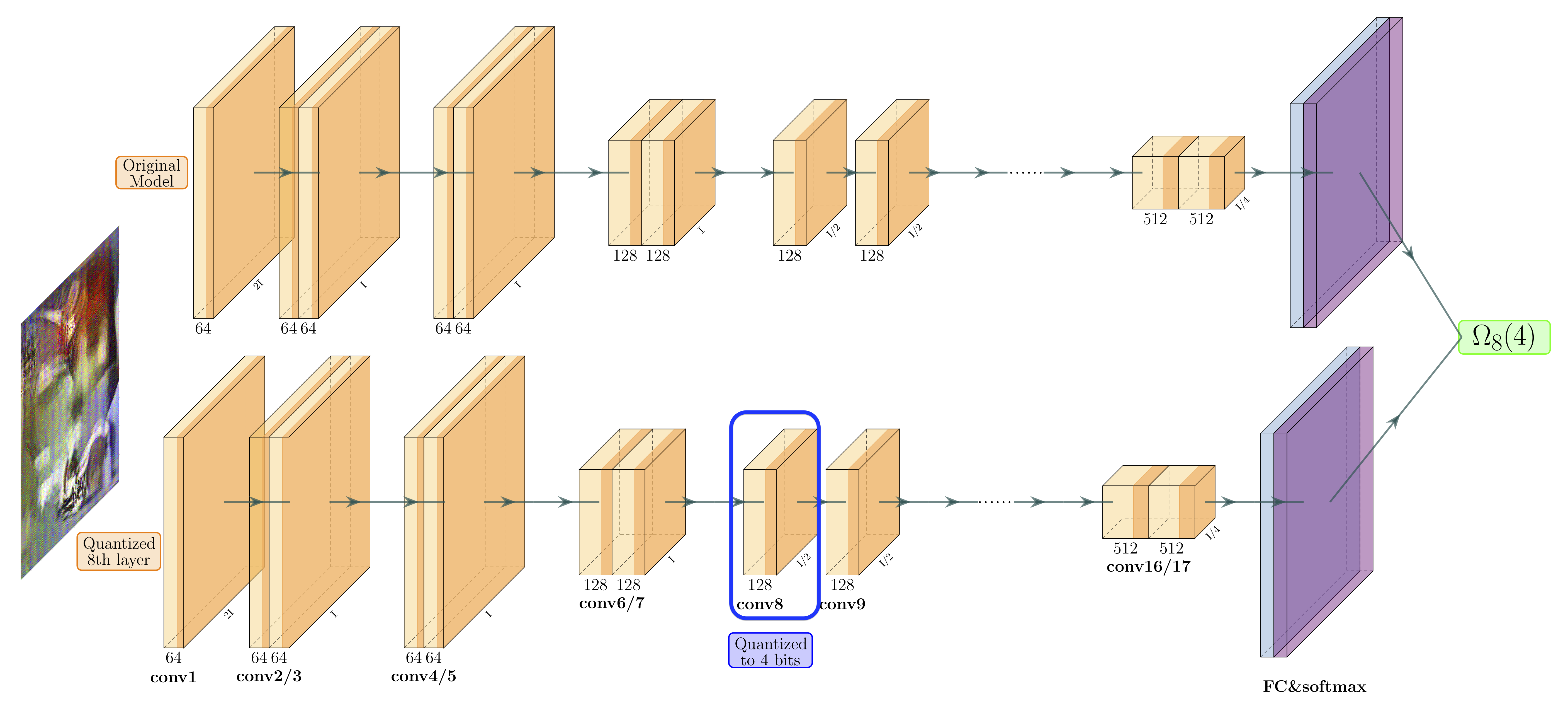

ZeroQ: A Novel Zero-Shot Quantization Framework

Introduction

This repository contains the PyTorch implementation of the CVPR 2020 paper ZeroQ: A Novel Zero-Shot Quantization Framework. Below are instructions for reproducing the classification results; see the detection README for instructions on reproducing the object detection results.

You can find a short video explanation of ZeroQ here.

TL;DR

```bash
# Code is based on PyTorch 1.2 (CUDA 10). Other dependencies can be installed as follows:
cd classification
pip install -r requirements.txt --user

# Set a symbolic link to the ImageNet validation data (used only to evaluate the model)
mkdir data
ln -s /path/to/imagenet/ data/
```

The folder structure should look like the following:

```
zeroq
├── utils
├── data
│   ├── imagenet
│   │   ├── val
```
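To sanity-check the layout before running anything, the short script below reports which of the expected directories are missing. It is a hypothetical helper, not part of the repository; it assumes you run it from the directory that contains `utils/` and `data/`, and it only checks that the directories exist, not what is inside them.

```python
from pathlib import Path
from typing import List

# Directories expected by the tree above, relative to the project root.
EXPECTED_DIRS = ["utils", "data/imagenet/val"]


def check_layout(root: str = ".") -> List[str]:
    """Return the expected directories (relative to root) that are missing."""
    base = Path(root)
    # Path.is_dir() follows symlinks, so a symlinked data/imagenet counts.
    return [p for p in EXPECTED_DIRS if not (base / p).is_dir()]


if __name__ == "__main__":
    missing = check_layout()
    if missing:
        print("Missing directories:", ", ".join(missing))
    else:
        print("Layout looks good.")
```

Because `is_dir()` resolves symbolic links, the check passes when `data/imagenet` is the symlink created by `ln -s` rather than a real directory.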

Afterwards, you can test zero-shot quantization with W8A8 by running:

```bash
bash run.sh
```

Below are the results you should get for 8-bit quantization (W8A8 refers to quantizing the model to 8-bit weights and 8-bit activations).
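To make "8-bit weights and 8-bit activations" concrete, here is a minimal sketch of per-tensor asymmetric (min/max) uniform quantization in plain Python. It is a generic textbook scheme assumed for illustration; the quantizer actually used by `uniform_test.py` may differ, for example in using per-channel ranges or in how activation ranges are chosen. The function names are hypothetical.

```python
from typing import List, Tuple


def quantize_uniform(values: List[float], num_bits: int = 8) -> Tuple[List[int], float, int]:
    """Asymmetric min/max uniform quantization of one tensor to unsigned ints."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Extend the range so that 0.0 is exactly representable.
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    # Step size between adjacent integer levels (guard against an all-zero input).
    scale = (hi - lo) / (qmax - qmin) or 1.0
    # Integer that represents the real value 0.0.
    zero_point = int(round(qmin - lo / scale))
    q = [min(qmax, max(qmin, int(round(v / scale)) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map integer levels back to approximate real values."""
    return [(x - zero_point) * scale for x in q]


if __name__ == "__main__":
    weights = [-0.5, 0.0, 0.25, 1.0]
    q, scale, zp = quantize_uniform(weights, num_bits=8)
    print(q, zp)
    print(dequantize(q, scale, zp))
```

With `num_bits=8` every value is mapped to one of 256 integer levels and recovered as `(q - zero_point) * scale`; the same function applies to weights and, once a range has been chosen, to activations.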

| Model | Single Precision Top-1 (%) | W8A8 Top-1 (%) |
|---|---|---|
| ResNet18 | 71.47 | 71.43 |
| ResNet50 | 77.72 | 77.67 |
| InceptionV3 | 78.88 | 78.72 |
| MobileNetV2 | 73.03 | 72.91 |
| ShuffleNet | 65.07 | 64.94 |
| SqueezeNext | 69.38 | 69.17 |
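The accuracy cost of W8A8 quantization can be read off the table directly. The snippet below simply recomputes the Top-1 drop (single precision minus W8A8, in percentage points) from the numbers above; `RESULTS` and `top1_drop` are illustrative names, not part of the repository.

```python
from typing import Dict, Tuple

# Top-1 accuracies (%) copied from the table: (single precision, W8A8).
RESULTS: Dict[str, Tuple[float, float]] = {
    "ResNet18": (71.47, 71.43),
    "ResNet50": (77.72, 77.67),
    "InceptionV3": (78.88, 78.72),
    "MobileNetV2": (73.03, 72.91),
    "ShuffleNet": (65.07, 64.94),
    "SqueezeNext": (69.38, 69.17),
}


def top1_drop(results: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Accuracy lost by quantization, rounded to two decimals."""
    return {name: round(fp - q, 2) for name, (fp, q) in results.items()}


if __name__ == "__main__":
    for name, drop in top1_drop(RESULTS).items():
        print(f"{name:12s} {drop:.2f}")
```

Every listed model loses between 0.04 (ResNet18) and 0.21 (SqueezeNext) points of Top-1 accuracy.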

Evaluate

- You can test a single model using the following command:

```bash
export CUDA_VISIBLE_DEVICES=0
python uniform_test.py [--dataset] [--model] [--batch_size] [--test_batch_size]
```

Optional arguments:

```
--dataset           type of dataset (default: imagenet)
--model             model to be quantized (default: resnet18)
--batch_size        batch size of distilled data (default: 64)
--test_batch_size   batch size of test data (default: 512)
```
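The distilled-data batch size refers to the synthetic images ZeroQ generates in place of real training data. In the paper, this Distilled Data is obtained by optimizing random inputs so that, at every batch-normalization layer, the per-channel mean and standard deviation of the activations match the running statistics stored in that layer, i.e. by minimizing a sum of squared L2 distances between the two. The plain-Python sketch below only evaluates that statistics-matching loss for given activations; it is an illustration under these assumptions, not the repository's code, which works on PyTorch tensors, backpropagates the loss into the inputs, and may normalize the terms differently. All names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# One BN layer: (activations per channel, running mean, running std).
# running std is assumed to be sqrt(running_var) taken from the BN layer.
Layer = Tuple[Sequence[Sequence[float]], Sequence[float], Sequence[float]]


def channel_stats(acts: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """acts[c] holds all activation values of channel c (batch and spatial dims flattened)."""
    means, stds = [], []
    for values in acts:
        m = sum(values) / len(values)
        var = sum((v - m) ** 2 for v in values) / len(values)  # biased variance
        means.append(m)
        stds.append(math.sqrt(var))
    return means, stds


def bn_stat_loss(layers: Sequence[Layer]) -> float:
    """Sum over BN layers of ||mean - running_mean||^2 + ||std - running_std||^2."""
    total = 0.0
    for acts, running_mean, running_std in layers:
        means, stds = channel_stats(acts)
        total += sum((a - b) ** 2 for a, b in zip(means, running_mean))
        total += sum((a - b) ** 2 for a, b in zip(stds, running_std))
    return total
```

A loss of zero means the synthetic batch reproduces the stored statistics exactly; in the actual method the gradient of this loss with respect to the input images drives the data generation.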

Citation

ZeroQ was developed as part of the following paper. We would appreciate it if you cite it if you find the implementation useful for your work:

```bibtex
@inproceedings{cai2020zeroq,
  title={Zeroq: A novel zero shot quantization framework},
  author={Cai, Yaohui and Yao, Zhewei and Dong, Zhen and Gholami, Amir and Mahoney, Michael W and Keutzer, Kurt},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={13169--13178},
  year={2020}
}
```