9volt

A modern, distributed monitoring system written in Go.

Another monitoring system? Why?

While there are a bunch of solutions for monitoring and alerting using time series data, there aren't many (or any?) modern solutions for 'regular'/'old-skool' remote monitoring similar to Nagios and Icinga.

9volt offers the following things out of the box:

- Single binary deploy

- Fully distributed

- Incredibly easy to scale to hundreds of thousands of checks

- Uses etcd for all configuration

- Real-time configuration pick-up (update etcd and 9volt immediately picks up the change)

- Support for assigning checks to specific (groups of) nodes

- Helpful for getting around network restrictions (or requiring certain checks to run from a specific region)

- Interval-based monitoring (i.e. run check XYZ every 1s, 1y, 1d or even 1ms)

- Natively supported monitors:

- TCP

- HTTP

- Exec

- DNS

- Natively supported alerters:

- Slack

- PagerDuty

- RESTful API for querying current monitoring state and loaded configuration

- Comes with a built-in, React-based UI that provides another way to view and manage the cluster

- Access the UI by going to http://hostname:8080/ui

- Comes with a built-in monitor and alerter config management util (that parses and syncs YAML-based configs to etcd)

./9volt cfg --help

Usage

- Install/setup etcd

- Download latest 9volt release

- Start server:

./9volt server -e http://etcd-server-1.example.com:2379 -e http://etcd-server-2.example.com:2379 -e http://etcd-server-3.example.com:2379

- Optional: use 9volt cfg for managing configs

- Optional: add 9volt to be managed by supervisord, upstart or some other process manager

- Optional: Several configuration params can be passed to 9volt via env vars

... or, if you prefer to do things via Docker, check out these docs.

H/A and scaling

Scaling 9volt is incredibly simple. Launch another 9volt service on a separate host and point it to the same etcd hosts as the main 9volt service.

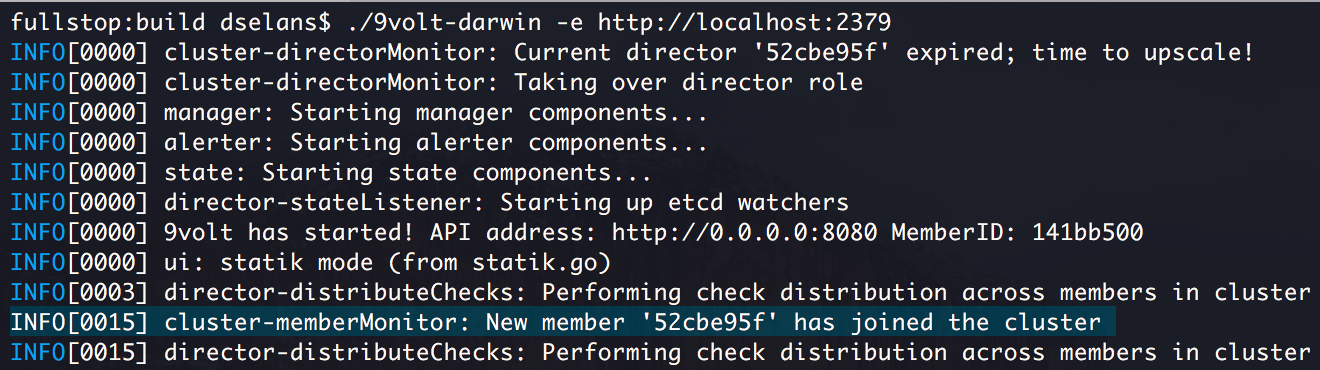

Your main 9volt node will produce output similar to this when it detects a node join:

Checks will be automatically divided between all 9volt instances.

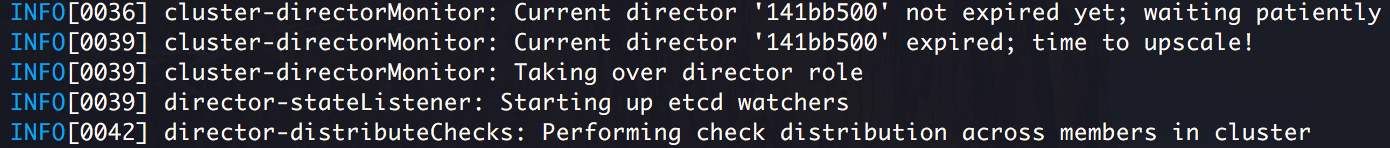
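To make the division of checks concrete, here is a minimal sketch that spreads a list of checks across the current cluster members and recomputes the assignment when the member list changes. The round-robin strategy, the function name distribute, and the check and node names are all assumptions made for illustration. This README does not describe the algorithm 9volt actually uses.

```go
package main

import (
	"fmt"
	"sort"
)

// distribute assigns check names to member nodes round-robin. Both inputs are
// copied and sorted first so that every caller computes the same assignment.
// Round-robin is an assumption of this sketch, not 9volt's documented behavior.
func distribute(checks, members []string) map[string][]string {
	assignment := make(map[string][]string, len(members))
	if len(members) == 0 {
		return assignment
	}
	sortedChecks := append([]string(nil), checks...)
	sortedMembers := append([]string(nil), members...)
	sort.Strings(sortedChecks)
	sort.Strings(sortedMembers)
	for i, check := range sortedChecks {
		m := sortedMembers[i%len(sortedMembers)]
		assignment[m] = append(assignment[m], check)
	}
	return assignment
}

func main() {
	// Hypothetical check names.
	checks := []string{"http-web", "tcp-db", "dns-internal", "exec-backup", "http-api"}

	// Three nodes share the checks.
	fmt.Println(distribute(checks, []string{"node-a", "node-b", "node-c"}))

	// One node leaves: the same checks are re-divided among the remaining two.
	fmt.Println(distribute(checks, []string{"node-a", "node-c"}))
}
```

Recomputing the whole assignment from the sorted member list is the simplest approach, but it can move checks between nodes that did not change. A real cluster may prefer a scheme that minimizes such movement.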

If one of the nodes goes down, a new leader is elected (if the failed node was the leader) and checks are redistributed among the remaining nodes.

This will produce output similar to this (and will also be available in the event stream via the API and UI):

API

API documentation can be found here.

Minimum requirements (can handle ~1,000-3,000 <10s interval checks)

- 1 x 9volt instance (1 core, 256MB RAM)

- 1 x etcd node (1 core, 256MB RAM)

Note: In the minimum configuration, you could run both 9volt and etcd on the same node.

Recommended (production) requirements (can handle 10,000+ <10s interval checks)

- 3 x 9volt instances (2+ cores, 512MB RAM)

- 3 x etcd nodes (2+ cores, 1GB RAM)

Configuration

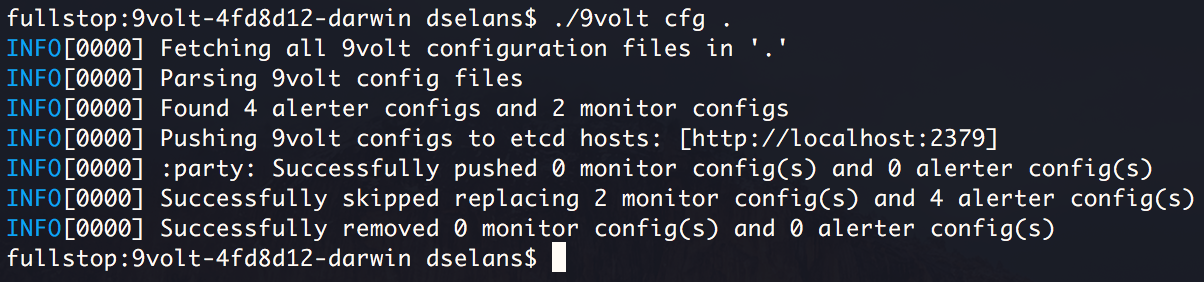

While you can manage 9volt alerter and monitor configs via the API, another approach to config management is to use the built-in config utility (9volt cfg <flags>).

This utility allows you to scan a given directory for any YAML files that resemble 9volt configs (the file must contain either 'monitor' or 'alerter' sections) and it will automatically parse, validate and push them to your etcd server(s).

By default, the utility keeps your local configs in sync with your etcd server(s): if it finds a config in etcd that does not exist locally, it removes that entry from etcd (and vice versa). This behavior can be turned off by passing the --nosync flag.
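The sync behavior described above amounts to a set comparison between the configs found on disk and the entries already in etcd. The sketch below computes such a plan in memory. The function name syncPlan, the key names, and the assumption that local configs are still pushed when syncing is disabled are all hypothetical. It does not talk to etcd and is not the utility's actual code.

```go
package main

import (
	"fmt"
	"sort"
)

// syncPlan compares config names found locally with names already in etcd.
// It returns the names to push (every local config, de-duplicated) and the
// names to remove (present remotely but missing locally). With noSync set,
// nothing is removed, mirroring the described effect of --nosync.
func syncPlan(local, remote []string, noSync bool) (push, remove []string) {
	localSet := make(map[string]bool, len(local))
	for _, name := range local {
		if !localSet[name] {
			localSet[name] = true
			push = append(push, name)
		}
	}
	if !noSync {
		for _, name := range remote {
			if !localSet[name] {
				remove = append(remove, name)
			}
		}
	}
	sort.Strings(push)
	sort.Strings(remove)
	return push, remove
}

func main() {
	// Hypothetical key names; the real etcd key layout may differ.
	local := []string{"monitor/http-web", "alerter/slack-ops"}
	remote := []string{"monitor/http-web", "monitor/old-tcp-check"}

	push, remove := syncPlan(local, remote, false)
	fmt.Println("push:", push, "remove:", remove)

	// Same inputs with syncing disabled: stale remote entries are left alone.
	push, remove = syncPlan(local, remote, true)
	fmt.Println("push:", push, "remove:", remove)
}
```

Computing the plan separately from applying it makes it easy to print or review what would be removed before any entry in etcd is touched.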

You can look at an example of a YAML based config here.

Docs

Read through the docs dir.

Suggestions/ideas

Got a suggestion/idea? Something that is preventing you from using 9volt over another monitoring system because of a missing feature? Submit an issue and we'll see what we can do!