shangjingbo1226 / Autoner

Licence: apache-2.0

Learning Named Entity Tagger from Domain-Specific Dictionary

Stars: ✭ 357

Projects that are alternatives of or similar to Autoner

Cluener2020

CLUENER2020: Chinese Fine-Grained Named Entity Recognition

Stars: ✭ 689 (+93%)

Mutual labels: named-entity-recognition, ner, sequence-labeling

Ld Net

Efficient Contextualized Representation: Language Model Pruning for Sequence Labeling

Stars: ✭ 148 (-58.54%)

Mutual labels: named-entity-recognition, ner, sequence-labeling

Named entity recognition

Chinese named entity recognition (including implementations of multiple models: HMM, CRF, BiLSTM, and BiLSTM+CRF)

Stars: ✭ 995 (+178.71%)

Mutual labels: named-entity-recognition, ner, sequence-labeling

Kashgari

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Stars: ✭ 2,235 (+526.05%)

Mutual labels: named-entity-recognition, ner, sequence-labeling

Ncrfpp

NCRF++, a Neural Sequence Labeling Toolkit. Easy to use for any sequence labeling task (e.g., NER, POS tagging, segmentation). It includes character LSTM/CNN, word LSTM/CNN, and softmax/CRF components.

Stars: ✭ 1,767 (+394.96%)

Mutual labels: named-entity-recognition, ner, sequence-labeling

CrossNER

CrossNER: Evaluating Cross-Domain Named Entity Recognition (AAAI-2021)

Stars: ✭ 87 (-75.63%)

Mutual labels: named-entity-recognition, ner, sequence-labeling

NER corpus chinese

Chinese NER (named entity recognition) corpora, all in one place

Stars: ✭ 102 (-71.43%)

Mutual labels: named-entity-recognition, ner

huner

Named Entity Recognition for biomedical entities

Stars: ✭ 44 (-87.68%)

Mutual labels: named-entity-recognition, ner

NER-Multimodal-pytorch

Pytorch Implementation of "Adaptive Co-attention Network for Named Entity Recognition in Tweets" (AAAI 2018)

Stars: ✭ 42 (-88.24%)

Mutual labels: named-entity-recognition, ner

Hscrf Pytorch

ACL 2018: Hybrid semi-Markov CRF for Neural Sequence Labeling (http://aclweb.org/anthology/P18-2038)

Stars: ✭ 284 (-20.45%)

Mutual labels: ner, sequence-labeling

ner-d

Python module for Named Entity Recognition (NER) using natural language processing.

Stars: ✭ 14 (-96.08%)

Mutual labels: named-entity-recognition, ner

CrowdLayer

A neural network layer that enables training of deep neural networks directly from crowdsourced labels (e.g. from Amazon Mechanical Turk) or, more generally, labels from multiple annotators with different biases and levels of expertise.

Stars: ✭ 45 (-87.39%)

Mutual labels: named-entity-recognition, sequence-labeling

Bert Bilstm Crf Ner

TensorFlow solution for the NER task using a BiLSTM-CRF model with Google BERT fine-tuning and private server services

Stars: ✭ 3,838 (+975.07%)

Mutual labels: named-entity-recognition, ner

react-taggy

A simple zero-dependency React component for tagging user-defined entities within a block of text.

Stars: ✭ 29 (-91.88%)

Mutual labels: named-entity-recognition, ner

mitie-ruby

Named-entity recognition for Ruby

Stars: ✭ 77 (-78.43%)

Mutual labels: named-entity-recognition, ner

fairseq-tagging

a Fairseq fork for sequence tagging/labeling tasks

Stars: ✭ 26 (-92.72%)

Mutual labels: ner, sequence-labeling

presidio-research

This package features data-science related tasks for developing new recognizers for Presidio. It is used for the evaluation of the entire system, as well as for evaluating specific PII recognizers or PII detection models.

Stars: ✭ 62 (-82.63%)

Mutual labels: named-entity-recognition, ner

Chatbot ner

chatbot_ner: Named Entity Recognition for chatbots.

Stars: ✭ 273 (-23.53%)

Mutual labels: named-entity-recognition, ner

Phobert

PhoBERT: Pre-trained language models for Vietnamese (EMNLP-2020 Findings)

Stars: ✭ 332 (-7%)

Mutual labels: named-entity-recognition, ner

AutoNER

Check Our New NER Toolkit🚀🚀🚀

- Inference:
  - LightNER: efficient inference with models pre-trained or trained with any of the following tools.
- Training:
  - LD-Net: train NER models with efficient contextualized representations.
  - VanillaNER: train vanilla NER models with pre-trained embeddings.
- Distant Training:
  - AutoNER: train NER models without line-by-line annotations and get competitive performance.

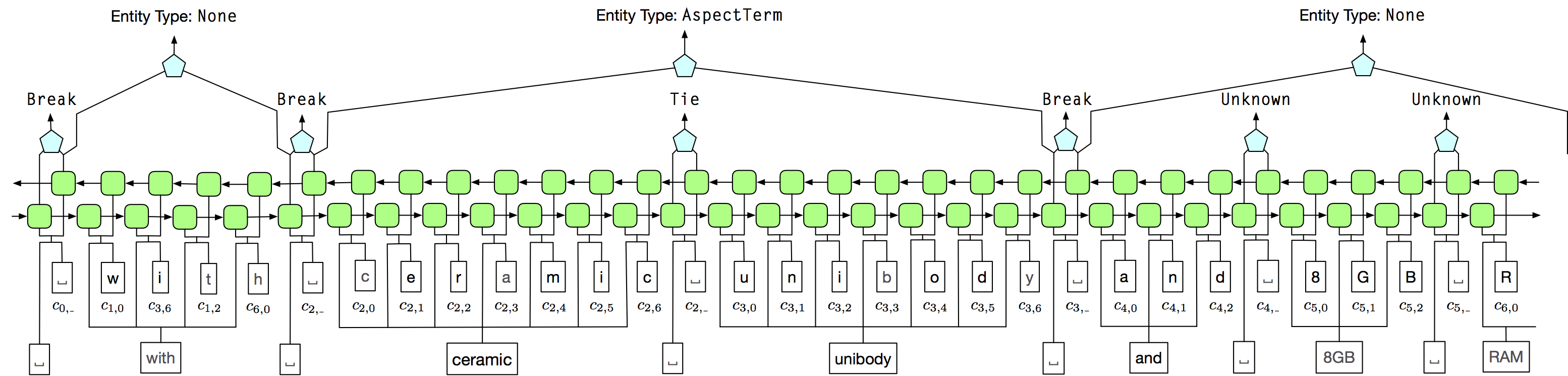

Without line-by-line annotations, AutoNER trains named entity taggers through distant supervision.

Details about AutoNER can be accessed at: https://arxiv.org/abs/1809.03599
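To make "distant supervision" concrete, the sketch below shows the simplest possible distant labeler: greedy longest-match lookup of a typed dictionary over a tokenized sentence, which is roughly what the Dictionary Match baseline in the benchmarks does. It is not AutoNER's implementation; the function name `dictionary_match`, the `max_len` window, and the toy dictionary are all hypothetical.

```python
from typing import Dict, List, Tuple

# Toy typed dictionary (hypothetical): tokenized surface -> entity type.
CoreDict = Dict[Tuple[str, ...], str]


def dictionary_match(tokens: List[str], core: CoreDict,
                     max_len: int = 6) -> List[Tuple[int, int, str]]:
    """Greedy longest-match labeling of `tokens` against `core`.

    Returns (start, end, type) spans, with `end` exclusive. Tokens that no
    dictionary entry covers stay unlabeled.
    """
    spans: List[Tuple[int, int, str]] = []
    i = 0
    while i < len(tokens):
        match = None
        # Try the longest window first, so a multi-token entry wins over
        # any shorter entry that starts at the same token.
        for n in range(min(max_len, len(tokens) - i), 0, -1):
            key = tuple(tokens[i:i + n])
            if key in core:
                match = (i, i + n, core[key])
                break
        if match is None:
            i += 1
        else:
            spans.append(match)
            i = match[1]  # skip past the matched span
    return spans


if __name__ == "__main__":
    core = {("heart", "failure"): "Disease", ("aspirin",): "Chemical"}
    sentence = "aspirin may reduce heart failure risk".split()
    print(dictionary_match(sentence, core))
    # [(0, 1, 'Chemical'), (3, 5, 'Disease')]
```

Every span such a matcher emits comes straight from the dictionary, so its labels tend to be precise, but any entity missing from the dictionary is never labeled. The benchmarks show exactly this pattern for Dictionary Match: high precision, low recall.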

Model Notes

Benchmarks

| Method | Precision | Recall | F1 |
|---|---|---|---|
| Supervised Benchmark | 88.84 | 85.16 | 86.96 |
| Dictionary Match | 93.93 | 58.35 | 71.98 |
| Fuzzy-LSTM-CRF | 88.27 | 76.75 | 82.11 |
| AutoNER | 88.96 | 81.00 | 84.80 |
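The F1 column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short check below recomputes it from the table's own P and R columns. Because those inputs are already rounded to two decimals, the recomputed value can differ from the reported one in the last digit; AutoNER, for instance, comes out at 84.79 against the reported 84.80.

```python
# Precision, recall, and reported F1 (all in %) copied from the benchmark table.
RESULTS = {
    "Supervised Benchmark": (88.84, 85.16, 86.96),
    "Dictionary Match": (93.93, 58.35, 71.98),
    "Fuzzy-LSTM-CRF": (88.27, 76.75, 82.11),
    "AutoNER": (88.96, 81.00, 84.80),
}


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    for method, (p, r, reported) in RESULTS.items():
        print(f"{method:<22} recomputed {f1(p, r):.2f}  reported {reported:.2f}")
```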

Training

Required Inputs

- Tokenized Raw Texts
  - Example: data/BC5CDR/raw_text.txt
    - One token per line.
    - An empty line means the end of a sentence.

- Two Dictionaries
  - Core Dictionary w/ Type Info
    - Example: data/BC5CDR/dict_core.txt
      - Two columns (i.e., Type, Tokenized Surface) per line.
      - Tab separated.
    - How to obtain?
      - From domain-specific dictionaries.
  - Full Dictionary w/o Type Info
    - Example: data/BC5CDR/dict_full.txt
      - One tokenized high-quality phrase per line.
    - How to obtain?
      - From domain-specific dictionaries.
      - By applying the high-quality phrase mining tool (AutoPhrase) to a domain-specific corpus.

- Pre-trained word embeddings
  - Train your own or download from the web.
  - The example run uses embedding/bio_embedding.txt, which can be downloaded from our group's server, for example with `curl http://dmserv4.cs.illinois.edu/bio_embedding.txt -o embedding/bio_embedding.txt`. Since the embedding encoding step consumes quite a lot of memory, we also provide the encoded file in autoner_train.sh.

- [Optional] Development & Test Sets
  - Example: data/BC5CDR/truth_dev.ck and data/BC5CDR/truth_test.ck
    - Three columns (i.e., token, `Tie or Break` label, entity type).
      - `I` is `Break`.
      - `O` is `Tie`.
    - Two special tokens, `<s>` and `<eof>`, mean the start and end of the sentence.
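The input formats above are simple enough to load with a few lines of Python, which is handy for sanity-checking your own files before training. The sketch below is a hypothetical reader, not code from the AutoNER repository; the function names are illustrative, and it assumes UTF-8 text with whitespace-tokenized surfaces in both dictionaries.

```python
import io
from typing import Dict, List, Set, TextIO, Tuple


def read_raw_text(f: TextIO) -> List[List[str]]:
    """Tokenized raw text: one token per line, an empty line ends a sentence."""
    sentences: List[List[str]] = []
    current: List[str] = []
    for line in f:
        token = line.strip()
        if token:
            current.append(token)
        elif current:
            sentences.append(current)
            current = []
    if current:  # the file may not end with an empty line
        sentences.append(current)
    return sentences


def read_core_dict(f: TextIO) -> Dict[Tuple[str, ...], Set[str]]:
    """Core dictionary: 'Type<TAB>Tokenized Surface' per line.

    A surface listed under several types keeps all of them (an assumption;
    the README does not say how such duplicates are handled).
    """
    core: Dict[Tuple[str, ...], Set[str]] = {}
    for line in f:
        line = line.rstrip("\n")
        if not line.strip():
            continue
        # Raises ValueError if a line has no tab separator.
        entity_type, surface = line.split("\t", 1)
        core.setdefault(tuple(surface.split()), set()).add(entity_type)
    return core


def read_full_dict(f: TextIO) -> Set[Tuple[str, ...]]:
    """Full dictionary: one tokenized high-quality phrase per line, no type."""
    return {tuple(line.split()) for line in f if line.strip()}


if __name__ == "__main__":
    # In practice, pass open("data/BC5CDR/raw_text.txt", encoding="utf-8"), etc.
    raw = io.StringIO("Aspirin\nreduces\nheart\nfailure\n.\n\nNo\nrisk\n.\n")
    core = io.StringIO("Chemical\taspirin\nDisease\theart failure\n")
    full = io.StringIO("aspirin\nheart failure\nrisk factor\n")
    print(read_raw_text(raw))
    print(read_core_dict(core))
    print(read_full_dict(full))
```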

Dependencies

This project is based on Python >= 3.6. Its dependencies are listed below:

numpy==1.13.1

tqdm

torch-scope>=0.5.0

pytorch==0.4.1

Command

To train an AutoNER model, please run

./autoner_train.sh

To apply the trained AutoNER model, please run

./autoner_test.sh

You can specify the parameters in the bash files. The variable names are self-explanatory.

Citation

Please cite the following two papers if you are using our tool. Thanks!

- Jingbo Shang*, Liyuan Liu*, Xiaotao Gu, Xiang Ren, Teng Ren and Jiawei Han, "Learning Named Entity Tagger using Domain-Specific Dictionary", in Proc. of 2018 Conf. on Empirical Methods in Natural Language Processing (EMNLP'18), Brussels, Belgium, Oct. 2018. (* Equal Contribution)

- Jingbo Shang, Jialu Liu, Meng Jiang, Xiang Ren, Clare R Voss, Jiawei Han, "Automated Phrase Mining from Massive Text Corpora", accepted by IEEE Transactions on Knowledge and Data Engineering, Feb. 2018.

@inproceedings{shang2018learning,
  title = {Learning Named Entity Tagger using Domain-Specific Dictionary},
  author = {Shang, Jingbo and Liu, Liyuan and Ren, Xiang and Gu, Xiaotao and Ren, Teng and Han, Jiawei},
  booktitle = {EMNLP},
  year = 2018,
}

@article{shang2018automated,
  title = {Automated phrase mining from massive text corpora},
  author = {Shang, Jingbo and Liu, Jialu and Jiang, Meng and Ren, Xiang and Voss, Clare R and Han, Jiawei},
  journal = {IEEE Transactions on Knowledge and Data Engineering},
  year = {2018},
  publisher = {IEEE}
}
