LiyuanLucasLiu / Ld Net

LD-Net

Check Our New NER Toolkit🚀🚀🚀

- Inference:
  - LightNER: efficient inference with models pre-trained or trained with any of the following tools.
- Training:
  - LD-Net: train NER models with efficient contextualized representations.
  - VanillaNER: train vanilla NER models with pre-trained embeddings.
- Distant Training:
  - AutoNER: train NER models without line-by-line annotations and still get competitive performance.

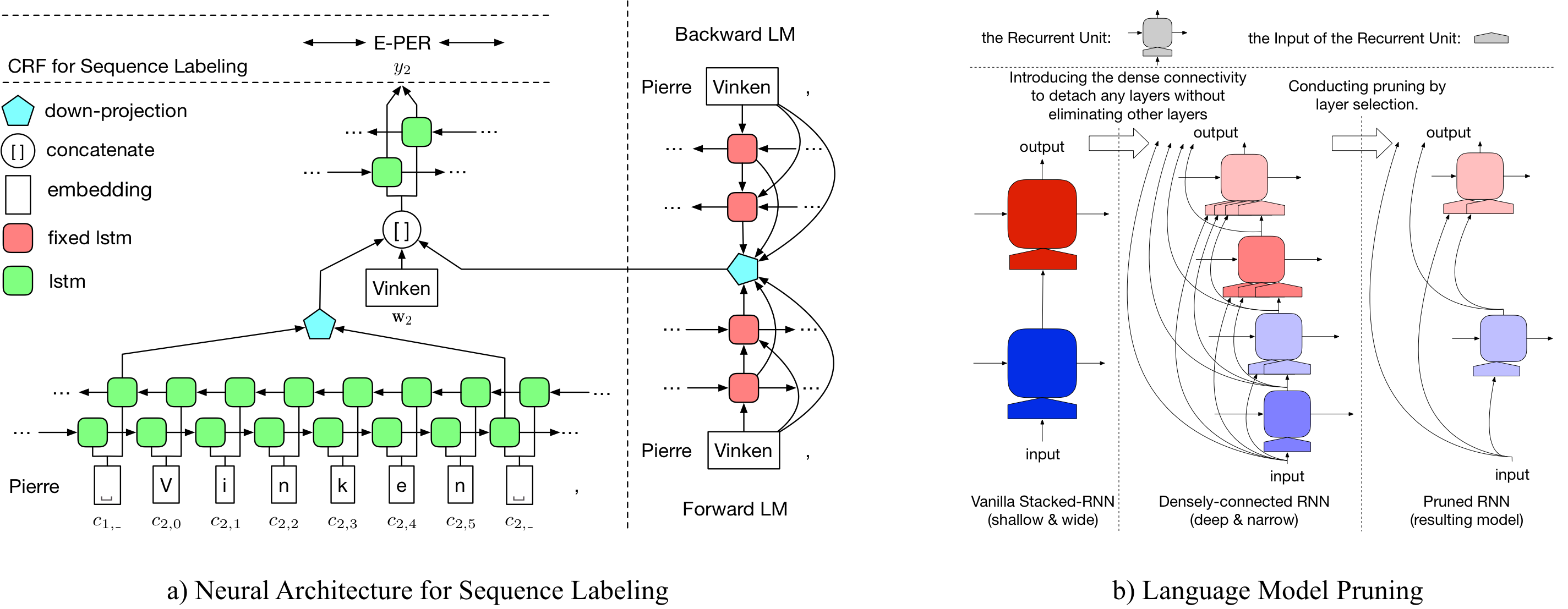

LD-Net provides sequence labeling models featuring:

- Efficiency: constructing efficient contextualized representations without retraining language models.

- Portability: well-organized, easy-to-modify and well-documented.

Remarkably, our pre-trained NER model achieves:

- 92.08 test F1 on the CoNLL03 NER task.

- 160K words/sec decoding speed (6X speedup compared to its original model).
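Decoding speed in words/sec is simply the number of tokens tagged divided by wall-clock time. The sketch below shows one way to measure it. `tag_fn` stands for any hypothetical tagging function (it is not part of the LD-Net API), and the number it reports depends heavily on hardware and batching, so it will not reproduce the 160K words/sec figure on its own.

```python
import time
from typing import Callable, List, Sequence


def words_per_second(
    tag_fn: Callable[[Sequence[str]], List[str]],
    sentences: Sequence[Sequence[str]],
) -> float:
    """Return tokens tagged per second of wall-clock time.

    `tag_fn` is a hypothetical tagger mapping a tokenized sentence to one
    label per token; substitute a real model's decoding call to benchmark it.
    """
    num_words = sum(len(sentence) for sentence in sentences)
    start = time.perf_counter()
    for sentence in sentences:
        tags = tag_fn(sentence)
        if len(tags) != len(sentence):
            raise ValueError("tagger must return exactly one label per token")
    elapsed = time.perf_counter() - start
    # Guard against a zero reading from a coarse clock on tiny inputs.
    return num_words / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Dummy tagger that labels every token "O" (outside any entity).
    def dummy(sentence):
        return ["O"] * len(sentence)

    data = [["EU", "rejects", "German", "call"]] * 10000
    print(f"{words_per_second(dummy, data):,.0f} words/sec")
```

This loop tags one sentence at a time; a realistic benchmark would batch sentences and, on a GPU, make sure pending work has finished before reading the clock.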

Details about LD-Net are available in our paper: https://arxiv.org/abs/1804.07827.

Model Notes

Benchmarks

| Model for CoNLL03 | #FLOPs | Mean(F1) | Std(F1) |
|---|---|---|---|
| Vanilla NER without LM | 3 M | 90.78 | 0.24 |
| LD-Net (without pruning) | 51 M | 91.86 | 0.15 |
| LD-Net (original, selected by dev F1) | 51 M | 91.95 | |
| LD-Net (pruned) | 5 M | 91.84 | 0.14 |

| Model for CoNLL00 | #FLOPs | Mean(F1) | Std(F1) |
|---|---|---|---|
| Vanilla NP without LM | 3 M | 94.42 | 0.08 |
| LD-Net (without pruning) | 51 M | 96.01 | 0.07 |
| LD-Net (original, selected by dev F1) | 51 M | 96.13 | |
| LD-Net (pruned) | 10 M | 95.66 | 0.04 |
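The trade-off in the two benchmark tables can be read off with a little arithmetic: divide the unpruned FLOPs by the pruned FLOPs, and subtract the mean F1 scores. The snippet below does exactly that with the numbers copied from the tables; the FLOPs values are rounded in the tables, so the ratios are approximate.

```python
# (mean F1, #FLOPs in millions) copied from the two benchmark tables above.
BENCHMARKS = {
    "CoNLL03 NER": {"unpruned": (91.86, 51), "pruned": (91.84, 5)},
    "CoNLL00 Chunking": {"unpruned": (96.01, 51), "pruned": (95.66, 10)},
}


def pruning_tradeoff(unpruned, pruned):
    """Return (FLOPs reduction factor, change in mean F1) caused by pruning."""
    (f1_full, flops_full), (f1_pruned, flops_pruned) = unpruned, pruned
    return flops_full / flops_pruned, round(f1_pruned - f1_full, 2)


for task, rows in BENCHMARKS.items():
    factor, delta = pruning_tradeoff(rows["unpruned"], rows["pruned"])
    print(f"{task}: {factor:.1f}x fewer FLOPs, F1 change {delta:+.2f}")
# CoNLL03 NER: 10.2x fewer FLOPs, F1 change -0.02
# CoNLL00 Chunking: 5.1x fewer FLOPs, F1 change -0.35
```

FLOPs are a hardware-independent operation count, so this ratio need not match the measured decoding speedup.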

Pretrained Models

Here we provide both pre-trained language models and pre-trained sequence labeling models.

Language Models

Our pre-trained language model consists of a word embedding layer, a 10-layer densely connected LSTM, and an adaptive softmax, and achieves an average perplexity (PPL) of 50.06 on the One Billion Word Benchmark.
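Perplexity is the exponential of the average per-token negative log-likelihood, so a PPL of about 50 means the model is, on average, roughly as uncertain as a uniform choice among 50 words. The sketch below applies that standard definition to a list of per-token natural-log probabilities; the input is hypothetical, and this is not the repository's evaluation code.

```python
import math
from typing import Iterable


def perplexity(token_log_probs: Iterable[float]) -> float:
    """exp of the mean negative log-likelihood over all tokens.

    `token_log_probs` holds log p(w_t | w_<t) for each token, in nats.
    """
    log_probs = list(token_log_probs)
    if not log_probs:
        raise ValueError("need at least one token")
    mean_nll = -sum(log_probs) / len(log_probs)
    return math.exp(mean_nll)


# A model that assigns every token probability 1/50 has perplexity of about 50.
print(perplexity([math.log(1 / 50)] * 1000))
```

If the log-probabilities are in base 2, replace `math.exp` with `2 ** mean_nll`.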

| Forward Language Model | Backward Language Model |
|---|---|
| Download Link | Download Link |
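The densely connected LSTM in the language model above follows the general dense-connection pattern: each layer reads the concatenation of the input embedding and the outputs of all layers below it, so layer input sizes grow linearly with depth. The bookkeeping sketch below illustrates that pattern under hypothetical sizes (`emb_dim`, `hidden_dim`). It does not reproduce the actual dimensions of the released model, and it does not show how LD-Net decides which layers to prune.

```python
from typing import Iterable, List


def dense_layer_input_dims(emb_dim: int, hidden_dim: int, num_layers: int) -> List[int]:
    """Input size of each layer when layer i sees the embedding plus the
    outputs of layers 0..i-1 (DenseNet-style concatenation)."""
    return [emb_dim + i * hidden_dim for i in range(num_layers)]


def concat_output_dim(emb_dim: int, hidden_dim: int, kept_layers: Iterable[int]) -> int:
    """Size of the final representation, assuming it concatenates the
    embedding with the outputs of only the layers in `kept_layers`."""
    return emb_dim + len(set(kept_layers)) * hidden_dim


# Hypothetical sizes: 300-d embeddings, 300-d LSTM outputs, 10 layers.
print(dense_layer_input_dims(300, 300, 10))
print(concat_output_dim(300, 300, range(10)), concat_output_dim(300, 300, [0, 3, 7]))
```

Under these assumed sizes, keeping 3 of the 10 layers shrinks the concatenated representation from 3300 to 1200 dimensions, which is the kind of saving that layer pruning targets.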

Named Entity Recognition

The original pre-trained named entity tagger achieves 91.95 F1, and the pruned tagger achieves 92.08 F1.
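By the usual CoNLL convention, F1 for NER and chunking is computed over whole labeled spans: a predicted entity or chunk counts only if both its boundaries and its type match a gold span. The sketch below implements that metric for BIO tags. It is a simplified stand-in for the official `conlleval` script, and it follows the common convention of treating an `I-` tag that does not continue a span of the same type as the start of a new span.

```python
from typing import List, Set, Tuple

Span = Tuple[str, int, int]  # (type, start, end), end exclusive


def bio_spans(tags: List[str]) -> Set[Span]:
    """Extract labeled spans from a BIO/IOB tag sequence."""
    spans = set()
    kind, start = None, 0
    for i, tag in enumerate(tags + ["O"]):  # sentinel "O" closes a trailing span
        prefix, _, label = tag.partition("-")
        starts_new = prefix == "B" or (prefix == "I" and label != kind)
        if kind is not None and (tag == "O" or starts_new):
            spans.add((kind, start, i))
            kind = None
        if starts_new:
            kind, start = label, i
    return spans


def span_f1(gold: List[List[str]], pred: List[List[str]]) -> float:
    """Micro-averaged span-level F1 over a corpus of tagged sentences."""
    tp = n_gold = n_pred = 0
    for gold_tags, pred_tags in zip(gold, pred):
        g, p = bio_spans(gold_tags), bio_spans(pred_tags)
        tp += len(g & p)
        n_gold += len(g)
        n_pred += len(p)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gold if n_gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


gold = [["B-PER", "I-PER", "O", "B-LOC"]]
pred = [["B-PER", "I-PER", "O", "B-ORG"]]  # right boundary, wrong type for the 2nd span
print(span_f1(gold, pred))  # 0.5
```

Multiply by 100 to get scores on the same scale as the numbers reported here.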

| Original Tagger | Pruned Tagger |
|---|---|
| Download Link | Download Link |

Chunking

The original pre-trained chunking tagger achieves 96.13 F1, and the pruned tagger achieves 95.79 F1.

| Original Tagger | Pruned Tagger |
|---|---|
| Download Link | Download Link |

Training

Demo Scripts

To prune the original LD-Net for CoNLL03 NER, run:

bash ldnet_ner_prune.sh

To prune the original LD-Net for CoNLL00 chunking, run:

bash ldnet_np_prune.sh

Dependencies

Our package is based on Python 3.6 and the following packages:

- numpy
- tqdm
- torch-scope
- torch==0.4.1
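Before running the demo scripts, it can help to confirm that the listed packages import cleanly. The helper below is a hypothetical sketch, not part of the repository. It assumes torch-scope is imported as `torch_scope`, and it only prints a warning when the installed PyTorch differs from the pinned 0.4.1 rather than failing.

```python
import importlib
import sys
from typing import List, Sequence

# Import names for the packages listed above (torch-scope -> torch_scope is assumed).
REQUIRED_MODULES = ["numpy", "tqdm", "torch_scope", "torch"]
PINNED_TORCH = "0.4.1"


def missing_modules(names: Sequence[str]) -> List[str]:
    """Return the subset of `names` that cannot be imported."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    if sys.version_info[:2] != (3, 6):
        print("note: the package was developed against Python 3.6")
    absent = missing_modules(REQUIRED_MODULES)
    if absent:
        print("missing:", ", ".join(absent))
    elif importlib.import_module("torch").__version__ != PINNED_TORCH:
        print("warning: expected torch==" + PINNED_TORCH)
```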

Data

Pre-processing scripts are available in pre_seq and pre_word_ada, and the pre-processed data can be downloaded from:

| NER | Chunking |
|---|---|
| Download Link | Download Link |
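For reference, the raw CoNLL03 and CoNLL00 corpora use a whitespace-separated column format: one token per line with its tag in the last column, blank lines between sentences, and (in CoNLL03) `-DOCSTART-` lines between documents. The reader below is a generic sketch of that raw format only. It does not produce or parse the pre-processed files linked above, whose format is defined by the pre_seq and pre_word_ada scripts, and the sample fragment is illustrative.

```python
from typing import Iterable, Iterator, List, Tuple

Sentence = Tuple[List[str], List[str]]  # (tokens, tags)


def read_conll(lines: Iterable[str]) -> Iterator[Sentence]:
    """Yield (tokens, tags) per sentence; the tag is taken from the last column."""
    tokens: List[str] = []
    tags: List[str] = []
    for line in lines:
        fields = line.split()
        if not fields or fields[0] == "-DOCSTART-":
            # Blank line or document marker: flush the current sentence.
            if tokens:
                yield tokens, tags
                tokens, tags = [], []
            continue
        tokens.append(fields[0])
        tags.append(fields[-1])
    if tokens:  # the file may not end with a blank line
        yield tokens, tags


sample = """-DOCSTART- -X- -X- O

EU NNP B-NP B-ORG
rejects VBZ B-VP O
German JJ B-NP B-MISC
call NN I-NP O
"""
for toks, tgs in read_conll(sample.splitlines()):
    print(toks, tgs)
# ['EU', 'rejects', 'German', 'call'] ['B-ORG', 'O', 'B-MISC', 'O']
```

Taking the last column as the tag lets the same reader handle both the four-column NER files and the three-column chunking files.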

Model

Our implementations are available in model_seq and model_word_ada, and the documentation is hosted on Read the Docs.

| NER | Chunking |
|---|---|
| Download Link | Download Link |

Inference

For model inference, please check out our LightNER package.

Citation

If you find the implementation useful, please cite the following paper: Efficient Contextualized Representation: Language Model Pruning for Sequence Labeling

@inproceedings{liu2018efficient,
  title = "{Efficient Contextualized Representation: Language Model Pruning for Sequence Labeling}",
  author = {Liu, Liyuan and Ren, Xiang and Shang, Jingbo and Peng, Jian and Han, Jiawei},
  booktitle = {EMNLP},
  year = 2018,
}