llhthinker / Bdci2017 Minglue

BDCI2017 "Let AI Be the Judge": 4th place in the finals (4/415) https://www.datafountain.cn/competitions/277/details

Stars: ✭ 118

Programming Languages

python


Projects that are alternatives to or similar to Bdci2017 Minglue

Sarcasm Detection

Detecting Sarcasm on Twitter using both traditional machine learning and deep learning techniques.

Stars: ✭ 73 (-38.14%)

Mutual labels: text-classification

Doc2vec

📓 Long(er) text representation and classification using Doc2Vec embeddings

Stars: ✭ 92 (-22.03%)

Mutual labels: text-classification

Delta

DELTA is a deep learning based natural language and speech processing platform.

Stars: ✭ 1,479 (+1153.39%)

Mutual labels: text-classification

Bible text gcn

Pytorch implementation of "Graph Convolutional Networks for Text Classification"

Stars: ✭ 90 (-23.73%)

Mutual labels: text-classification

Neuronblocks

NLP DNN Toolkit - Building Your NLP DNN Models Like Playing Lego

Stars: ✭ 1,356 (+1049.15%)

Mutual labels: text-classification

Char Cnn Text Classification Tensorflow

Reproduction of the paper "Character-level Convolutional Networks for Text Classification"

Stars: ✭ 72 (-38.98%)

Mutual labels: text-classification

Rnn Text Classification Tf

Tensorflow Implementation of Recurrent Neural Network (Vanilla, LSTM, GRU) for Text Classification

Stars: ✭ 114 (-3.39%)

Mutual labels: text-classification

Cnn intent classification

CNN for intent classification task in a Chatbot

Stars: ✭ 90 (-23.73%)

Mutual labels: text-classification

Hierarchical Attention Networks

TensorFlow implementation of the paper "Hierarchical Attention Networks for Document Classification"

Stars: ✭ 75 (-36.44%)

Mutual labels: text-classification

Text classification

Text Classification Algorithms: A Survey

Stars: ✭ 1,276 (+981.36%)

Mutual labels: text-classification

Text Classification

An example on how to train supervised classifiers for multi-label text classification using sklearn pipelines

Stars: ✭ 100 (-15.25%)

Mutual labels: text-classification

Nlp Tutorial

A list of NLP (Natural Language Processing) tutorials

Stars: ✭ 1,188 (+906.78%)

Mutual labels: text-classification

Kadot

Kadot, the unsupervised natural language processing library.

Stars: ✭ 108 (-8.47%)

Mutual labels: text-classification

Text Classifier

text-classifier is a toolkit for text classification. It was developed to facilitate the designing, comparing, and sharing of text classification models.

Stars: ✭ 72 (-38.98%)

Mutual labels: text-classification

Text rnn attention

Chinese text classification with RNN + attention on top of Word2vec word embeddings

Stars: ✭ 117 (-0.85%)

Mutual labels: text-classification

Pytorch Rnn Text Classification

Word Embedding + LSTM + FC

Stars: ✭ 112 (-5.08%)

Mutual labels: text-classification

Texting

[ACL 2020] Tensorflow implementation for "Every Document Owns Its Structure: Inductive Text Classification via Graph Neural Networks"

Stars: ✭ 103 (-12.71%)

Mutual labels: text-classification

BDCI2017-MingLue

- BDCI2017, Let AI Be the Judge: http://www.datafountain.cn/#/competitions/277/intro

- Result: 4th place in the final evaluation

- Thanks to my two teammates: zxsong, dyhu

Runtime environment

- OS: Ubuntu

- GPU memory: 12 GB

- CUDA version: 8.0

- Python version: 3.5

- Dependencies:

  - numpy 1.13.3

  - gensim 3.1.0

  - jieba 0.39

  - torch 0.2.0

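Task description

- Task 1: fine level prediction (predicting the bracket of the monetary penalty)

- Task 2: law article prediction (predicting the relevant legal articles)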

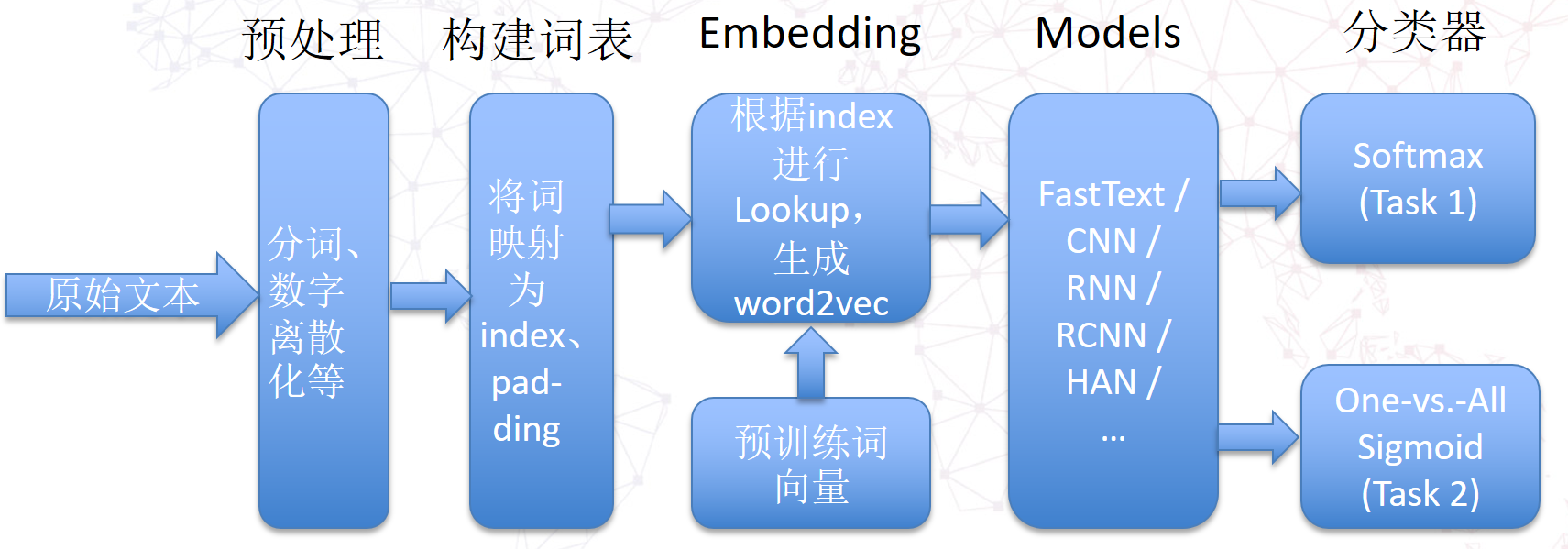

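Basic approach

Classifier

Task 1 (fine level prediction) can be treated as a multi-class text classification problem, and Task 2 (law article prediction) as a multi-label text classification problem.

- For the multi-class problem, use a softmax classifier and minimize the cross-entropy loss.

- For the multi-label problem, use a one-versus-all strategy: train with PyTorch's MultiLabelSoftMarginLoss, and apply the sigmoid function at prediction time:

  - if the sigmoid output for a label exceeds a threshold (e.g. 0.5), add that label to the predicted label set; otherwise leave it out.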
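The two classifier heads described above can be sketched in NumPy so the arithmetic is visible without a GPU. This is a minimal sketch, not the repository's code: `softmax_cross_entropy`, `multilabel_soft_margin_loss` and `predict_labels` are hypothetical helper names. The multi-label loss reproduces the unweighted formula PyTorch documents for `MultiLabelSoftMarginLoss` (binary cross-entropy on each label's sigmoid output, averaged over labels and then over the batch), and the decoder applies the 0.5 threshold from the last bullet.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Task 1 (multi-class): mean cross-entropy of softmax(logits).

    logits: float array of shape (batch, num_classes)
    labels: int array of shape (batch,) holding the true class ids
    """
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())


def multilabel_soft_margin_loss(logits, targets):
    """Task 2 (multi-label, one-versus-all): unweighted soft-margin loss.

    targets: 0/1 array with the same shape as logits.
    """
    log_sig = -np.logaddexp(0.0, -logits)        # log(sigmoid(x))
    log_one_minus = -np.logaddexp(0.0, logits)   # log(1 - sigmoid(x))
    per_label = -(targets * log_sig + (1 - targets) * log_one_minus)
    return float(per_label.mean(axis=1).mean())  # mean over labels, then batch


def predict_labels(logits, threshold=0.5):
    """Keep every label whose sigmoid output is strictly above the threshold."""
    probs = 1.0 / (1.0 + np.exp(-logits))
    return [np.flatnonzero(row > threshold).tolist() for row in probs]


if __name__ == "__main__":
    task2_logits = np.array([[2.0, -1.0, 0.3]])
    print(predict_labels(task2_logits))  # [[0, 2]]
```

Because each label gets its own independent sigmoid, a document can receive any number of law articles, including none; the threshold is a tunable hyperparameter rather than a fixed part of the method.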

Feature extraction

- Traditional: n-gram + TF-IDF + LDA (tried during experiments, not used in the end)

- Deep learning (based on word2vec):
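The traditional n-gram + TF-IDF features from the first bullet (tried, then dropped) can be illustrated in plain Python. This is only a sketch of the general technique: the helper names, the choice of unigrams plus bigrams, and the smoothed IDF formula log((1 + N) / (1 + df)) + 1 (the form scikit-learn also uses by default) are assumptions, documents are assumed to be already segmented into space-separated tokens, and the LDA topic features are not shown.

```python
import math
from collections import Counter


def ngrams(tokens, n_max=2):
    """All 1..n_max-grams of a token list, joined with spaces."""
    return [" ".join(tokens[i:i + n])
            for n in range(1, n_max + 1)
            for i in range(len(tokens) - n + 1)]


def tfidf_vectors(segmented_docs, n_max=2):
    """Sparse TF-IDF vectors (one dict per document).

    TF is the n-gram count divided by the document's total n-gram count;
    IDF is the smoothed log((1 + N) / (1 + df)) + 1.
    """
    counts = [Counter(ngrams(doc.split(), n_max)) for doc in segmented_docs]
    df = Counter(term for doc_counts in counts for term in doc_counts)
    n_docs = len(segmented_docs)
    vectors = []
    for doc_counts in counts:
        total = sum(doc_counts.values())
        vectors.append({
            term: (c / total) * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, c in doc_counts.items()
        })
    return vectors


if __name__ == "__main__":
    docs = ["被告人 盗窃 现金", "被告人 抢劫 现金"]
    for vec in tfidf_vectors(docs):
        # Highest-weighted n-grams: the ones that distinguish the documents.
        print(sorted(vec.items(), key=lambda kv: -kv[1])[:3])
```

Terms shared by every document (here 被告人) get the minimum IDF of 1, so the weights emphasize case-specific words such as the type of crime.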

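Optimization ideas

- Preprocessing

  - Discretize numbers

  - Replace uninformative text such as personal names and place names

- Data augmentation: synonym / synonymous-phrase substitution

- Model ensembling: at prediction time, use the mean of several models' predictions as the final prediction

- Handling class imbalance

  - Adjust the per-label sample weights in the loss function

  - Oversampling

  - Undersampling (not used)

- Mixed-element model (not used)

- Mixed paragraph vector (not used)

- Inception in CNN

- Number of convolutional layers: single vs. multiple (not tried)

- Number of fully connected layers: single vs. multiple (not tried)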
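The ensembling and class-imbalance items in the list above can be sketched as three small helpers. These are hypothetical illustrations, not the repository's code: the README does not say which weighting scheme or oversampling method was used, so the inverse-frequency weights (n_samples / (num_classes * count)) and the random duplication of minority-class samples are assumptions. The weights could, for example, be converted to a float tensor and passed as the `weight` argument of `torch.nn.CrossEntropyLoss`.

```python
import random

import numpy as np


def ensemble_mean(prob_list):
    """Average per-class probabilities from several models (all same shape)."""
    return np.mean(np.stack(prob_list), axis=0)


def inverse_frequency_weights(labels, num_classes):
    """Per-class loss weights n_samples / (num_classes * count_c).

    Classes absent from `labels` get the weight of a class seen once.
    """
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    counts[counts == 0] = 1.0
    return len(labels) / (num_classes * counts)


def random_oversample(samples, labels, seed=0):
    """Duplicate random samples of smaller classes until every class
    matches the largest one; returns a shuffled list of (sample, label)."""
    rng = random.Random(seed)
    by_class = {}
    for sample, label in zip(samples, labels):
        by_class.setdefault(label, []).append(sample)
    target = max(len(items) for items in by_class.values())
    balanced = []
    for label, items in by_class.items():
        extra = [rng.choice(items) for _ in range(target - len(items))]
        balanced.extend((s, label) for s in items + extra)
    rng.shuffle(balanced)
    return balanced


if __name__ == "__main__":
    rcnn_probs = np.array([[0.2, 0.8]])
    han_probs = np.array([[0.6, 0.4]])
    print(ensemble_mean([rcnn_probs, han_probs]))       # [[0.4 0.6]]
    print(inverse_frequency_weights([0, 0, 0, 1], 2))   # rare class weighted higher
```

Averaging probabilities rather than hard labels lets a confident model outvote an uncertain one. For Task 1 the averaged vector would be reduced with an argmax; for Task 2 each averaged label probability would be compared with the threshold.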

Code directory layout

- preprocessor: data preprocessing code

  - builddataset.py: converts text data into index sequences, for Task 1

  - buildmultidataset.py: converts text data into index sequences, for Task 2

  - segtext.py: word segmentation

    `python ./segtext.py -i [input-file-path] -o [output-file-path]`

  - shuffledata.py: shuffles the lines of a text file randomly

    `python ./shuffledata.py -i [input-file-path] -o [output-file-path]`

  - trainword2vecmodel.py: trains [word2vec-model] on the training set [train-file] and the test set [test-file], for pre-training

    `python ./trainword2vecmodel.py --word2vec-model-path [word2vec-model] --train-file [train-file] --test-file [test-file]`

- utils: utility code

  - calculatescore.py: computes the scores, Micro-Averaged F1 (Task 1) and Jaccard (Task 2)

  - statisticdata.py: statistical analysis of the data

  - trainhelper.py: helper functions for training

  - multitrainhelper.py: helper functions for Task 2 training

- models: code for the deep learning models

  | model_id | code_file_name | model_name |
  |---|---|---|
  | 0 | fasttext.py | FastText |
  | 1 | textcnn.py | TextCNN |
  | 2 | textrcnn.py | TextRCNN |
  | 3 | textrnn.py | TextRNN |
  | 4 | hierarchical.py / hierarchical_mask.py | HAN |
  | 5 | cnnwithdoc2vec.py | CNNWithDoc2Vec |
  | 6 | rcnnwithdoc2vec.py | RCNNWithDoc2Vec |
  | ... | ... | ... |

  - To use the HAN model on its own, see teammate zxsong's repo: Hierarchical-Attention-Network

- data: wraps the data as PyTorch Dataset objects

  - mingluedata.py: Task 1

  - mingluemultidata.py: Task 2

- notebooks: Jupyter notebooks used for experiments

- Main directory: training and prediction scripts plus configuration

  - train.py: Task 1 training script

    `python ./train.py --model-id [model_id] --use-element [y/n] --is-save [y/n]`

  - multitrain.py: Task 2 training script

    `python ./multitrain.py --model-id [model_id] --use-element [y/n] --is-save [y/n]`

  - predict.py: prediction script; loads trained models, runs prediction, and writes a JSON result file. Each model_id must match the model stored at the corresponding model_path.

    `python ./predict.py --task1-model-id [model_id] --task1-model-path [model_path] --task2-model-id [model_id] --task2-model-path [model_path]`

  - predict_task1.py: Task 1 prediction script; loads a trained Task 1 model, runs prediction, and writes a JSON result file. The model_id must match the model stored at model_path.

    `python ./predict_task1.py --task1-model-id [model_id] --task1-model-path [model_path]`

  - predict_task2.py: Task 2 prediction script; loads a trained Task 2 model, runs prediction, and writes a JSON result file. The model_id must match the model stored at model_path.

    `python ./predict_task2.py --task2-model-id [model_id] --task2-model-path [model_path]`

  - mix_predict_task1.py: Task 1 ensemble prediction script; loads the RCNN and HAN models trained for Task 1, combines their predictions, and writes a JSON result file

    `python ./mix_predict_task1.py --rcnn-model-path [RCNN_model_path] --han-model-path [HAN_model_path]`

  - mix_predict_task2.py: Task 2 ensemble prediction script; loads the RCNN and HAN models trained for Task 2, combines their predictions, and writes a JSON result file

    `python ./mix_predict_task2.py --rcnn-model-path [RCNN_model_path] --han-model-path [HAN_model_path]`

  - config.py: configuration file; the Config class is for Task 1 and the MultiConfig class is for Task 2
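calculatescore.py is described as computing Micro-Averaged F1 for Task 1 and Jaccard for Task 2. Below is a minimal sketch of the textbook definitions of both metrics. It is not the repository's implementation or the competition's official scorer, which may differ in details; the function names are hypothetical, and scoring two empty label sets as 1.0 is an assumption.

```python
def micro_f1(y_true, y_pred):
    """Micro-averaged F1 for single-label multi-class predictions (Task 1).

    TP/FP/FN are counted per class and pooled before computing P and R.
    """
    classes = set(y_true) | set(y_pred)
    tp = fp = fn = 0
    for c in classes:
        for t, p in zip(y_true, y_pred):
            if p == c and t == c:
                tp += 1
            elif p == c:
                fp += 1
            elif t == c:
                fn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def mean_jaccard(true_sets, pred_sets):
    """Average per-sample Jaccard similarity |A & B| / |A | B| (Task 2)."""
    scores = []
    for t, p in zip(true_sets, pred_sets):
        t, p = set(t), set(p)
        union = t | p
        # Assumption: two empty sets count as a perfect match.
        scores.append(len(t & p) / len(union) if union else 1.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    print(micro_f1([1, 2, 3, 1], [1, 2, 1, 1]))           # 0.75
    print(mean_jaccard([[1, 2], [3]], [[1], [3, 4]]))     # 0.5
```

With exactly one true and one predicted label per sample, every error adds one false positive and one false negative, so micro-averaged F1 reduces to accuracy. The Jaccard score rewards predicting the right set of articles and penalizes both missing and extra ones.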

Data directory layout

- corpus: training and test data

- pickles: pickled data, including:

  - `index2word.[*.]pkl`

  - `word2index.[*.]pkl`

  - saved model files:

    - `*.[model_name]`: a Task 1 model file, e.g. params.pkl.1511507513.TextCNN

    - `*.multi.[model_name]`: a Task 2 model file, e.g. params.pkl.1511514902.multi.TextCNN

    - ...

- word2vec: pre-trained word embedding data

- results: prediction result files (JSON)
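The word2index / index2word pickles map segmented tokens to integer ids, which is what builddataset.py and buildmultidataset.py are described as producing. The sketch below shows one common way to build such a vocabulary; it is not the repository's code. The reserved `<pad>`/`<unk>` tokens, the frequency ordering, the `min_count` cutoff, the helper names and the plain `word2index.pkl`/`index2word.pkl` file names are all assumptions, and texts are assumed to be already segmented (e.g. with jieba, as segtext.py does) into space-separated tokens.

```python
import os
import pickle
from collections import Counter

PAD, UNK = "<pad>", "<unk>"  # hypothetical reserved tokens


def build_vocab(segmented_texts, min_count=1):
    """Return (word2index, index2word), most frequent words first."""
    counts = Counter(tok for text in segmented_texts for tok in text.split())
    index2word = [PAD, UNK] + [w for w, c in counts.most_common() if c >= min_count]
    word2index = {w: i for i, w in enumerate(index2word)}
    return word2index, index2word


def texts_to_indices(segmented_texts, word2index, max_len):
    """Map tokens to ids, truncating or right-padding each text to max_len."""
    unk, pad = word2index[UNK], word2index[PAD]
    rows = []
    for text in segmented_texts:
        ids = [word2index.get(tok, unk) for tok in text.split()][:max_len]
        rows.append(ids + [pad] * (max_len - len(ids)))
    return rows


def save_vocab(word2index, index2word, directory):
    """Pickle both mappings; the file names are illustrative only."""
    os.makedirs(directory, exist_ok=True)
    with open(os.path.join(directory, "word2index.pkl"), "wb") as f:
        pickle.dump(word2index, f)
    with open(os.path.join(directory, "index2word.pkl"), "wb") as f:
        pickle.dump(index2word, f)


if __name__ == "__main__":
    w2i, i2w = build_vocab(["被告人 盗窃 现金", "被告人 抢劫"])
    print(texts_to_indices(["被告人 抢劫 手机"], w2i, max_len=5))  # [[2, 5, 1, 0, 0]]
```

Out-of-vocabulary tokens (手机 here) fall back to the `<unk>` id, and fixed-length rows let a batch of documents be stacked into a single tensor for the embedding layer, which can then be initialized from the pre-trained word2vec vectors.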
