tqtg / Hierarchical Attention Networks



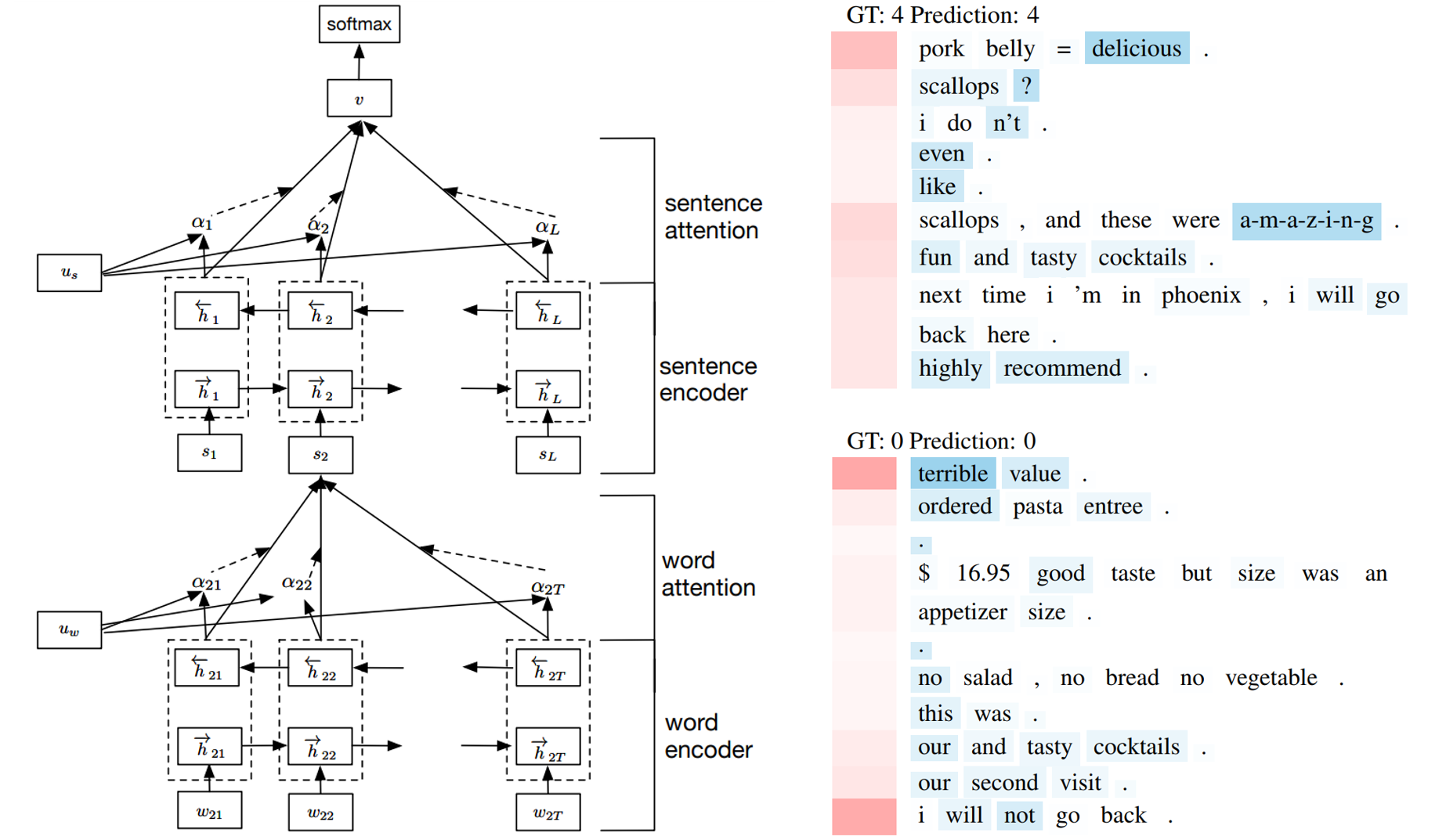

Hierarchical Attention Networks for Document Classification

This is a TensorFlow implementation of the paper Hierarchical Attention Networks for Document Classification (Yang et al., NAACL 2016).
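In the paper, the same attention pooling step is used twice. It runs once over the word-level bi-GRU states of a sentence, producing a sentence vector. It runs again over the sentence-level states of a document, producing a document vector. Each hidden state h_t is projected as u_t = tanh(W h_t + b) and scored against a learned context vector. The states are then averaged using softmax weights. The sketch below implements that pooling in plain Python for a single sequence, with lists standing in for TensorFlow tensors. The function names and toy parameters are hypothetical and are not taken from this repository's code.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply matrix w (rows x cols) by vector x (length cols)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden: List[Vector], w: Matrix, b: Vector,
                   context: Vector) -> Tuple[Vector, Vector]:
    """Hypothetical sketch of HAN attention pooling over one sequence.

    hidden:  T hidden states h_t (e.g. bi-GRU outputs of size 2 * cell_dim)
    w, b:    projection into the attention space (size att_dim)
    context: learned context vector (u_w for words, u_s for sentences)
    Returns (pooled vector, attention weights).
    """
    # u_t = tanh(W h_t + b)
    projected = [[math.tanh(z + b_i) for z, b_i in zip(matvec(w, h), b)]
                 for h in hidden]
    # score_t = u_t . context, alpha = softmax(scores)
    scores = [sum(u_i * c_i for u_i, c_i in zip(u, context)) for u in projected]
    alphas = softmax(scores)
    # pooled = sum_t alpha_t * h_t
    dim = len(hidden[0])
    pooled = [sum(a * h[k] for a, h in zip(alphas, hidden)) for k in range(dim)]
    return pooled, alphas


if __name__ == "__main__":
    # Toy example: 3 hidden states of size 2, identity projection.
    pooled, alphas = attention_pool(
        hidden=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
        w=[[1.0, 0.0], [0.0, 1.0]],
        b=[0.0, 0.0],
        context=[1.0, 1.0],
    )
    print("weights:", alphas)
    print("pooled:", pooled)
```

In the toy example, the third state is most aligned with the context vector, so it receives the largest weight.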

Requirements

- Python 3

- TensorFlow > 1.0

- pandas

- NLTK

- tqdm

- GloVe pre-trained word embeddings

Data

We use the data provided by Tang et al. (2015), which includes four datasets:

- IMDB

- Yelp 2013

- Yelp 2014

- Yelp 2015

Note: the original data archive seems to have a problem when unzipping, so I re-uploaded the data to Google Drive, which also gives faster downloads. Please request access permission.

Usage

First, download the datasets and unzip them into the data folder.

Then, run the script to prepare the data (the Yelp-2015 dataset is used by default):

```
python data_prepare.py
```
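HAN reads each document as a list of sentences, and each sentence as a list of word ids. The sketch below shows that kind of hierarchical preprocessing: splitting into sentences, tokenizing, building a vocabulary, and truncating or padding to a max_sents x max_words grid. It is an assumption about what such a step typically does, not a description of data_prepare.py. So that it runs without NLTK data downloads, a naive regex sentence splitter and tokenizer stand in for NLTK's sent_tokenize and word_tokenize. The function names and the PAD_ID/UNK_ID values are hypothetical.

```python
import re
from collections import Counter
from typing import Dict, List

PAD_ID = 0  # hypothetical id used for padding
UNK_ID = 1  # hypothetical id for out-of-vocabulary words


def split_sentences(text: str) -> List[str]:
    # Naive splitter: break after '.', '!' or '?' followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def tokenize(sentence: str) -> List[str]:
    # Lowercased word and punctuation tokens (stand-in for nltk.word_tokenize).
    return re.findall(r"\w+|[^\w\s]", sentence.lower())


def build_vocab(docs: List[str], min_count: int = 1) -> Dict[str, int]:
    """Map each token seen at least min_count times to a contiguous id."""
    counts = Counter(tok for doc in docs
                     for sent in split_sentences(doc)
                     for tok in tokenize(sent))
    vocab = {"<pad>": PAD_ID, "<unk>": UNK_ID}
    for word, count in counts.most_common():
        if count >= min_count:
            vocab[word] = len(vocab)
    return vocab


def encode_document(doc: str, vocab: Dict[str, int],
                    max_sents: int, max_words: int) -> List[List[int]]:
    """Return a max_sents x max_words grid of word ids, truncated and padded."""
    grid = []
    for sent in split_sentences(doc)[:max_sents]:
        ids = [vocab.get(tok, UNK_ID) for tok in tokenize(sent)][:max_words]
        grid.append(ids + [PAD_ID] * (max_words - len(ids)))
    while len(grid) < max_sents:
        grid.append([PAD_ID] * max_words)
    return grid
```

Padding every document to one fixed grid keeps the sketch simple. A real pipeline may instead pad per batch and pass the true sentence and word lengths to the GRUs.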

Train and evaluate the model (make sure the GloVe embeddings are ready before training):

```
wget http://nlp.stanford.edu/data/glove.6B.zip
unzip glove.6B.zip
python train.py
```
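glove.6B.zip contains 50-, 100-, 200- and 300-dimensional text files. The 200-dimensional glove.6B.200d.txt matches the default --emb_dim of 200. Each line of these files holds a word followed by its vector components, separated by spaces. The sketch below turns such lines into an embedding matrix aligned with a vocabulary. The function name is hypothetical. Initializing words missing from GloVe uniformly in [-0.1, 0.1] is an assumption and may differ from what train.py does.

```python
import random
from typing import Dict, Iterable, List


def load_glove_matrix(lines: Iterable[str], vocab: Dict[str, int],
                      emb_dim: int, seed: int = 0) -> List[List[float]]:
    """Build a len(vocab) x emb_dim matrix from GloVe-format lines.

    Assumes vocab ids are contiguous (0 .. len(vocab) - 1). Rows for words
    that are not found in the GloVe lines keep their random initialization.
    """
    rng = random.Random(seed)
    # Assumption: random-uniform init in [-0.1, 0.1] for every row first.
    matrix = [[rng.uniform(-0.1, 0.1) for _ in range(emb_dim)]
              for _ in range(len(vocab))]
    for line in lines:
        parts = line.rstrip().split(" ")
        word, values = parts[0], parts[1:]
        # Skip words outside the vocabulary and malformed lines.
        if word in vocab and len(values) == emb_dim:
            matrix[vocab[word]] = [float(v) for v in values]
    return matrix

# Example usage (not executed here):
# with open("glove.6B.200d.txt", encoding="utf-8") as f:
#     embeddings = load_glove_matrix(f, vocab, emb_dim=200)
```

The function accepts any iterable of lines, so you can pass an open file handle directly without loading the roughly 1 GB of GloVe files into memory at once.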

Print the training arguments:

```
python train.py --help
```

```
optional arguments:
  -h, --help            show this help message and exit
  --cell_dim CELL_DIM
                        Hidden dimensions of GRU cells (default: 50)
  --att_dim ATTENTION_DIM
                        Dimensionality of attention spaces (default: 100)
  --emb_dim EMBEDDING_DIM
                        Dimensionality of word embedding (default: 200)
  --learning_rate LEARNING_RATE
                        Learning rate (default: 0.0005)
  --max_grad_norm MAX_GRAD_NORM
                        Maximum value of the global norm of the gradients for clipping (default: 5.0)
  --dropout_rate DROPOUT_RATE
                        Probability of dropping neurons (default: 0.5)
  --num_classes NUM_CLASSES
                        Number of classes (default: 5)
  --num_checkpoints NUM_CHECKPOINTS
                        Number of checkpoints to store (default: 1)
  --num_epochs NUM_EPOCHS
                        Number of training epochs (default: 20)
  --batch_size BATCH_SIZE
                        Batch size (default: 64)
  --display_step DISPLAY_STEP
                        Number of steps between logs written to TensorBoard (default: 20)
  --allow_soft_placement ALLOW_SOFT_PLACEMENT
                        Allow soft device placement
```
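The --max_grad_norm option describes clipping by global norm. All gradients are treated as one long vector. If that vector's L2 norm exceeds the threshold, every gradient is multiplied by max_grad_norm / global_norm. This is the rule TensorFlow's tf.clip_by_global_norm applies: t * clip_norm / max(global_norm, clip_norm). The repository presumably calls that op. The plain-Python sketch below, using lists of floats and a hypothetical function name, only illustrates the arithmetic.

```python
import math
from typing import List, Tuple


def clip_by_global_norm(grads: List[List[float]],
                        max_norm: float) -> Tuple[List[List[float]], float]:
    """Scale all gradients jointly so their combined L2 norm is at most max_norm.

    Returns (clipped gradients, global norm before clipping).
    """
    # Global norm = sqrt of the sum of squares over every gradient entry.
    global_norm = math.sqrt(sum(g * g for grad in grads for g in grad))
    # scale == 1.0 when global_norm <= max_norm, so small gradients are untouched.
    scale = max_norm / max(global_norm, max_norm)
    clipped = [[g * scale for g in grad] for grad in grads]
    return clipped, global_norm
```

For example, gradients [[3.0, 0.0], [0.0, 4.0]] have a global norm of 5.0. With the default max_grad_norm of 5.0 they pass through unchanged. With a threshold of 2.5, every entry is halved. Scaling all tensors by one shared factor preserves the direction of the overall update, unlike clipping each tensor separately.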

Results

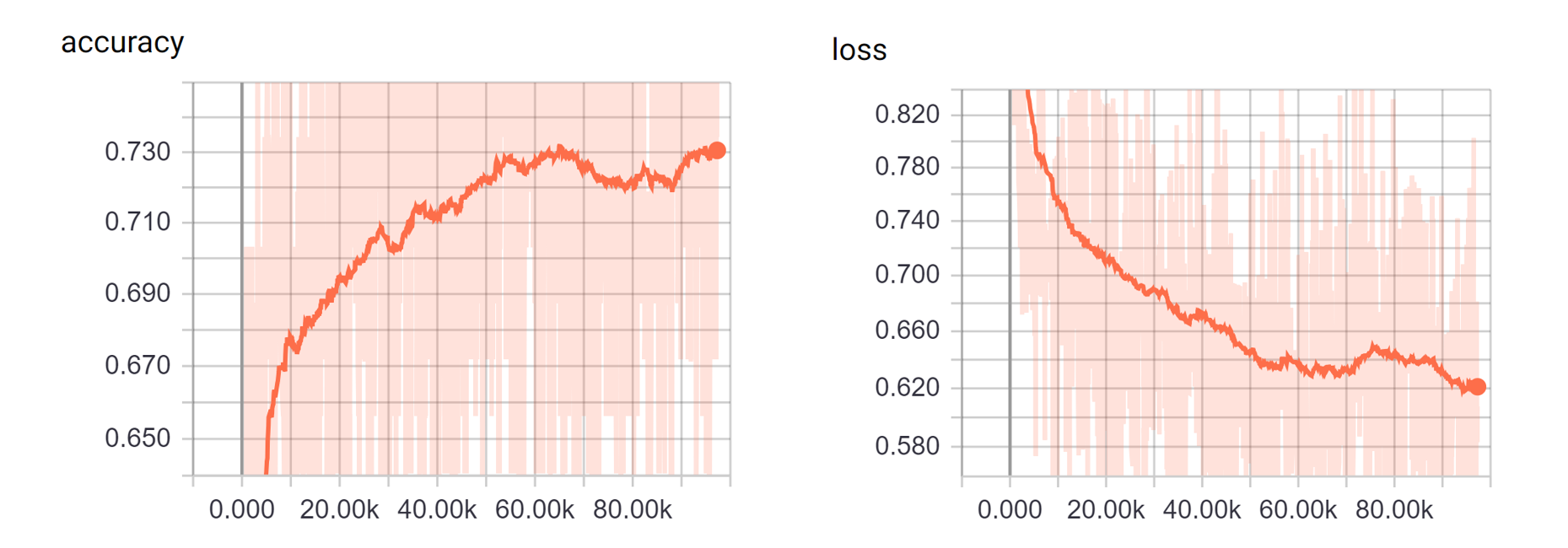

With the Yelp-2015 dataset, after 5 epochs, we achieved:

- 69.79% accuracy on the dev set

- 69.62% accuracy on the test set

No systematic hyper-parameter tuning was performed. The paper reports 71.0% accuracy on Yelp-2015.