Kyubyong / Bert_ner

Ner with Bert

Stars: ✭ 240


Projects that are alternatives to or similar to Bert ner

Spacy Lookup

Named Entity Recognition based on dictionaries

Stars: ✭ 212 (-11.67%)

Mutual labels: named-entity-recognition, ner

Ld Net

Efficient Contextualized Representation: Language Model Pruning for Sequence Labeling

Stars: ✭ 148 (-38.33%)

Mutual labels: named-entity-recognition, ner

Bnlp

BNLP is a natural language processing toolkit for Bengali Language.

Stars: ✭ 127 (-47.08%)

Mutual labels: named-entity-recognition, ner

Multilstm

keras attentional bi-LSTM-CRF for Joint NLU (slot-filling and intent detection) with ATIS

Stars: ✭ 122 (-49.17%)

Mutual labels: named-entity-recognition, ner

Bert Sklearn

a sklearn wrapper for Google's BERT model

Stars: ✭ 182 (-24.17%)

Mutual labels: named-entity-recognition, ner

Dan Jurafsky Chris Manning Nlp

My solutions to the Natural Language Processing course taught by Dan Jurafsky and Chris Manning in Winter 2012.

Stars: ✭ 124 (-48.33%)

Mutual labels: named-entity-recognition, ner

Persian Ner

A large annotated corpus for Persian named entity recognition

Stars: ✭ 183 (-23.75%)

Mutual labels: named-entity-recognition, ner

Turkish Bert Nlp Pipeline

Bert-base NLP pipeline for Turkish, Ner, Sentiment Analysis, Question Answering etc.

Stars: ✭ 85 (-64.58%)

Mutual labels: named-entity-recognition, ner

Monpa

MONPA is a multi-task model that provides Traditional Chinese word segmentation, part-of-speech tagging, and named entity recognition.

Stars: ✭ 203 (-15.42%)

Mutual labels: named-entity-recognition, ner

Kashgari

Kashgari is a production-level NLP Transfer learning framework built on top of tf.keras for text-labeling and text-classification, includes Word2Vec, BERT, and GPT2 Language Embedding.

Stars: ✭ 2,235 (+831.25%)

Mutual labels: named-entity-recognition, ner

Ner

Named entity recognition (NER) survey: papers, models, code (BiLSTM-CRF/BERT-CRF), and a summary of competition resources, continuously updated

Stars: ✭ 118 (-50.83%)

Mutual labels: named-entity-recognition, ner

Ner Datasets

Datasets to train supervised classifiers for Named-Entity Recognition in different languages (Portuguese, German, Dutch, French, English)

Stars: ✭ 220 (-8.33%)

Mutual labels: named-entity-recognition, ner

Bond

BOND: BERT-Assisted Open-Domain Named Entity Recognition with Distant Supervision

Stars: ✭ 96 (-60%)

Mutual labels: named-entity-recognition, ner

Ner Evaluation

An implementation of a full named-entity evaluation metrics based on SemEval'13 Task 9 - not at tag/token level but considering all the tokens that are part of the named-entity

Stars: ✭ 126 (-47.5%)

Mutual labels: named-entity-recognition, ner

Bi Lstm Crf Ner Tf2.0

Named Entity Recognition (NER) task using a Bi-LSTM-CRF model implemented in TensorFlow 2.0 (tensorflow 2.0+)

Stars: ✭ 93 (-61.25%)

Mutual labels: named-entity-recognition, ner

Ncrfpp

NCRF++, a Neural Sequence Labeling Toolkit. Easy to apply to any sequence labeling task (e.g. NER, POS, Segmentation). It includes character LSTM/CNN, word LSTM/CNN and softmax/CRF components.

Stars: ✭ 1,767 (+636.25%)

Mutual labels: named-entity-recognition, ner

Phonlp

PhoNLP: A BERT-based multi-task learning toolkit for part-of-speech tagging, named entity recognition and dependency parsing (NAACL 2021)

Stars: ✭ 56 (-76.67%)

Mutual labels: named-entity-recognition, ner

Torchcrf

An Implementation of CRF (Conditional Random Fields) in PyTorch 1.0

Stars: ✭ 58 (-75.83%)

Mutual labels: named-entity-recognition, ner

Sequence tagging

Named Entity Recognition (LSTM + CRF) - Tensorflow

Stars: ✭ 1,889 (+687.08%)

Mutual labels: named-entity-recognition, ner

Pytorch Bert Crf Ner

A Korean named entity recognizer built with KoBERT and CRF (BERT+CRF based Named Entity Recognition model for Korean)

Stars: ✭ 236 (-1.67%)

Mutual labels: named-entity-recognition, ner

PyTorch Implementation of NER with pretrained Bert

I know that you know BERT. In the BERT paper, the authors claim that the pretrained models do well on NER. That is all the more impressive given that they don't use any prediction-conditioned algorithms such as CRFs. We try to reproduce the result in a simple manner.
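
To make the "no prediction-conditioned algorithms" point concrete, here is a minimal sketch of decoding without a CRF: each token's tag is simply the argmax over that token's own scores, with no dependence on the neighbouring predictions. The tag set `TAGS`, the helper `decode_independently`, and the scores are made up for illustration and are not taken from this repository.

```python
# Hypothetical tag set for illustration only; not the repository's label inventory.
TAGS = ["O", "B-PER", "I-PER", "B-LOC"]

def decode_independently(scores):
    """Pick the highest-scoring tag for each token on its own.

    scores: list of per-token score lists, one score per entry in TAGS.
    Unlike CRF decoding, the choice for token i ignores the choice for token i-1.
    """
    return [TAGS[max(range(len(row)), key=row.__getitem__)] for row in scores]

# Made-up scores for the tokens ["John", "Smith", "visited", "Paris"].
scores = [
    [0.1, 2.3, 0.2, 0.0],
    [0.3, 0.4, 1.9, 0.1],
    [3.0, 0.1, 0.1, 0.2],
    [0.2, 0.1, 0.0, 2.5],
]
predicted = decode_independently(scores)
print(predicted)
```

A CRF layer would instead score whole tag sequences, so that, for example, an `I-PER` directly after `O` could be penalised.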

Requirements

- python>=3.6 (Let's move on to python 3 if you still use python 2)

- pytorch==1.0

- pytorch_pretrained_bert==0.6.1

- numpy>=1.15.4
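
If you are unsure what is installed, a small check along these lines may help. It is only a sketch: it assumes Python 3.8+ (for `importlib.metadata`) and uses the PyPI distribution names, where PyTorch is published as `torch`. The helper name `installed_versions` is hypothetical.

```python
from importlib import metadata

# Versions listed above, keyed by PyPI distribution name ("pytorch" is installed as "torch").
REQUIRED = {
    "torch": "1.0",
    "pytorch-pretrained-bert": "0.6.1",
    "numpy": "1.15.4",
}

def installed_versions(names, lookup=metadata.version):
    """Return {name: installed version string, or None if the package is missing}."""
    found = {}
    for name in names:
        try:
            found[name] = lookup(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found

if __name__ == "__main__":
    for name, version in installed_versions(REQUIRED).items():
        print(f"{name}: installed={version}, listed={REQUIRED[name]}")
```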

Training & Evaluating

- STEP 1. Run the command below to download the CoNLL-2003 NER dataset.

bash download.sh

It should be extracted to the conll2003/ folder automatically.
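
For a quick look at the data, the sketch below parses CoNLL-2003 style text, where each line holds a token followed by its annotations with the NER tag in the last column, and blank lines separate sentences. The function name `read_conll` and the inline sample are illustrative assumptions; the file names inside conll2003/ are not stated here, so the example reads from a string rather than guessing a path.

```python
def read_conll(text):
    """Parse CoNLL-2003 style text into a list of (tokens, tags) sentence pairs.

    Each non-blank line holds whitespace-separated columns: the token comes first
    and the NER tag last. Blank lines separate sentences, and -DOCSTART- lines
    mark document boundaries.
    """
    sentences, tokens, tags = [], [], []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("-DOCSTART-"):
            if tokens:  # close the sentence collected so far
                sentences.append((tokens, tags))
                tokens, tags = [], []
            continue
        columns = line.split()
        tokens.append(columns[0])
        tags.append(columns[-1])
    if tokens:  # final sentence when the text does not end with a blank line
        sentences.append((tokens, tags))
    return sentences

# Illustrative sample in the four-column layout (token, POS, chunk, NER tag).
sample = """-DOCSTART- -X- -X- O

EU NNP B-NP B-ORG
rejects VBZ B-VP O
German JJ B-NP B-MISC
call NN I-NP O

Peter NNP B-NP B-PER
Blackburn NNP I-NP I-PER
"""
sentences = read_conll(sample)
print(sentences[0])
```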

- STEP 2a. Run the command below to use the feature-based approach.

python train.py --logdir checkpoints/feature --batch_size 128 --top_rnns --lr 1e-4 --n_epochs 30

- STEP 2b. Run the command below to use the fine-tuning approach.

python train.py --logdir checkpoints/finetuning --finetuning --batch_size 32 --lr 5e-5 --n_epochs 3
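
The two commands differ only in their flags. The sketch below shows how such flags could be parsed with `argparse`. It is not the contents of train.py: the flag names come from the commands above, while `build_parser` and the default values are arbitrary placeholders.

```python
import argparse

def build_parser():
    """Argument parser mirroring the flags used in the two commands above.

    Defaults are arbitrary placeholders, not values taken from train.py.
    """
    parser = argparse.ArgumentParser()
    parser.add_argument("--logdir", type=str, default="checkpoints")
    parser.add_argument("--batch_size", type=int, default=32)
    parser.add_argument("--lr", type=float, default=1e-4)
    parser.add_argument("--n_epochs", type=int, default=1)
    parser.add_argument("--finetuning", action="store_true")
    parser.add_argument("--top_rnns", action="store_true")
    return parser

# Parse the exact argument strings from STEP 2a and STEP 2b.
feature = build_parser().parse_args(
    "--logdir checkpoints/feature --batch_size 128 --top_rnns --lr 1e-4 --n_epochs 30".split()
)
finetune = build_parser().parse_args(
    "--logdir checkpoints/finetuning --finetuning --batch_size 32 --lr 5e-5 --n_epochs 3".split()
)
print(feature.top_rnns, feature.finetuning)
print(finetune.top_rnns, finetune.finetuning)
```

Judging by the flag names, `--top_rnns` presumably adds recurrent layers on top of the BERT features and `--finetuning` presumably lets the BERT weights be updated, but check train.py for the actual behavior.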

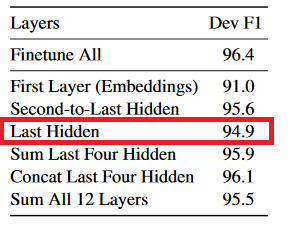

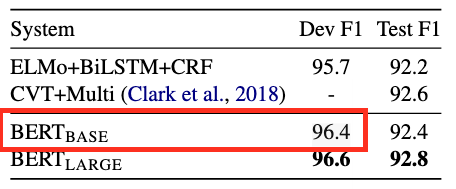

Results in the paper

- Feature-based approach

- Fine-tuning

Results

- F1 scores on the CoNLL-2003 validation set are reported.

- You can check the classification outputs in checkpoints.

| epoch | feature-based | fine-tuning |
|---|---|---|
| 1 | 0.2 | 0.95 |
| 2 | 0.75 | 0.95 |
| 3 | 0.84 | 0.96 |
| 4 | 0.88 | |
| 5 | 0.89 | |
| 6 | 0.90 | |
| 7 | 0.90 | |
| 8 | 0.91 | |
| 9 | 0.91 | |
| 10 | 0.92 | |
| 11 | 0.92 | |
| 12 | 0.93 | |
| 13 | 0.93 | |
| 14 | 0.93 | |
| 15 | 0.93 | |
| 16 | 0.92 | |
| 17 | 0.93 | |
| 18 | 0.93 | |
| 19 | 0.93 | |
| 20 | 0.93 | |
| 21 | 0.94 | |
| 22 | 0.94 | |
| 23 | 0.93 | |
| 24 | 0.93 | |
| 25 | 0.93 | |
| 26 | 0.93 | |
| 27 | 0.93 | |
| 28 | 0.93 | |
| 29 | 0.94 | |
| 30 | 0.93 | |
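
For readers who want to compute a comparable number on their own predictions, here is one possible F1 sketch. It scores at the token level over non-`O` tags, which is an assumption: how the scores in the table were computed is not described here, and entity-level scoring would give different values. The function `token_f1` and the example tags are illustrative.

```python
def token_f1(gold, pred, outside="O"):
    """Token-level precision, recall and F1 over tags other than `outside`."""
    if len(gold) != len(pred):
        raise ValueError("gold and pred must have the same length")
    num_gold = sum(tag != outside for tag in gold)
    num_proposed = sum(tag != outside for tag in pred)
    # A token counts as correct only if it is tagged and the tags match exactly.
    num_correct = sum(g == p and g != outside for g, p in zip(gold, pred))
    precision = num_correct / num_proposed if num_proposed else 0.0
    recall = num_correct / num_gold if num_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Made-up gold and predicted tags for a six-token example.
gold = ["B-ORG", "O", "B-MISC", "O", "B-PER", "I-PER"]
pred = ["B-ORG", "O", "O", "O", "B-PER", "B-PER"]
precision, recall, f1 = token_f1(gold, pred)
print(precision, recall, f1)
```

In this example two of the three proposed tags are correct and two of the four gold tags are recovered, so precision is 2/3, recall is 1/2, and F1 is 4/7.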
