1Konny / Beta Vae

License: MIT

PyTorch implementation of β-VAE

Stars: ✭ 326

Programming Languages

python


Projects that are alternatives of or similar to Beta Vae

Factorvae

PyTorch implementation of FactorVAE, proposed in "Disentangling by Factorising" (http://arxiv.org/abs/1802.05983)

Stars: ✭ 176 (-46.01%)

Mutual labels: unsupervised-learning, vae, celeba

Disentangling Vae

Experiments for understanding disentanglement in VAE latent representations

Stars: ✭ 398 (+22.09%)

Mutual labels: unsupervised-learning, vae, celeba

ladder-vae-pytorch

Ladder Variational Autoencoders (LVAE) in PyTorch

Stars: ✭ 59 (-81.9%)

Mutual labels: vae, unsupervised-learning

Awesome Vaes

A curated list of awesome work on VAEs, disentanglement, representation learning, and generative models.

Stars: ✭ 418 (+28.22%)

Mutual labels: unsupervised-learning, vae

Pycadl

Python package with source code from the course "Creative Applications of Deep Learning w/ TensorFlow"

Stars: ✭ 356 (+9.2%)

Mutual labels: vae, celeba

Variational Autoencoder

Variational autoencoder implemented in tensorflow and pytorch (including inverse autoregressive flow)

Stars: ✭ 807 (+147.55%)

Mutual labels: unsupervised-learning, vae

VAENAR-TTS

PyTorch Implementation of VAENAR-TTS: Variational Auto-Encoder based Non-AutoRegressive Text-to-Speech Synthesis.

Stars: ✭ 66 (-79.75%)

Mutual labels: vae, unsupervised-learning

style-vae

Implementation of VAE and Style-GAN Architecture Achieving State of the Art Reconstruction

Stars: ✭ 25 (-92.33%)

Mutual labels: vae, celeba

srVAE

VAE with RealNVP prior and Super-Resolution VAE in PyTorch. Code release for https://arxiv.org/abs/2006.05218.

Stars: ✭ 56 (-82.82%)

Mutual labels: vae, unsupervised-learning

DCGAN-CelebA-PyTorch-CPP

DCGAN Implementation using PyTorch in both C++ and Python

Stars: ✭ 14 (-95.71%)

Mutual labels: celeba

Pytorch Vsumm Reinforce

AAAI 2018 - Unsupervised video summarization with deep reinforcement learning (PyTorch)

Stars: ✭ 283 (-13.19%)

Mutual labels: unsupervised-learning

UEGAN

[TIP2020] Pytorch implementation of "Towards Unsupervised Deep Image Enhancement with Generative Adversarial Network"

Stars: ✭ 68 (-79.14%)

Mutual labels: unsupervised-learning

S Vae Pytorch

PyTorch implementation of Hyperspherical Variational Auto-Encoders

Stars: ✭ 255 (-21.78%)

Mutual labels: vae

Self-Supervised-depth

Self-Supervised depth kalilia

Stars: ✭ 20 (-93.87%)

Mutual labels: unsupervised-learning

Machine Learning Algorithms From Scratch

Implementing machine learning algorithms from scratch.

Stars: ✭ 297 (-8.9%)

Mutual labels: unsupervised-learning

adareg-monodispnet

Repository for Bilateral Cyclic Constraint and Adaptive Regularization for Unsupervised Monocular Depth Prediction (CVPR2019)

Stars: ✭ 22 (-93.25%)

Mutual labels: unsupervised-learning

learning-topology-synthetic-data

Tensorflow implementation of Learning Topology from Synthetic Data for Unsupervised Depth Completion (RAL 2021 & ICRA 2021)

Stars: ✭ 22 (-93.25%)

Mutual labels: unsupervised-learning

Selflow

SelFlow: Self-Supervised Learning of Optical Flow

Stars: ✭ 319 (-2.15%)

Mutual labels: unsupervised-learning

Simclr

PyTorch implementation of SimCLR: A Simple Framework for Contrastive Learning of Visual Representations by T. Chen et al.

Stars: ✭ 293 (-10.12%)

Mutual labels: unsupervised-learning

β-VAE

PyTorch reproduction of the two papers below:

- β-VAE: Learning Basic Visual Concepts with a Constrained Variational Framework, Higgins et al., ICLR, 2017

- Understanding disentangling in β-VAE, Burgess et al., arXiv:1804.03599, 2018
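For reference, the two training objectives can be written in standard notation as follows. Both are maximised with respect to the encoder parameters φ and decoder parameters θ. This is a summary, not text quoted from the papers. The mapping to the command-line flags (β as --beta; γ and the final value of C as --gamma and --C_max) is an interpretation of the usage section. With β = 1, objective H reduces to the standard VAE evidence lower bound.

```latex
% Objective H (Higgins et al., 2017): beta-weighted ELBO
\[
\mathcal{L}_H(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]
  - \beta \, D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)
\]

% Objective B (Burgess et al., 2018): KL term pulled towards a capacity C that is
% increased linearly from zero during training
\[
\mathcal{L}_B(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right]
  - \gamma \left| D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right) - C \right|
\]

% Closed-form KL for q = N(mu, diag(sigma^2)) and prior p(z) = N(0, I), J latent dimensions
\[
D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)
  = \frac{1}{2} \sum_{j=1}^{J} \left( \mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1 \right)
\]
```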

Dependencies

python 3.6.4

pytorch 0.3.1.post2

visdom

Datasets

Usage

Start the visdom server:

python -m visdom.server

You can reproduce the results below by running:

sh run_celeba_H_beta10_z10.sh

sh run_celeba_H_beta10_z32.sh

sh run_3dchairs_H_beta4_z10.sh

sh run_3dchairs_H_beta4_z16.sh

sh run_dsprites_B_gamma100_z10.sh

Alternatively, you can run your own experiments by setting the parameters manually.

The --objective and --model arguments each take one of two options, H or B, which select the methods proposed in Higgins et al. and Burgess et al., respectively.
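As a rough illustration of objective H, the sketch below does two things. It computes the closed-form KL divergence between a diagonal Gaussian posterior and a standard normal prior, then adds that KL, weighted by beta, to a reconstruction loss. It is written in plain Python rather than PyTorch so it runs without dependencies. The function names are hypothetical and not taken from the repository. The reconstruction loss is assumed to be computed elsewhere, and averaging the KL over the batch is an assumed reduction.

```python
import math
from typing import Sequence


def gaussian_kl(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL(N(mu, diag(exp(logvar))) || N(0, I)) for one sample, summed over latent dims."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))


def batch_kl(mus: Sequence[Sequence[float]], logvars: Sequence[Sequence[float]]) -> float:
    """Average the per-sample KL over a batch (assumed reduction)."""
    return sum(gaussian_kl(m, lv) for m, lv in zip(mus, logvars)) / len(mus)


def objective_h_loss(recon_loss: float, kld: float, beta: float) -> float:
    """Objective H (Higgins et al.): reconstruction loss plus beta-weighted KL.

    Minimising this loss corresponds to maximising the beta-weighted ELBO.
    """
    return recon_loss + beta * kld


# Usage with made-up numbers; beta = 4 matches the 3D Chairs example command.
kld = batch_kl([[0.0, 1.0], [0.5, -0.5]], [[0.0, 0.0], [0.0, 0.0]])
loss = objective_h_loss(recon_loss=100.0, kld=kld, beta=4.0)
```

Raising beta above 1 puts more weight on keeping the posterior close to the prior. This is the mechanism Higgins et al. use to encourage disentangled latents, at some cost in reconstruction quality.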

The arguments --C_max and --C_stop_iter should be set when using --objective B. For further details, please refer to Burgess et al.
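Objective B replaces the fixed beta with a target capacity C that grows during training, and it penalises the distance between the KL term and C. The sketch below assumes C grows linearly from 0 to --C_max over the first --C_stop_iter iterations and is then held constant. This follows the capacity schedule described in Burgess et al. The function names are hypothetical. The example values come from the dSprites command in this README.

```python
def capacity(global_iter: int, c_max: float, c_stop_iter: float) -> float:
    """Target KL capacity C: linear ramp from 0 to c_max, clamped to [0, c_max]."""
    return min(max(c_max * global_iter / c_stop_iter, 0.0), c_max)


def objective_b_loss(recon_loss: float, kld: float, gamma: float, c: float) -> float:
    """Objective B (Burgess et al.): reconstruction loss plus gamma * |KL - C|."""
    return recon_loss + gamma * abs(kld - c)


# Example values taken from the dSprites command: --gamma 1000 --C_max 25 --C_stop_iter 1e5.
GAMMA, C_MAX, C_STOP_ITER = 1000.0, 25.0, 1e5

for it in (0, 50_000, 100_000, 200_000):
    c = capacity(it, C_MAX, C_STOP_ITER)
    # Made-up reconstruction loss (10.0) and KL (20.0) to show how the penalty changes.
    print(f"iter {it:>7}: C = {c:5.2f}, loss = {objective_b_loss(10.0, 20.0, GAMMA, c):.1f}")
```

The penalty uses an absolute value, so the loss pushes the KL up when it is below C and down when it is above. A large gamma therefore makes the KL track the schedule closely.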

For example:

python main.py --dataset 3DChairs --beta 4 --lr 1e-4 --z_dim 10 --objective H --model H --max_iter 1e6 ...

python main.py --dataset dsprites --gamma 1000 --C_max 25 --C_stop_iter 1e5 --lr 5e-4 --z_dim 10 --objective B --model B --max_iter 1e6 ...
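To show how the flags in these commands fit together, here is a hypothetical argparse front end. It declares only the flags that appear in the two example commands. Its defaults are illustrative and not necessarily those of the repository's main.py. It enforces the rule stated above that --C_max and --C_stop_iter must be given with --objective B. Values such as 1e6 are parsed as floats, because int() does not accept scientific notation.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser: covers only the flags used in the example commands,
    # with illustrative defaults; it is not the repository's actual argument list.
    p = argparse.ArgumentParser(description="beta-VAE training (sketch)")
    p.add_argument("--dataset", type=str, required=True)
    p.add_argument("--objective", choices=["H", "B"], default="H")
    p.add_argument("--model", choices=["H", "B"], default="H")
    p.add_argument("--beta", type=float, default=4.0)
    p.add_argument("--gamma", type=float, default=1000.0)
    p.add_argument("--C_max", type=float, default=None)
    p.add_argument("--C_stop_iter", type=float, default=None)  # float so "1e5" parses
    p.add_argument("--lr", type=float, default=1e-4)
    p.add_argument("--z_dim", type=int, default=10)
    p.add_argument("--max_iter", type=float, default=1e6)  # float so "1e6" parses
    return p


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    p = build_parser()
    args = p.parse_args(argv)
    # Objective B needs a capacity schedule, so both C arguments are mandatory.
    if args.objective == "B" and (args.C_max is None or args.C_stop_iter is None):
        p.error("--C_max and --C_stop_iter are required when --objective B")
    return args


# Usage: parse the dSprites example (without the trailing "...").
args = parse_args(
    "--dataset dsprites --gamma 1000 --C_max 25 --C_stop_iter 1e5 "
    "--lr 5e-4 --z_dim 10 --objective B --model B --max_iter 1e6".split()
)
```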

Check the training progress on the visdom server at:

localhost:8097
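Before launching a long run, it can help to confirm that the server started with python -m visdom.server is actually listening. The helper below is a small, hypothetical standard-library check. It is not part of the repository or of the visdom package. It only tests that something answers HTTP requests at the given address, which defaults to localhost:8097 as above.

```python
import http.client
import urllib.request


def visdom_is_up(host: str = "localhost", port: int = 8097, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers at host:port with a non-error status."""
    url = f"http://{host}:{port}"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # URLError and HTTPError (both subclasses of OSError) cover refused connections,
        # timeouts and 4xx/5xx responses; HTTPException covers malformed replies.
        return False
```

A training script could call visdom_is_up() once at startup and print a hint to run python -m visdom.server if it returns False.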

Results

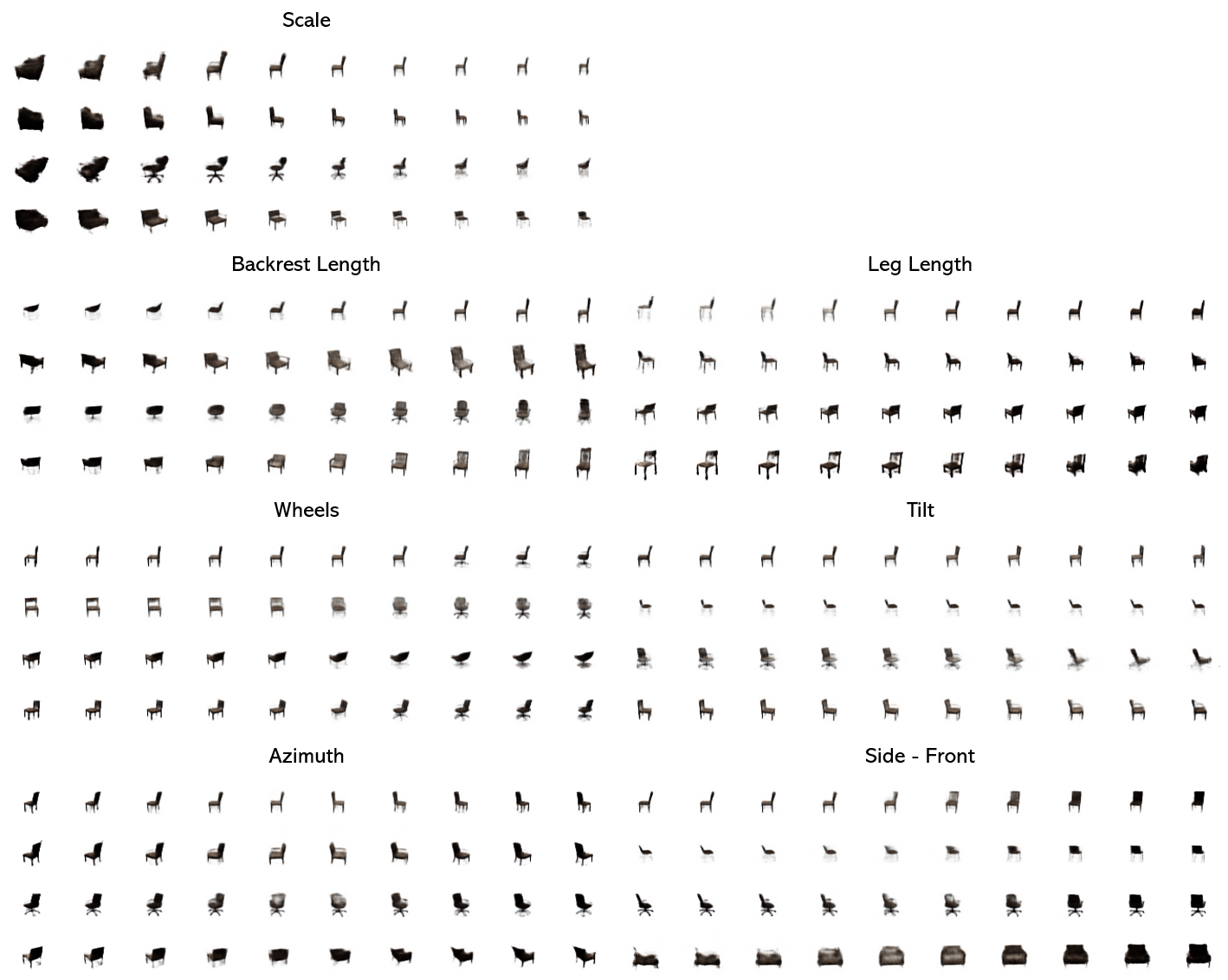

3D Chairs

sh run_3dchairs_H_beta4_z10.sh

sh run_3dchairs_H_beta4_z16.sh

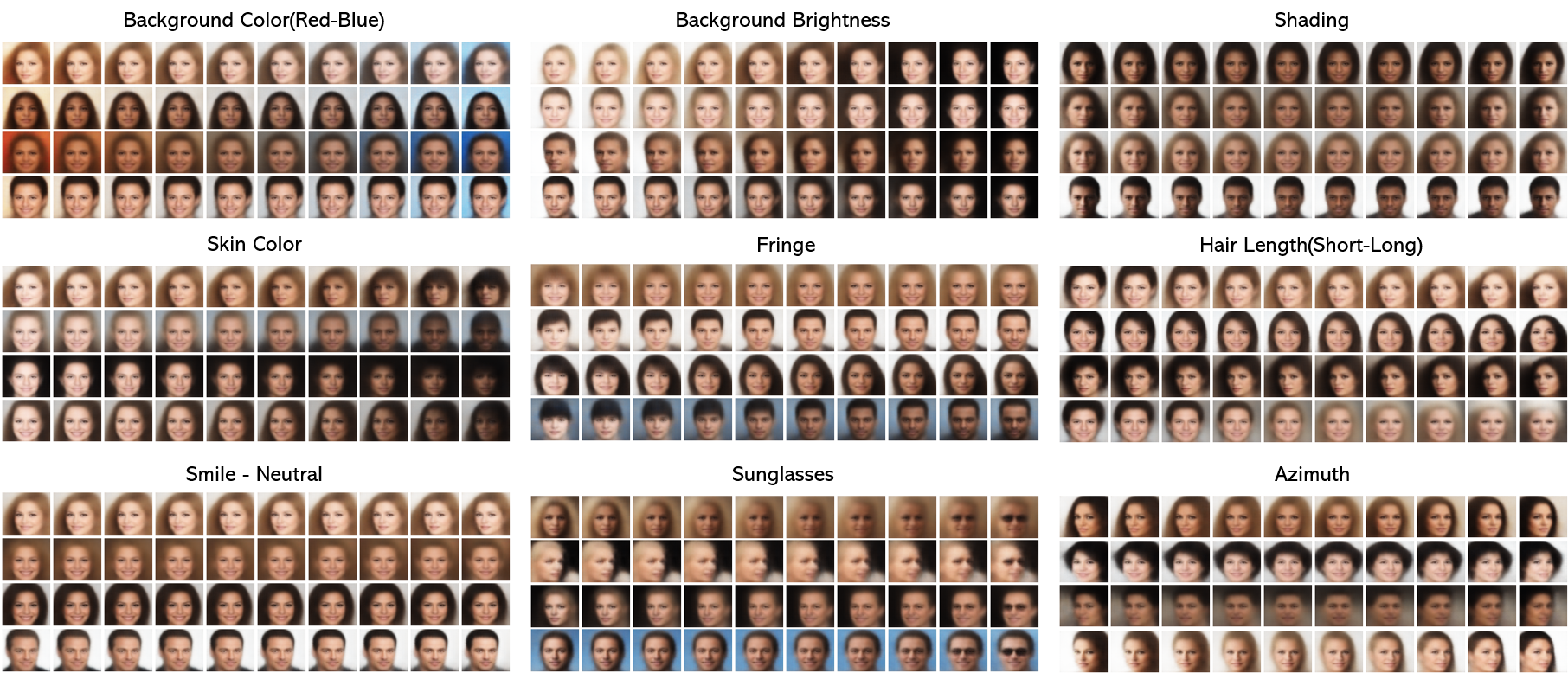

CelebA

sh run_celeba_H_beta10_z10.sh

sh run_celeba_H_beta10_z32.sh

dSprites

sh run_dsprites_B.sh

visdom line plot

latent traversal GIF (--save_output True)

reconstruction (left: ground truth, right: reconstruction)
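A latent traversal like the GIF above varies one latent dimension at a time while keeping the others fixed, then decodes each modified code. The sketch below only builds the grid of latent vectors; the decoder call is omitted. The range [-3, 3] and the step of 2/3 are illustrative assumptions, and the function names are hypothetical.

```python
from typing import List


def traversal_values(limit: float = 3.0, step: float = 2 / 3) -> List[float]:
    """Evenly spaced values from -limit to +limit (inclusive when step divides 2 * limit)."""
    n = int(round(2 * limit / step))
    return [-limit + i * step for i in range(n + 1)]


def latent_traversal(z: List[float], limit: float = 3.0, step: float = 2 / 3) -> List[List[List[float]]]:
    """For each latent dimension, return copies of z with only that dimension swept."""
    grid = []
    for dim in range(len(z)):
        row = []
        for value in traversal_values(limit, step):
            z_new = list(z)  # copy so the base code z is left unchanged
            z_new[dim] = value
            row.append(z_new)
        grid.append(row)
    return grid


# Usage with a made-up 3-dimensional base code (e.g. the posterior mean of one image).
grid = latent_traversal([0.1, 0.2, 0.3])
```

Each row of the grid corresponds to one latent dimension. Decoding every vector in a row gives one strip of images. Taking the i-th image from every row gives one frame of an animation.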

