1Konny / Factorvae

License: MIT

PyTorch implementation of FactorVAE, proposed in Disentangling by Factorising (http://arxiv.org/abs/1802.05983)

FactorVAE

PyTorch implementation of FactorVAE, proposed in Disentangling by Factorising, Kim et al. (http://arxiv.org/abs/1802.05983)
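
For orientation, the objective maximised in the referenced paper can be written as below. It is transcribed from the paper, not derived from this repository's code, so treat it as a reading aid. Here $\gamma$ is the weight on the extra penalty term; that the `--gamma` flag and the `gamma6p4` / `gamma10` script names in the Usage section refer to this weight is an assumption based on the names.

```latex
\frac{1}{N}\sum_{i=1}^{N}
  \Big[
    \mathbb{E}_{q(z \mid x^{(i)})}\big[\log p(x^{(i)} \mid z)\big]
    - \mathrm{KL}\big(q(z \mid x^{(i)}) \,\|\, p(z)\big)
  \Big]
  - \gamma\, \mathrm{KL}\big(q(z) \,\|\, \bar{q}(z)\big),
\qquad
\bar{q}(z) = \prod_{j=1}^{d} q(z_j)
```

The last KL term is the total correlation of the latent code. The paper estimates it with a separate discriminator, which is presumably why the example command takes both `--lr_VAE` and `--lr_D`.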

Dependencies

python 3.6.4

pytorch 0.4.0 (or check the pytorch-0.3.1 branch for pytorch 0.3.1)

visdom

tqdm

Datasets

- 2D Shapes (dsprites) Dataset

sh scripts/prepare_data.sh dsprites

- 3D Chairs Dataset

sh scripts/prepare_data.sh 3DChairs

- CelebA Dataset (download)

# first download img_align_celeba.zip and put it in the data directory as shown below

└── data
    └── img_align_celeba.zip

# then run the script

sh scripts/prepare_data.sh CelebA

The data directory structure will then look like this:

.
└── data
    ├── dsprites-dataset
    │   └── dsprites_ndarray_co1sh3sc6or40x32y32_64x64.npz
    ├── 3DChairs
    │   └── images
    │       ├── 1_xxx.png
    │       ├── 2_xxx.png
    │       └── ...
    ├── CelebA
    │   └── img_align_celeba
    │       ├── 000001.jpg
    │       ├── 000002.jpg
    │       ├── ...
    │       └── 202599.jpg
    └── ...
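
Before training, it can help to confirm that the preparation scripts produced the layout shown above. The following is a minimal sketch using only the standard library; the helper name `missing_datasets` and the dictionary labels are hypothetical and are not part of this repository.

```python
from pathlib import Path

# Paths copied from the directory tree above, relative to the data directory.
# The dictionary keys are illustrative labels, not names used by the repository.
EXPECTED = {
    "dsprites": Path("dsprites-dataset") / "dsprites_ndarray_co1sh3sc6or40x32y32_64x64.npz",
    "3DChairs": Path("3DChairs") / "images",
    "CelebA": Path("CelebA") / "img_align_celeba",
}


def missing_datasets(data_dir="data"):
    """Return the labels whose expected file or folder does not exist."""
    root = Path(data_dir)
    return [name for name, rel in EXPECTED.items() if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_datasets()
    if missing:
        print("Not prepared yet:", ", ".join(missing))
    else:
        print("All three datasets are in place.")
```

The check only looks at whether the paths exist; it does not verify file counts or contents.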

NOTE: I recommend preprocessing image files (e.g. resizing) BEFORE training rather than preprocessing them on the fly.

Usage

Start the visdom server:

python -m visdom.server

You can reproduce the results below as follows, e.g.:

sh scripts/run_celeba.sh $RUN_NAME

sh scripts/run_dsprites_gamma6p4.sh $RUN_NAME

sh scripts/run_dsprites_gamma10.sh $RUN_NAME

sh scripts/run_3dchairs.sh $RUN_NAME

Or you can run your own experiments by setting the parameters manually, e.g.:

python main.py --name run_celeba --dataset celeba --gamma 6.4 --lr_VAE 1e-4 --lr_D 5e-5 --z_dim 10 ...
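
To make the flags in the command above easier to read, here is a hedged sketch of how such options could be declared with `argparse`. It covers only the flags that appear in the example; the function name `build_parser`, the help strings, and the defaults (copied from the example values) are assumptions, and the real `main.py` may define more options or different defaults.

```python
import argparse


def build_parser():
    """Hypothetical parser covering only the flags shown in the example command."""
    p = argparse.ArgumentParser(description="FactorVAE run configuration (sketch)")
    p.add_argument("--name", type=str, required=True, help="run name")
    p.add_argument("--dataset", type=str, required=True, help="dataset name, e.g. celeba")
    # Defaults below simply mirror the example command; they are assumptions.
    p.add_argument("--gamma", type=float, default=6.4, help="gamma hyperparameter")
    p.add_argument("--lr_VAE", type=float, default=1e-4, help="learning rate of the VAE")
    p.add_argument("--lr_D", type=float, default=5e-5, help="learning rate of the discriminator")
    p.add_argument("--z_dim", type=int, default=10, help="dimension of the latent code z")
    return p


# Parse the same flags as the example command, passed explicitly as a list
# so the snippet does not depend on the real command line.
args = build_parser().parse_args(
    "--name run_celeba --dataset celeba --gamma 6.4 "
    "--lr_VAE 1e-4 --lr_D 5e-5 --z_dim 10".split()
)
print(vars(args))
```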

Check the training process on the visdom server:

localhost:8097

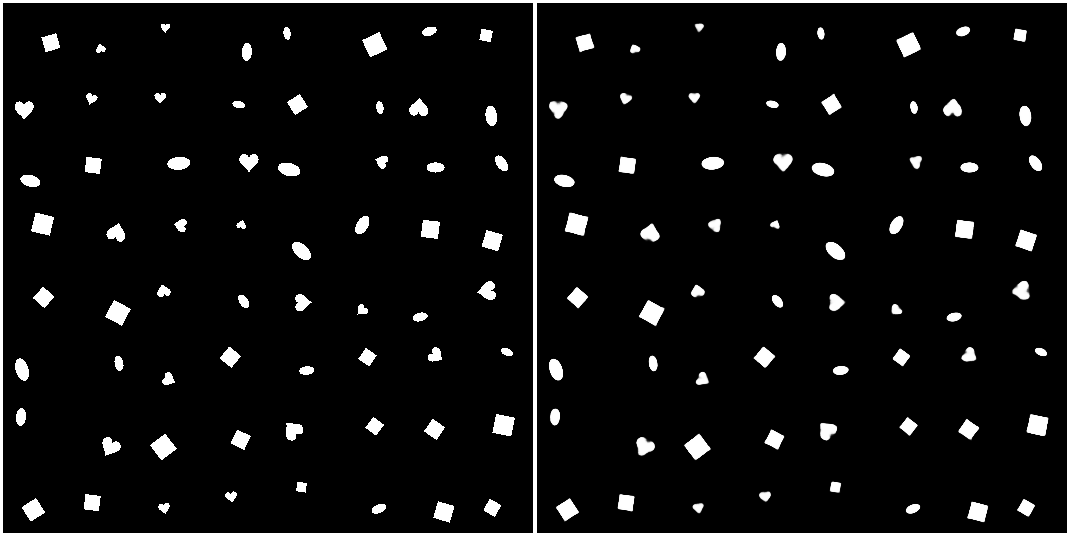
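
If the page at localhost:8097 does not load, a quick way to tell whether the visdom server is listening is a plain TCP connection test. This is a minimal standard-library sketch; the helper name `visdom_reachable` is hypothetical, and it only checks that something accepts connections on the port, not that it is actually visdom.

```python
import socket


def visdom_reachable(host="localhost", port=8097, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    if visdom_reachable():
        print("Port 8097 is open; the visdom server appears to be running.")
    else:
        print("Nothing is listening on port 8097; start it with: python -m visdom.server")
```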

Results - 2D Shapes (dsprites) Dataset

Reconstruction ($\gamma$ = 6.4)

Latent Space Traverse ($\gamma$ = 6.4)

Latent Space Traverse ($\gamma$ = 10)

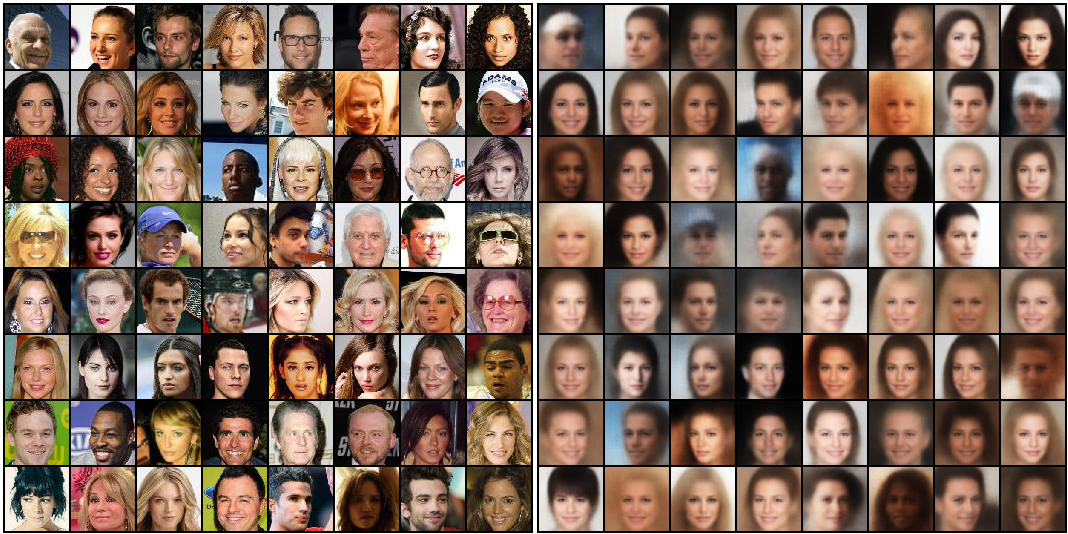

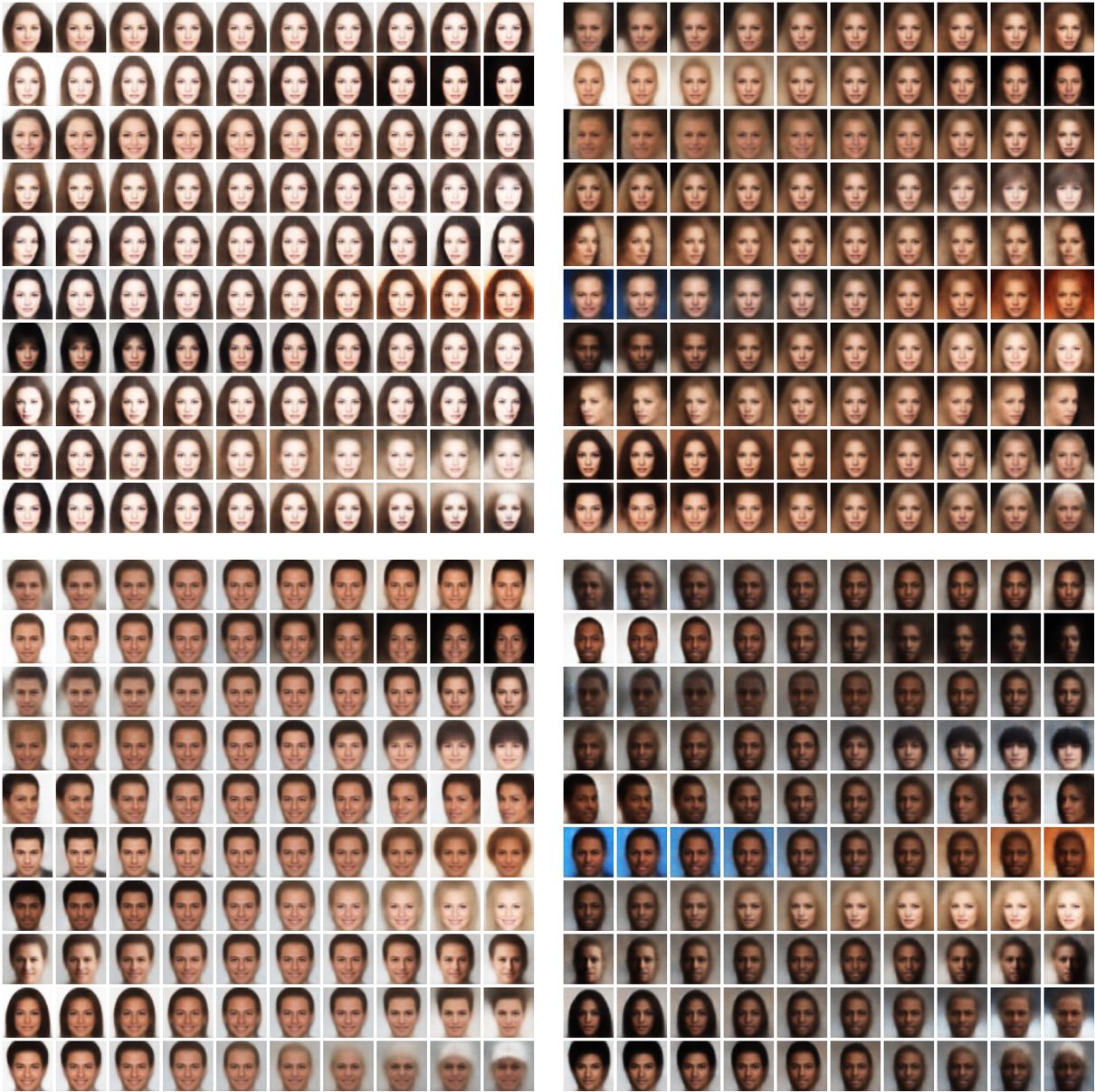

Results - CelebA Dataset

Reconstruction

Latent Space Traverse

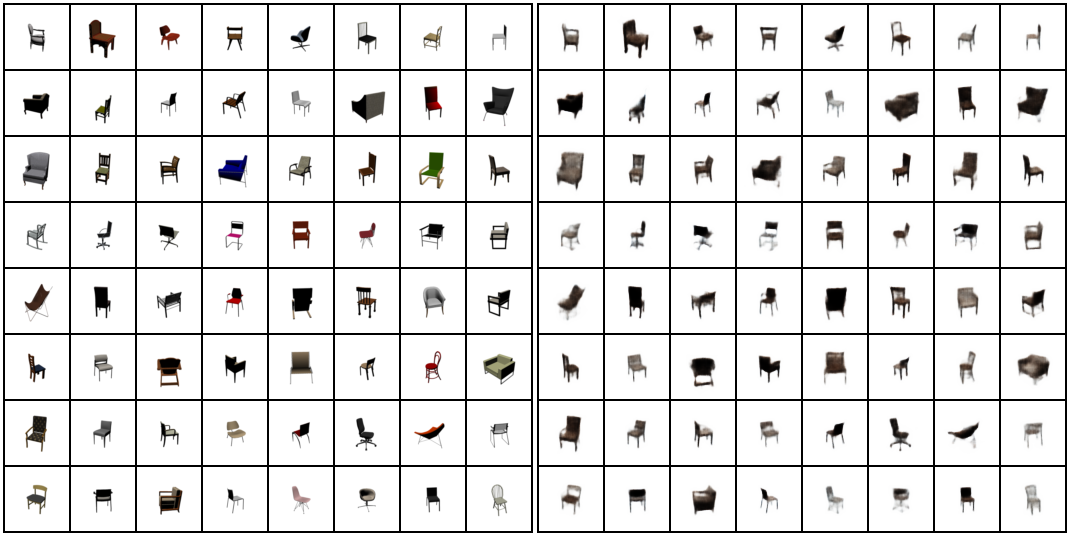

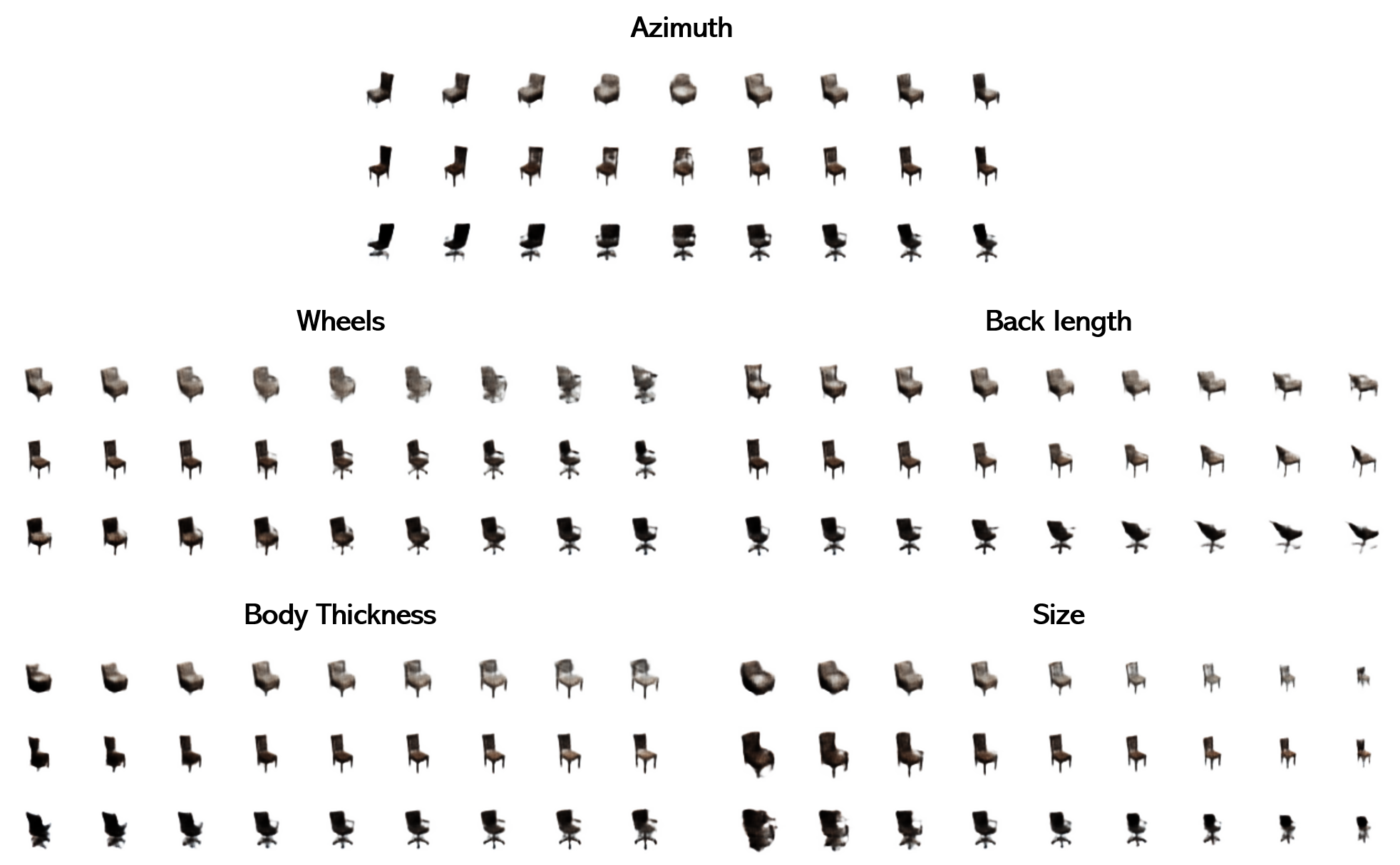

Results - 3D Chairs Dataset

Reconstruction

Latent Space Traverse

Reference

- Disentangling by Factorising, Kim et al. (http://arxiv.org/abs/1802.05983)
