Bigtable Autoscaler Operator

Bigtable Autoscaler Operator is a Kubernetes Operator to autoscale the number of nodes of a Google Cloud Bigtable instance based on the CPU utilization.

Overview

Google Cloud Bigtable is designed to scale horizontally, meaning that the number of nodes of an instance can be increased to balance and reduce the average CPU utilization. For Bigtable applications dealing with high workload variance, automating the cluster scaling allows handling short load bursts while keeping costs as low as possible. This operator automates the scaling by adjusting the number of nodes to keep the CPU utilization under the target set in the manifest.

The reconciler's responsibility is to keep the CPU utilization of the instance below the target specification while respecting the minimum and maximum number of nodes. When the CPU utilization is above the target, the reconciler increases the number of nodes in steps linearly proportional to how far the utilization is above the target. For example, with 100% CPU utilization and only one node running, a CPU target of 50% increases the cluster to 2 nodes, while a CPU target of 25% increases it to 4 nodes.
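The linear upscale rule can be sketched in Go as follows. This is a minimal illustration and not the operator's actual code: the function name `desiredNodes` and its parameters are hypothetical, and percentages are assumed to be whole numbers such as 50.

```go
package main

import "fmt"

// desiredNodes is a hypothetical helper that returns the node count needed to
// bring the average CPU utilization down to the target, clamped to the
// [minNodes, maxNodes] range. It is an assumption-based sketch of the linear
// rule described above, not the operator's real implementation.
func desiredNodes(currentNodes, cpuPercent, targetPercent, minNodes, maxNodes int) int {
	// Total load is currentNodes * cpuPercent; divide it by the per-node
	// target and round up (integer ceiling division).
	needed := (currentNodes*cpuPercent + targetPercent - 1) / targetPercent
	if needed < minNodes {
		return minNodes
	}
	if needed > maxNodes {
		return maxNodes
	}
	return needed
}

func main() {
	fmt.Println(desiredNodes(1, 100, 50, 1, 10)) // 2
	fmt.Println(desiredNodes(1, 100, 25, 1, 10)) // 4
	fmt.Println(desiredNodes(4, 100, 25, 1, 10)) // 10 (16 capped at maxNodes)
}
```

The first two calls reproduce the example from the text: one node at 100% CPU becomes 2 nodes for a 50% target and 4 nodes for a 25% target.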

The downscale also follows a linear rule, but it considers the maxScaleDownNodes specification, which defines the maximum downscale step size in order to avoid aggressive downscaling.

Furthermore, the downscale step is calculated using the number of nodes currently running and the CPU target. For example, if there are two nodes running and the CPU target is 50%, the CPU utilization must go below 25% for a downscale to occur. This is important to avoid a downscale that immediately causes an upscale.
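A sketch of the downscale rule in Go, consistent with the two-node example above. The function name and parameters are hypothetical, and the handling of the exact boundary (utilization equal to the threshold) is an assumption; the operator's real code may differ.

```go
package main

import "fmt"

// nodesAfterDownscale is a hypothetical helper that returns the node count
// after one downscale step. It removes nodes only when the remaining nodes
// could absorb the load without exceeding the target, and never removes more
// than maxScaleDownNodes at once.
func nodesAfterDownscale(currentNodes, cpuPercent, targetPercent, minNodes, maxScaleDownNodes int) int {
	// Smallest node count that keeps utilization at or below the target
	// (integer ceiling division).
	needed := (currentNodes*cpuPercent + targetPercent - 1) / targetPercent
	if needed >= currentNodes {
		return currentNodes // no headroom: removing a node would exceed the target
	}
	step := currentNodes - needed
	if step > maxScaleDownNodes {
		step = maxScaleDownNodes // limit the step to avoid aggressive downscaling
	}
	next := currentNodes - step
	if next < minNodes {
		next = minNodes
	}
	return next
}

func main() {
	fmt.Println(nodesAfterDownscale(2, 24, 50, 1, 2))  // 1: below 25%, one node is enough
	fmt.Println(nodesAfterDownscale(2, 30, 50, 1, 2))  // 2: one node would run at 60%
	fmt.Println(nodesAfterDownscale(10, 10, 50, 1, 2)) // 8: step capped at 2 nodes
}
```

With two nodes and a 50% target, a single node would carry twice the per-node load, so the sketch only downscales once utilization is low enough that the doubled value still fits under the target.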

All scale operations respect a reaction time window, which at this time is not part of the manifest specification.

The image below shows how peaks above the CPU target of 50% are shortened by the automatic increase of nodes.

Usage

Create a k8s secret with your service account:

$ kubectl create secret generic bigtable-autoscaler-service-account --from-file=service-account=./your_service_account.json

Create an autoscaling manifest:

# my-autoscaler.yml
apiVersion: bigtable.bigtable-autoscaler.com/v1
kind: BigtableAutoscaler
metadata:
  name: my-autoscaler
spec:
  bigtableClusterRef:
    projectId: cool-project
    instanceId: my-instance-id
    clusterId: my-cluster-id
  serviceAccountSecretRef:
    # must match the name of the secret created above
    name: bigtable-autoscaler-service-account
    key: service-account
  minNodes: 1
  maxNodes: 10
  targetCPUUtilization: 50

Then you can install it on your k8s cluster:

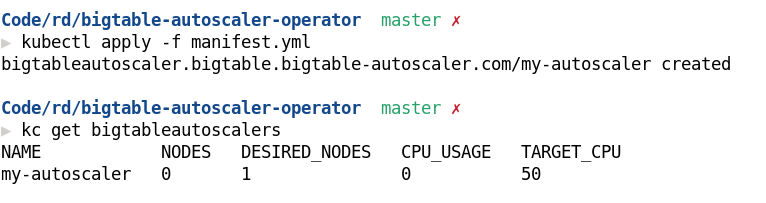

$ kubectl apply -f my-autoscaler.yml

You can check that your autoscaler is running:

$ kubectl get bigtableautoscalers

Prerequisites

- Enable Bigtable and Monitoring APIs on your GCP project.

- Generate a service account secret with the role for Bigtable administrator.

Installation

- Visit the releases page, download the all-in-one.yml of the version of your choice, and apply it:

    kubectl apply -f all-in-one.yml

Development environment

These are the steps for setting up the development environment.

This project uses Go version 1.13 and specific versions of the other tools listed below. Builds with other versions are not guaranteed to succeed.

- Install kubebuilder version 2.3.2.
  - Also make sure that you have its dependencies installed: controller-gen version 0.5.0 and kustomize version 3.10.0.

Option 1: Run with Tilt (recommended)

Tilt is a tool that automates the development cycle and has features like hot deploy.

- Install tilt version 0.19.0 (follow the official instructions).
  - Install its dependencies: ctlptl and kind (or another tool to create local k8s clusters) as instructed.

- If it doesn't exist, create your k8s cluster using ctlptl:

    ctlptl create cluster kind --registry=ctlptl-registry

- Provide the secret with the service account credentials and role as described in section Secret setup.

- Run:

    tilt up

Option 2: Manual run

Running manually requires some extra steps!

- If it doesn't exist, create your local k8s cluster. Here we will use kind to create it:

    kind create cluster

- Provide the secret with the service account credentials and role as described in section Secret setup.

- Check that your cluster is running correctly:

    kubectl cluster-info

- Apply the Custom Resource Definition:

    make install

- Build the docker image with the manager binary:

    make docker-build

- Load this image into the cluster:

    kind load docker-image controller:latest

- Deploy the operator to the local cluster:

    make deploy

- Apply the autoscaler sample:

    kubectl apply -f config/samples/bigtable_v1_bigtableautoscaler.yaml

- Check pods and logs:

    kubectl -n bigtable-autoscaler-system logs $(kubectl -n bigtable-autoscaler-system get pods | tail -n1 | cut -d ' ' -f1) --all-containers

Secret setup

- Use the service account from the Prerequisites section to create the k8s secret:

    kubectl create secret generic bigtable-autoscaler-service-account --from-file=service-account=./your_service_account.json

- Create the role and rolebinding to read the secret:

    kubectl apply -f config/rbac/secret-role.yml

Running tests

go test ./... -v

or

gotestsum