datashim-io / Datashim

Datashim

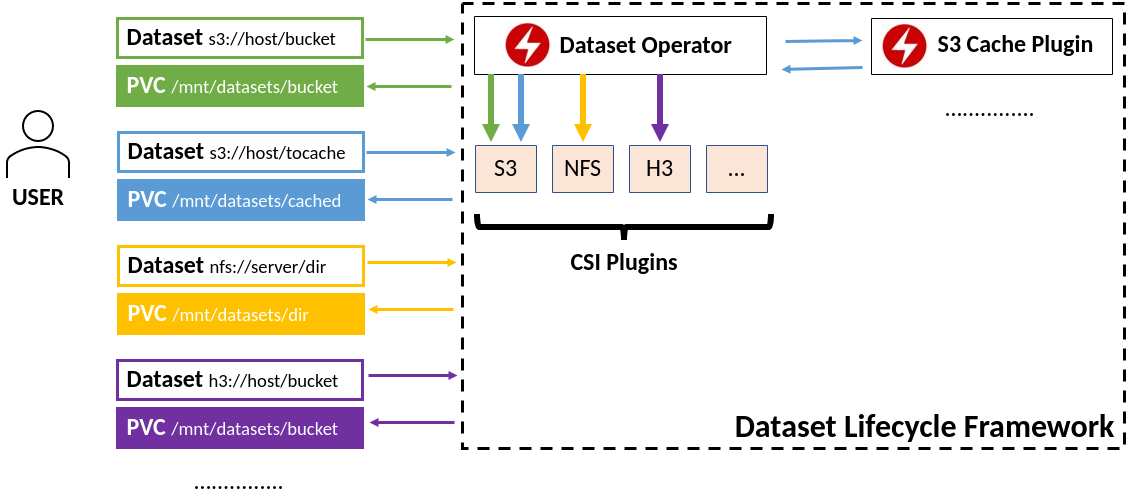

Our framework introduces the Dataset CRD, which is a pointer to existing S3 and NFS data sources. It includes the logic needed to map these Datasets into Persistent Volume Claims and ConfigMaps that users can reference in their pods, letting them focus on workload development rather than on configuring, mounting, and tuning data access. Thanks to the Container Storage Interface, it can be extended to support additional data sources in the future.

A Kubernetes framework that provides easy access to S3 and NFS Datasets within pods. It orchestrates the provisioning of the Persistent Volume Claims and ConfigMaps needed for each Dataset. Find more details in our FAQ.

Quickstart

To quickly deploy DLF, run the command that matches your environment:

- Kubernetes/Minikube

kubectl apply -f https://raw.githubusercontent.com/IBM/dataset-lifecycle-framework/master/release-tools/manifests/dlf.yaml

- Kubernetes on IBM Cloud

kubectl apply -f https://raw.githubusercontent.com/IBM/dataset-lifecycle-framework/master/release-tools/manifests/dlf-ibm-k8s.yaml

- Openshift

kubectl apply -f https://raw.githubusercontent.com/IBM/dataset-lifecycle-framework/master/release-tools/manifests/dlf-oc.yaml

- Openshift on IBM Cloud

kubectl apply -f https://raw.githubusercontent.com/IBM/dataset-lifecycle-framework/master/release-tools/manifests/dlf-ibm-oc.yaml

Wait for all the pods to be ready :)

kubectl wait --for=condition=ready pods -l app.kubernetes.io/name=dlf -n dlf
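The install and wait steps above can be wrapped in a small helper. This is a minimal sketch, not part of the project: the function names `dlf_manifest_url` and `dlf_install` and the short environment names (`k8s`, `ibm-k8s`, `oc`, `ibm-oc`) are hypothetical, while the URLs and the `kubectl` commands are copied from the steps above.

```shell
#!/bin/sh
# Base URL copied from the install commands above.
DLF_MANIFEST_BASE="https://raw.githubusercontent.com/IBM/dataset-lifecycle-framework/master/release-tools/manifests"

# Print the manifest URL for an environment.
# The short names accepted here are hypothetical labels for this sketch.
dlf_manifest_url() {
  case "$1" in
    k8s)     echo "$DLF_MANIFEST_BASE/dlf.yaml" ;;
    ibm-k8s) echo "$DLF_MANIFEST_BASE/dlf-ibm-k8s.yaml" ;;
    oc)      echo "$DLF_MANIFEST_BASE/dlf-oc.yaml" ;;
    ibm-oc)  echo "$DLF_MANIFEST_BASE/dlf-ibm-oc.yaml" ;;
    *)       echo "unknown environment: $1" >&2; return 1 ;;
  esac
}

# Apply the manifest, then wait for the pods, mirroring the two steps above.
dlf_install() {
  url=$(dlf_manifest_url "$1") || return 1
  kubectl apply -f "$url" &&
    kubectl wait --for=condition=ready pods -l app.kubernetes.io/name=dlf -n dlf
}

# Usage (needs a reachable cluster): dlf_install k8s
```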

As an optional step, label the namespace (or namespaces) in which you want the pod labelling functionality (see below).

kubectl label namespace default monitor-pods-datasets=enabled
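If several namespaces need the label, the same command can be looped. The sketch below assumes a hypothetical helper name, `enable_dataset_pod_labels`; the label key and value come from the command above, and `--overwrite` is added only so that repeating the call on an already-labelled namespace does not fail.

```shell
#!/bin/sh
# Label every namespace passed as an argument (helper name is hypothetical).
# --overwrite keeps the call repeatable on namespaces that already carry the label.
enable_dataset_pod_labels() {
  for ns in "$@"; do
    kubectl label namespace "$ns" monitor-pods-datasets=enabled --overwrite || return 1
  done
}

# Usage (needs a reachable cluster): enable_dataset_pod_labels default team-a
```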

If you don't have an existing S3 bucket, follow our wiki to deploy an Object Store and populate it with data.

We will now create a Dataset named example-dataset pointing to your S3 bucket.

cat <<EOF | kubectl apply -f -
apiVersion: com.ie.ibm.hpsys/v1alpha1
kind: Dataset
metadata:
  name: example-dataset
spec:
  local:
    type: "COS"
    accessKeyID: "{AWS_ACCESS_KEY_ID}"
    secretAccessKey: "{AWS_SECRET_ACCESS_KEY}"
    endpoint: "{S3_SERVICE_URL}"
    bucket: "{BUCKET_NAME}"
    readonly: "true" #OPTIONAL, default is false
    region: "" #OPTIONAL
EOF
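Rather than editing the placeholders by hand, the manifest can be rendered from environment variables. The following is a sketch under assumptions: the function name `render_dataset` and the optional variables `DATASET_READONLY` and `DATASET_REGION` are hypothetical, and the required variable names simply reuse the placeholder names from the manifest above.

```shell
#!/bin/sh
# Print a Dataset manifest, reading connection details from the environment.
# The body runs in a subshell, so a failed ${VAR:?} check (variable unset or
# empty) stops only this function and returns a non-zero status.
render_dataset() (
  cat <<EOF
apiVersion: com.ie.ibm.hpsys/v1alpha1
kind: Dataset
metadata:
  name: ${1:-example-dataset}
spec:
  local:
    type: "COS"
    accessKeyID: "${AWS_ACCESS_KEY_ID:?}"
    secretAccessKey: "${AWS_SECRET_ACCESS_KEY:?}"
    endpoint: "${S3_SERVICE_URL:?}"
    bucket: "${BUCKET_NAME:?}"
    readonly: "${DATASET_READONLY:-false}"
    region: "${DATASET_REGION:-}"
EOF
)

# Usage (needs a reachable cluster):
#   render_dataset example-dataset | kubectl apply -f -
```

Keeping the credentials in the environment avoids writing them into a file that might be committed by accident.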

If everything worked okay, you should see a PVC and a ConfigMap named example-dataset which you can mount in your pods.
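To check for both objects and mount the PVC explicitly, something like the following could be used. It is a sketch only: the helper names, the default pod name `nginx-manual`, and the volume name `dataset` are hypothetical, and the mount path is an arbitrary choice for this example. The `persistentVolumeClaim` volume type is standard Kubernetes.

```shell
#!/bin/sh
# Check that the PVC and the ConfigMap named after the Dataset exist.
check_dataset_objects() {
  kubectl get pvc "$1" && kubectl get configmap "$1"
}

# Print a pod manifest that mounts the Dataset's PVC by hand.
# $1 = Dataset (and PVC) name, $2 = pod name (hypothetical default).
render_manual_pod() {
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${2:-nginx-manual}
spec:
  containers:
    - name: nginx
      image: nginx
      volumeMounts:
        - name: dataset
          mountPath: /mnt/datasets/$1
  volumes:
    - name: dataset
      persistentVolumeClaim:
        claimName: $1
EOF
}

# Usage (needs a reachable cluster):
#   check_dataset_objects example-dataset
#   render_manual_pod example-dataset | kubectl apply -f -
```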

As an easier way to use the Dataset in your pod, you can instead label the pod as follows:

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    dataset.0.id: "example-dataset"
    dataset.0.useas: "mount"
spec:
  containers:
    - name: nginx
      image: nginx

By convention, the Dataset will be mounted at /mnt/datasets/example-dataset. If you instead wish to pass the connection details as environment variables, change the useas line to dataset.0.useas: "configmap".
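Both label variants can be generated from one template. This is a sketch with a hypothetical function name, `render_labelled_pod`; it accepts only the two `useas` values mentioned above, which is an assumption of this example rather than a documented limit of the framework.

```shell
#!/bin/sh
# Print the labelled pod manifest for a Dataset.
# $1 = Dataset name, $2 = "mount" (default) or "configmap".
# The body runs in a subshell so the helper variables stay local.
render_labelled_pod() (
  dataset=${1:-example-dataset}
  useas=${2:-mount}
  case "$useas" in
    mount|configmap) ;;
    *) echo "useas must be 'mount' or 'configmap'" >&2; exit 1 ;;
  esac
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    dataset.0.id: "$dataset"
    dataset.0.useas: "$useas"
spec:
  containers:
    - name: nginx
      image: nginx
EOF
)

# Usage (needs a reachable cluster, in a namespace labelled as described above):
#   render_labelled_pod example-dataset configmap | kubectl apply -f -
```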

Feel free to explore our examples

FAQ

Have a look at our wiki for Frequently Asked Questions.

Roadmap

Have a look at our wiki for the Roadmap.

Contact

Reach out to us via email:

- Yiannis Gkoufas, [email protected]

- Christian Pinto, [email protected]

- Srikumar Venugopal, [email protected]

- Panagiotis Koutsovasilis, [email protected]

Acknowledgements

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 825061.