AASHISHAG / Deepspeech German

Automatic Speech Recognition (ASR) - DeepSpeech German

This is the project for the paper German End-to-end Speech Recognition based on DeepSpeech published at KONVENS 2019.

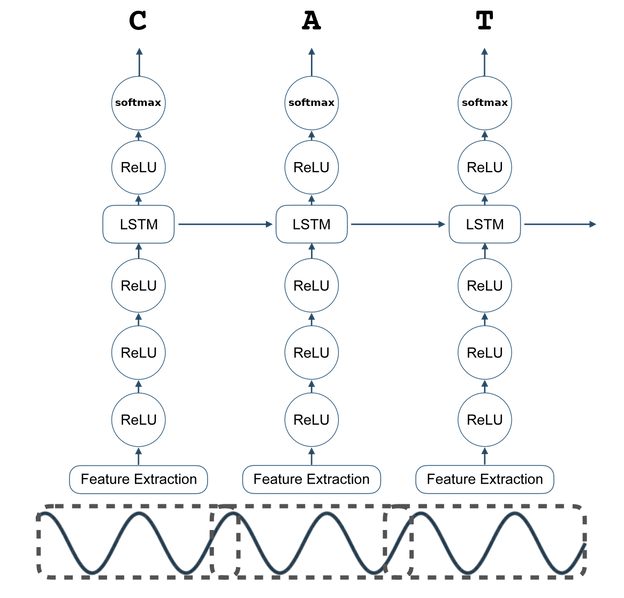

This project aims to develop a working speech-to-text module using Mozilla DeepSpeech that can be used in any audio processing pipeline. Mozilla DeepSpeech is a state-of-the-art open-source automatic speech recognition (ASR) toolkit. It uses a model trained with machine learning techniques based on Baidu's Deep Speech research paper, and it builds on Google's TensorFlow to make the implementation easier.

Important Links:

DeepSpeech-API: https://github.com/AASHISHAG/DeepSpeech-API

This Readme is written for DeepSpeech v0.9.3. Refer to Mozilla DeepSpeech for the latest updates.

Contents

- Requirements

- Speech Corpus

- Language Model

- Training

- Hyper-Parameter Optimization

- Results

- Trained Models

- Acknowledgments

- References

Requirements

The instructions focus on Linux. macOS and Windows might also work as long as a shell environment is available, but some instructions and scripts might then have to be adjusted.

DeepSpeech and KenLM were added as submodules. To fetch the submodules, execute:

git pull --recurse-submodules

Developer Information

To update the DeepSpeech version in use, check out the corresponding tag in the DeepSpeech submodule:

git checkout tags/v0.9.3

The same applies to KenLM.

Installing Python bindings

The DeepSpeech tools still require TensorFlow 1.15, which is only supported up to Python 3.7. pyenv will be used to set up a dedicated Python version.

git clone https://github.com/pyenv/pyenv.git $HOME/.pyenv

Follow the instructions on the pyenv page for Ubuntu Desktop.

Add the following to your ~/.bashrc:

echo 'export PYENV_ROOT="$HOME/.pyenv"' >> ~/.bashrc

echo 'export PATH="$PYENV_ROOT/bin:$PATH"' >> ~/.bashrc

echo -e 'if command -v pyenv 1>/dev/null 2>&1; then\n eval "$(pyenv init -)"\nfi' >> ~/.bashrc

Log out or run:

source ~/.bashrc

virtualenv will be used to provide a separate Python environment.

pyenv install 3.7.9

sudo pip3 install virtualenv

virtualenv --python=$HOME/.pyenv/versions/3.7.9/bin/python3.7 python-environments

source python-environments/bin/activate

pip3 install -r python_requirements.txt
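Because TensorFlow 1.15 is only supported up to Python 3.7, it can help to confirm which interpreter the activated environment actually runs. The following is a minimal sketch; the helper name `is_supported_python` is hypothetical and not part of this repository, and it only checks the upper bound mentioned above.

```python
import sys


def is_supported_python(version_info=sys.version_info):
    """Return True for a Python 3 interpreter that is no newer than 3.7.

    Hypothetical helper: the 3.7 upper bound reflects the TensorFlow 1.15
    constraint described in this section.
    """
    major, minor = version_info[0], version_info[1]
    return major == 3 and minor <= 7


if __name__ == "__main__":
    status = "ok" if is_supported_python() else "too new for TensorFlow 1.15"
    print("Python %d.%d: %s" % (sys.version_info[0], sys.version_info[1], status))
```

Run it inside the activated virtualenv; with the setup above it should report the pyenv-provided 3.7 interpreter.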

NOTE: While writing this, two issues were fixed in audiomate and a patched version from GitHub was used. This can be updated once upstream audiomate has released a new version.

Installing Linux dependencies

The necessary Linux dependencies are listed in linux_requirements.txt.

xargs -a linux_requirements.txt sudo apt-get install

Speech Corpus

- German Distant Speech Corpus (TUDA-De) ~127h

- Mozilla Common Voice ~140h

- Voxforge ~35h

- M-AILABS

- Spoken Wikipedia Corpora

Download the corpus

The script download_speech_corpus.py is used to download the speech corpora; it uses the URLs of the corpora listed above.

source python-environments/bin/activate

python pre-processing/download_speech_corpus.py --tuda --cv --swc --voxforge --mailabs

NOTE: The audiomate Python module used for the download process relies on some fixed URLs. These URLs can be outdated, depending on the last release of audiomate. download_speech_corpus.py patches these URLs, but if a new speech corpus is released, the URLs in the script must be updated again or passed as arguments named <speech_corpus>_url, e.g. --tuda_url <url>.

It is also possible to leave out some corpora and download just a subset. The default target directory is the current directory, i.e. the project root, but it can be set with --target_path. The paths used in this documentation must then be adjusted accordingly.

After a while, the tool displays a download progress indicator.

The downloads will be found in the folders tuda, mozilla, voxforge, swc and mailabs.

If a download fails, the incomplete file(s) must be removed manually.

Prepare the Audio Data

The output of this step is a CSV format with references to the audio and corresponding text split into train, dev and test data for the TensorFlow network.
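To make the CSV output more concrete, here is a minimal sketch that parses such a file. It assumes the three-column layout that DeepSpeech training expects (`wav_filename`, `wav_filesize`, `transcript`); the sample rows and file names are made up for illustration, and real files are written by the preparation script described below.

```python
import csv
import io

# Column names expected by DeepSpeech training CSV files.
FIELDS = ["wav_filename", "wav_filesize", "transcript"]


def read_samples(handle):
    """Parse a DeepSpeech-style CSV and return a list of row dictionaries."""
    reader = csv.DictReader(handle)
    missing = [name for name in FIELDS if name not in (reader.fieldnames or [])]
    if missing:
        raise ValueError("missing columns: %s" % ", ".join(missing))
    return list(reader)


# Made-up example content standing in for a generated CSV file.
EXAMPLE = io.StringIO(
    "wav_filename,wav_filesize,transcript\n"
    "clips/sample_0001.wav,179882,guten morgen\n"
    "clips/sample_0002.wav,233004,wie geht es dir\n"
)

samples = read_samples(EXAMPLE)
total_bytes = sum(int(row["wav_filesize"]) for row in samples)
print(len(samples), "samples,", total_bytes, "bytes of audio")
```

To inspect a real file, pass an opened file handle (with `newline=""` and `encoding="utf-8"`) instead of the in-memory example.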

Convert to UTF-8:

pre-processing/run_to_utf_8.sh

The pre-processing/prepare_data.py script imports the downloaded corpora. It expects each corpus option followed by the corpus directory, with the destination directory as the last argument. Look into the script source to find all possible corpus options.

It is possible to train on a subset (i.e. by specifying just one of the options) or on all of the speech corpora.

Execute the following commands in the root directory of this project after running:

source python-environments/bin/activate

NOTE: If the file or folder names of the downloaded speech corpora change, the paths used in this section must be updated, too.

Some Examples:

1. Tuda-De

python pre-processing/prepare_data.py --tuda tuda/german-speechdata-package-v3 german-speech-corpus/data_tuda

2. Mozilla

python pre-processing/prepare_data.py --cv mozilla/cv-corpus-6.1-2020-12-11/de german-speech-corpus/data_mozilla

3. Voxforge

python pre-processing/prepare_data.py --voxforge voxforge german-speech-corpus/data_voxforge

4. Voxforge + Tuda-De

python pre-processing/prepare_data.py --voxforge voxforge --tuda tuda/german-speechdata-package-v2 german-speech-corpus/data_tuda+voxforge

5. Voxforge + Tuda-De + Mozilla

python pre-processing/prepare_data.py --cv mozilla/cv-corpus-6.1-2020-12-11/de/clips --voxforge voxforge --tuda tuda/german-speechdata-package-v3 german-speech-corpus/data_tuda+voxforge+mozilla

Language Model

We used the KenLM toolkit, language model inference code by Kenneth Heafield, to train a 3-gram language model. Consult the repository for information regarding the compilation in case of errors.
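As an illustration of what a 3-gram model is built from, the following sketch counts word trigrams in a few sentences. It is a simplified illustration only: KenLM additionally applies smoothing (modified Kneser-Ney) and stores the model in a compact binary form, and the function name `count_ngrams` is hypothetical.

```python
from collections import Counter


def count_ngrams(sentences, n=3):
    """Count word n-grams over whitespace-tokenised sentences."""
    counts = Counter()
    for sentence in sentences:
        # Pad with sentence boundary markers, as n-gram toolkits typically do.
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for start in range(len(tokens) - n + 1):
            counts[tuple(tokens[start:start + n])] += 1
    return counts


trigrams = count_ngrams(["das ist ein test", "das ist ein haus"])
for ngram, count in trigrams.most_common(2):
    print(" ".join(ngram), count)
```

Trigrams that occur in both sentences, such as "das ist ein", receive a count of 2; a language model turns such counts into probabilities for the next word.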

- Installation

cd kenlm

mkdir -p build

cd build

cmake ..

make -j `nproc`

nproc prints the number of available processing units; make -j uses this value as the number of parallel jobs.

Text Corpus

We used an open-source German text corpus released by the University of Hamburg.

Download the data:

mkdir german-text-corpus

cd german-text-corpus

wget http://ltdata1.informatik.uni-hamburg.de/kaldi_tuda_de/German_sentences_8mil_filtered_maryfied.txt.gz

gzip -d German_sentences_8mil_filtered_maryfied.txt.gz

Pre-process the data

Execute the following commands in the root directory of this project.

source python-environments/bin/activate

python pre-processing/prepare_vocab.py german-text-corpus/German_sentences_8mil_filtered_maryfied.txt german-text-corpus/clean_vocab.txt

Build the Language Model

This step creates a trie data structure that describes the vocabulary used for the speech recognition. This file is called the "scorer" in DeepSpeech terminology. The script create_language_model.sh is used for this.

The script uses the compiled KenLM binaries and downloads a native DeepSpeech client. The native DeepSpeech client uses the "cpu" architecture. For a different architecture, pass the architecture as an additional parameter. See the --arch parameter in python DeepSpeech/util/taskcluster.py --help for details.

The mandatory top_k parameter in the script is set to 500000, which corresponds to > 98.43 % of all words in the downloaded text corpus.

The default_alpha and default_beta parameters are taken from the Scorer documentation and are also the defaults in DeepSpeech.py. DeepSpeech offers the lm_optimizer.py script to find better values (in case there are different ones).

./create_language_model.sh
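The coverage figure quoted for top_k can be checked on any text corpus with a few lines of Python. This is a minimal sketch that assumes "coverage" means the share of word occurrences accounted for by the top_k most frequent words; the function name and the toy corpus are hypothetical and not taken from create_language_model.sh.

```python
from collections import Counter


def top_k_coverage(lines, top_k):
    """Return the fraction of word occurrences covered by the top_k most frequent words."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    total = sum(counts.values())
    if total == 0:
        return 0.0
    covered = sum(count for _, count in counts.most_common(top_k))
    return covered / total


# Toy corpus; for a real check, iterate over the lines of clean_vocab.txt instead.
corpus = ["der hund und die katze", "der hund schläft", "die katze schläft nicht"]
print("coverage with top_k=3: %.2f" % top_k_coverage(corpus, 3))
```

Because the function accepts any iterable of lines, an open file handle can be passed directly, so the whole corpus does not have to be held in memory as a list.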

NOTE: A different scorer might be useful later for efficient speech recognition in a special language domain. In that case, use your own text corpus; the script source can serve as a template for this scorer and be executed separately.

Training

NOTE: The training can be accelerated by using CUDA. Follow the GPU Installation Instructions for TensorFlow. At the time of writing, the toolchain for Ubuntu 18.04 has to be used on Ubuntu 20.04 as well.

If CUDA is used, the GPU package of TensorFlow has to be installed:

source python-environments/bin/activate

pip uninstall tensorflow

pip install tensorflow-gpu==1.15.4

nohup ./train_model.sh <prepared_speech_corpus> &

Example:

nohup ./train_model.sh german-speech-corpus/data_tuda+voxforge+mozilla &

Parameters:

- The default scorer is german-text-corpus/kenlm.scorer.

- The default alphabet is data/alphabet.txt.

- The default DeepSpeech processing data is stored under deepspeech_processing.

- The model is stored under the directory models.

It is possible to modify these parameters. If a parameter should take the default value, pass "".

./train_model.sh <prepared_speech_corpus> <alphabet> <scorer> <processing_dir> <models_dir>

Example:

./train_model.sh german-speech-corpus/data_tuda+voxforge+mozilla "" "" my_processing_dir my_models
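The convention of passing "" to keep a default can be pictured with the following sketch. It mirrors the documented argument order and defaults, but it is a hypothetical Python illustration and not the actual logic of train_model.sh.

```python
# Defaults as listed in the parameter list above.
DEFAULTS = {
    "alphabet": "data/alphabet.txt",
    "scorer": "german-text-corpus/kenlm.scorer",
    "processing_dir": "deepspeech_processing",
    "models_dir": "models",
}
# Positional order after the corpus argument, as in the usage line above.
ORDER = ["alphabet", "scorer", "processing_dir", "models_dir"]


def resolve_arguments(corpus, *optional):
    """Map positional arguments to names; "" or a missing value selects the default."""
    if len(optional) > len(ORDER):
        raise ValueError("too many arguments")
    resolved = {"corpus": corpus}
    for index, name in enumerate(ORDER):
        value = optional[index] if index < len(optional) else ""
        resolved[name] = value or DEFAULTS[name]
    return resolved


settings = resolve_arguments(
    "german-speech-corpus/data_tuda+voxforge+mozilla", "", "", "my_processing_dir", "my_models"
)
for name, value in settings.items():
    print(name, "=", value)
```

In this sketch the alphabet and scorer fall back to their defaults, while the processing and model directories take the values given on the command line.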

NOTE: The augmentation parameters are not used and might be interesting for improving the results.

NOTE: Increase the export_model_version option in train_model.sh when a new model is trained.

NOTE: If the error Not enough time for target transition sequence (required: 171, available: 0) is thrown, the only currently known fix is to edit the file DeepSpeech/training/deepspeech_training/train.py and add , ignore_longer_outputs_than_inputs=True to the call to tfv1.nn.ctc_loss.
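The error in the note above comes from the CTC loss, which needs at least one input time step per output character, plus one extra blank step between identical neighbouring characters. The sketch below estimates whether a sample can satisfy this. The helper names are hypothetical, and the 20 ms feature step is an assumption that should be replaced by the value used in your training configuration; the real frame count also depends on the feature window length.

```python
def min_ctc_steps(transcript):
    """Minimum number of input time steps CTC needs to emit the transcript.

    One step per character, plus one blank between each pair of identical
    neighbouring characters.
    """
    repeats = sum(1 for a, b in zip(transcript, transcript[1:]) if a == b)
    return len(transcript) + repeats


def is_trainable(transcript, audio_seconds, step_seconds=0.02):
    """Roughly check whether the audio yields enough feature frames.

    step_seconds=0.02 is an assumed feature step, not a value taken from
    this repository.
    """
    available = int(audio_seconds / step_seconds)
    return available >= min_ctc_steps(transcript)


print(min_ctc_steps("hallo"))
print(is_trainable("hallo", audio_seconds=1.0))
print(is_trainable("hallo", audio_seconds=0.0))
```

A message reporting available: 0 is consistent with an audio file that produces no feature frames at all, so filtering out such samples from the CSV files may be an alternative to ignoring them in the loss.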

TFLite Model Generation

The TensorFlow Lite model, suitable for resource-restricted devices (embedded, Raspberry Pi 4, ...), can be generated with:

./export_tflite.sh

Parameters:

- The default alphabet is data/alphabet.txt.

- The default DeepSpeech processing data is taken from deepspeech_processing.

- The model is stored under the directory models.

It is possible to modify these parameters. If a parameter should take the default value, pass "".

./export_tflite.sh <alphabet> <processing_dir> <models_dir>

Example:

./export_tflite.sh "" my_processing_dir my_models

Fine-Tuning

Fine-tuning can be used when the alphabet stays the same. Since the alphabet does not change when more German text corpora are added, fine-tuning applies in that case.

Consult the Fine-Tuning DeepSpeech Documentation.

nohup ./fine_tuning_model.sh <prepared_speech_corpus> &

Example:

nohup ./fine_tuning_model.sh german-speech-corpus/my_own_corpus &

Parameters:

./fine_tuning_model.sh <prepared_speech_corpus> <alphabet> <scorer> <processing_dir> <models_dir> <cuda_options>

- The default alphabet is data/alphabet.txt.

- The default DeepSpeech processing data is saved into deepspeech_processing.

- The scorer is taken from german-text-corpus/kenlm.scorer.

- The model is saved under the directory models.

- CUDA options:

  - --load_cudnn is not passed by default. If you don’t have a CUDA compatible GPU and the training was executed on a GPU (which is the default), pass --load_cudnn to allow training on the CPU. NOTE: This also requires the Python tensorflow-gpu dependency, although no GPU is available.

  - --train_cudnn is not passed by default. It must be passed if the existing model was trained on a CUDA GPU and training should be continued on a CUDA GPU.

Example:

./fine_tuning_model.sh german-speech-corpus/my_own_corpus "" "" "" "" --train_cudnn

Transfer Learning

Transfer learning must be used if the alphabet changes, e.g. when starting from English and adding the German letters. Transfer learning takes an existing neural network, drops one or more of its layers and recalculates the weights with the new training data.

Consult the Transfer Learning DeepSpeech Documentation.
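The idea of dropping layers can be sketched as splitting the network into layers whose weights are reused and layers that are reinitialised. The layer names below are illustrative (they follow the usual description of the DeepSpeech network: three dense layers, an LSTM, another dense layer and the output layer), and the function is a hypothetical illustration rather than DeepSpeech's own checkpoint loading code.

```python
# Illustrative layer names, ordered from input to output.
LAYERS = ["layer_1", "layer_2", "layer_3", "lstm", "layer_5", "layer_6"]


def split_layers(drop_layers, layers=LAYERS):
    """Return (reused, reinitialised) layer names when the last drop_layers layers are dropped."""
    if not 0 < drop_layers < len(layers):
        raise ValueError("drop_layers must be between 1 and %d" % (len(layers) - 1))
    return layers[:-drop_layers], layers[-drop_layers:]


reused, reinitialised = split_layers(1)
print("reused:", reused)
print("reinitialised:", reinitialised)
```

With one dropped layer only the output layer is reinitialised, which is the layer whose size depends on the alphabet; dropping more layers discards more of what the base model has learned.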

Example English to German

- The English DeepSpeech model is used as base model. The checkpoints from the DeepSpeech English model must be used. E.g. deepspeech-0.9.3-checkpoint.tar.gz must be downloaded and extracted to /deepspeech-0.9.3-checkpoint.

- For the German language the language model and scorer must be built (this was done already above).

- Call transfer_model.sh:

nohup ./transfer_model.sh german-speech-corpus/data_tuda+voxforge+mozilla+swc+mailabs deepspeech-0.9.3-checkpoint &

NOTE: The checkpoints should be from the same DeepSpeech version to perform transfer learning.

Parameters:

- The default alphabet is data/alphabet.txt.

- The default DeepSpeech processing data is saved into deepspeech_transfer_processing.

- The scorer is taken from german-text-corpus/kenlm.scorer.

- The model is saved under the directory transfer_models.

- The drop layer parameter is 1.

- CUDA options:

  - --load_cudnn is not passed by default. If you don’t have a CUDA compatible GPU and the training was executed on a GPU (which is the default), pass --load_cudnn to allow training on the CPU. NOTE: This also requires the Python tensorflow-gpu dependency, although no GPU is available.

  - --train_cudnn is not passed by default. It must be passed if the existing model was trained on a CUDA GPU and training should be continued on a CUDA GPU.

It is possible to modify these parameters. If a parameter should take the default value, pass "".

nohup ./transfer_model.sh <prepared_speech_corpus> <load_checkpoint_directory> <alphabet> <scorer> <processing_dir> <models_dir> <drop_layers> <cuda_options> &

Example:

nohup ./transfer_model.sh german-speech-corpus/data_tuda+voxforge+mozilla+swc+mailabs deepspeech-0.9.3-checkpoint "" "" "" my_models 2 --train_cudnn &

Hyper-Parameter Optimization

The learning rate can be tested with the script hyperparameter_optimization.sh.

NOTE: The dropout rate parameter is not tested in this script.

Execute it with:

nohup ./hyperparameter_optimization.sh <prepared_speech_corpus> &

Results

Some WER (word error rate) results from our findings.

- Mozilla 79.7%

- Voxforge 72.1%

- Tuda-De 26.8%

- Tuda-De+Mozilla 57.3%

- Tuda-De+Voxforge 15.1%

- Tuda-De+Voxforge+Mozilla 21.5%

NOTE: Refer to our paper for more information.
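For readers unfamiliar with the metric, WER is the word-level edit distance (substitutions, deletions and insertions) between the recognised text and the reference, divided by the number of reference words. The following is a minimal sketch of that standard definition; it is not the evaluation code used to produce the numbers above.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # previous[j] is the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1] / len(ref)


print(word_error_rate("das ist ein test", "das ist test"))
```

Here one of four reference words is missing from the hypothesis. Note that WER can exceed 100 % when the hypothesis contains many inserted words.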

Trained Models

The DeepSpeech model can be directly re-trained on new datasets. The required dependencies are available at:

1. v0.5.0

This model is trained on DeepSpeech v0.5.0 with Mozilla_v3+Voxforge+Tuda-De (please refer to the paper for more details).

https://drive.google.com/drive/folders/1nG6xii2FP6PPqmcp4KtNVvUADXxEeakk?usp=sharing

https://drive.google.com/file/d/1VN1xPH0JQNKK6DiSVgyQ4STFyDY_rle3/view

2. v0.6.0

This model is trained on DeepSpeech v0.6.0 with Mozilla_v4+Voxforge+Tuda-De+MAILABS (454+57+184+233h=928h).

https://drive.google.com/drive/folders/1BKblYaSLnwwkvVOQTQ5roOeN0SuQm8qr?usp=sharing

3. v0.7.4

This model is trained on DeepSpeech v0.7.4 using the pre-trained English model released by Mozilla: English+Mozilla_v5+MAILABS+Tuda-De+Voxforge (1700+750+233+184+57h=2924h).

https://drive.google.com/drive/folders/1PFSIdmi4Ge8EB75cYh2nfYOXlCIgiMEL?usp=sharing

4. v0.9.0

This model is trained on DeepSpeech v0.9.0 using the pre-trained English model released by Mozilla: English+Mozilla_v5+SWC+MAILABS+Tuda-De+Voxforge (1700+750+248+233+184+57h=3172h).

Thanks to Karsten Ohme for providing the TFLite model.

Link: https://drive.google.com/drive/folders/1L7ILB-TMmzL8IDYi_GW8YixAoYWjDMn1?usp=sharing

Why being SHY to STAR the repository, if you use the resources? :D

Acknowledgments

References

If you use our findings/scripts in your academic work, please cite:

@inproceedings{agarwal-zesch-2019-german,

author = "Aashish Agarwal and Torsten Zesch",

title = "German End-to-end Speech Recognition based on DeepSpeech",

booktitle = "Preliminary proceedings of the 15th Conference on Natural Language Processing (KONVENS 2019): Long Papers",

year = "2019",

address = "Erlangen, Germany",

publisher = "German Society for Computational Linguistics \& Language Technology",

pages = "111--119"

}