Kaixhin / Easy21

Licence: MIT

Reinforcement Learning Assignment: Easy21


Programming language: Lua


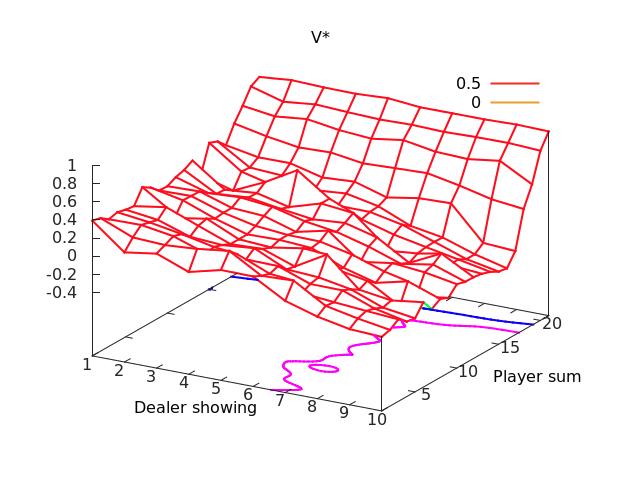

Easy21

Assignment from David Silver's Reinforcement Learning course. Coded for clarity, not efficiency.
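For readers without the assignment handout, the game can be sketched in plain Lua. This is a minimal sketch that assumes the rules given in the assignment: an infinite deck, card values uniform in 1 to 10, red cards (probability 1/3) subtracted and black cards added, both sides opening with one black card, a hand going bust outside 1 to 21, and the dealer sticking on 17 or more. The `Easy21` table and its functions are hypothetical names, not code from this repository, and no Torch is needed.

```lua
-- Hypothetical Easy21 environment, written from the assignment rules,
-- not from this repository. Pure Lua; no Torch needed.
local Easy21 = {}

-- Draw a card: value uniform in 1..10; black (added) with probability 2/3,
-- red (subtracted) with probability 1/3. forceBlack is used for opening cards.
function Easy21.draw(forceBlack)
  local value = math.random(1, 10)
  if forceBlack or math.random() >= 1 / 3 then
    return value
  end
  return -value
end

-- A hand goes bust when its sum leaves the range 1..21.
function Easy21.isBust(sum)
  return sum < 1 or sum > 21
end

-- Start an episode: player and dealer each draw one black card.
-- The state holds the dealer's first card and the player's sum.
function Easy21.init()
  return { dealer = Easy21.draw(true), player = Easy21.draw(true) }
end

-- Apply "hit" or "stick"; returns nextState, reward, terminal.
-- Rewards: +1 win, 0 draw, -1 loss; non-terminal steps give 0.
function Easy21.step(state, action)
  local nextState = { dealer = state.dealer, player = state.player }
  if action == "hit" then
    nextState.player = nextState.player + Easy21.draw()
    if Easy21.isBust(nextState.player) then
      return nextState, -1, true
    end
    return nextState, 0, false
  end
  -- Player sticks: the dealer hits until its sum is 17 or more, or it busts.
  local dealerSum = state.dealer
  while not Easy21.isBust(dealerSum) and dealerSum < 17 do
    dealerSum = dealerSum + Easy21.draw()
  end
  if Easy21.isBust(dealerSum) or nextState.player > dealerSum then
    return nextState, 1, true
  elseif nextState.player < dealerSum then
    return nextState, -1, true
  end
  return nextState, 0, true
end
```

Because the state is just the dealer's first card and the player's sum, every state fits in a 10 by 21 table, which is what makes tabular methods practical for this game.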

Requires Torch7 with the Moses package.

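Run `monte-carlo.lua` first to generate Q* and the plot of V, the optimal value function V*(s) = max_a Q*(s, a). Then run `sarsa-lambda.lua` and `lin-fun-approx.lua` to generate their plots. The order matters because, in the assignment, the Monte Carlo estimate of Q* is the reference against which the mean-squared error of the other two methods is measured.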
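The Monte Carlo step can be illustrated with a short sketch. It is a minimal every-visit Monte Carlo control loop that assumes the schedule suggested in the assignment (epsilon-greedy with epsilon = N0 / (N0 + N(s)), N0 = 100, and step size 1 / N(s, a)); it is not the repository's `monte-carlo.lua`, and every name in it is hypothetical. A compact copy of the game rules is included so the block runs on its own. The returned table `V` holds max_a Q(s, a), the quantity that gets plotted.

```lua
-- Hypothetical every-visit Monte Carlo control for Easy21 (not the
-- repository's monte-carlo.lua). Pure Lua; no Torch needed.
local ACTIONS = { "hit", "stick" }
local N0 = 100 -- exploration constant suggested by the assignment

local function draw(forceBlack)
  local v = math.random(1, 10)
  if forceBlack or math.random() >= 1 / 3 then return v end
  return -v
end

local function bust(x) return x < 1 or x > 21 end

-- One environment step; returns the new player sum, reward, terminal flag.
local function step(dealer, player, action)
  if action == "hit" then
    player = player + draw()
    if bust(player) then return player, -1, true end
    return player, 0, false
  end
  local d = dealer
  while not bust(d) and d < 17 do d = d + draw() end
  if bust(d) or player > d then return player, 1, true end
  if player < d then return player, -1, true end
  return player, 0, true
end

-- Tables indexed [dealer 1..10][player 1..21][action 1..2], all zero.
local function zeros()
  local t = {}
  for d = 1, 10 do
    t[d] = {}
    for p = 1, 21 do t[d][p] = { 0, 0 } end
  end
  return t
end

local function mcControl(episodes)
  local Q, N = zeros(), zeros()
  for _ = 1, episodes do
    local dealer, player = draw(true), draw(true)
    local visited, reward, done = {}, 0, false
    while not done do
      local q, n = Q[dealer][player], N[dealer][player]
      -- Epsilon-greedy with epsilon = N0 / (N0 + N(s)).
      local epsilon = N0 / (N0 + n[1] + n[2])
      local a
      if math.random() < epsilon then
        a = math.random(1, 2)
      else
        a = (q[1] >= q[2]) and 1 or 2
      end
      visited[#visited + 1] = { dealer, player, a }
      player, reward, done = step(dealer, player, ACTIONS[a])
    end
    -- Undiscounted with a single terminal reward, so every visited pair's
    -- return is that reward. Step size 1 / N(s, a) gives a running mean.
    for _, v in ipairs(visited) do
      local d, p, a = v[1], v[2], v[3]
      N[d][p][a] = N[d][p][a] + 1
      Q[d][p][a] = Q[d][p][a] + (reward - Q[d][p][a]) / N[d][p][a]
    end
  end
  -- V(s) = max_a Q(s, a), the value function that gets plotted.
  local V = {}
  for d = 1, 10 do
    V[d] = {}
    for p = 1, 21 do V[d][p] = math.max(Q[d][p][1], Q[d][p][2]) end
  end
  return Q, V
end
```

Calling `mcControl` with a large episode count (the assignment suggests on the order of a million) yields the Q table that later methods would be compared against.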

Also includes `policy-gradient.lua`, an additional method that does not use value functions and instead learns a policy directly with a simple neural network.
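To show what learning without value functions looks like, here is a minimal REINFORCE sketch. As a stated simplification it swaps the neural network for a tabular softmax policy (equivalent to a single linear layer over one-hot state features), so it is not the repository's `policy-gradient.lua`; all names are hypothetical, and a compact copy of the game rules keeps the block self-contained. Each step's preferences move by alpha * G * (1{a = b} - pi(b | s)), the gradient of the log-softmax.

```lua
-- Hypothetical REINFORCE sketch for Easy21 with a tabular softmax policy
-- (one linear layer over one-hot state features). It stands in for, and
-- is not, the neural network in policy-gradient.lua.
local ACTIONS = { "hit", "stick" }

local function draw(forceBlack)
  local v = math.random(1, 10)
  if forceBlack or math.random() >= 1 / 3 then return v end
  return -v
end

local function bust(x) return x < 1 or x > 21 end

-- One environment step; returns the new player sum, reward, terminal flag.
local function step(dealer, player, action)
  if action == "hit" then
    player = player + draw()
    if bust(player) then return player, -1, true end
    return player, 0, false
  end
  local d = dealer
  while not bust(d) and d < 17 do d = d + draw() end
  if bust(d) or player > d then return player, 1, true end
  if player < d then return player, -1, true end
  return player, 0, true
end

-- Action preferences theta[dealer][player][action], initialised to zero.
local function zeros()
  local t = {}
  for d = 1, 10 do
    t[d] = {}
    for p = 1, 21 do t[d][p] = { 0, 0 } end
  end
  return t
end

-- Softmax over the two action preferences (shifted for numerical stability).
local function policy(theta, d, p)
  local h1, h2 = theta[d][p][1], theta[d][p][2]
  local m = math.max(h1, h2)
  local e1, e2 = math.exp(h1 - m), math.exp(h2 - m)
  return { e1 / (e1 + e2), e2 / (e1 + e2) }
end

local function reinforce(episodes, alpha)
  local theta = zeros()
  for _ = 1, episodes do
    local dealer, player = draw(true), draw(true)
    local trajectory, reward, done = {}, 0, false
    while not done do
      local pi = policy(theta, dealer, player)
      local a = (math.random() < pi[1]) and 1 or 2
      -- Store the action probabilities used, so the update uses the
      -- parameters the episode was actually generated with.
      trajectory[#trajectory + 1] = { dealer, player, a, pi }
      player, reward, done = step(dealer, player, ACTIONS[a])
    end
    -- Undiscounted with a single terminal reward, so the return G equals
    -- that reward at every step. Gradient of log softmax: 1{a == b} - pi(b).
    for _, t in ipairs(trajectory) do
      local d, p, a, pi = t[1], t[2], t[3], t[4]
      for b = 1, 2 do
        local grad = ((b == a) and 1 or 0) - pi[b]
        theta[d][p][b] = theta[d][p][b] + alpha * reward * grad
      end
    end
  end
  return theta
end
```

After training, `policy(theta, dealer, player)` gives the learned probabilities of hitting and sticking in any state; a baseline (for example a running mean of returns) is a common addition to reduce the variance of these updates.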
