biezhi / Elves

License: MIT

🎊 Design and implementation of a lightweight crawler framework.

Stars: ✭ 315

Programming Languages

Java


Projects that are alternatives to or similar to Elves

Happy Spiders

🔧 🔩 🔨 A curated collection of crawler-related tools, simulated-login techniques, proxy IPs, Scrapy template code, and more.

Stars: ✭ 261 (-17.14%)

Mutual labels: spider, scrapy

scrapy-distributed

A series of distributed components for Scrapy, including RabbitMQ-based, Kafka-based, and RedisBloom-based components.

Stars: ✭ 38 (-87.94%)

Mutual labels: spider, scrapy

ip proxy pool

Dynamically generates Scrapy spiders to crawl and check free proxy IPs found on the internet.

Stars: ✭ 39 (-87.62%)

Mutual labels: spider, scrapy

python-spider

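Small Python crawler projects [continuously updated] [Biquge novel downloader, Tweet data scraping, weather lookup, NetEase Cloud Music reverse engineering, Tiantian Fund lookup, Weibo data scraping (cookie generation), Youdao Translate reverse engineering, login-free Qichacha crawler, Dianping SVG encryption cracking, Bilibili user crawler, login-free Lagou crawler, Ziroom rental font encryption, Zhihu Q&A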

Stars: ✭ 45 (-85.71%)

Mutual labels: spider, scrapy

scrapy facebooker

A collection of Scrapy spiders that scrape posts, images, and more from public Facebook Pages.

Stars: ✭ 22 (-93.02%)

Mutual labels: spider, scrapy

elves

🎊 Design and implementation of a lightweight crawler framework.

Stars: ✭ 322 (+2.22%)

Mutual labels: spider, scrapy

PttImageSpider

PTT image downloader (downloads every image on a board, using each post's title as the folder name) (built with Scrapy)

Stars: ✭ 16 (-94.92%)

Mutual labels: spider, scrapy

OpenScraper

An open-source web app for scraping: towards a public service for web scraping

Stars: ✭ 80 (-74.6%)

Mutual labels: spider, scrapy

photo-spider-scrapy

10 photo website spiders: Scrapy spider code for 10 international stock-photo sites

Stars: ✭ 17 (-94.6%)

Mutual labels: spider, scrapy

devsearch

A web search engine built with Python which uses TF-IDF and PageRank to sort search results.

Stars: ✭ 52 (-83.49%)

Mutual labels: spider, scrapy

Elves

Design and implementation of a lightweight crawler framework, with an accompanying blog-post analysis.

Features

- Event-driven

- Easy to customize

- Multithreaded execution

- CSS selector and XPath support
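The README does not show how the event-driven design is wired internally. As a rough illustration of the general pattern only, the sketch below lets components register listeners for lifecycle events and has the engine fire them in registration order. EventBusSketch and its Event names are hypothetical and are not Elves' API.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.EnumMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

/**
 * Minimal event-bus sketch (hypothetical, not Elves' API): listeners register
 * for lifecycle events and the engine fires them with a payload such as a spider name.
 */
public class EventBusSketch {

    /** Hypothetical lifecycle events a crawler engine might emit. */
    public enum Event { ENGINE_STARTED, SPIDER_STARTED }

    private final Map<Event, List<Consumer<String>>> listeners = new EnumMap<>(Event.class);

    /** Registers a listener; several listeners per event are allowed. */
    public void register(Event event, Consumer<String> listener) {
        listeners.computeIfAbsent(event, e -> new ArrayList<>()).add(listener);
    }

    /** Invokes every listener registered for the event, in registration order. */
    public void fire(Event event, String payload) {
        List<Consumer<String>> registered =
                listeners.getOrDefault(event, Collections.emptyList());
        for (Consumer<String> listener : registered) {
            listener.accept(payload);
        }
    }
}
```

Keying listeners by an enum keeps dispatch to a single map lookup; a lifecycle hook such as the onStart override in the quick-start example plays a comparable role from the spider's side.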

Maven coordinates

<dependency>
    <groupId>io.github.biezhi</groupId>
    <artifactId>elves</artifactId>
    <version>0.0.2</version>
</dependency>

If you want to run this project's source code locally, make sure you are on Java 8 and have the Lombok plugin installed.

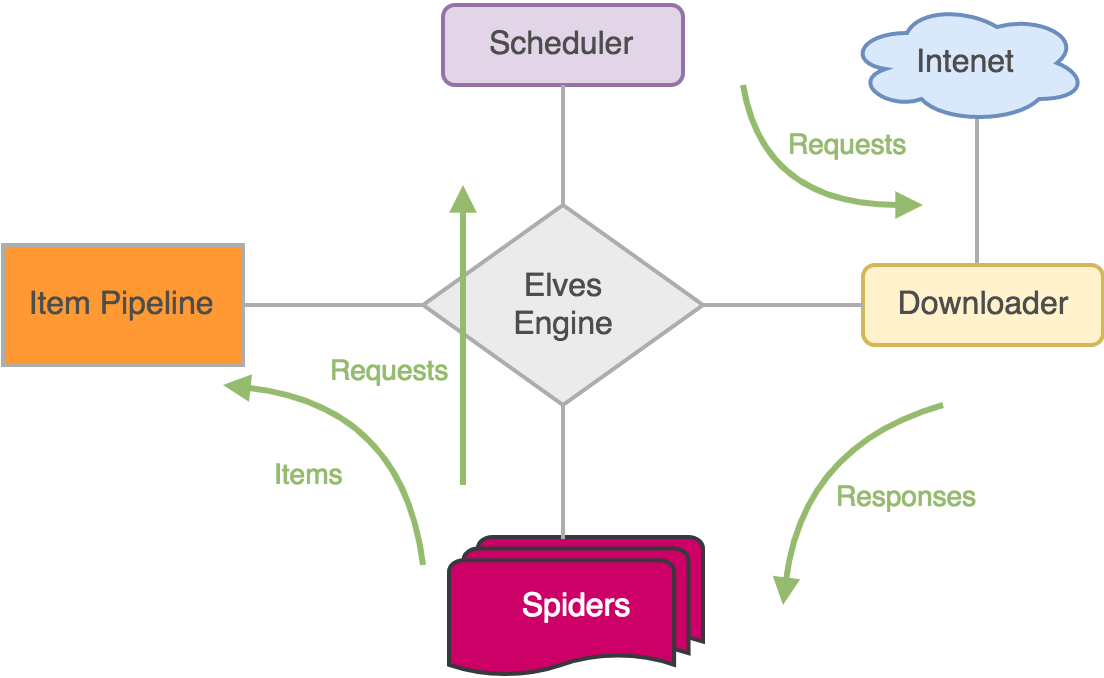

Architecture diagram

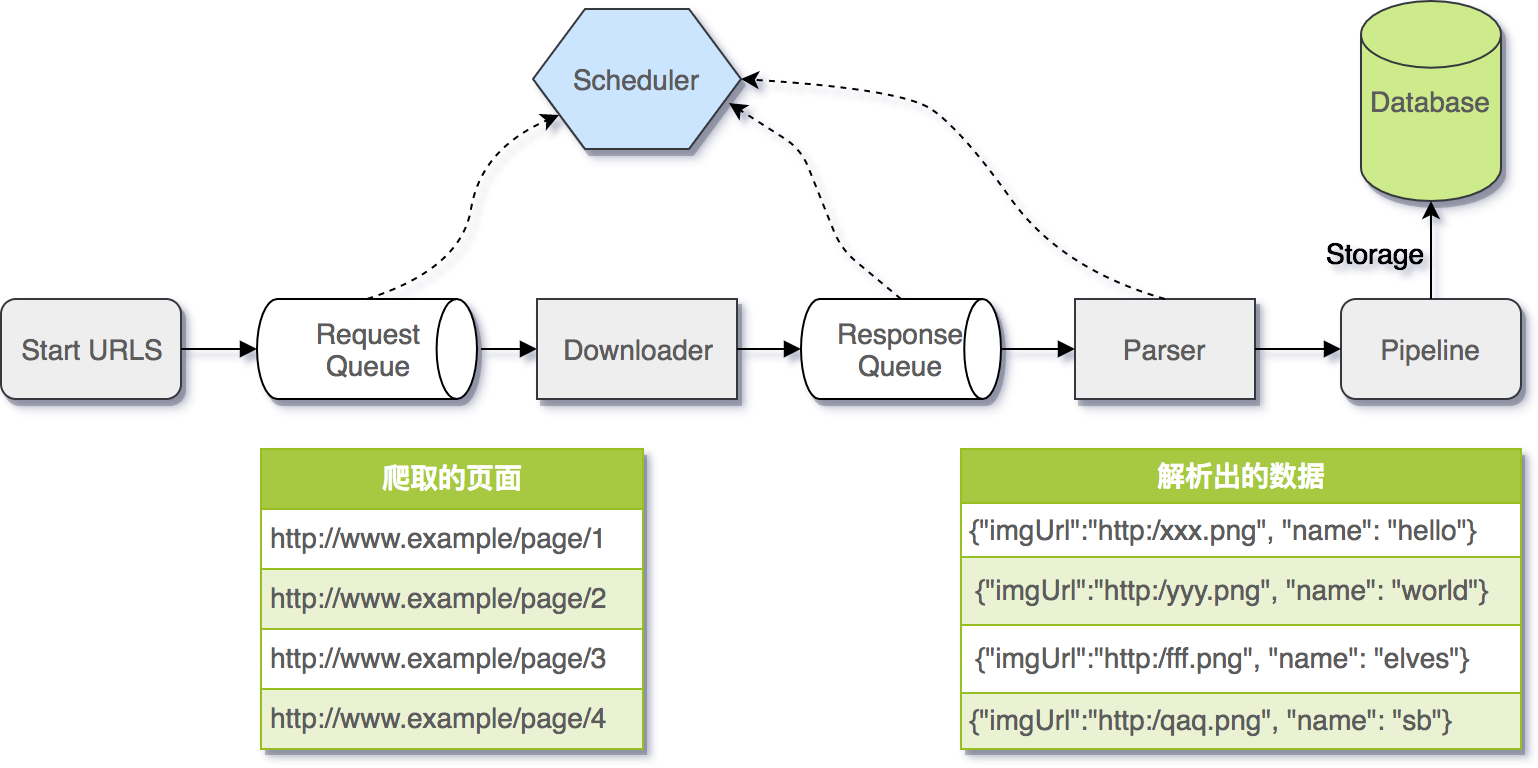

Call flow diagram
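The diagrams are images, so their exact contents are not reproduced here. As a hedged, simplified reading of a typical crawler flow (seed URLs enter a scheduler queue, a downloader fetches each page, the spider's parse step yields items and follow-up URLs, and pipelines consume the items), here is a single-threaded Java sketch. CrawlLoopSketch, ParseResult and crawl are hypothetical names; the multithreaded execution mentioned in the features list is left out for clarity.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;
import java.util.function.Function;

/**
 * Single-threaded sketch of a scheduler -> downloader -> parse -> pipeline loop.
 * All names are hypothetical stand-ins, not Elves' API.
 */
public class CrawlLoopSketch {

    /** What a parse step returns: extracted items plus follow-up URLs. */
    public static final class ParseResult {
        final List<String> items;
        final List<String> nextUrls;

        public ParseResult(List<String> items, List<String> nextUrls) {
            this.items = items;
            this.nextUrls = nextUrls;
        }
    }

    /**
     * Runs until the queue is empty or maxPages pages have been fetched.
     *
     * @param startUrls seed URLs placed in the scheduler queue
     * @param download  fetches a page body for a URL (stubbed in tests)
     * @param parse     turns a page body into items and new URLs
     * @param pipelines consumers that receive every extracted item
     * @return number of pages fetched
     */
    public static int crawl(List<String> startUrls,
                            Function<String, String> download,
                            Function<String, ParseResult> parse,
                            List<Consumer<String>> pipelines,
                            int maxPages) {
        Deque<String> queue = new ArrayDeque<>(startUrls); // scheduler: FIFO of pending URLs
        Set<String> seen = new HashSet<>(startUrls);        // URLs already enqueued once
        int fetched = 0;
        while (!queue.isEmpty() && fetched < maxPages) {
            String url = queue.poll();
            String body = download.apply(url);              // downloader step
            fetched++;
            ParseResult result = parse.apply(body);         // spider's parse step
            for (String item : result.items) {               // every pipeline sees every item
                for (Consumer<String> pipeline : pipelines) {
                    pipeline.accept(item);
                }
            }
            for (String next : result.nextUrls) {            // new requests go back to the scheduler
                if (seen.add(next)) {
                    queue.add(next);
                }
            }
        }
        return fetched;
    }
}
```

The seen set stops pages that link to each other from being fetched twice, and maxPages bounds the crawl when a site paginates indefinitely.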

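Quick start

Building a crawler takes the following steps:

- Write a spider class that extends Spider

- Set the list of URLs to crawl

- Implement Spider's parse method

- Add a Pipeline to process the data extracted by parse

Here's an example: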

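import java.util.List;
import java.util.stream.Collectors;

import lombok.extern.slf4j.Slf4j;

// The Elves classes (Elves, Spider, Config, Pipeline, Request, Response, Result) and the
// Element/Elements selector types must also be imported; their packages are not shown here.

@Slf4j // Lombok generates the `log` logger used in onStart
public class DoubanSpider extends Spider {

    public DoubanSpider(String name) {
        super(name);
        // Seed URLs: one Douban movie tag page per genre
        this.startUrls(
                "https://movie.douban.com/tag/爱情",
                "https://movie.douban.com/tag/喜剧",
                "https://movie.douban.com/tag/动画",
                "https://movie.douban.com/tag/动作",
                "https://movie.douban.com/tag/史诗",
                "https://movie.douban.com/tag/犯罪");
    }

    @Override
    public void onStart(Config config) {
        // Register a pipeline that receives each parsed item (here, the list of titles)
        this.addPipeline((Pipeline<List<String>>) (item, request) -> log.info("Saving to file: {}", item));
    }

    @Override
    public Result parse(Response response) {
        Result<List<String>> result = new Result<>();

        // Extract the movie titles on the current page
        Elements elements = response.body().css("#content table .pl2 a");
        List<String> titles = elements.stream().map(Element::text).collect(Collectors.toList());
        result.setItem(titles);

        // Get the next page URL and queue it, to be parsed by this same method
        Elements nextEl = response.body().css("#content > div > div.article > div.paginator > span.next > a");
        if (null != nextEl && nextEl.size() > 0) {
            String nextPageUrl = nextEl.get(0).attr("href");
            Request nextReq = this.makeRequest(nextPageUrl, this::parse);
            result.addRequest(nextReq);
        }

        return result;
    }
}

// Entry point, placed in DoubanSpider or in a separate launcher class
public static void main(String[] args) {
    DoubanSpider doubanSpider = new DoubanSpider("豆瓣电影");
    Elves.me(doubanSpider, Config.me()).start();
}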
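In the example, the href read from the paginator is passed straight to makeRequest. If that href were relative (whether Douban's is, and whether makeRequest already resolves it, is not stated here), it would need resolving against the current page's URL first. A minimal sketch using java.net.URI; NextPageUrl.resolve is a hypothetical helper, not part of Elves.

```java
import java.net.URI;

/** Hypothetical helper: resolves a possibly relative href against the page it came from. */
public class NextPageUrl {

    /**
     * Returns href unchanged if it is already absolute; otherwise resolves it against pageUrl.
     * Throws IllegalArgumentException if either string is not a syntactically valid URI.
     */
    public static String resolve(String pageUrl, String href) {
        return URI.create(pageUrl).resolve(href).toString();
    }
}
```

Inside parse, the call would become this.makeRequest(NextPageUrl.resolve(currentUrl, nextPageUrl), this::parse), where obtaining currentUrl from the Response is left open because the README does not show that accessor. Note that URI.resolve follows RFC 2396, so a query-only href such as ?start=20 may resolve differently from how a browser would, and hrefs containing spaces make URI.create throw.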

Crawler examples

License
