HuiDingUMD / Exprgan

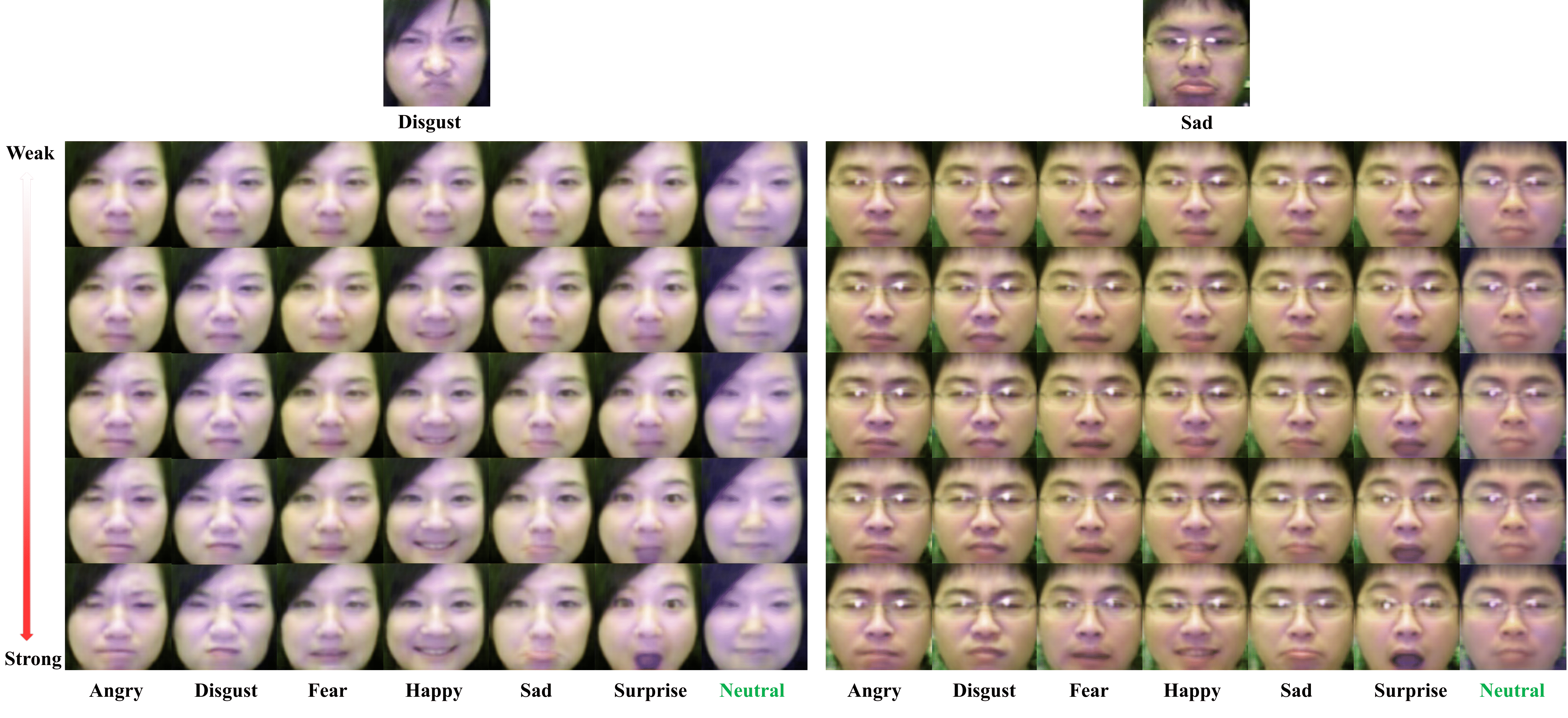

Facial Expression Editing with Controllable Expression Intensity

Stars: ✭ 98

Programming Languages

python

Projects that are alternatives of or similar to Exprgan

Anycost Gan

[CVPR 2021] Anycost GANs for Interactive Image Synthesis and Editing

Stars: ✭ 367 (+274.49%)

Mutual labels: gan, image-generation, image-manipulation

Lggan

[CVPR 2020] Local Class-Specific and Global Image-Level Generative Adversarial Networks for Semantic-Guided Scene Generation

Stars: ✭ 97 (-1.02%)

Mutual labels: gan, image-generation, image-manipulation

Cyclegan

Software that can generate photos from paintings, turn horses into zebras, perform style transfer, and more.

Stars: ✭ 10,933 (+11056.12%)

Mutual labels: gan, image-generation, image-manipulation

Focal Frequency Loss

Focal Frequency Loss for Generative Models

Stars: ✭ 141 (+43.88%)

Mutual labels: gan, image-generation, image-manipulation

Pix2pix

Image-to-image translation with conditional adversarial nets

Stars: ✭ 8,765 (+8843.88%)

Mutual labels: gan, image-generation, image-manipulation

automatic-manga-colorization

Use keras.js and cyclegan-keras to colorize manga automatically. All computation in browser. Demo is online:

Stars: ✭ 20 (-79.59%)

Mutual labels: gan, image-manipulation, image-generation

Oneshottranslation

Pytorch implementation of "One-Shot Unsupervised Cross Domain Translation" NIPS 2018

Stars: ✭ 135 (+37.76%)

Mutual labels: gan, image-generation, image-manipulation

Tsit

[ECCV 2020 Spotlight] A Simple and Versatile Framework for Image-to-Image Translation

Stars: ✭ 141 (+43.88%)

Mutual labels: gan, image-generation, image-manipulation

Distancegan

Pytorch implementation of "One-Sided Unsupervised Domain Mapping" NIPS 2017

Stars: ✭ 180 (+83.67%)

Mutual labels: gan, image-generation, image-manipulation

Pytorch Cyclegan And Pix2pix

Image-to-Image Translation in PyTorch

Stars: ✭ 16,477 (+16713.27%)

Mutual labels: gan, image-generation, image-manipulation

Awesome-ICCV2021-Low-Level-Vision

A Collection of Papers and Codes for ICCV2021 Low Level Vision and Image Generation

Stars: ✭ 163 (+66.33%)

Mutual labels: gan, image-manipulation, image-generation

Few Shot Patch Based Training

The official implementation of our SIGGRAPH 2020 paper Interactive Video Stylization Using Few-Shot Patch-Based Training

Stars: ✭ 313 (+219.39%)

Mutual labels: gan, image-generation

Texturize

🤖🖌️ Generate photo-realistic textures based on source images. Remix, remake, mashup! Useful if you want to create variations on a theme or elaborate on an existing texture.

Stars: ✭ 366 (+273.47%)

Mutual labels: image-generation, image-manipulation

Selectiongan

[CVPR 2019 Oral] Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation

Stars: ✭ 366 (+273.47%)

Mutual labels: image-generation, image-manipulation

Deep Generative Prior

Code for deep generative prior (ECCV2020 oral)

Stars: ✭ 308 (+214.29%)

Mutual labels: gan, image-manipulation

Sean

SEAN: Image Synthesis with Semantic Region-Adaptive Normalization (CVPR 2020, Oral)

Stars: ✭ 387 (+294.9%)

Mutual labels: gan, image-generation

Igan

Interactive Image Generation via Generative Adversarial Networks

Stars: ✭ 3,845 (+3823.47%)

Mutual labels: gan, image-manipulation

Consingan

PyTorch implementation of "Improved Techniques for Training Single-Image GANs" (WACV-21)

Stars: ✭ 294 (+200%)

Mutual labels: gan, image-generation

Apdrawinggan

Code for APDrawingGAN: Generating Artistic Portrait Drawings from Face Photos with Hierarchical GANs (CVPR 2019 Oral)

Stars: ✭ 510 (+420.41%)

Mutual labels: gan, image-generation

Hidt

Official repository for the paper "High-Resolution Daytime Translation Without Domain Labels" (CVPR2020, Oral)

Stars: ✭ 513 (+423.47%)

Mutual labels: gan, image-generation

ExprGAN

This is our TensorFlow implementation of our AAAI 2018 oral paper, "ExprGAN: Facial Expression Editing with Controllable Expression Intensity": https://arxiv.org/pdf/1709.03842.pdf

Train

- Download the Oulu-CASIA dataset from http://www.cse.oulu.fi/CMV/Downloads/Oulu-CASIA and put the images under the data folder. The file split/oulu_anno.pickle contains the split of training and testing images.

- Download vgg-face.mat from the MatConvNet website (http://www.vlfeat.org/matconvnet/pretrained/) and put it under the joint-train/utils folder.

- To cope with the limited training data, training consists of three stages: a) go into the train-controller folder and run run_controller.sh to train the controller module first; b) go into the joint-train folder and run run_oulu.sh for the second and third stages. Please see our paper for more training details.
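Before starting a training run, it can help to confirm that the files named in the steps above are in place. The following is a minimal sketch, not part of the repository: the helper names are hypothetical, the paths are assumed to be relative to the repository root, and the internal layout of oulu_anno.pickle is not assumed, so the function only reports its top-level type. If the pickle stores NumPy arrays, NumPy must be installed for it to load.

```python
import pickle
from pathlib import Path

# Paths named in the Train steps. Their location relative to the
# repository root is an assumption made for this sketch.
REQUIRED = [
    "data",
    "split/oulu_anno.pickle",
    "joint-train/utils/vgg-face.mat",
]


def missing_prerequisites(repo_root):
    """Return the required paths that do not exist under repo_root."""
    root = Path(repo_root)
    return [p for p in REQUIRED if not (root / p).exists()]


def describe_split(pickle_path):
    """Summarise the top-level structure of the split file.

    No particular layout is assumed; only the container type is reported.
    """
    with open(pickle_path, "rb") as f:
        # encoding="latin1" lets Python 3 read pickles written by Python 2.
        obj = pickle.load(f, encoding="latin1")
    if isinstance(obj, dict):
        return {"type": "dict", "keys": sorted(str(k) for k in obj)}
    if isinstance(obj, (list, tuple)):
        return {"type": type(obj).__name__, "length": len(obj)}
    return {"type": type(obj).__name__}
```

Calling missing_prerequisites(".") from the repository root returns an empty list when everything is in place, and describe_split("split/oulu_anno.pickle") shows how the split is organised before you write code against it.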

A trained model can be downloaded here: https://drive.google.com/open?id=1bz45QSdS2911-8FDmngGIyd5K4gYimzg
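The order of the training stages described in the Train steps can be sketched as a small driver script. This is an illustration under stated assumptions, not something shipped with the repository: run_training is a hypothetical helper, the scripts are assumed to take no arguments and to be run with bash from inside their own folders, and the second folder is taken to be joint-train, the name used in the other steps.

```python
import subprocess
from pathlib import Path

# (folder, script) pairs in the order given in the Train steps.
STAGES = [
    ("train-controller", "run_controller.sh"),  # stage 1: controller module
    ("joint-train", "run_oulu.sh"),             # stages 2 and 3
]


def run_training(repo_root, dry_run=True):
    """Run each stage script from inside its folder, in order.

    With dry_run=True nothing is executed; the planned commands are
    only returned so they can be inspected first.
    """
    commands = []
    for folder, script in STAGES:
        cwd = Path(repo_root) / folder
        cmd = ["bash", script]
        commands.append((str(cwd), cmd))
        if not dry_run:
            # check=True stops at the first stage that exits with an error.
            subprocess.run(cmd, cwd=cwd, check=True)
    return commands
```

Running run_training(".") lists the two commands without executing them; pass dry_run=False to start training once the dataset and vgg-face.mat are in place.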

Test

- Run joint-train/test_oulu.sh

Citation

If you use this code for your research, please cite our paper:

@article{ding2017exprgan,
  title={Exprgan: Facial expression editing with controllable expression intensity},
  author={Ding, Hui and Sricharan, Kumar and Chellappa, Rama},
  journal={AAAI},
  year={2018}
}
