uidilr / Gail_ppo_tf

License: MIT

TensorFlow implementation of Generative Adversarial Imitation Learning (GAIL) with discrete actions

Stars: ✭ 99

Programming Languages

python


Projects that are alternatives of or similar to Gail ppo tf

Easy Rl

A Chinese-language reinforcement learning tutorial. Read it online at https://datawhalechina.github.io/easy-rl/

Stars: ✭ 3,004 (+2934.34%)

Mutual labels: ppo, imitation-learning

Tianshou

An elegant PyTorch deep reinforcement learning library.

Stars: ✭ 4,109 (+4050.51%)

Mutual labels: ppo, imitation-learning

imitation learning

PyTorch implementation of some reinforcement learning algorithms: A2C, PPO, Behavioral Cloning from Observation (BCO), GAIL.

Stars: ✭ 93 (-6.06%)

Mutual labels: imitation-learning, ppo

Gym Continuousdoubleauction

A custom MARL (multi-agent reinforcement learning) environment where multiple agents trade against one another (self-play) in a zero-sum continuous double auction. Ray [RLlib] is used for training.

Stars: ✭ 50 (-49.49%)

Mutual labels: ppo

Pgdrive

PGDrive: an open-ended driving simulator with infinite scenes from procedural generation

Stars: ✭ 60 (-39.39%)

Mutual labels: imitation-learning

Run Skeleton Run

Reason8.ai PyTorch solution for NIPS RL 2017 challenge

Stars: ✭ 83 (-16.16%)

Mutual labels: ppo

Deeprl algorithms

Easy-to-read implementations of deep RL algorithms in PyTorch and TensorFlow 2 (DQN, REINFORCE, VPG, A2C, TRPO, PPO, DDPG, TD3, SAC)

Stars: ✭ 97 (-2.02%)

Mutual labels: ppo

Slm Lab

Modular Deep Reinforcement Learning framework in PyTorch. Companion library of the book "Foundations of Deep Reinforcement Learning".

Stars: ✭ 904 (+813.13%)

Mutual labels: ppo

Hand dapg

Repository to accompany RSS 2018 paper on dexterous hand manipulation

Stars: ✭ 88 (-11.11%)

Mutual labels: imitation-learning

Torch Ac

Recurrent and multi-process PyTorch implementation of deep reinforcement Actor-Critic algorithms A2C and PPO

Stars: ✭ 70 (-29.29%)

Mutual labels: ppo

Dogtorch

Who Let The Dogs Out? Modeling Dog Behavior From Visual Data https://arxiv.org/pdf/1803.10827.pdf

Stars: ✭ 66 (-33.33%)

Mutual labels: imitation-learning

Sc2aibot

Implementing reinforcement learning algorithms for the pysc2 environment

Stars: ✭ 83 (-16.16%)

Mutual labels: ppo

Torchrl

PyTorch implementation of reinforcement learning algorithms (Soft Actor-Critic (SAC), DDPG, TD3, DQN, A2C, PPO, TRPO)

Stars: ✭ 90 (-9.09%)

Mutual labels: ppo

Deterministic Gail Pytorch

PyTorch implementation of Deterministic Generative Adversarial Imitation Learning (GAIL) for Off Policy learning

Stars: ✭ 44 (-55.56%)

Mutual labels: imitation-learning

Imitation Learning

Imitation learning algorithms

Stars: ✭ 85 (-14.14%)

Mutual labels: imitation-learning

On Policy

This is the official implementation of Multi-Agent PPO.

Stars: ✭ 63 (-36.36%)

Mutual labels: ppo

Imitation Learning

Autonomous driving: Tensorflow implementation of the paper "End-to-end Driving via Conditional Imitation Learning"

Stars: ✭ 60 (-39.39%)

Mutual labels: imitation-learning

Inverse rl

Adversarial Imitation Via Variational Inverse Reinforcement Learning

Stars: ✭ 79 (-20.2%)

Mutual labels: imitation-learning

Generative Adversarial Imitation Learning

Implementation of Generative Adversarial Imitation Learning (GAIL) using TensorFlow

Dependencies

python>=3.5

tensorflow>=1.4

gym>=0.9.3

Gym environment

Env==CartPole-v0

State==Continuous

Action==Discrete
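
For orientation, the pairing of a continuous state with a discrete action can be sketched without Gym. The snippet below assumes the standard CartPole layout (an observation of 4 floats and two actions, 0 and 1). The linear softmax policy and its weights are hypothetical and are not taken from this repository.

```python
import math
import random

# Hypothetical linear policy: one weight vector per discrete action.
# CartPole observations hold 4 floats: cart position, cart velocity,
# pole angle, pole angular velocity.
WEIGHTS = [
    [0.0, -0.5, -1.0, -0.5],  # action 0: push the cart to the left
    [0.0, 0.5, 1.0, 0.5],     # action 1: push the cart to the right
]


def action_probabilities(observation):
    """Map a continuous observation to one probability per discrete action."""
    logits = [sum(w * x for w, x in zip(row, observation)) for row in WEIGHTS]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_action(observation, rng=random):
    """Draw an action index according to the policy's probabilities."""
    probs = action_probabilities(observation)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


if __name__ == "__main__":
    obs = [0.0, 0.1, 0.05, -0.2]  # made-up observation
    print(action_probabilities(obs), sample_action(obs))
```

The policy outputs a categorical distribution because the action space is discrete; a continuous action space would call for a different output, such as the parameters of a Gaussian.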

Usage

Train experts

python3 run_ppo.py

Sample trajectory using expert

python3 sample_trajectory.py

Run GAIL

python3 run_gail.py
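
As background, GAIL trains a discriminator to tell expert (state, action) pairs from the agent's, and rewards the policy for pairs the discriminator mistakes for expert data. The sketch below shows one common form of the loss and the surrogate reward in plain Python. The function names are hypothetical, `d_expert` and `d_agent` stand for discriminator outputs in (0, 1) read as "probability the pair came from the expert", and `run_gail.py` may use a different formulation.

```python
import math

EPS = 1e-10  # keep probabilities away from 0 and 1 so the logs stay finite


def _clip(p):
    return min(max(p, EPS), 1.0 - EPS)


def discriminator_loss(d_expert, d_agent):
    """Binary cross-entropy: expert pairs are labelled 1, agent pairs 0."""
    expert_term = sum(math.log(_clip(p)) for p in d_expert) / len(d_expert)
    agent_term = sum(math.log(1.0 - _clip(p)) for p in d_agent) / len(d_agent)
    return -(expert_term + agent_term)


def surrogate_rewards(d_agent):
    """Reward log D(s, a): larger when the discriminator is fooled."""
    return [math.log(_clip(p)) for p in d_agent]
```

These rewards would then replace the environment reward in the PPO update, so the policy never sees the true CartPole reward during GAIL training.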

Run supervised learning

python3 run_behavior_clone.py
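
Behavior cloning treats imitation as supervised classification: given expert (observation, action) pairs, fit a policy that predicts the expert's action. The sketch below illustrates the idea with a logistic-regression policy over two actions in plain Python. All names and the toy data are hypothetical; it is not a transcription of `run_behavior_clone.py`.

```python
import math


def predict_prob(weights, bias, observation):
    """Probability that the cloned policy picks action 1."""
    z = bias + sum(w * x for w, x in zip(weights, observation))
    return 1.0 / (1.0 + math.exp(-z))


def behavior_clone(observations, actions, epochs=200, lr=0.5):
    """Fit a logistic policy to expert pairs by gradient descent on cross-entropy."""
    weights = [0.0] * len(observations[0])
    bias = 0.0
    n = len(observations)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for obs, act in zip(observations, actions):
            err = predict_prob(weights, bias, obs) - act  # d(loss)/d(logit)
            grad_w = [g + err * x for g, x in zip(grad_w, obs)]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Toy "expert": push right (1) when the pole leans right, else push left (0).
expert_obs = [[0.0, 0.0, a, 0.0] for a in (-0.2, -0.1, -0.05, 0.05, 0.1, 0.2)]
expert_act = [0, 0, 0, 1, 1, 1]
w, b = behavior_clone(expert_obs, expert_act)
```

Unlike GAIL, this approach never interacts with the environment during training; it only fits the recorded expert data.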

Test trained policy

python3 test_policy.py

By default, the policy trained with GAIL is tested.

Pass --alg=bc or --alg=ppo to test a different policy.

To test a BC policy, also pass --model with the number taken from a model.ckpt-number checkpoint in the directory trained_models/bc.

Example

python3 test_policy.py --alg=bc --model=1000
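
To find a valid value for `--model`, one option is to list the step numbers present in the checkpoint directory. The sketch below assumes TensorFlow 1.x checkpoint naming, where a checkpoint saved as `model.ckpt-1000` produces files such as `model.ckpt-1000.index`. The helper names are hypothetical and not part of this repository.

```python
import os
import re

# Match the index file that TensorFlow 1.x writes for each saved step.
CKPT_PATTERN = re.compile(r"^model\.ckpt-(\d+)\.index$")


def checkpoint_numbers(filenames):
    """Return the sorted step numbers found among checkpoint file names."""
    numbers = set()
    for name in filenames:
        match = CKPT_PATTERN.match(name)
        if match:
            numbers.add(int(match.group(1)))
    return sorted(numbers)


def available_bc_checkpoints(directory="trained_models/bc"):
    """List step numbers in a directory; return [] if it does not exist."""
    if not os.path.isdir(directory):
        return []
    return checkpoint_numbers(os.listdir(directory))
```

Any number returned by `available_bc_checkpoints()` could then be passed as `--model`.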

Tensorboard

tensorboard --logdir=log

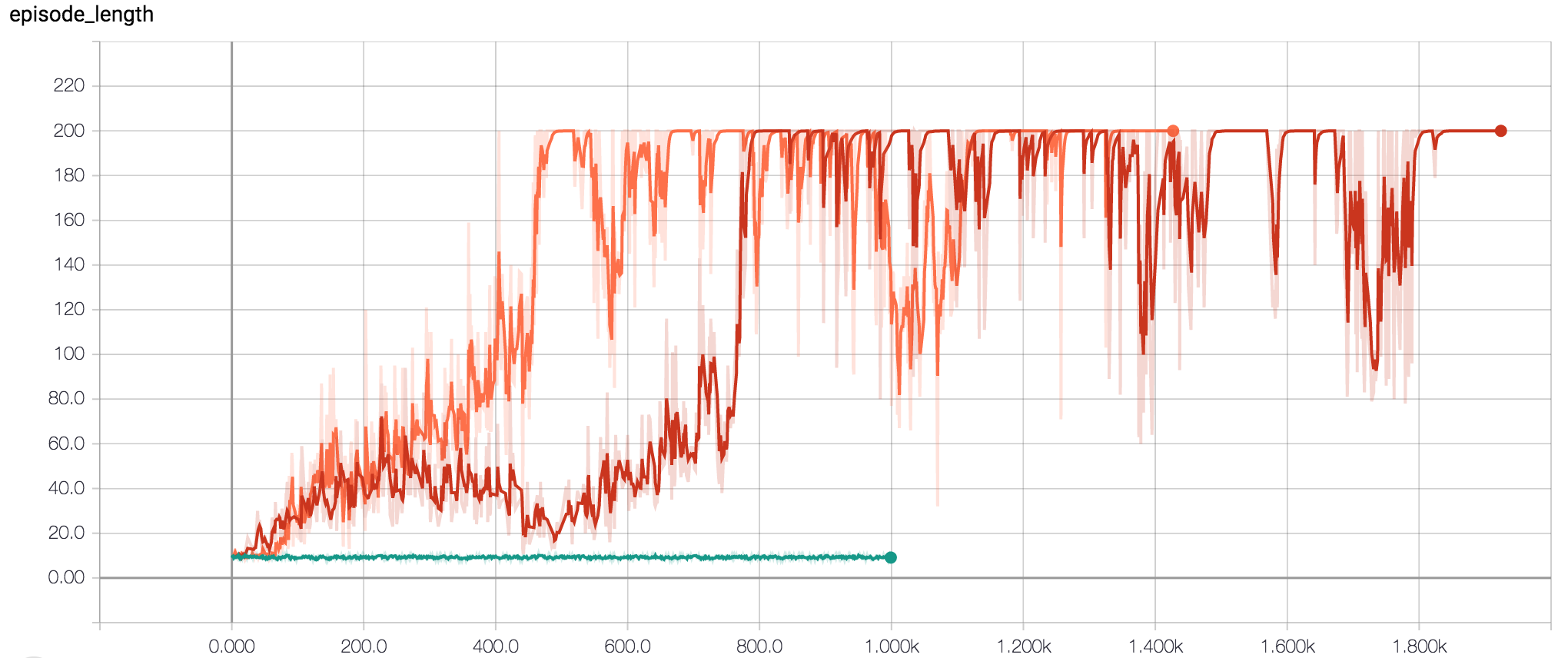

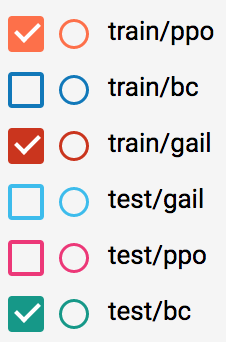

Results

|

|

|---|---|

| Fig.1 Training results | legend |

LICENSE

MIT LICENSE
