GANSpace: Discovering Interpretable GAN Controls

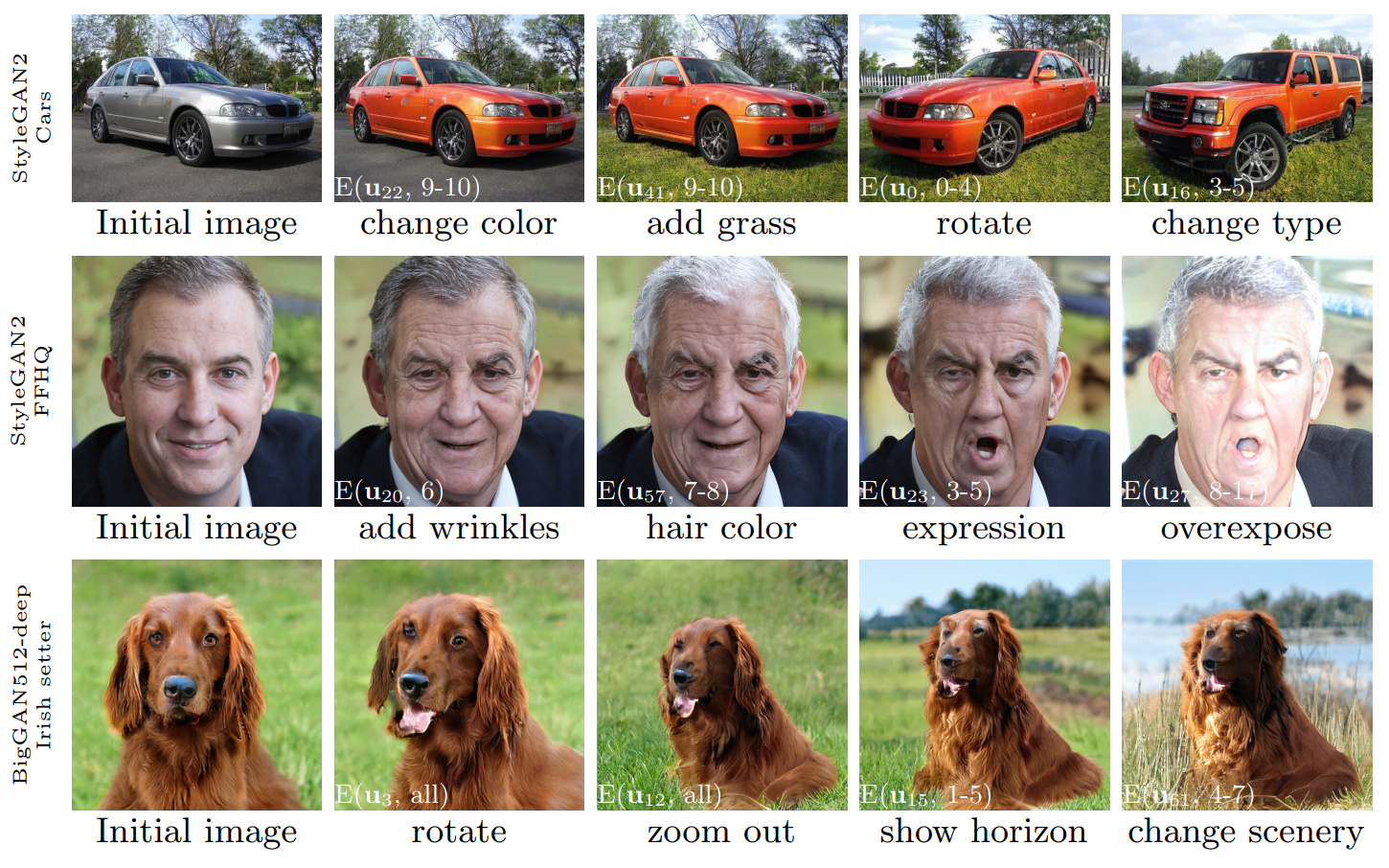

Figure 1: Sequences of image edits performed using controls discovered with our method, applied to three different GANs. The white insets specify the particular edits using notation explained in Section 3.4 ('Layer-wise Edits').

Erik Härkönen¹,², Aaron Hertzmann², Jaakko Lehtinen¹,³, Sylvain Paris²

¹Aalto University, ²Adobe Research, ³NVIDIA

https://arxiv.org/abs/2004.02546

Abstract: This paper describes a simple technique to analyze Generative Adversarial Networks (GANs) and create interpretable controls for image synthesis, such as change of viewpoint, aging, lighting, and time of day. We identify important latent directions based on Principal Components Analysis (PCA) applied in activation space. Then, we show that interpretable edits can be defined based on layer-wise application of these edit directions. Moreover, we show that BigGAN can be controlled with layer-wise inputs in a StyleGAN-like manner. A user may identify a large number of interpretable controls with these mechanisms. We demonstrate results on GANs from various datasets.
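The PCA step mentioned in the abstract can be illustrated with a minimal NumPy sketch. It is not the code from this repository: the random `samples` array stands in for vectors recorded from a real generator, and the names `principal_directions` and `edit` are hypothetical. Any step that maps activation-space components back to latent directions is omitted.

```python
import numpy as np

def principal_directions(samples, n_components):
    """PCA of row-vector samples: returns (mean, components, stdevs)."""
    mean = samples.mean(axis=0)
    centered = samples - mean
    # SVD of the centered data: rows of vt are unit-length principal directions.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    # Standard deviation of the data along each principal direction.
    stdevs = singular_values / np.sqrt(len(samples) - 1)
    return mean, vt[:n_components], stdevs[:n_components]

rng = np.random.default_rng(0)
# Stand-in data (assumption): a real run would sample many latents,
# push them through the network, and record the chosen layer's output.
scales = np.array([5.0, 2.0, 1.0, 0.5])
samples = rng.standard_normal((2000, 4)) * scales

mean, components, stdevs = principal_directions(samples, n_components=2)

def edit(latent, k, sigma):
    """Move a latent along component k by sigma standard deviations."""
    return latent + sigma * stdevs[k] * components[k]

edited = edit(samples[0], k=0, sigma=2.0)
```

Scaling each direction by its standard deviation keeps edit strengths comparable across components, which is presumably why the `--sigma` option below is expressed in stdevs.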

Video: https://youtu.be/jdTICDa_eAI

Setup

See the setup instructions.

Usage

This repository includes versions of BigGAN, StyleGAN, and StyleGAN2 modified to support per-layer latent vectors.
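As a rough illustration of what per-layer latent vectors make possible, the sketch below copies one latent to every layer and shifts only a chosen range of layers along an edit direction. The function name `layerwise_edit`, the half-open layer range, and the toy sizes are assumptions made for this example, not the interface of the modified models.

```python
import numpy as np

def layerwise_edit(latent, direction, strength, start, end, num_layers):
    """Broadcast one latent to every layer, then shift layers [start, end)."""
    per_layer = np.tile(latent, (num_layers, 1)).astype(float)
    # Only the selected layers receive the edit; the rest keep the original latent.
    per_layer[start:end] += strength * direction
    return per_layer

latent = np.zeros(8)
direction = np.eye(8)[0]  # hypothetical unit-length edit direction
edited = layerwise_edit(latent, direction, strength=3.0,
                        start=2, end=5, num_layers=6)
print(edited[:, 0])  # first coordinate of the latent seen by each layer
```

Restricting an edit to a few layers is what lets a single direction affect, for example, only coarse or only fine attributes of the output.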

Interactive model exploration

# Explore BigGAN-deep husky

python interactive.py --model=BigGAN-512 --class=husky --layer=generator.gen_z -n=1_000_000

# Explore StyleGAN2 ffhq in W space

python interactive.py --model=StyleGAN2 --class=ffhq --layer=style --use_w -n=1_000_000 -b=10_000

# Explore StyleGAN2 cars in Z space

python interactive.py --model=StyleGAN2 --class=car --layer=style -n=1_000_000 -b=10_000

# Apply previously saved edits interactively

python interactive.py --model=StyleGAN2 --class=ffhq --layer=style --use_w --inputs=out/directions

Visualize principal components

# Visualize StyleGAN2 ffhq W principal components

python visualize.py --model=StyleGAN2 --class=ffhq --use_w --layer=style -b=10_000

# Create videos of StyleGAN wikiart components (saved to ./out)

python visualize.py --model=StyleGAN --class=wikiart --use_w --layer=g_mapping -b=10_000 --batch --video

Options

Command line parameters:

--model one of [ProGAN, BigGAN-512, BigGAN-256, BigGAN-128, StyleGAN, StyleGAN2]

--class class name; leave empty to list options

--layer layer at which to perform PCA; leave empty to list options

--use_w treat W as the main latent space (StyleGAN / StyleGAN2)

--inputs load previously exported edits from directory

--sigma number of stdevs to use in visualize.py

-n number of PCA samples

-b override automatic minibatch size detection

-c number of components to keep
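The sketch below mirrors the options listed above with Python's `argparse`, mainly to show that values such as `-n=1_000_000` parse as ordinary integers. It is an illustration, not the parser used by `interactive.py` or `visualize.py`: the `dest` name `output_class` is hypothetical, and every default is left as `None` because the actual defaults are not documented here.

```python
import argparse

MODELS = ["ProGAN", "BigGAN-512", "BigGAN-256", "BigGAN-128",
          "StyleGAN", "StyleGAN2"]

def build_parser():
    parser = argparse.ArgumentParser(description="Illustrative option parser")
    parser.add_argument("--model", choices=MODELS)
    # "class" is a Python keyword, so the value is stored under another name.
    parser.add_argument("--class", dest="output_class")
    parser.add_argument("--layer")
    parser.add_argument("--use_w", action="store_true")
    parser.add_argument("--inputs")
    parser.add_argument("--sigma", type=float)
    parser.add_argument("-n", type=int)  # number of PCA samples
    parser.add_argument("-b", type=int)  # minibatch size override
    parser.add_argument("-c", type=int)  # number of components to keep
    return parser

command = "--model=StyleGAN2 --class=ffhq --layer=style --use_w -n=1_000_000 -b=10_000"
args = build_parser().parse_args(command.split())
print(args.n, args.b, args.use_w)
```

Python's `int()` accepts underscores as digit separators in strings, so `1_000_000` and `1000000` are equivalent on the command line under this sketch.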

Reproducibility

All figures presented in the main paper can be recreated using the included Jupyter notebooks:

- Figure 1: figure_teaser.ipynb

- Figure 2: figure_pca_illustration.ipynb

- Figure 3: figure_pca_cleanup.ipynb

- Figure 4: figure_style_content_sep.ipynb

- Figure 5: figure_supervised_comp.ipynb

- Figure 6: figure_biggan_style_resampling.ipynb

- Figure 7: figure_edit_zoo.ipynb

Known issues

- The interactive viewer sometimes freezes on startup on Ubuntu 18.04. The freeze is resolved by clicking on the terminal window and pressing the control key. Any insight into the issue would be greatly appreciated!

Integrating a new model

- Create a wrapper for the model in models/wrappers.py using the BaseModel interface.

- Add the model to get_model() in models/wrappers.py.
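The two steps can be pictured with the self-contained sketch below. Everything in it is hypothetical: `BaseModelSketch` is a stand-in written for this example and does not reproduce the real `BaseModel` interface, `MyGAN` is a toy wrapper, and `get_model_sketch` only imitates the idea of a name-based factory. Consult models/wrappers.py for the methods a real wrapper must implement.

```python
from abc import ABC, abstractmethod
import random

class BaseModelSketch(ABC):
    """Hypothetical stand-in for BaseModel; the real interface may differ."""

    def __init__(self, output_class):
        self.output_class = output_class

    @abstractmethod
    def sample_latent(self, seed):
        """Return one latent vector."""

    @abstractmethod
    def forward(self, latent):
        """Map a latent vector to an output."""

class MyGAN(BaseModelSketch):
    """Toy wrapper: a real one would load weights and call the generator."""
    latent_dim = 4

    def sample_latent(self, seed):
        rng = random.Random(seed)
        return [rng.gauss(0.0, 1.0) for _ in range(self.latent_dim)]

    def forward(self, latent):
        # Placeholder "image": a real wrapper would run the network here.
        return [2.0 * value for value in latent]

def get_model_sketch(name, output_class):
    """Illustrative factory: look the wrapper up by its model name."""
    registry = {"MyGAN": MyGAN}
    if name not in registry:
        raise ValueError(f"Unknown model {name!r}; options: {sorted(registry)}")
    return registry[name](output_class)

model = get_model_sketch("MyGAN", "ffhq")
image = model.forward(model.sample_latent(seed=0))
```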

Importing StyleGAN checkpoints from TensorFlow

It is possible to import trained StyleGAN and StyleGAN2 weights from TensorFlow into GANSpace.

StyleGAN

- Install TensorFlow: conda install tensorflow-gpu=1.*.

- Modify methods __init__(), load_model() in models/wrappers.py under class StyleGAN.

StyleGAN2

- Follow the instructions in models/stylegan2/stylegan2-pytorch/README.md. Make sure to use the fork in this specific folder when converting the weights for compatibility reasons.

- Save the converted checkpoint as checkpoints/stylegan2/<dataset>_<resolution>.pt.

- Modify methods __init__(), download_checkpoint() in models/wrappers.py under class StyleGAN2.
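The checkpoint naming pattern above can be assembled with a small helper such as the one below. The function `checkpoint_path` is hypothetical and not part of the repository, and `ffhq` with resolution `1024` is only an example pair of values.

```python
from pathlib import Path

def checkpoint_path(dataset, resolution, root="checkpoints"):
    """Build <root>/stylegan2/<dataset>_<resolution>.pt (naming from the step above)."""
    return Path(root) / "stylegan2" / f"{dataset}_{resolution}.pt"

path = checkpoint_path("ffhq", 1024)  # example values, not a required configuration
print(path.as_posix())
if not path.exists():
    print("Converted checkpoint not found; convert the weights and save them here first.")
```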

Acknowledgements

We would like to thank:

- The authors of the PyTorch implementations of BigGAN, StyleGAN, and StyleGAN2: Thomas Wolf, Piotr Bialecki, Thomas Viehmann, and Kim Seonghyeon.

- Joel Simon from ArtBreeder for providing us with the landscape model for StyleGAN (unfortunately, we cannot distribute this model).

- David Bau and colleagues for the excellent GAN Dissection project.

- Justin Pinkney for the Awesome Pretrained StyleGAN collection.

- Tuomas Kynkäänniemi for giving us a helping hand with the experiments.

- The Aalto Science-IT project for providing computational resources for this project.

Citation

@inproceedings{härkönen2020ganspace,

title = {GANSpace: Discovering Interpretable GAN Controls},

author = {Erik Härkönen and Aaron Hertzmann and Jaakko Lehtinen and Sylvain Paris},

booktitle = {Proc. NeurIPS},

year = {2020}

}

License

The code of this repository is released under the Apache 2.0 license.

The directory netdissect is a derivative of the GAN Dissection project, and is provided under the MIT license.

The directories models/biggan and models/stylegan2 are provided under the MIT license.