GraphVite - graph embedding at high speed and large scale

Docs | Tutorials | Benchmarks | Pre-trained Models

GraphVite is a general graph embedding engine, dedicated to high-speed and large-scale embedding learning in various applications.

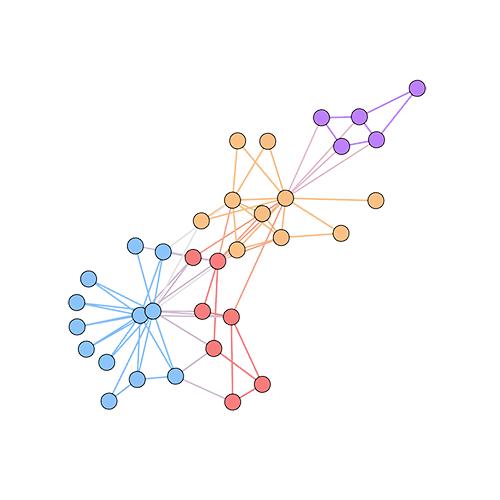

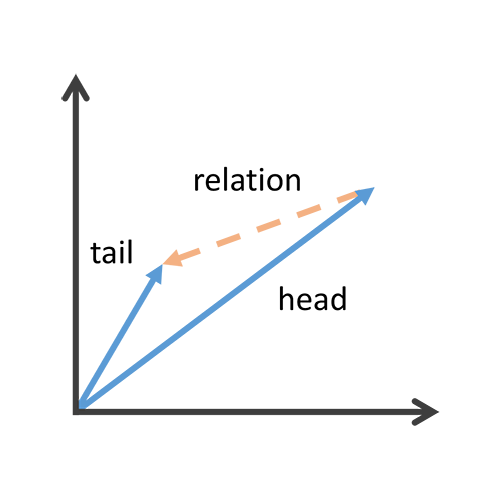

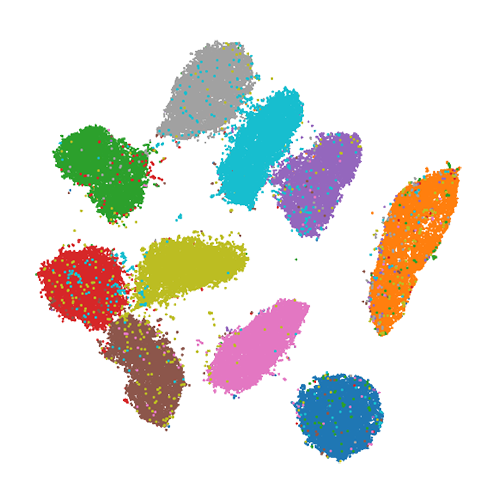

GraphVite provides complete training and evaluation pipelines for 3 applications: node embedding, knowledge graph embedding, and graph & high-dimensional data visualization. It also includes 9 popular models, along with their benchmarks on several standard datasets.

Here is a summary of the training time of GraphVite compared with the best open-source implementations on the 3 applications. All times were measured on a server with 24 CPU threads and 4 V100 GPUs.

Training time of node embedding on Youtube dataset.

| Model | Existing Implementation | GraphVite | Speedup |
|---|---|---|---|
| DeepWalk | 1.64 hrs (CPU parallel) | 1.19 mins | 82.9x |
| LINE | 1.39 hrs (CPU parallel) | 1.17 mins | 71.4x |
| node2vec | 24.4 hrs (CPU parallel) | 4.39 mins | 334x |

Training / evaluation time of knowledge graph embedding on FB15k dataset.

| Model | Existing Implementation | GraphVite | Speedup |
|---|---|---|---|
| TransE | 1.31 hrs / 1.75 mins (1 GPU) | 13.5 mins / 54.3 s | 5.82x / 1.93x |
| RotatE | 3.69 hrs / 4.19 mins (1 GPU) | 28.1 mins / 55.8 s | 7.88x / 4.50x |
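
The speedup column is the ratio of the two times once both are expressed in the same unit. The sketch below recomputes the TransE row from the figures in the table; the helper names `to_seconds` and `speedup` are made up for this illustration and are not part of GraphVite. Because the table reports rounded times, recomputing other rows this way may differ from the published speedup in the last digit.

```python
# Conversion factors for the units used in the benchmark tables.
SECONDS_PER_UNIT = {"s": 1.0, "mins": 60.0, "hrs": 3600.0}


def to_seconds(value, unit):
    """Convert a (value, unit) pair from the table into seconds."""
    return value * SECONDS_PER_UNIT[unit]


def speedup(existing, graphvite):
    """Ratio of the existing implementation's time to GraphVite's time."""
    return to_seconds(*existing) / to_seconds(*graphvite)


# TransE on FB15k: training time and evaluation time, taken from the table.
train = speedup((1.31, "hrs"), (13.5, "mins"))
evaluate = speedup((1.75, "mins"), (54.3, "s"))

print("{:.2f}x / {:.2f}x".format(train, evaluate))  # 5.82x / 1.93x
```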

Training time of high-dimensional data visualization on MNIST dataset.

| Model | Existing Implementation | GraphVite | Speedup |
|---|---|---|---|
| LargeVis | 15.3 mins (CPU parallel) | 13.9 s | 66.8x |

Requirements

Generally, GraphVite works on any Linux distribution with CUDA >= 9.2.

The library is compatible with Python 2.7 and 3.6/3.7.

Installation

From Conda

conda install -c milagraph -c conda-forge graphvite cudatoolkit=$(nvcc -V | grep -Po "(?<=V)\d+\.\d+")

If you only need embedding training without evaluation, you can use the following alternative with minimal dependencies.

conda install -c milagraph -c conda-forge graphvite-mini cudatoolkit=$(nvcc -V | grep -Po "(?<=V)\d+\.\d+")
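
Both conda commands pin `cudatoolkit` to the version reported by `nvcc -V`: the `grep -Po` call extracts the `major.minor` number that follows the letter `V`. The sketch below applies the same pattern in Python to an illustrative `nvcc -V` output, with the dot escaped so that it matches only a literal `.`. The sample text and the helper name `cuda_toolkit_version` are assumptions made for this example; actual `nvcc` output varies between CUDA releases.

```python
import re

# Illustrative `nvcc -V` output; the exact text differs between CUDA releases.
SAMPLE_NVCC_OUTPUT = """\
nvcc: NVIDIA (R) Cuda compiler driver
Copyright (c) 2005-2018 NVIDIA Corporation
Built on Sat_Aug_25_21:08:01_CDT_2018
Cuda compilation tools, release 10.0, V10.0.130
"""

# Digits, a literal dot, digits, immediately preceded by "V".
VERSION_PATTERN = re.compile(r"(?<=V)\d+\.\d+")


def cuda_toolkit_version(nvcc_output):
    """Return the major.minor CUDA version found in nvcc output, or None."""
    match = VERSION_PATTERN.search(nvcc_output)
    return match.group(0) if match else None


print(cuda_toolkit_version(SAMPLE_NVCC_OUTPUT))  # 10.0
```

If the function returns `None`, `nvcc` is probably not on the `PATH`, and the version has to be passed to `cudatoolkit=` by hand.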

From Source

Before installation, make sure you have conda installed.

git clone https://github.com/DeepGraphLearning/graphvite

cd graphvite

conda install -y --file conda/requirements.txt

mkdir build

cd build && cmake .. && make && cd -

cd python && python setup.py install && cd -

On Colab

!wget -c https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

!chmod +x Miniconda3-latest-Linux-x86_64.sh

!./Miniconda3-latest-Linux-x86_64.sh -b -p /usr/local -f

!conda install -y -c milagraph -c conda-forge graphvite python=3.6 cudatoolkit=$(nvcc -V | grep -Po "(?<=V)\d+\.\d+")

!conda install -y wurlitzer ipykernel

import site

site.addsitedir("/usr/local/lib/python3.6/site-packages")

%reload_ext wurlitzer

Quick Start

Here is a quick-start example of the node embedding application.

graphvite baseline quick start

Typically, the example takes no more than 1 minute. You will see output like the following.

Batch id: 6000

loss = 0.371041

------------- link prediction --------------

AUC: 0.899933

----------- node classification ------------

macro-F1@20%: 0.242114

micro-F1@20%: 0.391342

Baseline Benchmark

To reproduce a baseline benchmark, you only need to specify the keywords of the experiment, e.g. the model and the dataset.

graphvite baseline [keyword ...] [--no-eval] [--gpu n] [--cpu m] [--epoch e]

You may also set the number of GPUs and the number of CPUs per GPU.

Use graphvite list to get a list of available baselines.
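
When baselines are launched from a script, the command line can be assembled from the options in the synopsis above. The following is a minimal sketch: `baseline_command` is a hypothetical helper, not part of GraphVite, and the keywords `deepwalk` and `youtube` are illustrative values that should be checked against the output of graphvite list.

```python
def baseline_command(keywords, gpus=None, cpus_per_gpu=None, epochs=None,
                     evaluate=True):
    """Build the argument list for `graphvite baseline` (hypothetical helper)."""
    argv = ["graphvite", "baseline"] + list(keywords)
    if not evaluate:
        argv.append("--no-eval")              # skip the evaluation stage
    if gpus is not None:
        argv += ["--gpu", str(gpus)]          # number of GPUs
    if cpus_per_gpu is not None:
        argv += ["--cpu", str(cpus_per_gpu)]  # number of CPUs per GPU
    if epochs is not None:
        argv += ["--epoch", str(epochs)]      # value passed to --epoch
    return argv


# Illustrative keywords; confirm them with `graphvite list` before running.
argv = baseline_command(["deepwalk", "youtube"], gpus=4, cpus_per_gpu=6)
print(" ".join(argv))  # graphvite baseline deepwalk youtube --gpu 4 --cpu 6
```

The resulting list can be passed to `subprocess.run(argv, check=True)` on a machine where GraphVite is installed.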

Custom Experiment

Create a yaml configuration scaffold for graph, knowledge graph, visualization or word graph.

graphvite new [application ...] [--file f]

Fill in the necessary entries in the configuration following the instructions. You can then run the configuration with

graphvite run [config] [--no-eval] [--gpu n] [--cpu m] [--epoch e]

High-dimensional Data Visualization

You can visualize your high-dimensional vectors with a simple command line in GraphVite.

graphvite visualize [file] [--label label_file] [--save save_file] [--perplexity n] [--3d]

The file can be either a numpy dump *.npy or a text matrix *.txt. For the save file, we recommend using the png format, although pdf is also supported.
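
As a rough sketch of how such input files can be produced, the snippet below writes the same random matrix once as a numpy dump and once as a text matrix. The layout (one sample per row, whitespace-separated values in the text file) is an assumption made for this example, and the file names are placeholders.

```python
import os
import tempfile

import numpy as np

# Random stand-in data: 100 samples with 50 dimensions each.
rng = np.random.RandomState(0)
vectors = rng.rand(100, 50).astype(np.float32)

out_dir = tempfile.mkdtemp()
npy_path = os.path.join(out_dir, "vectors.npy")
txt_path = os.path.join(out_dir, "vectors.txt")
png_path = os.path.join(out_dir, "vectors.png")

np.save(npy_path, vectors)     # binary numpy dump (*.npy)
np.savetxt(txt_path, vectors)  # text matrix (*.txt), one row per sample

# Command line to run afterwards, following the synopsis above.
print("graphvite visualize {} --save {}".format(npy_path, png_path))
```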

Contributing

We welcome all contributions, from bug fixes to new features. Please let us know if you have any suggestions for our library.

Development Team

GraphVite is developed by MilaGraph, led by Prof. Jian Tang.

Authors of this project are Zhaocheng Zhu, Shizhen Xu, Meng Qu and Jian Tang. Contributors include Kunpeng Wang and Zhijian Duan.

Citation

If you find GraphVite useful for your research or development, please cite the following paper.

@inproceedings{zhu2019graphvite,

title={GraphVite: A High-Performance CPU-GPU Hybrid System for Node Embedding},

author={Zhu, Zhaocheng and Xu, Shizhen and Qu, Meng and Tang, Jian},

booktitle={The World Wide Web Conference},

pages={2494--2504},

year={2019},

organization={ACM}

}

Acknowledgements

We would like to thank Compute Canada for supporting GPU servers. We especially thank Wenbin Hou for useful discussions on C++ and GPU programming techniques.