Zhen-Dong / Hawq

Programming Languages

Projects that are alternatives of or similar to Hawq

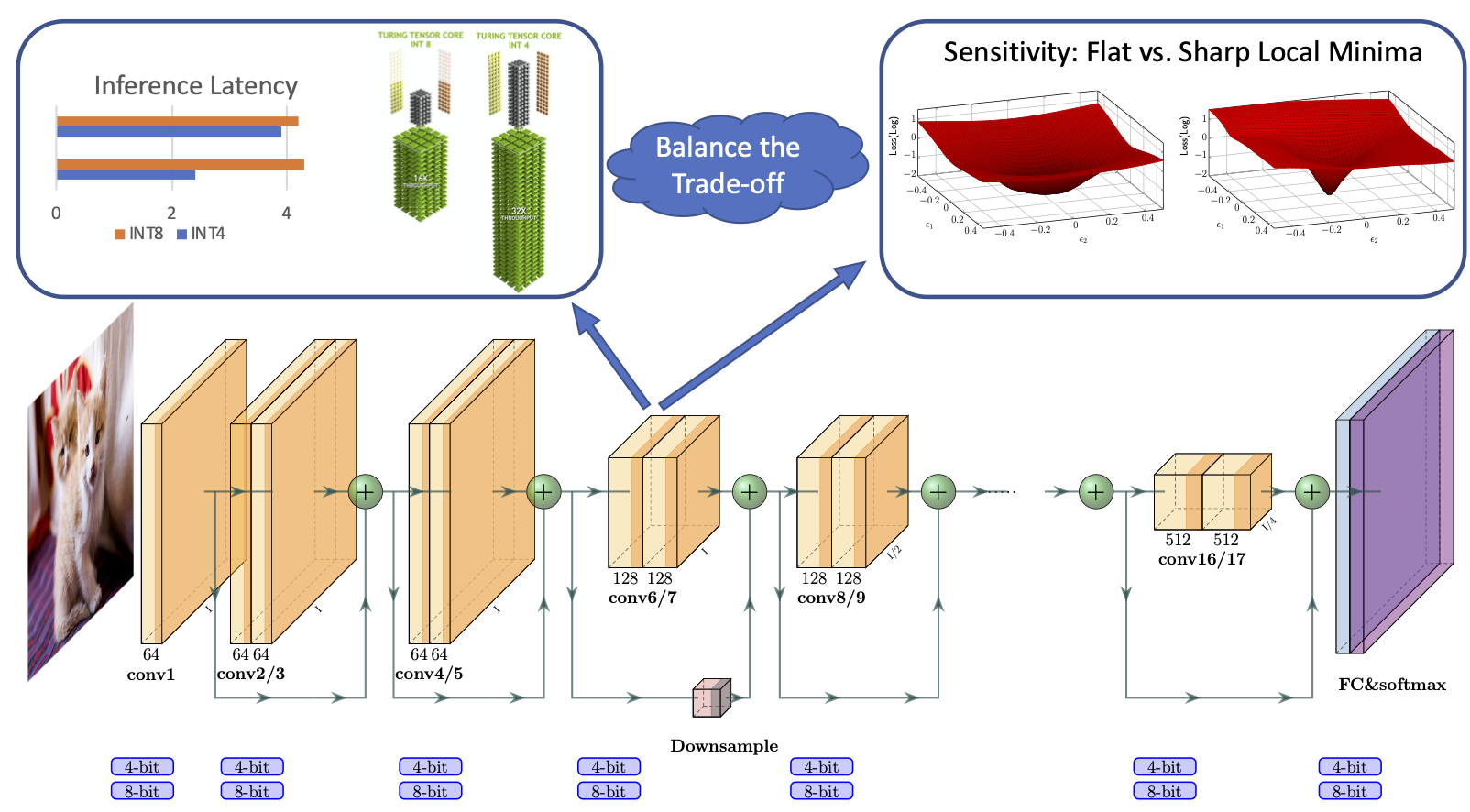

HAWQ: Hessian AWare Quantization

HAWQ is an advanced quantization library written for PyTorch. HAWQ enables low-precision and mixed-precision uniform quantization, with direct hardware implementation through TVM.
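As a rough illustration of what uniform quantization means, the sketch below maps floating-point weights to signed `num_bits`-bit integers using a single symmetric scale, then maps them back. It is plain Python and is not HAWQ's implementation. The function names are hypothetical, and HAWQ's own quantization modules are more involved.

```python
def quantize_symmetric(values, num_bits):
    """Uniformly quantize floats to signed integers with one symmetric scale.

    Integers lie in [-(2**(num_bits - 1) - 1), 2**(num_bits - 1) - 1].
    Returns (integers, scale) so that value ~= integer * scale.
    """
    qmax = 2 ** (num_bits - 1) - 1
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, which would otherwise give a zero scale.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest grid point, then clamp to the representable range.
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, scale


def dequantize(ints, scale):
    """Map integers back to (approximate) floating-point values."""
    return [q * scale for q in ints]


weights = [0.5, -1.0, 0.25, 0.0, 0.9]
for bits in (8, 4):
    ints, scale = quantize_symmetric(weights, bits)
    restored = dequantize(ints, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit ints={ints} max_error={max_err:.4f}")
```

In this picture, mixed precision simply means choosing `num_bits` per layer instead of one value for the whole network. HAWQ uses Hessian-based sensitivity to decide those per-layer bit widths.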

For more details please see:

Installation

- PyTorch version >= 1.4.0

- Python version >= 3.6

- For training new models, you'll also need NVIDIA GPUs and NCCL

- To install HAWQ and develop locally:

```bash
git clone https://github.com/Zhen-Dong/HAWQ.git
cd HAWQ
pip install -r requirements.txt
```
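The version requirements above are easy to get wrong with plain string comparison: as strings, `"1.10.0" < "1.4.0"` is true. A small, hypothetical helper like the one below compares versions numerically instead. It is not part of HAWQ, and it ignores pre-release tags such as `rc1`.

```python
import sys


def parse_version(text):
    """Turn a version string like '1.13.1+cu117' into (1, 13, 1).

    Any local build tag after '+' is dropped, and parsing stops at the
    first component that does not start with a digit.
    """
    parts = []
    for piece in text.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True if `installed` is at least `minimum`, compared numerically."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '1.4' and '1.4.0' compare as equal.
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


if __name__ == "__main__":
    print("Python >= 3.6:", sys.version_info >= (3, 6))
    try:
        import torch
        print("PyTorch >= 1.4.0:", meets_minimum(str(torch.__version__), "1.4.0"))
    except ImportError:
        print("PyTorch is not installed")
```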

Getting Started

Quantization-Aware Training

An example of running uniform 8-bit quantization for ResNet50 on ImageNet:

```bash
export CUDA_VISIBLE_DEVICES=0
python quant_train.py -a resnet50 --epochs 1 --lr 0.0001 --batch-size 128 \
    --data /path/to/imagenet/ --pretrained --save-path /path/to/checkpoints/ \
    --act-range-momentum=0.99 --wd 1e-4 --data-percentage 0.0001 --fix-BN \
    --checkpoint-iter -1 --quant-scheme uniform8
```
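The `--act-range-momentum=0.99` flag suggests that activation ranges are tracked as an exponential moving average across batches. The sketch below illustrates that idea under this assumption. The helper name and the exact update rule are illustrative only, so check `quant_train.py` and the quantization modules for HAWQ's actual behavior.

```python
def update_activation_range(running_min, running_max, batch, momentum=0.99):
    """Exponential moving average of the activation min/max (illustrative).

    On the first batch (running values are None) the batch statistics are
    used directly; afterwards each bound moves a fraction (1 - momentum)
    toward the current batch's value.
    """
    batch_min, batch_max = min(batch), max(batch)
    if running_min is None or running_max is None:
        return batch_min, batch_max
    new_min = momentum * running_min + (1 - momentum) * batch_min
    new_max = momentum * running_max + (1 - momentum) * batch_max
    return new_min, new_max


lo, hi = None, None
for batch in ([0.0, 1.0], [-1.0, 3.0], [0.2, 0.8]):
    lo, hi = update_activation_range(lo, hi, batch)
    print(f"range = [{lo:.4f}, {hi:.4f}]")
```

A momentum close to 1 makes the range change slowly, so a single outlier batch cannot suddenly stretch the quantization grid.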

The commands for other quantization schemes and for other networks are shown in the model zoo.

Inference Acceleration
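HAWQ-V3's title refers to dyadic numbers, i.e. rationals of the form b / 2^c with integers b and c. Approximating a real-valued rescaling factor this way lets the requantization step after an integer matrix multiply run with only an integer multiply and a bit shift. The sketch below illustrates that arithmetic in plain Python. The function names, the example scale, and the fixed 16-bit shift are hypothetical choices, not HAWQ's kernels.

```python
def to_dyadic(scale, shift=16):
    """Approximate a positive real `scale` by b / 2**shift with integer b."""
    b = round(scale * (1 << shift))
    return b, shift


def requantize(acc, b, shift):
    """Integer-only rescale of an accumulator: acc * b / 2**shift.

    Adding 2**(shift - 1) before the arithmetic right shift rounds to the
    nearest integer (ties go toward +infinity). No floating point is used.
    """
    return (acc * b + (1 << (shift - 1))) >> shift


# Hypothetical requantization factor, e.g. S_weight * S_input / S_output.
scale = 0.0123
b, c = to_dyadic(scale)
for acc in (5000, -4096, 123456):
    print(acc, requantize(acc, b, c), round(acc * scale, 3))
```

The error has two parts: at most 0.5 from the final rounding, plus |acc| times the approximation error of b / 2^c, which shrinks as the shift grows.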

Experimental Results

The results below correspond to Table I and Table II in HAWQ-V3: Dyadic Neural Network Quantization.

ResNet18 on ImageNet

| Model | Quantization | Model Size (MB) | BOPS (G) | Accuracy (%) | Inference Speed (batch=8, ms) | Download |
|---|---|---|---|---|---|---|
| ResNet18 | Floating Point | 44.6 | 1858 | 71.47 | 9.7 (1.0x) | resnet18_baseline |
| ResNet18 | W8A8 | 11.1 | 116 | 71.56 | 3.3 (3.0x) | resnet18_uniform8 |
| ResNet18 | Mixed Precision | 6.7 | 72 | 70.22 | 2.7 (3.6x) | resnet18_bops0.5 |
| ResNet18 | W4A4 | 5.8 | 34 | 68.45 | 2.2 (4.4x) | resnet18_uniform4 |
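The BOPS and model-size columns follow from simple arithmetic. Assuming the common definitions BOPS = MACs × weight bits × activation bits and model size = parameters × weight bits, switching ResNet18 from 32-bit floats to W8A8 should divide BOPS by (32·32) / (8·8) = 16 and the size by 4. That matches the table (1858 → 116, 44.6 → 11.1). The sketch below reproduces the check; the helper names are hypothetical.

```python
def bops(macs, w_bits, a_bits):
    """Bit operations: each multiply-accumulate costs w_bits * a_bits."""
    return macs * w_bits * a_bits


def model_size(size_fp32, w_bits):
    """Scale a 32-bit model size to uniform `w_bits`-bit weights."""
    return size_fp32 * w_bits / 32


# Floating-point ResNet18 row from the table above.
fp_bops_g = 1858.0   # BOPS (G) at 32-bit weights and activations
fp_size_mb = 44.6    # Model Size (MB)

macs_g = fp_bops_g / (32 * 32)  # implied GMACs, about 1.81
print(f"W8A8 BOPS ~ {bops(macs_g, 8, 8):.1f} G")         # table reports 116
print(f"W8A8 size ~ {model_size(fp_size_mb, 8):.2f} MB")  # table reports 11.1
print(f"W4A4 BOPS ~ {bops(macs_g, 4, 4):.1f} G")         # table reports 34
```

The W4A4 row does not fit this uniform formula. About 29 G BOPS is predicted versus 34 G reported, and uniform 4-bit weights would give 44.6 / 8 ≈ 5.6 MB versus 5.8 MB reported. This suggests some layers stay above 4 bits, though the table alone does not say which.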

ResNet50 on ImageNet

| Model | Quantization | Model Size (MB) | BOPS (G) | Accuracy (%) | Inference Speed (batch=8, ms) | Download |
|---|---|---|---|---|---|---|
| ResNet50 | Floating Point | 97.8 | 3951 | 77.72 | 26.2 (1.0x) | resnet50_baseline |
| ResNet50 | W8A8 | 24.5 | 247 | 77.58 | 8.5 (3.1x) | resnet50_uniform8 |
| ResNet50 | Mixed Precision | 18.7 | 154 | 75.39 | 6.9 (3.8x) | resnet50_bops0.5 |
| ResNet50 | W4A4 | 13.1 | 67 | 74.24 | 5.8 (4.5x) | resnet50_uniform4 |
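The speed-up in parentheses is the floating-point latency divided by the quantized latency. Recomputing it, together with the accuracy drop relative to the baseline, is a quick sanity check on the ResNet50 rows. The values below are copied from the table, and the script is only an illustration.

```python
baseline_ms, baseline_acc = 26.2, 77.72  # ResNet50, floating point

rows = {
    "W8A8": (8.5, 77.58),
    "Mixed Precision": (6.9, 75.39),
    "W4A4": (5.8, 74.24),
}

summary = {}
for scheme, (latency_ms, acc) in rows.items():
    speedup = baseline_ms / latency_ms
    drop = baseline_acc - acc
    summary[scheme] = (f"{speedup:.1f}x", round(drop, 2))
    print(f"{scheme}: {speedup:.1f}x faster, {drop:.2f} percentage points lower accuracy")
```

Because the reported latencies are rounded to 0.1 ms, a recomputed ratio can differ by 0.1x. For example, for ResNet18 W8A8, 9.7 / 3.3 ≈ 2.9, while the table lists 3.0x.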

More results for other quantization schemes and models, along with the corresponding commands and important notes, are available in the model zoo.

To download the quantized models with wget, see the example command in the model zoo.

Checkpoints in the model zoo are saved in floating-point precision. To reduce their size, BitPack can be applied to the `weight_integer` tensors or directly to the `quantized_checkpoint.pth.tar` file.
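The saving comes from storing low-bit integers densely instead of as 32-bit floats. As an illustration of the idea only (this is not BitPack's interface), the sketch below packs signed 4-bit values two per byte and unpacks them again. The function names are hypothetical.

```python
def pack_int4(values):
    """Pack signed 4-bit integers (-8..7) two per byte, low nibble first."""
    values = list(values)
    if len(values) % 2:
        values.append(0)  # pad to an even count; unpack_int4 trims it off
    packed = bytearray()
    for lo, hi in zip(values[0::2], values[1::2]):
        if not (-8 <= lo <= 7 and -8 <= hi <= 7):
            raise ValueError("value outside the signed 4-bit range")
        # & 0xF keeps the two's-complement low nibble of each value.
        packed.append((lo & 0xF) | ((hi & 0xF) << 4))
    return bytes(packed)


def unpack_int4(data, count):
    """Inverse of pack_int4; `count` is the number of original values."""
    values = []
    for byte in data:
        for nibble in (byte & 0xF, byte >> 4):
            # Sign-extend: nibbles 8..15 represent -8..-1.
            values.append(nibble - 16 if nibble >= 8 else nibble)
    return values[:count]


weights_int4 = [3, -8, 7, -1, 0]
packed = pack_int4(weights_int4)
print(len(packed), "bytes for", len(weights_int4), "values")
assert unpack_int4(packed, len(weights_int4)) == weights_int4
```

Stored this way, 4-bit weights take one eighth of the space of 32-bit floats, not counting metadata such as scales.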

Related Works

- HAWQ-V3: Dyadic Neural Network Quantization

- HAWQ-V2: Hessian Aware trace-Weighted Quantization of Neural Networks (NeurIPS 2020)

- HAWQ: Hessian AWare Quantization of Neural Networks with Mixed-Precision (ICCV 2019)

License

HAWQ is released under the MIT license.