malllabiisc / Hypergcn

Licence: apache-2.0

NeurIPS 2019: HyperGCN: A New Method of Training Graph Convolutional Networks on Hypergraphs

Stars: ✭ 80

Programming Languages

python

Projects that are alternatives to, or similar to, HyperGCN

Awesome Semi Supervised Learning

📜 An up-to-date & curated list of awesome semi-supervised learning papers, methods & resources.

Stars: ✭ 538 (+572.5%)

Mutual labels: semi-supervised-learning

Susi

SuSi: Python package for unsupervised, supervised and semi-supervised self-organizing maps (SOM)

Stars: ✭ 42 (-47.5%)

Mutual labels: semi-supervised-learning

Mean Teacher

A state-of-the-art semi-supervised method for image recognition

Stars: ✭ 1,130 (+1312.5%)

Mutual labels: semi-supervised-learning

Ganomaly

GANomaly: Semi-Supervised Anomaly Detection via Adversarial Training

Stars: ✭ 563 (+603.75%)

Mutual labels: semi-supervised-learning

Gans In Action

Companion repository to GANs in Action: Deep learning with Generative Adversarial Networks

Stars: ✭ 748 (+835%)

Mutual labels: semi-supervised-learning

Semi Supervised Learning Pytorch

Several SSL methods (Pi model, Mean Teacher) are implemented in PyTorch

Stars: ✭ 49 (-38.75%)

Mutual labels: semi-supervised-learning

Stn Ocr

Code for the paper STN-OCR: A single Neural Network for Text Detection and Text Recognition

Stars: ✭ 473 (+491.25%)

Mutual labels: semi-supervised-learning

Grand

Source code and dataset of the NeurIPS 2020 paper "Graph Random Neural Network for Semi-Supervised Learning on Graphs"

Stars: ✭ 75 (-6.25%)

Mutual labels: semi-supervised-learning

Ladder

Implementation of Ladder Network in PyTorch.

Stars: ✭ 37 (-53.75%)

Mutual labels: semi-supervised-learning

Ali Pytorch

PyTorch implementation of Adversarially Learned Inference (BiGAN).

Stars: ✭ 61 (-23.75%)

Mutual labels: semi-supervised-learning

Alibi Detect

Algorithms for outlier and adversarial instance detection, concept drift and metrics.

Stars: ✭ 604 (+655%)

Mutual labels: semi-supervised-learning

Awesome Federated Learning

Federated Learning Library: https://fedml.ai

Stars: ✭ 624 (+680%)

Mutual labels: semi-supervised-learning

Usss iccv19

Code for the paper "Universal Semi-Supervised Semantic Segmentation", accepted at ICCV 2019

Stars: ✭ 57 (-28.75%)

Mutual labels: semi-supervised-learning

See

Code for the AAAI 2018 publication "SEE: Towards Semi-Supervised End-to-End Scene Text Recognition"

Stars: ✭ 545 (+581.25%)

Mutual labels: semi-supervised-learning

Sparsely Grouped Gan

Code for paper "Sparsely Grouped Multi-task Generative Adversarial Networks for Facial Attribute Manipulation"

Stars: ✭ 68 (-15%)

Mutual labels: semi-supervised-learning

Ssgan Tensorflow

A Tensorflow implementation of Semi-supervised Learning Generative Adversarial Networks (NIPS 2016: Improved Techniques for Training GANs).

Stars: ✭ 496 (+520%)

Mutual labels: semi-supervised-learning

Social Media Depression Detector

😔 😞 😣 😖 😩 Detect depression on social media using the ssToT method introduced in our ASONAM 2017 paper titled "Semi-Supervised Approach to Monitoring Clinical Depressive Symptoms in Social Media"

Stars: ✭ 45 (-43.75%)

Mutual labels: semi-supervised-learning

Dtc

Semi-supervised Medical Image Segmentation through Dual-task Consistency

Stars: ✭ 79 (-1.25%)

Mutual labels: semi-supervised-learning

Deepaffinity

Protein-compound affinity prediction through unified RNN-CNN

Stars: ✭ 75 (-6.25%)

Mutual labels: semi-supervised-learning

Acgan Pytorch

PyTorch implementation of Conditional Image Synthesis with Auxiliary Classifier GANs

Stars: ✭ 57 (-28.75%)

Mutual labels: semi-supervised-learning

HyperGCN: A New Method of Training Graph Convolutional Networks on Hypergraphs

Source code for the NeurIPS 2019 paper "HyperGCN: A New Method of Training Graph Convolutional Networks on Hypergraphs".

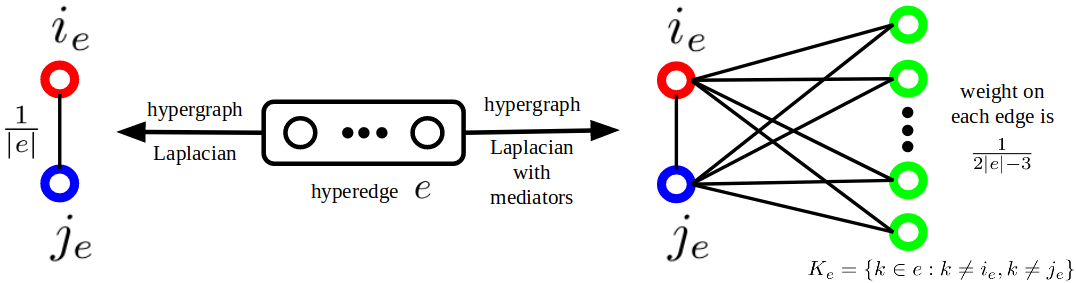

Overview of HyperGCN: *Given a hypergraph and node features, HyperGCN approximates the hypergraph by a graph in which each hyperedge is approximated by a subgraph consisting of an edge between maximally disparate nodes and edges between each of these and every other node (mediator) of the hyperedge. A graph convolutional network (GCN) is then run on the resulting graph approximation.*
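The approximation described above can be sketched in plain Python. This is an illustrative sketch, not the repository's implementation: the helper names (`project`, `approximate_hyperedge`, `hypergraph_to_graph`, `gcn_propagate`) are hypothetical, disparity is measured by projecting node features onto a single fixed direction vector (a simplifying assumption), and each hyperedge's total weight of 1 is split evenly over its edges, which gives 1/(2|e| - 3) per edge when mediators are used. The last function applies one standard GCN aggregation step, D^-1/2 (A + I) D^-1/2 X, leaving out the learned weight matrix and nonlinearity.

```python
import math
from collections import defaultdict


def project(features, direction):
    """Dot product of each node's feature vector with one direction vector."""
    return {v: sum(f * d for f, d in zip(x, direction)) for v, x in features.items()}


def approximate_hyperedge(hyperedge, proj, mediators=True):
    """Replace one hyperedge (distinct nodes) by weighted pairwise edges.

    The nodes with the largest and smallest projection (the maximally
    disparate pair) are joined; with mediators, every other node is linked
    to both of them. The hyperedge's total weight of 1 is split evenly over
    its edges (an assumption of this sketch).
    """
    nodes = list(hyperedge)
    if len(nodes) < 2:
        return {}
    i = max(nodes, key=lambda v: proj[v])
    j = min(nodes, key=lambda v: proj[v])
    if i == j:  # all projections tie: fall back to two distinct nodes
        i, j = nodes[0], nodes[1]
    pairs = [(i, j)]
    if mediators:
        for k in nodes:
            if k != i and k != j:
                pairs += [(i, k), (j, k)]
    w = 1.0 / len(pairs)  # 1 / (2|e| - 3) with mediators, 1 without
    return {tuple(sorted(p)): w for p in pairs}


def hypergraph_to_graph(hyperedges, features, direction, mediators=True):
    """Sum the per-hyperedge approximations into one weighted graph."""
    proj = project(features, direction)
    graph = defaultdict(float)
    for e in hyperedges:
        for pair, w in approximate_hyperedge(e, proj, mediators).items():
            graph[pair] += w
    return dict(graph)


def gcn_propagate(graph, features):
    """One GCN aggregation, D^-1/2 (A + I) D^-1/2 X, without learned weights."""
    adj = {v: {v: 1.0} for v in features}  # self-loops
    for (u, v), w in graph.items():
        adj[u][v] = adj[u].get(v, 0.0) + w
        adj[v][u] = adj[v].get(u, 0.0) + w
    deg = {v: sum(nbrs.values()) for v, nbrs in adj.items()}
    out = {}
    for v, nbrs in adj.items():
        acc = [0.0] * len(features[v])
        for u, w in nbrs.items():
            coef = w / math.sqrt(deg[v] * deg[u])
            for k, x in enumerate(features[u]):
                acc[k] += coef * x
        out[v] = acc
    return out


# Toy example: one hyperedge over four nodes with 1-dimensional features.
features = {0: [0.0], 1: [1.0], 2: [3.0], 3: [2.0]}
graph = hypergraph_to_graph([[0, 1, 2, 3]], features, direction=[1.0])
smoothed = gcn_propagate(graph, features)
```

In the toy example, nodes 2 and 0 form the maximally disparate pair, so with mediators the hyperedge becomes 2·4 - 3 = 5 edges of weight 0.2; passing `mediators=False` keeps only the edge between nodes 0 and 2, with weight 1.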

Dependencies

- Compatible with PyTorch 1.0 and Python 3.x.

- For data (and/or splits) not used in the paper, please consider tuning hyperparameters such as hidden size, learning rate, seed, etc. on validation data.

Training model (node classification):

- To start training, run:

  `python hypergcn.py --mediators True --split 1 --data coauthorship --dataset dblp`

- `--mediators` denotes whether to use mediators (True) or not (False)

- `--split` is the train-test split number
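How `hypergcn.py` parses its arguments is not shown here. As a hedged illustration, the hypothetical parser below accepts the same four flags as the training command; its defaults are assumptions, and `--data`/`--dataset` are passed through as plain strings because their meaning is not documented here. It mainly shows why a `True`/`False` flag needs an explicit converter: with argparse's `type=bool`, any non-empty string, including `"False"`, would be read as `True`.

```python
import argparse


def str2bool(value):
    """Convert 'True'/'False'-style strings to bool (type=bool would not)."""
    lowered = value.strip().lower()
    if lowered in ("true", "t", "yes", "1"):
        return True
    if lowered in ("false", "f", "no", "0"):
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser():
    # Hypothetical parser mirroring the README's flags; the defaults are
    # illustrative and are not taken from the repository.
    parser = argparse.ArgumentParser(description="HyperGCN training (sketch)")
    parser.add_argument("--mediators", type=str2bool, default=True,
                        help="use mediators (True) or not (False)")
    parser.add_argument("--split", type=int, default=1,
                        help="train-test split number")
    parser.add_argument("--data", default="coauthorship")
    parser.add_argument("--dataset", default="dblp")
    return parser


# Parse an explicit argument list instead of sys.argv for demonstration.
args = build_parser().parse_args(
    ["--mediators", "False", "--split", "1",
     "--data", "coauthorship", "--dataset", "dblp"]
)
```

Here `args.mediators` is the boolean `False`, whereas `bool("False")` evaluates to `True`.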

Citation:

@incollection{hypergcn_neurips19,
  title = {HyperGCN: A New Method For Training Graph Convolutional Networks on Hypergraphs},
  author = {Yadati, Naganand and Nimishakavi, Madhav and Yadav, Prateek and Nitin, Vikram and Louis, Anand and Talukdar, Partha},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS) 32},
  pages = {1509--1520},
  year = {2019},
  publisher = {Curran Associates, Inc.}
}
