helmut-hoffer-von-ankershoffen / Jetson

Licence: mit

Helmut Hoffer von Ankershoffen experimenting with arm64 based NVIDIA Jetson (Nano and AGX Xavier) edge devices running Kubernetes (K8s) for machine learning (ML) including Jupyter Notebooks, TensorFlow Training and TensorFlow Serving using CUDA for smart IoT.

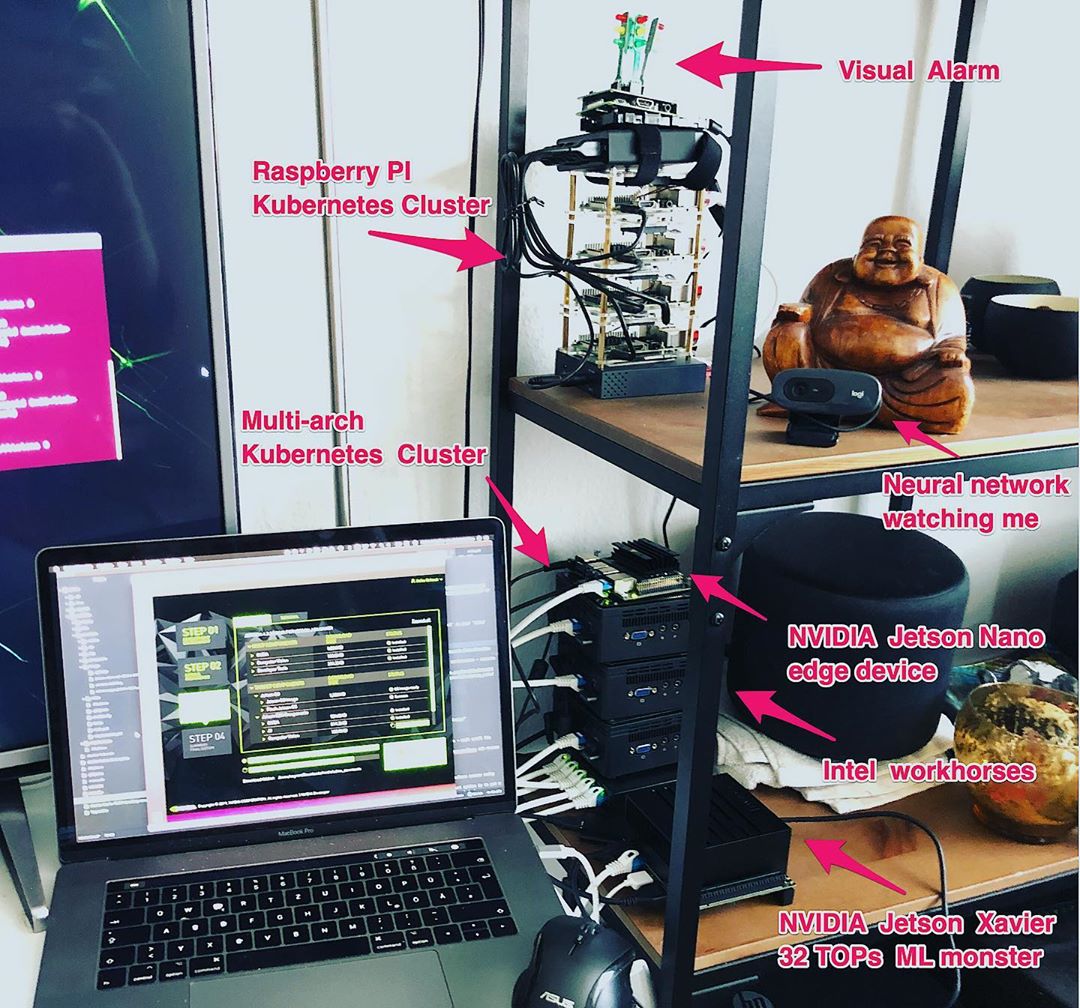

NVIDIA Jetson Nano and NVIDIA Jetson AGX Xavier for Kubernetes (K8s) and machine learning (ML) for smart IoT

Experimenting with arm64 based NVIDIA Jetson (Nano and AGX Xavier) edge devices running Kubernetes (K8s) for machine learning (ML) including Jupyter Notebooks, TensorFlow Training and TensorFlow Serving using CUDA for smart IoT.

Author: Helmut Hoffer von Ankershoffen né Oertel

Hints:

- Assumes an NVIDIA Jetson Nano, TX1, TX2 or AGX Xavier as edge device.

- Assumes a macOS workstation for development such as MacBook Pro

- Assumes access to a bare-metal Kubernetes cluster the Jetson devices can join e.g. set up using Project Max.

- Assumes basic knowledge of Ansible, Docker and Kubernetes.

Mission

- Evaluate feasibility and complexity of Kubernetes + machine learning on edge devices for future smart IoT projects

- Provide patterns to the Jetson community on how to use Ansible and Kubernetes with Jetson devices for automation and orchestration

- Lower the entry barrier for some machine learning students by using cheap edge devices available offline with this starter instead of requiring commercial clouds available online

- Provide a modern Jupyter based infrastructure for students of the Stanford CS229 course using Octave as kernel

- Remove some personal rust regarding deep learning, multi ARM ,-) bandits, artificial intelligence in general and have fun

Features

- [x] basics: Prepare hardware including shared shopping list

- [x] basics: Automatically provision requirements on macOS device for development

- [x] basics: Manually provision root os using NVIDIA JetPack and balenaEtcher on Nanos

- [x] basics: Works with latest JetPack 4.2.1 and default container runtime

- [ ] basics: Works with official NVIDIA Container Runtime, NGC and `nvcr.io/nvidia/l4t-base` base image provided by NVIDIA

- [x] basics: Automatically setup secure ssh access

- [x] basics: Automatically setup sudo

- [x] basics: Automatically setup basic packages for development, DevOps and CloudOps

- [x] basics: Automatically setup LXDE for decreased memory consumption

- [x] basics: Automatically setup VNC for headless access

- [x] basics: Automatically setup RDP for headless access

- [x] basics: Automatically setup performance mode including persistent setting of max frequencies for CPU+GPU+EMC to boost throughput

- [x] basics: Automatically build custom kernel as required by Docker + Kubernetes + Weave networking and allowing boot from USB 3.0 / SSD drive

- [x] basics: Automatically provision Jetson Xaviers (in addition to Jetson Nanos) including automatic setup of guest VM for building custom kernel and rootfs and headless oem-setup using a USB-C/serial connection from the guest VM to the Xavier device

- [x] basics: Automatically setup NVMe / SSD drive and use for Docker container images and volumes used by Kubernetes on Jetson Xaviers as part of provisioning

- [x] k8s: Automatically join Kubernetes cluster `max` as worker node labeled as `jetson:true` and `jetson_model:[nano,xavier]` - see Project Max reg. `max`

- [x] k8s: Automatically build and deploy CUDA deviceQuery as pod in Kubernetes cluster to validate access to GPU and correct labeling of jetson based Kubernetes nodes

- [x] k8s: Build and deploy using Skaffold, a custom remote builder for Jetson devices and kustomize for configurable Kubernetes deployments without the need to write Go templates in Helm for Tiller

- [x] k8s: Integrate Container Structure Tests into workflows

- [x] ml: Automatically repack CUDNN, TensorRT and support libraries including python bindings that are bundled with JetPack on Jetson host as deb packages using dpkg-repack as part of provisioning for later use in Docker builds

- [x] ml: Automatically build Docker base image `jetson/[nano,xavier]/ml-base` including CUDA, CUDNN, TensorRT, TensorFlow and Anaconda for arm64

- [x] ml: Automatically build and deploy Jupyter server for data science with support for CUDA accelerated Octave, Seaborn, TensorFlow and Keras as pod in Kubernetes cluster running on jetson node

- [x] ml: Automatically build Docker base image `jetson/[nano,xavier]/tensorflow-serving-base` using bazel and the latest TensorFlow core including support for CUDA capabilities of Jetson edge devices

- [x] ml: Automatically build and deploy simple Python/FastAPI based webservice as facade of TensorFlow Serving for getting predictions including health check for K8s probes, interactive OAS3 API documentation, request/response validation, access of TensorFlow Serving via REST and alternatively gRPC

- [x] ml: Provide variants of Docker images and Kubernetes deployments for Jetson AGX Xavier using auto-activation of Skaffold profiles and Kustomize overlays

- [ ] ml: Build out end to end example application using S3 compatible shared model storage provided by minio for deploying models trained using TensorFlow for inference using TensorFlow serving

- [ ] ml: Automatically setup machine learning workflows using Kubeflow

- [x] optional: Semi-automatically setup USB 3.0 / SSD boot drive given existing installation on micro SD card on Jetson Nanos

- [ ] optional: Automatically cross-build arm64 Docker images on macOS for Jetson devices using buildkit and buildx

- [ ] optional: Automatically setup firewall on host using `ufw` for basic security

- [x] community: Publish versioned images on Docker Hub per release and provide Skaffold profiles to pull from Docker Hub instead of having to build images before deploy in Kubernetes cluster

- [ ] community: Author a series of blog posts explaining how to set up ML in Kubernetes on Jetson devices based on this starter, giving more context and explaining the toolset used.

- [ ] ml: Deploy Polarize.AI ml training and inference tiers on Jetson nodes (separate project)

Hardware

Shopping list (for Jetson Nano)

- Jetson Nano Developer Kit - ca. $110

- Power supply - ca. $14

- Jumper - ca. $12 - you need only one jumper

- micro SDHC card - ca. $10

- Ethernet cable - ca. $4

- optional: M.2/SATA SSD disk - ca. $49

- optional: USB 3.0 to M.2/SATA enclosure - ca. $12

Total ca. $210 including options.

Hints:

- Assumes a USB mouse, USB keyboard, HDMI cable and monitor are available as they are required for the initial boot of the root OS

- Assumes an existing bare-metal Kubernetes cluster including an Ethernet switch to connect to - have a look at Project Max for how to build one with mini PCs or Project Ceil for the Raspberry PI variant.

- Except for inserting the SSD into its enclosure and placing one jumper (as described below) no assembly is required.

Shopping list (for Jetson Xavier)

- Jetson Xavier Developer Kit - ca. $712

- M.2/NVMe SSD disk - ca. $65

Total ca. $777.

Hints:

- A USB mouse, USB keyboard, HDMI cable and monitor are not required for the initial boot of the root OS as headless oem-setup is supported

- Assumes an existing bare-metal Kubernetes cluster including an Ethernet switch to connect to - have a look at Project Max for how to build one with mini PCs or Project Ceil for the Raspberry PI variant.

- Except for installing the NVMe SSD (as described below) no assembly is required.

Install NVMe SSD (required)

As the eMMC soldered onto a Xavier board provides only 32GB, an SSD is required to provide adequate disk space for Docker images and volumes.

- Install the NVMe SSD by connecting it to the M.2 port of the daughterboard as described in this article - you can skip the section about setting up the installed SSD in the referenced article as integration (mounting, creation of filesystem, syncing of files etc.) is done automatically during provisioning as described in part 2 below.

- Provisioning as described below will automatically integrate the SSD to provide the `/var/lib/docker` directory
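After provisioning, a quick way to confirm that `/var/lib/docker` really lives on the larger SSD is to look at the free space of the filesystem behind that path. The following is a minimal sketch using only the Python standard library; the helper names `free_gib` and `has_room` and the 100 GiB threshold in the usage note are assumptions for illustration and not part of this project.

```python
import shutil


def free_gib(path: str) -> float:
    """Return the free space (in GiB) of the filesystem that contains `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return usage.free / 2**30


def has_room(path: str, required_gib: float) -> bool:
    """True if the filesystem behind `path` has at least `required_gib` GiB free."""
    return free_gib(path) >= required_gib
```

On the Xavier you could call `has_room("/var/lib/docker", 100)` (threshold chosen arbitrarily): a result of `False` would hint that Docker still writes to the 32GB eMMC.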

Bootstrap macOS development environment

- Execute `make bootstrap-environment` to install requirements on your macOS device and setup hostnames such as `nano-one.local` in your `/etc/hosts`

Hints:

- During bootstrap you will have to enter the password of the current user on your macOS device multiple times to allow software installation

- The system / security settings of your macOS device must allow installation of software coming from outside of the macOS AppStore

- `make` is used as a facade for all workflows triggered from the development workstation - execute `make help` on your macOS device to list all targets and see the `Makefile` in this directory for details

- Ansible is used for configuration management on macOS and edge devices - all Ansible roles are idempotent and can thus be executed repeatedly, e.g. after you make changes in the configuration in `workflow/provision/group_vars/all.yml`

- Have a look at `workflow/requirements/macOS/ansible/requirements.yml` for a list of packages and applications installed
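To double-check that bootstrap actually registered the device hostnames, the content of `/etc/hosts` can be parsed and compared against the names you expect. This is only a sketch: the functions `parse_hosts` and `missing_hosts` are hypothetical helpers, and the IP address in the usage note is a made-up example.

```python
from typing import Dict, Iterable, List


def parse_hosts(text: str) -> Dict[str, str]:
    """Map every hostname found in /etc/hosts-style text to its IP address."""
    mapping: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # strip comments and whitespace
        if not line:
            continue
        ip, *names = line.split()
        for name in names:
            mapping.setdefault(name, ip)  # first entry wins, as in resolvers
    return mapping


def missing_hosts(text: str, expected: Iterable[str]) -> List[str]:
    """Return the expected hostnames that have no entry in the hosts text."""
    known = parse_hosts(text)
    return [name for name in expected if name not in known]
```

For example, `missing_hosts(open("/etc/hosts").read(), ["nano-one.local", "xavier-one.local"])` returns the names that still need an entry; with a hosts file containing only `192.168.0.20 nano-one.local` (hypothetical address) it would return `["xavier-one.local"]`.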

Provision Jetson devices and join Kubernetes cluster as nodes

Part 1 (for Jetson Nanos): Manually flash root os, create provision account as part of oem-setup and automatically establish secure access

- Execute `make nano-image-download` on your macOS device to download and unzip the NVIDIA JetPack image into `workflow/provision/image/`

- Start the `balenaEtcher` application and flash your micro sd card with the downloaded image

- Wire the nano up with the ethernet switch of your Kubernetes cluster

- Insert the designated micro sd card in your NVIDIA Jetson nano and power up

- Create a user account with username `provision` and "Administrator" rights via the UI and set `nano-one` as hostname - wait until the login screen appears

- Execute `make setup-access-secure` and enter the password you set for the `provision` user in the step above when asked - passwordless ssh access and sudo will be set up

Hints:

- The `balenaEtcher` application was installed as part of bootstrap - see above

- Step 5 requires you to wire up your nano with a USB mouse, keyboard and monitor - after that you can unplug them and use the nano headless via ssh, VNC, RDP or http

- In case of a Jetson Nano you might experience intermittent operation - make sure to provide an adequate power supply - the best option is to put a jumper on pins J48 and use a DC barrel jack power supply (with 5.5mm OD / 2.1mm ID / 9.5mm length, center pin positive) that can supply 5V with 4A - see NVIDIA DevTalk for a schema of the board layout.

- Depending on how the DHCP server of your cluster is configured you might have to adapt the IP address of `nano-one.local` in `workflow/requirements/generic/ansible/playbook.yml` - run `make requirements-hosts` after updating the IP address which in turn updates the `/etc/hosts` file of your mac

- SSH into your Nano using `make nano-one-ssh` - your ssh public key was uploaded in step 6 above so no password is asked for

Part 1 (for Jetson AGX Xaviers): Provision guest VM (Ubuntu), build custom Kernel and rootfs in guest VM, flash Xavier, create provision account on Xavier as part of oem-setup and automatically establish secure access

- Execute `make guest-sdk-manager-download` on your macOS device and follow the instructions shown to download the NVIDIA SDK Manager installer into `workflow/guest/download/` - if you fail to download the NVIDIA SDK Manager you will be instructed in the next step on how to do it.

- Execute `make guest-build` to automatically a) create, boot and provision an Ubuntu guest VM on your macOS device using Vagrant, VirtualBox and Ansible and b) build a custom kernel and rootfs inside the guest VM for flashing the Xavier device - the Linux kernel is built with requirements for Kubernetes + Weave networking - such as activating IP sets and the Open vSwitch datapath module - and SDK components are added to the rootfs for automatic installation during provisioning (see part 2). You will be prompted to download SDK components via the NVIDIA SDK Manager that was automatically installed during provisioning of the guest VM - please do as instructed on-screen.

- Execute `make guest-flash` to flash the Xavier with the custom kernel and rootfs - before execution wire up the Xavier with your macOS device using USB-C and enter the recovery mode by powering up and pressing the buttons as described in the printed user manual that was part of your Jetson AGX Xavier shipment

- Execute `make guest-oem-setup` to start the headless oem-setup process. Follow the on-screen instructions to setup your locale and timezone, create a user account called `provision` and set an initial password - press the reset button of your Xavier after flashing before triggering the oem-setup.

- Execute `make setup-access-secure` and enter the password you set for the user `provision` in the step above when asked - passwordless ssh access and sudo will be set up

Hints:

- There is no need to wire up your Xavier with a USB mouse, keyboard and monitor for oem-setup as headless oem-setup is implemented as described in step 4

- Depending on how the DHCP server of your cluster is configured you might have to adapt the IP address of `xavier-one.local` in `workflow/requirements/generic/ansible/playbook.yml` - you can check the assigned IP after step 4 by logging in as user `provision` with the password you set, executing `ifconfig eth0 | grep 'inet'` and checking the IP address shown - run `make requirements-hosts` after updating the IP address which in turn updates the `/etc/hosts` file of your mac

- SSH into your Xavier using `make xavier-one-ssh` - your ssh public key was uploaded in step 5 above so no password is asked for
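If you prefer not to read the address off the `ifconfig eth0` output by eye, it can be extracted with a small regular expression. The sketch below assumes the two common `ifconfig` output styles (`inet 192.168.0.30 ...` and the older `inet addr:192.168.0.30 ...`); the function name `ipv4_from_ifconfig` and the sample address are hypothetical.

```python
import re
from typing import Optional

# Matches "inet 1.2.3.4" as well as the older "inet addr:1.2.3.4" style.
# The trailing space after "inet" keeps "inet6" lines from matching.
_INET = re.compile(r"\binet (?:addr:)?(\d{1,3}(?:\.\d{1,3}){3})")


def ipv4_from_ifconfig(output: str) -> Optional[str]:
    """Return the first IPv4 address found in `ifconfig` output, or None."""
    match = _INET.search(output)
    return match.group(1) if match else None
```

The returned address is the value to enter for `xavier-one.local` in `workflow/requirements/generic/ansible/playbook.yml` before running `make requirements-hosts`.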

Part 2 (for all Jetson devices): Automatically provision tools, services, kernel and Kubernetes on Jetson host

- Execute `make provision` - amongst others, services will be provisioned, the kernel will be compiled (on Nanos only) and the Kubernetes cluster will be joined

Hints:

- You might want to check settings in `workflow/provision/group_vars/all.yml`

- You might want to use `make provision-nanos` and `make provision-xaviers` to provision one type of Jetson model only

- For Nanos the Linux kernel is built on device during provisioning with requirements for Kubernetes + Weave networking - such as activating IP sets and the Open vSwitch datapath module - thus initial provisioning takes ca. one hour - for Xaviers a custom kernel is built in the guest VM and flashed in part 1 (see above)

- Execute `kubectl get nodes` to check that your edge devices joined your cluster and are ready

- To easily inspect the Kubernetes cluster in depth execute the lovely `click` from the terminal which was installed as part of bootstrap - enter `nodes` to list the nodes with the nano being one of them - see Click for details

- VNC into your Nano or Xavier by starting the VNC Viewer application which was installed as part of bootstrap and connect to `nano-one.local:5901` or `xavier-one.local:5901` respectively - the password is `secret`

- For Xaviers the NVMe SSD is automatically integrated during provisioning to provide `/var/lib/docker`. For Nanos you can optionally use a SATA SSD as boot device as described below.

- If you want to provision step by step execute `make help | grep "provision-"` and execute the desired make target e.g. `make provision-kernel`

- Provisioning is idempotent, so it is safe to repeat provisioning as a whole or individual steps
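The check with `kubectl get nodes` can also be scripted. The sketch below filters the parsed output of `kubectl get nodes -o json` for nodes carrying the `jetson` label and reports their model and readiness. It assumes the labels are stored as `jetson=true` and `jetson_model=nano|xavier`, as described in the feature list; the function name `jetson_nodes` is hypothetical.

```python
from typing import Dict, List


def jetson_nodes(node_list: dict) -> List[Dict[str, object]]:
    """Summarise the Jetson nodes in parsed `kubectl get nodes -o json` output."""
    result = []
    for item in node_list.get("items", []):
        labels = item.get("metadata", {}).get("labels", {})
        if labels.get("jetson") != "true":  # assumed label, see lead-in
            continue
        conditions = item.get("status", {}).get("conditions", [])
        ready = any(
            c.get("type") == "Ready" and c.get("status") == "True"
            for c in conditions
        )
        result.append(
            {
                "name": item["metadata"]["name"],
                "model": labels.get("jetson_model"),
                "ready": ready,
            }
        )
    return result
```

A possible way to feed it is `json.loads(subprocess.check_output(["kubectl", "get", "nodes", "-o", "json"]))`; a node that joined but is listed with `"ready": False` usually warrants a look at the kubelet logs on the device.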

Part 3 (for all devices): Automatically build Docker images and deploy services to Kubernetes cluster

- Execute `make ml-base-build-and-test` once to build the Docker base image `jetson/[nano,xavier]/ml-base`, test via container structure tests and push to the private registry of your cluster - the image includes, amongst others, CUDA, CUDNN, TensorRT, TensorFlow, python bindings and Anaconda - have a look at the directory `workflow/deploy/ml-base` for details - most images below derive from this image

- Execute `make device-query-deploy` to build and deploy a pod into the Kubernetes cluster that queries CUDA capabilities thus validating GPU and Tensor Core access from inside Docker and correct labeling of Jetson/arm64 based Kubernetes nodes - execute `make device-query-log-show` to show the result after deploying

- Execute `make jupyter-deploy` to build and deploy a Jupyter server as a Kubernetes pod running on nano supporting CUDA accelerated TensorFlow + Keras including support for Octave as an alternative Jupyter kernel in addition to IPython - execute `make jupyter-open` to open a browser tab pointing to the Jupyter server to execute the bundled TensorFlow Jupyter notebooks for deep learning

- Execute `make tensorflow-serving-base-build-and-test` once to build the TensorFlow Serving base image `jetson/[nano,xavier]/tensorflow-serving-base`, test via container structure tests and push to the private registry of your cluster - have a look at the directory `workflow/deploy/tensorflow-serving-base` for details - most images below derive from this image

- Execute `make tensorflow-serving-deploy` to build and deploy TensorFlow Serving plus a Python/FastAPI based webservice for getting predictions as a Kubernetes pod running on nano - execute `make tensorflow-serving-docs-open` to open browser tabs pointing to the interactive OAS3 documentation of the webservice API; execute `make tensorflow-serving-health-check` to check the health as used in K8s readiness and liveness probes; execute `make tensorflow-serving-predict` to get predictions
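To illustrate what a prediction request against TensorFlow Serving looks like on the wire, here is a minimal sketch that builds (but does not send) a call to the TensorFlow Serving REST predict endpoint `/v1/models/<name>:predict`. It talks to TensorFlow Serving directly, not to the webservice facade of this project, whose routes may differ. The host, the model name `my_model` and the helper names are assumptions; port 8501 is the TensorFlow Serving default for REST.

```python
import json
import urllib.request
from typing import Any, List


def build_predict_request(
    host: str, model: str, instances: List[Any], port: int = 8501
) -> urllib.request.Request:
    """Build a POST request for the TensorFlow Serving REST predict API."""
    url = f"http://{host}:{port}/v1/models/{model}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predictions(response_body: bytes) -> List[Any]:
    """Extract the `predictions` list from a predict response body."""
    return json.loads(response_body)["predictions"]
```

Sending is then a matter of `urllib.request.urlopen(request).read()` and passing the result to `parse_predictions`. Keeping request construction separate from sending makes the payload easy to inspect when a model rejects the input shape.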

Hints:

- To target Xavier devices for build, test, deploy and publish execute `export JETSON_MODEL=xavier` in your shell before executing the `make ...` commands which will auto-activate the matching Skaffold profiles (see below) - if `JETSON_MODEL` is not set the Nanos will be targeted

- All deployments automatically create a private Kubernetes namespace using the pattern `jetson-$deployment` - e.g. `jetson-jupyter` for the Jupyter deployment - thus easing inspection in the Kubernetes dashboard, `click` or similar

- All deployments provide a target for deletion such as `make device-query-delete` which will automatically delete the respective namespace, persistent volumes, pods, services, ingress and loadbalancer on the cluster

- All builds on the Jetson device run as user `build` which was created during provisioning - use `make nano-one-ssh-build` or `make xavier-one-ssh-build` to login as user `build` to monitor intermediate results

- Remote building on the Jetson device is implemented using Skaffold and a custom builder - have a look at `workflow/deploy/device-query/skaffold.yaml` and `workflow/deploy/tools/builder` for the approach

- Skaffold supports a nice build, deploy, tail, watch cycle - execute `make device-query-dev` as an example

- Containers mount devices `/dev/nv*` at runtime to access the GPU and Tensor Cores - see `workflow/deploy/device-query/kustomize/base/deployment.yaml` for details

- Kubernetes deployments are defined using kustomize - you can thus define kustomize overlays for deployments on other clusters or scale-out easily

- Kustomize overlays can be easily referenced using Skaffold profiles - have a look at `workflow/deploy/device-query/skaffold.yaml` and `workflow/deploy/device-query/kustomize/overlays/xavier` for an example - in this case the `xavier` profile is auto-activated respecting the `JETSON_MODEL` environment variable (see above) with the profile in turn activating the `xavier` Kustomize overlay

- All containers provide targets for Google container structure tests - execute `make device-query-build-and-test` as an example

- For easier consumption all container images are published on Docker Hub - if you want to publish your own create a file called `.docker-hub.auth` in this directory (see `docker-hub.auth.template`) and execute the appropriate make target, e.g. `make ml-base-publish`

- If you did not use Project Max to provision your bare-metal Kubernetes cluster make sure your cluster provides a DNSMASQ and DHCP server, firewalling, VPN and private Docker registry as well as support for Kubernetes features such as persistent volumes, ingress and loadbalancing as required during build and in deployments - adapt occurrences of the name `max-one` accordingly to wire up with your infrastructure

- The webservice of `tensorflow-serving` accesses TensorFlow Serving via its REST API or alternatively via the Python bindings of the gRPC API - have a look at the directory `workflow/deploy/tensorflow-serving/src/webservice` for details of the implementation
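The conventions from the hints above (default to Nanos when `JETSON_MODEL` is unset, one namespace per deployment named `jetson-$deployment`, nodes labeled per model) can be summarised in a few lines. This is an illustrative sketch only and not how the Makefile or Skaffold profiles are implemented; the function names, the rejection of unknown model names and the use of the labels as a node selector are assumptions.

```python
import os
from typing import Dict, Mapping, Optional

SUPPORTED_MODELS = ("nano", "xavier")


def target_model(env: Optional[Mapping[str, str]] = None) -> str:
    """Resolve the targeted Jetson model; Nanos are targeted if unset."""
    env = os.environ if env is None else env
    model = env.get("JETSON_MODEL", "").strip() or "nano"
    if model not in SUPPORTED_MODELS:  # assumption: reject unknown values
        raise ValueError(f"unsupported JETSON_MODEL: {model!r}")
    return model


def namespace_for(deployment: str) -> str:
    """Namespace following the `jetson-$deployment` pattern."""
    return f"jetson-{deployment}"


def node_selector(model: str) -> Dict[str, str]:
    """Labels a pod could select on (assumed to mirror the node labels)."""
    return {"jetson": "true", "jetson_model": model}
```

For instance, with `JETSON_MODEL=xavier` exported, `target_model()` yields `"xavier"` and `namespace_for("jupyter")` yields `"jetson-jupyter"`, which is the namespace to look for in the Kubernetes dashboard or `click`.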

Optional: Configure swap

- Execute `make provision-swap`

Hints:

- You can configure the required swap size in `workflow/provision/group_vars/all.yml`

Optional (Jetson Nanos only): Boot from USB 3.0 SATA SSD - for advanced users only

- Wire up your SATA SSD with one of the USB 3.0 ports, unplug all other block devices connected via USB ports and reboot via `make nano-one-reboot`

- Set the `ssd.id_serial_short` of the SSD in `workflow/provision/group_vars` given the info provided by executing `make nano-one-ssd-id-serial-short-show`

- Execute `make nano-one-ssd-prepare` to assign the stable device name `/dev/ssd`, wipe and partition the SSD, create an ext4 filesystem and sync the micro SD card to the SSD

- Set the `ssd.uuid` of the SSD in `workflow/provision/group_vars` given the info provided by executing `make nano-one-ssd-uuid-show`

- Execute `make nano-one-ssd-activate` to configure the boot menu to use the SSD as the default boot device and reboot

Hints:

- A SATA SSD connected via USB 3.0 is more durable and typically faster by a factor of three relative to a micro sd card - tested using a WD Blue M.2 SSD versus a SanDisk Extreme microSD card

- The micro sd card is mounted as `/mnt/mmc` after step 5 in case you want to update the kernel later which now resides in `/mnt/mmc/boot/Image`

- You can wire up additional block devices via USB again after step 5 as the UUID is used for referencing the SSD designated for boot (wip)

Optional: Cross-build Docker images for Jetson on macOS (wip)

- Execute `make l4t-deploy` to cross-build a Docker image on macOS using buildkit based on the official base image from NVIDIA and deploy - functionality is identical to `device-query` - see above

Hints:

- Have a look at the custom Skaffold builder for Mac in `workflow/deploy/l4t/builder.mac` on how this is achieved

- A `daemon.json` switching on experimental Docker features on your macOS device required for this was automatically installed as part of bootstrap - see above

Optional: Setup firewall (wip)

- Execute `make provision-firewall`

Additional labeled references

- https://developer.nvidia.com/embedded/learn/get-started-jetson-nano-devkit (intro)

- https://developer.nvidia.com/embedded/jetpack (jetpack)

- https://blog.hackster.io/getting-started-with-the-nvidia-jetson-nano-developer-kit-43aa7c298797 (jetpack,vnc)

- https://devtalk.nvidia.com/default/topic/1051327/jetson-nano-jetpack-4-2-firewall-broken-possible-kernel-compilation-issue-missing-iptables-modules/ (jetpack,firewall,ufw,bug)

- https://devtalk.nvidia.com/default/topic/1052748/jetson-nano/egx-nvidia-docker-runtime-on-nano/ (docker,nvidia,missing)

- https://github.com/NVIDIA/nvidia-docker/wiki/NVIDIA-Container-Runtime-on-Jetson#mount-plugins (docker,nvidia)

- https://blog.hypriot.com/post/nvidia-jetson-nano-build-kernel-docker-optimized/ (docker,workaround)

- https://github.com/Technica-Corporation/Tegra-Docker (docker,workaround)

- https://medium.com/@jerry**liang/deploy-gpu-enabled-kubernetes-pod-on-nvidia-jetson-nano-ce738e3bcda9 (k8s)

- https://gist.github.com/buptliuwei/8a340cc151507cb48a071cda04e1f882 (k8s)

- https://github.com/dusty-nv/jetson-inference/ (ml)

- https://docs.nvidia.com/deeplearning/frameworks/install-tf-jetson-platform/index.html (tensorflow)

- https://devtalk.nvidia.com/default/topic/1043951/jetson-agx-xavier/docker-gpu-acceleration-on-jetson-agx-for-ubuntu-18-04-image/post/5296647/#5296647 (docker,tensorflow)

- https://towardsdatascience.com/deploying-keras-models-using-tensorflow-serving-and-flask-508ba00f1037 (tensorflow,keras,serving)

- https://jkjung-avt.github.io/build-tensorflow-1.8.0/ (bazel,build)

- https://oracle.github.io/graphpipe/#/ (tensorflow,serving,graphpipe)

- https://towardsdatascience.com/how-to-deploy-jupyter-notebooks-as-components-of-a-kubeflow-ml-pipeline-part-2-b1df77f4e5b3 (kubeflow,jupyter)

- https://rapids.ai/start.html (rapids ai)

- https://syonyk.blogspot.com/2019/04/nvidia-jetson-nano-desktop-use-kernel-builds.html (boot,usb)

- https://kubeedge.io/en/ (kube,edge)

- https://towardsdatascience.com/bringing-the-best-out-of-jupyter-notebooks-for-data-science-f0871519ca29 (jupyter,data-science)

- https://github.com/jetsistant/docker-cuda-jetpack/blob/master/Dockerfile.base (cuda,deb,alternative-approach)

- https://docs.bazel.build/versions/master/user-manual.html (bazel,manual)

- https://docs.bitnami.com/google/how-to/enable-nvidia-gpu-tensorflow-serving/ (tf-serving,build,cuda)

- https://github.com/tensorflow/serving/blob/master/tensorflow_serving/tools/docker/Dockerfile.devel-gpu (tf-serving,docker)

- https://www.kubeflow.org/docs/components/serving/tfserving_new/ (kubeflow,tf-serving)

- https://www.seldon.io/open-source/ (seldon,ml)

- https://www.electronicdesign.com/industrial-automation/nvidia-egx-spreads-ai-cloud-edge (egx,edge-ai)

- https://min.io/ (s3,lambda)

- https://github.com/NVAITC/ai-lab (ai-lab,container)

- https://www.altoros.com/blog/kubeflow-automating-deployment-of-tensorflow-models-on-kubernetes/ (kubeflow)
