gcarq / Keras Timeseries Prediction

Licence: MIT

Time series prediction with Sequential Model and LSTM units

Stars: ✭ 100

Programming Languages

python

Projects that are alternatives of or similar to Keras Timeseries Prediction

Base-On-Relation-Method-Extract-News-DA-RNN-Model-For-Stock-Prediction--Pytorch

A dual-stage attention model for stock prediction, based on a relation-based news extraction method

Stars: ✭ 33 (-67%)

Mutual labels: prediction, lstm

Stock Price Predictor

This project uses a deep learning model, a Long Short-Term Memory (LSTM) neural network, to predict stock prices.

Stars: ✭ 146 (+46%)

Mutual labels: lstm, prediction

Deep Learning Time Series

List of papers, code and experiments using deep learning for time series forecasting

Stars: ✭ 796 (+696%)

Mutual labels: lstm, prediction

Tensorflow Lstm Sin

TensorFlow 1.3 experiment with LSTM (and GRU) RNNs for sine prediction

Stars: ✭ 52 (-48%)

Mutual labels: lstm, prediction

Ai Reading Materials

ML- and DL-related reading materials and research papers that I've read

Stars: ✭ 79 (-21%)

Mutual labels: lstm, prediction

Lstm chem

Implementation of the paper - Generative Recurrent Networks for De Novo Drug Design.

Stars: ✭ 87 (-13%)

Mutual labels: lstm

Bitcoin Value Predictor

[NOT MAINTAINED] Predicting the Bitcoin price using time series analysis and sentiment analysis of tweets about Bitcoin

Stars: ✭ 91 (-9%)

Mutual labels: prediction

Contextual Utterance Level Multimodal Sentiment Analysis

Context-Dependent Sentiment Analysis in User-Generated Videos

Stars: ✭ 86 (-14%)

Mutual labels: lstm

Stgcn

Implementation of STGCN for traffic prediction (IJCAI 2018)

Stars: ✭ 87 (-13%)

Mutual labels: prediction

Multitask sentiment analysis

Multitask deep learning for sentiment analysis using a character-level language model and Bi-LSTMs for POS tagging, chunking, and unsupervised dependency parsing. Inspired by the paper https://arxiv.org/abs/1611.01587

Stars: ✭ 93 (-7%)

Mutual labels: lstm

Ml

A high-level machine learning and deep learning library for the PHP language.

Stars: ✭ 1,270 (+1170%)

Mutual labels: prediction

Sarcasmdetection

Sarcasm detection on tweets using a neural network

Stars: ✭ 99 (-1%)

Mutual labels: lstm

Self Attention Classification

Document classification using LSTM + self-attention

Stars: ✭ 84 (-16%)

Mutual labels: lstm

Language Translation

Neural machine translator for English-to-German translation.

Stars: ✭ 82 (-18%)

Mutual labels: lstm

Time series predictions with Keras

Requirements

- Theano

- Keras

- matplotlib

- pandas

- scikit-learn

- tqdm

- numpy

Usage

git clone https://github.com/gcarq/keras-timeseries-prediction.git
cd keras-timeseries-prediction/
pip install -r requirements.txt
python main.py

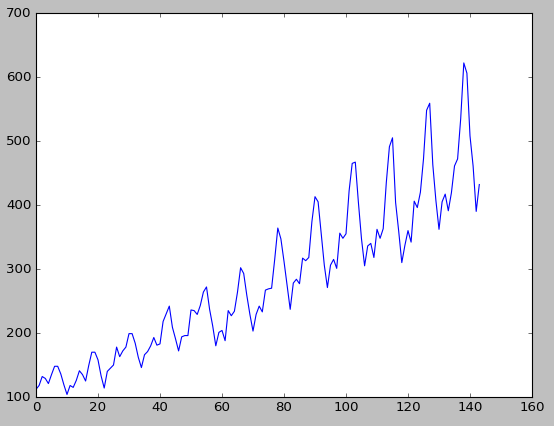

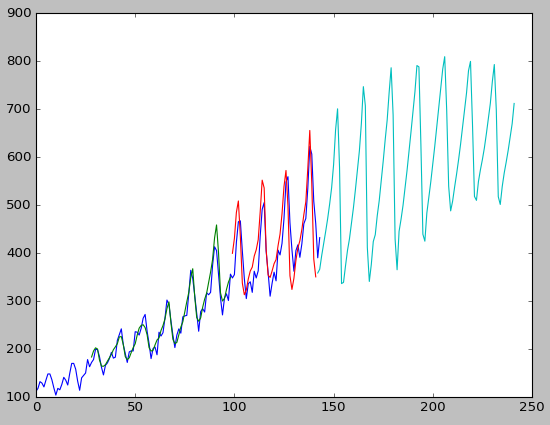

Dataset

The dataset is international-airline-passengers.csv, which contains 144 monthly data points from January 1949 to December 1960.

Each data point is the number of international airline passengers in that month, in thousands.
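Before this series can be fed to the model, it has to be turned into supervised samples whose shape matches `batch_input_shape=(batch_size, look_back, 1)`. The following is a minimal sketch of one way to do that, assuming min-max scaling to [0, 1] and sliding windows in which `look_back` past months predict the next month. The helper names `min_max_scale`, `invert_scale`, `make_windows` and `trim_to_batch`, and the values `look_back=3` and `batch_size=4`, are hypothetical and not taken from `main.py`; scikit-learn's `MinMaxScaler` could replace the hand-written scaler. Reading the CSV is left out, and a stand-in array replaces the real passenger counts.

```python
import numpy as np


def min_max_scale(series):
    """Scale a 1-D series to [0, 1]; also return (lo, hi) so the scaling can be undone."""
    series = np.asarray(series, dtype=np.float64)
    lo, hi = series.min(), series.max()
    return (series - lo) / (hi - lo), (lo, hi)


def invert_scale(scaled, lo, hi):
    """Map scaled values (e.g. model predictions) back to thousands of passengers."""
    return np.asarray(scaled) * (hi - lo) + lo


def make_windows(series, look_back):
    """Build X[i] = series[i:i + look_back] and y[i] = series[i + look_back].

    X is reshaped to (samples, look_back, 1) to match
    batch_input_shape=(batch_size, look_back, 1).
    """
    series = np.asarray(series, dtype=np.float64)
    n = len(series) - look_back
    X = np.stack([series[i:i + look_back] for i in range(n)])
    y = series[look_back:]
    return X.reshape(n, look_back, 1), y


def trim_to_batch(X, y, batch_size):
    """Drop the oldest samples so the sample count is a multiple of batch_size."""
    start = len(X) % batch_size
    return X[start:], y[start:]


# Stand-in data: 144 increasing values instead of the real passenger counts.
values = np.arange(144, dtype=np.float64)
scaled, (lo, hi) = min_max_scale(values)
X, y = make_windows(scaled, look_back=3)   # hypothetical look_back
X, y = trim_to_batch(X, y, batch_size=4)   # hypothetical batch_size
print(X.shape, y.shape)  # (140, 3, 1) (140,)
```

The trimming step exists because a stateful layer with a fixed batch size generally needs the number of samples passed to `fit` to be a multiple of that batch size; trimming from the front keeps the most recent windows.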

Model

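from keras.models import Sequential
from keras.layers import Dense, LSTM

# batch_size and look_back must be defined beforehand;
# stateful=True needs a fixed batch size, hence batch_input_shape.
model = Sequential()
model.add(LSTM(64,
               activation='relu',
               batch_input_shape=(batch_size, look_back, 1),
               stateful=True,
               return_sequences=False))
model.add(Dense(1, activation='linear'))
model.compile(loss='mean_squared_error', optimizer='adam')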
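With `stateful=True`, the hidden and cell states left at the end of one batch become the initial states of the next batch instead of being reset to zero. Training code for such a model therefore typically passes `shuffle=False` to `fit` and resets the states explicitly between epochs (in Keras 2, `model.reset_states()`). The NumPy sketch below illustrates that behaviour with a forward-only LSTM that uses `relu` as its activation, like the layer above. It assumes Keras's gate order (input, forget, cell, output), a logistic sigmoid as the recurrent activation (older Keras versions default to `hard_sigmoid`), and random weights; the class `StatefulLSTM` is hypothetical and is not how Keras implements the layer internally. Its weight count reproduces the 16,896 parameters of `LSTM(64)` on one input feature, 4 × ((1 + 64) × 64 + 64); the `Dense(1)` layer adds 64 + 1 = 65 more.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def relu(x):
    return np.maximum(x, 0.0)


class StatefulLSTM:
    """Hypothetical forward-only LSTM that keeps (h, c) between calls, like stateful=True.

    Gates are laid out as (input, forget, cell, output); relu replaces tanh
    for the cell candidate and the output, mirroring activation='relu'.
    """

    def __init__(self, units, input_dim, batch_size, seed=0):
        rng = np.random.default_rng(seed)
        self.units = units
        self.batch_size = batch_size
        self.W = rng.normal(0.0, 0.1, (input_dim, 4 * units))  # input kernel
        self.U = rng.normal(0.0, 0.1, (units, 4 * units))      # recurrent kernel
        self.b = np.zeros(4 * units)                           # bias
        self.reset_states()

    def reset_states(self):
        """Zero the carried-over states (the analogue of model.reset_states())."""
        self.h = np.zeros((self.batch_size, self.units))
        self.c = np.zeros((self.batch_size, self.units))

    def param_count(self):
        return self.W.size + self.U.size + self.b.size

    def __call__(self, x):
        """x has shape (batch_size, look_back, input_dim); returns the last h
        (return_sequences=False). The states are NOT reset afterwards."""
        u = self.units
        for t in range(x.shape[1]):
            z = x[:, t, :] @ self.W + self.h @ self.U + self.b
            i = sigmoid(z[:, :u])            # input gate
            f = sigmoid(z[:, u:2 * u])       # forget gate
            g = relu(z[:, 2 * u:3 * u])      # cell candidate
            o = sigmoid(z[:, 3 * u:])        # output gate
            self.c = f * self.c + i * g
            self.h = o * relu(self.c)
        return self.h


lstm = StatefulLSTM(units=64, input_dim=1, batch_size=2)
print(lstm.param_count())  # 16896
batch = np.ones((2, 3, 1))
h1 = lstm(batch).copy()  # starts from zero states
h2 = lstm(batch).copy()  # starts from the states left by the previous call
lstm.reset_states()
h3 = lstm(batch)         # zero states again, so h3 equals h1
```

Feeding the same batch twice gives different outputs unless the states are reset in between, which is the effect a stateful training loop has to manage.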

Results