KFNet

This is a TensorFlow implementation of our CVPR 2020 Oral paper, "KFNet: Learning Temporal Camera Relocalization using Kalman Filtering" by Lei Zhou, Zixin Luo, Tianwei Shen, Jiahui Zhang, Mingmin Zhen, Yao Yao, Tian Fang, Long Quan.

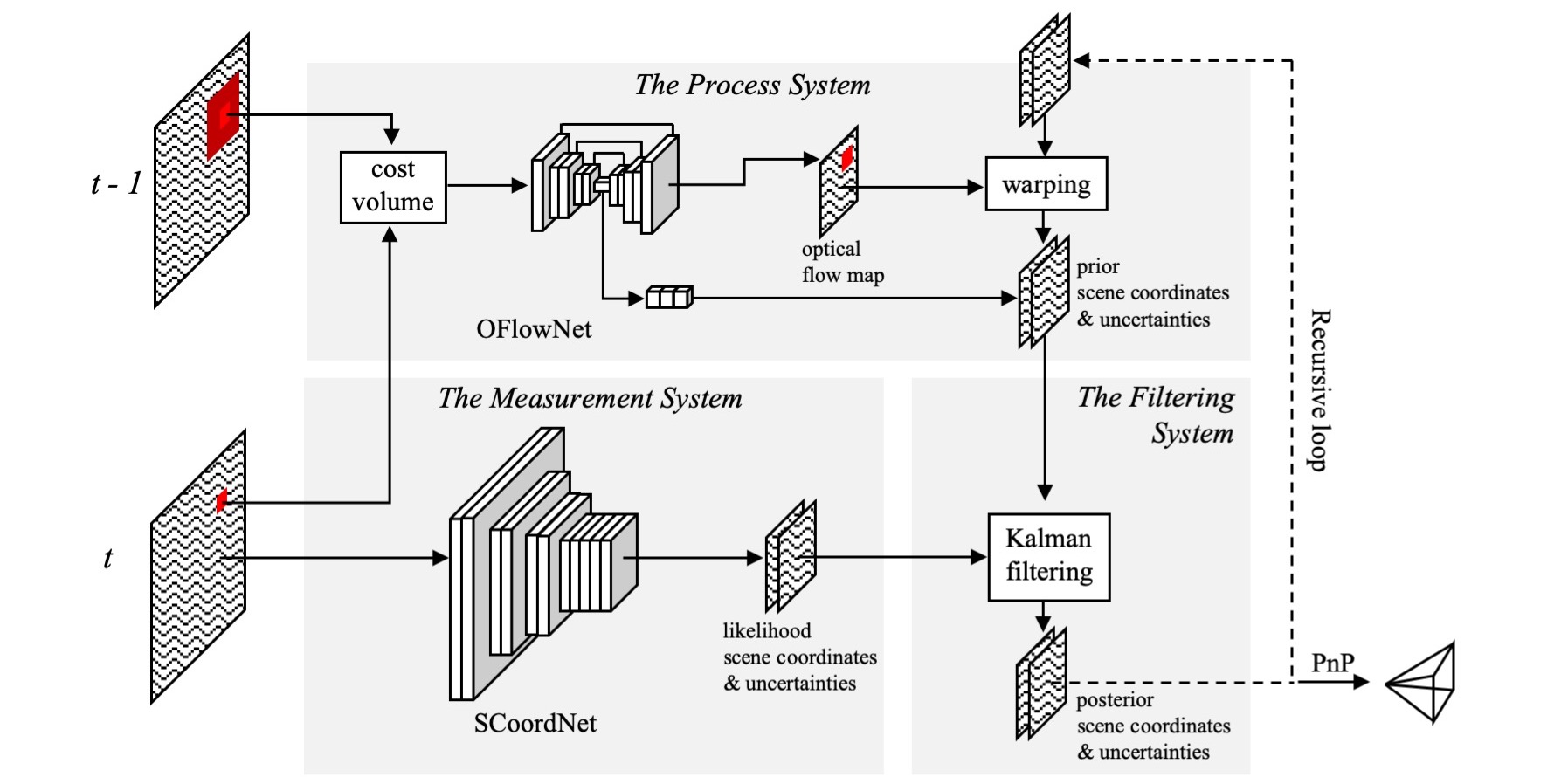

This paper addresses the temporal camera relocalization of time-series image data by folding the scene coordinate regression problem into the principled Kalman filter framework.

If you find this project useful, please cite:

@inproceedings{zhou2020kfnet,

title={KFNet: Learning Temporal Camera Relocalization using Kalman Filtering},

author={Zhou, Lei and Luo, Zixin and Shen, Tianwei and Zhang, Jiahui and Zhen, Mingmin and Yao, Yao and Fang, Tian and Quan, Long},

booktitle={Computer Vision and Pattern Recognition (CVPR)},

year={2020}

}

About

Network architecture

Sample results on 7scenes and 12scenes

KFNet simultaneously predicts the mapping points and camera poses in a temporal fashion within the coordinate system defined by a known scene.

| | DSAC++ | KFNet |
|---|---|---|
| 7scenes-fire | | |
| 12scenes-office2-5a | | |
| Description | Blue - ground truth poses | Red - estimated poses |

Intermediate uncertainty predictions

Below we visualize the measurement and process noise.

| Data | Measurement noise | Process noise |
|---|---|---|
| 7scenes-fire | | |
| 12scenes-office2-5a | | |
| Description | The brighter color means smaller noise. | The figure bar measures the inverse of the covariances (in centimeters). |
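To make the roles of the two noise terms concrete, the sketch below runs one textbook Kalman step per pixel: the process noise inflates the uncertainty of the prior, and the measurement noise determines how strongly the new measurement is trusted. It is a generic illustration that assumes an independent scalar Gaussian variance per pixel and a prior already aligned to the current frame; it is not the exact formulation used in KFNet. The function name `kalman_step` and all array names and values are hypothetical.

```python
import numpy as np


def kalman_step(prev_mean, prev_var, process_var, meas_mean, meas_var):
    """One textbook Kalman step per pixel with scalar variances.

    prev_mean, meas_mean: (H, W, 3) scene coordinates.
    prev_var, process_var, meas_var: (H, W, 1) Gaussian variances.
    Assumption: prev_mean is already aligned to the current frame.
    """
    # Predict: process noise inflates the uncertainty of the prior.
    prior_var = prev_var + process_var
    # Update: the gain weights the measurement by relative certainty.
    gain = prior_var / (prior_var + meas_var)
    post_mean = prev_mean + gain * (meas_mean - prev_mean)
    post_var = (1.0 - gain) * prior_var
    return post_mean, post_var


# Toy data (hypothetical values, not taken from the datasets).
shape = (60, 80)
prev_mean = np.zeros(shape + (3,))
meas_mean = np.ones(shape + (3,))
prev_var = np.full(shape + (1,), 0.03)
process_var = np.full(shape + (1,), 0.01)
meas_var = np.full(shape + (1,), 0.01)

post_mean, post_var = kalman_step(
    prev_mean, prev_var, process_var, meas_mean, meas_var
)
```

With these toy values the measurement is four times more certain than the prior, so the fused estimate moves most of the way toward the measurement and its variance drops below both inputs.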

Intermediate optical flow results on 7scenes, 12scenes, Cambridge and DeepLoc

The process system of KFNet (i.e., OFlowNet) is an essential component: it models pixel transitions across frames through optical flow reasoning, without recourse to ground truth optical flow labels. We visualize the predicted optical flow fields below, suppressing predictions whose uncertainties are too large.

Remark: For DeepLoc, OFlowNet is trained on only the one scene included in the dataset, so the flow predictions appear somewhat messy due to the lack of training data. Training with a larger amount and variety of data would improve the results.

Usage

File format

- Input: The input folder of a project should contain the files below.
  - `image_list.txt`, comprising the full paths of the sequential images, one per line. Please go to the 7scenes dataset to download the source images.
  - `label_list.txt`, comprising the full label paths, one per line, corresponding to the images. The label files are generated by the `tofile()` function of numpy matrices. They have 4 channels: 3 for scene coordinates and 1 for a binary pixel mask. The mask for a pixel is 1 if its label scene coordinates are valid and 0 otherwise. Their resolution is 8 times lower than that of the images. For example, for the 7scenes dataset, the images have a resolution of 480x640, while the label maps have a resolution of 60x80.
  - `transform.txt`, recording the 4x4 Euclidean transformation matrix which decorrelates the scene point cloud to give zero mean and zero correlations.
  - You can download the prepared input label map files of 7scenes from the Google drive links below.

    chess(13G) fire(9G) heads(4G) office(22G) pumpkin(13G) redkitchen(27G) stairs(7G)

- Output: The testing program (introduced below) outputs a 3-d scene coordinate map (in meters) and a 1-d confidence map as a 4-channel numpy matrix for each input image. You can then run the provided PnP program (in `PnP.zip`) or your own algorithms to compute the camera poses from them.
  - The confidences are the inverse of the predicted Gaussian variances / uncertainties. Thus, the larger the confidences, the smaller the variances.
  - You can visualize a scene coordinate map as a point cloud via Open3d by running `python vis/vis_scene_coordinate_map.py <path_to_npy_file>`.
  - Or you can visualize a streaming scene coordinate map list by running `python vis/vis_scene_coordinate_map_list.py <path_to_npy_list>`.
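The file formats above can be read back with a few lines of numpy. The sketch below is only an illustration under stated assumptions: `tofile()` stores neither shape nor dtype, so `float32` and the 60x80x4 layout (7scenes) have to be supplied by the reader, and the channel order (3 coordinate channels first, then the mask or confidence channel) is assumed from the description rather than confirmed. The helper names `load_label` and `split_output` are hypothetical.

```python
import os
import tempfile

import numpy as np

LABEL_H, LABEL_W, LABEL_C = 60, 80, 4  # 7scenes label maps, per the text


def load_label(path, dtype=np.float32):
    """Read a raw label file written by ndarray.tofile().

    Assumptions: float32 data, channels ordered as (x, y, z, mask).
    """
    data = np.fromfile(path, dtype=dtype)
    label = data.reshape(LABEL_H, LABEL_W, LABEL_C)
    coords = label[..., :3]       # scene coordinates
    mask = label[..., 3] > 0.5    # True where the coordinates are valid
    return coords, mask


def split_output(output, eps=1e-12):
    """Split a 4-channel output map into coordinates and variances.

    Assumption: channels ordered as (x, y, z, confidence).
    """
    coords = output[..., :3]
    confidence = output[..., 3]
    # Confidence is the inverse variance; eps guards against division by zero.
    variance = 1.0 / np.maximum(confidence, eps)
    return coords, variance


# Round trip on a synthetic label so the sketch runs without the dataset.
demo = np.zeros((LABEL_H, LABEL_W, LABEL_C), dtype=np.float32)
demo[..., :3] = 1.5
demo[:30, :, 3] = 1.0  # mark the top half as valid
demo_path = os.path.join(tempfile.mkdtemp(), "demo_label.bin")
demo.tofile(demo_path)
coords, mask = load_label(demo_path)
```

An output map saved as an npy file would be loaded with `np.load(<path_to_npy_file>)` and then passed to `split_output`.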

Environment

- The code is tested with

- python 2.7,

- tensorflow-gpu 1.10~1.13 (inclusive),

- corresponding versions of CUDA and CUDNN to enable tensorflow-gpu (see link for reference of the version combinations),

- other python packages including numpy, matplotlib and open3d.

- To directly install tensorflow and other python packages, run

sudo pip install -r requirements.txt

- If you are familiar with Conda, you can create the environment for KFNet by running

conda env create -f environment.yml

conda activate KFNet

Testing

- Download

You can download the trained models of 7scenes from the Google drive link (3G).

- Test SCoordNet

git checkout SCoordNet

python SCoordNet/eval.py --input_folder <input_folder> --output_folder <output_folder> --model_folder <model_folder> --scene <scene>

# <scene> = chess/fire/heads/office/pumpkin/redkitchen/stairs, i.e., one of the scene names of 7scenes dataset

- Test OFlowNet

git checkout OFlowNet

python OFlowNet/eval.py --input_folder <input_folder> --output_folder <output_folder> --model_folder <model_folder>

The testing program of OFlowNet will save the 2-d optical flows and 1-d uncertainties of consecutive image pairs as npy files of the dimension 60x80x3. You can visualize the flow results by running scripts vis/vis_optical_flow.py and vis/vis_optical_flow_list.py.

- Test KFNet

git checkout master

python KFNet/eval.py --input_folder <input_folder> --output_folder <output_folder> --model_folder <model_folder> --scene <scene>

- Run PnP to compute camera poses

unzip PnP.zip && cd PnP

python main.py <path_to_output_file_list> <output_folder> --gt <path_to_ground_truth_pose_list> --thread_num <32>

// Please note that you need to install git-lfs before cloning to get PnP.zip, since the zip file is stored via LFS.
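The OFlowNet results described above (60x80x3 npy files holding a 2-d flow and a 1-d uncertainty per pixel) can also be inspected directly. The sketch below suppresses flow vectors whose uncertainty is too large, similar in spirit to the visualizations in this README. The channel order (flow in the first two channels, uncertainty in the third), the helper name `mask_flow` and the threshold value are assumptions for illustration, not taken from the repository scripts.

```python
import numpy as np


def mask_flow(result, max_uncertainty):
    """Split an (H, W, 3) OFlowNet result and hide uncertain pixels.

    Assumption: channels ordered as (flow_x, flow_y, uncertainty).
    Returns the flow with uncertain pixels set to NaN, and the keep mask.
    """
    flow = result[..., :2]
    uncertainty = result[..., 2]
    keep = uncertainty <= max_uncertainty
    masked_flow = np.where(keep[..., None], flow, np.nan)
    return masked_flow, keep


# Synthetic stand-in for np.load(<path_to_flow_npy_file>).
result = np.zeros((60, 80, 3), dtype=np.float32)
result[..., 0] = 2.0       # constant horizontal flow
result[0, 0, 2] = 10.0     # one pixel with a very large uncertainty

masked_flow, keep = mask_flow(result, max_uncertainty=1.0)
```

Using NaN for the suppressed pixels keeps the array shape intact, so the result can still be passed to plotting code that skips non-finite values.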

Training

The training procedure has 3 stages.

- Train SCoordNet for each scene independently.

git checkout SCoordNet

python SCoordNet/train.py --input_folder <input_folder> --model_folder <scoordnet_model_folder> --scene <scene>

- Train OFlowNet using all the image sequences, which are not limited to any specific scene; for example, concatenate all the `image_list.txt` and `label_list.txt` files of 7scenes for training.

git checkout OFlowNet

python OFlowNet/train.py --input_folder <input_folder> --model_folder <oflownet_model_folder>

- Train KFNet for each scene from the pre-trained SCoordNet and OFlowNet models to jointly finetune their parameters.

git checkout master

python KFNet/train.py --input_folder <input_folder> --model_folder <model_folder> --scoordnet <scoordnet_model_folder> --oflownet <oflownet_model_folder> --scene <scene>
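For the second stage above, the per-scene list files have to be merged into one input folder. A minimal sketch of that concatenation is given below, assuming each scene folder holds its own `image_list.txt` and `label_list.txt` with matching line counts. The helper name `concat_lists` and the folder layout are hypothetical, and how the training code treats the boundaries between sequences is not covered here.

```python
import os
import tempfile

LIST_NAMES = ("image_list.txt", "label_list.txt")


def concat_lists(scene_dirs, out_dir):
    """Concatenate per-scene list files into out_dir, keeping scene order."""
    counts = {}
    for name in LIST_NAMES:
        lines = []
        for scene_dir in scene_dirs:
            with open(os.path.join(scene_dir, name)) as f:
                lines.extend(line.strip() for line in f if line.strip())
        with open(os.path.join(out_dir, name), "w") as f:
            f.write("\n".join(lines) + "\n")
        counts[name] = len(lines)
    # Images and labels must stay aligned line by line.
    if counts[LIST_NAMES[0]] != counts[LIST_NAMES[1]]:
        raise ValueError("image and label lists differ in length")
    return counts


# Toy demo with two fake scenes so the sketch runs without the dataset.
root = tempfile.mkdtemp()
scene_dirs = []
for scene in ("chess", "fire"):
    scene_dir = os.path.join(root, scene)
    os.makedirs(scene_dir)
    for name, ext in zip(LIST_NAMES, (".png", ".bin")):
        with open(os.path.join(scene_dir, name), "w") as f:
            f.write("\n".join("/data/%s/%d%s" % (scene, i, ext) for i in range(3)) + "\n")
    scene_dirs.append(scene_dir)

out_dir = os.path.join(root, "all_scenes")
os.makedirs(out_dir)
counts = concat_lists(scene_dirs, out_dir)
```

The merged folder can then be passed as `<input_folder>` to the OFlowNet training command.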

Credit

This implementation was developed by Lei Zhou. Feel free to contact Lei for any enquiry.