kuixu / Kitti_object_vis

License: MIT

KITTI Object Visualization (Birdview, Volumetric LiDAR point cloud)

Stars: ✭ 467

KITTI Object data transformation and visualization

Dataset

Download the data (calib, image_2, label_2, velodyne) from the KITTI Object Detection Dataset and place it in your data folder at kitti/object.

The folder structure is as follows:

kitti
    object
        testing
            calib
            image_2
            label_2
            velodyne
        training
            calib
            image_2
            label_2
            velodyne
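Before running the visualizer, it can help to confirm that the folders are where the tree above says they should be. The following is a minimal sketch, not part of the repository; the function name `missing_kitti_dirs` is made up for illustration, and the root path is the `kitti/object` location suggested above.

```python
from pathlib import Path

# Sub-folders and splits as listed in the folder tree above.
EXPECTED_SUBDIRS = ("calib", "image_2", "label_2", "velodyne")
SPLITS = ("training", "testing")


def missing_kitti_dirs(root):
    """Return the expected directories that do not exist under `root`."""
    root = Path(root)
    missing = []
    for split in SPLITS:
        for sub in EXPECTED_SUBDIRS:
            path = root / split / sub
            if not path.is_dir():
                missing.append(path)
    return missing


if __name__ == "__main__":
    # Adjust the root if your data lives elsewhere.
    for path in missing_kitti_dirs("kitti/object"):
        print(f"missing: {path}")
```

An empty result means every folder in the tree was found; it does not check the files inside them.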

Install locally on an Ubuntu 16.04 PC with GUI

- start from a new conda environment:

(base)$ conda create -n kitti_vis python=3.7 # vtk does not support python 3.8

(base)$ conda activate kitti_vis

- install opencv, pillow, scipy and matplotlib with pip

(kitti_vis)$ pip install opencv-python pillow scipy matplotlib

- install mayavi from conda-forge; this installs vtk and pyqt5 automatically

(kitti_vis)$ conda install mayavi -c conda-forge

- test installation

(kitti_vis)$ python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis

Note: the above installation has been tested and does not work on macOS.
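If the test command fails, a quick way to narrow it down is to check which of the packages installed above can be located in the active environment. This is a rough sketch using only the standard library; `find_missing_modules` is a hypothetical helper, and the module names are the import names of the packages above (opencv-python imports as `cv2`, pillow as `PIL`).

```python
import importlib.util

# Import names of the packages installed in the steps above.
REQUIRED_MODULES = ("cv2", "PIL", "scipy", "matplotlib", "vtk", "mayavi")


def find_missing_modules(names=REQUIRED_MODULES):
    """Return the module names that cannot be located in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing_modules()
    print("all packages found" if not missing else f"missing: {missing}")
```

This only checks that the modules can be found; it does not verify that a GUI backend is usable.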

Install remotely

Please refer to the jupyter folder for installing on a remote server and visualizing in Jupyter Notebook.

Visualization

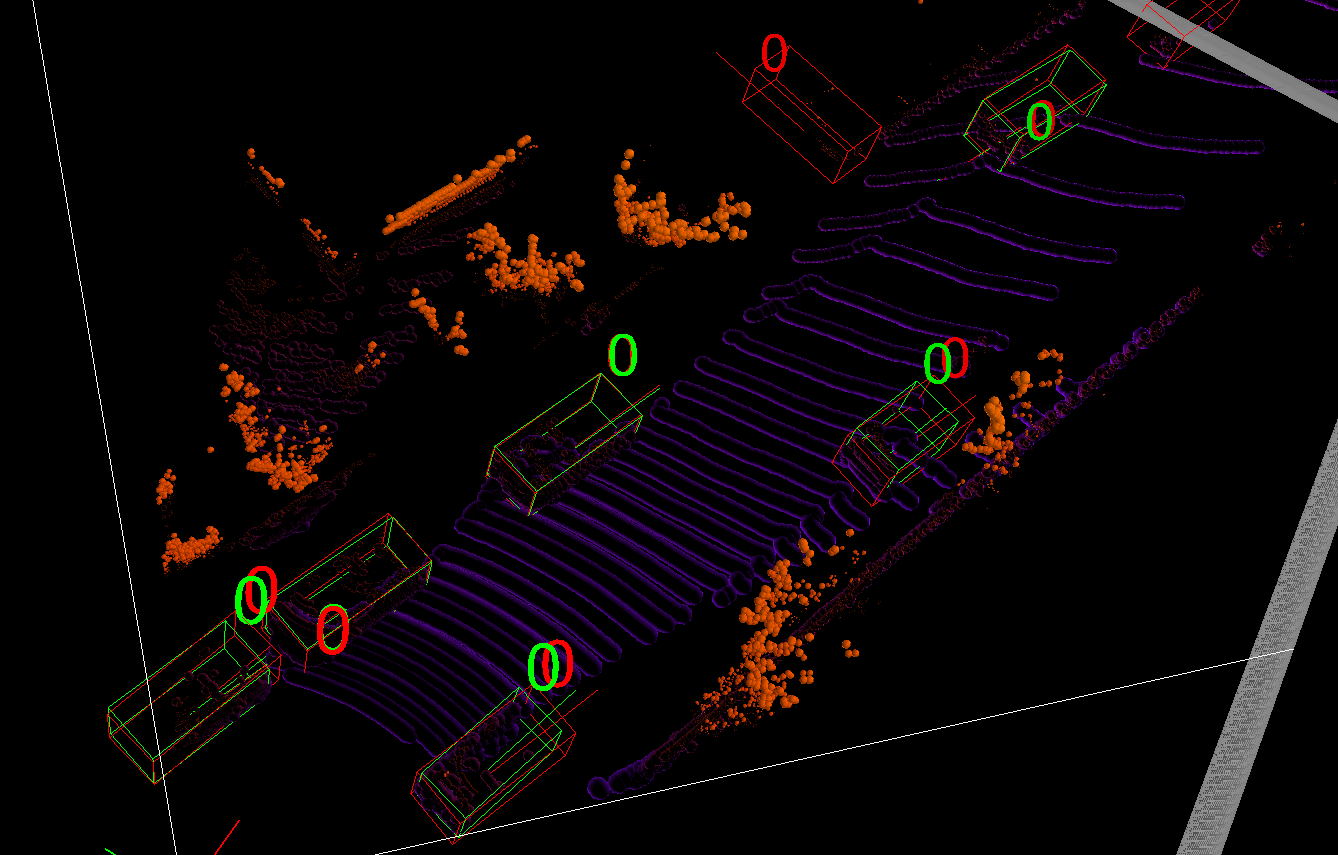

- 3D boxes on LiDAR point cloud in volumetric mode

- 2D and 3D boxes on Camera image

- 2D boxes on LiDAR Birdview

- LiDAR data on Camera image
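Drawing LiDAR data on the camera image comes down to projecting each point through the KITTI calibration: first the LiDAR-to-camera transform, then the rectifying rotation, then the camera projection matrix. The sketch below shows that chain with NumPy. It is an illustration under stated assumptions, not the code in kitti_object.py: the function name is made up, the three matrices are assumed to be already parsed from a calib file into the shapes given in the docstring, and the calibration values in the example are toy numbers.

```python
import numpy as np


def project_velo_to_image(pts_velo, P2, R0_rect, Tr_velo_to_cam):
    """Project (N, 3) LiDAR points to pixels.

    P2 is the 3x4 camera projection matrix, R0_rect the 3x3 rectifying
    rotation, Tr_velo_to_cam the 3x4 LiDAR-to-camera transform.
    Returns (uv, depth): (N, 2) pixel coordinates and (N,) depths in the
    rectified camera frame. Points with depth <= 0 are behind the camera
    and should be discarded by the caller.
    """
    ones = np.ones((pts_velo.shape[0], 1))
    pts_cam = np.hstack([pts_velo, ones]) @ Tr_velo_to_cam.T   # (N, 3)
    pts_rect = pts_cam @ R0_rect.T                              # (N, 3)
    img = np.hstack([pts_rect, ones]) @ P2.T                    # (N, 3)
    uv = img[:, :2] / img[:, 2:3]
    return uv, pts_rect[:, 2]


if __name__ == "__main__":
    # Toy calibration for illustration only, not values from a KITTI file.
    P2 = np.array([[700.0, 0.0, 600.0, 0.0],
                   [0.0, 700.0, 180.0, 0.0],
                   [0.0, 0.0, 1.0, 0.0]])
    R0_rect = np.eye(3)
    # LiDAR axes (x forward, y left, z up) to camera axes
    # (x right, y down, z forward).
    Tr_velo_to_cam = np.array([[0.0, -1.0, 0.0, 0.0],
                               [0.0, 0.0, -1.0, 0.0],
                               [1.0, 0.0, 0.0, 0.0]])
    pts = np.array([[10.0, 0.0, 0.0], [10.0, 1.0, 0.0]])
    uv, depth = project_velo_to_image(pts, P2, R0_rect, Tr_velo_to_cam)
    print(uv, depth)
```

After projection, points are usually filtered to those with positive depth whose pixel coordinates fall inside the image.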

$ python kitti_object.py --help

usage: kitti_object.py [-h] [-d N] [-i N] [-p] [-s] [-l N] [-e N] [-r N]
                       [--gen_depth] [--vis] [--depth] [--img_fov]
                       [--const_box] [--save_depth] [--pc_label]
                       [--show_lidar_on_image] [--show_lidar_with_depth]
                       [--show_image_with_boxes]
                       [--show_lidar_topview_with_boxes]

KIITI Object Visualization

optional arguments:
  -h, --help            show this help message and exit
  -d N, --dir N         input (default: data/object)
  -i N, --ind N         input (default: data/object)
  -p, --pred            show predict results
  -s, --stat            stat the w/h/l of point cloud in gt bbox
  -l N, --lidar N       velodyne dir (default: velodyne)
  -e N, --depthdir N    depth dir (default: depth)
  -r N, --preddir N     predicted boxes (default: pred)
  --gen_depth           generate depth
  --vis                 show images
  --depth               load depth
  --img_fov             front view mapping
  --const_box           constraint box
  --save_depth          save depth into file
  --pc_label            5-verctor lidar, pc with label
  --show_lidar_on_image
                        project lidar on image
  --show_lidar_with_depth
                        --show_lidar, depth is supported
  --show_image_with_boxes
                        show lidar
  --show_lidar_topview_with_boxes
                        show lidar topview
  --split               use training split or testing split (default: training)

$ python kitti_object.py

Specify your own folder:

$ python kitti_object.py -d /path/to/kitti/object

Show LiDAR only

$ python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis

Show LiDAR and image

$ python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis --show_image_with_boxes

Show LiDAR and image with a specific index

$ python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis --show_image_with_boxes --ind 100
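KITTI object files are named with six-digit, zero-padded frame numbers, so index 100 would correspond to files such as 000100.png. The sketch below builds the four per-frame paths for an index, assuming that mapping and the folder layout described earlier. `frame_paths` is a hypothetical helper, not a function from this repository.

```python
from pathlib import Path


def frame_paths(root, index, split="training"):
    """Return the per-frame file paths for a KITTI object frame index."""
    base = Path(root) / split
    stem = f"{index:06d}"  # six-digit, zero-padded file name
    return {
        "calib": base / "calib" / f"{stem}.txt",
        "image": base / "image_2" / f"{stem}.png",
        "label": base / "label_2" / f"{stem}.txt",
        "lidar": base / "velodyne" / f"{stem}.bin",
    }


if __name__ == "__main__":
    for name, path in frame_paths("kitti/object", 100).items():
        print(name, path)
```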

Show LiDAR with label (5 vector)

$ python kitti_object.py --show_lidar_with_depth --img_fov --const_box --vis --pc_label
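A standard KITTI velodyne .bin file is a flat array of float32 values, four per point (x, y, z, reflectance). The sketch below reads such a file with a configurable number of values per point. Passing `n_vec=5` for labelled point clouds is an assumption based on the `--pc_label` help text ("pc with label"); what the fifth value means is not specified here. `load_velo_scan` is an illustrative name.

```python
import numpy as np


def load_velo_scan(path, n_vec=4):
    """Read a LiDAR .bin file into an (N, n_vec) float32 array."""
    scan = np.fromfile(path, dtype=np.float32)
    if scan.size % n_vec != 0:
        raise ValueError(
            f"{path}: {scan.size} values do not divide into {n_vec}-vectors"
        )
    return scan.reshape(-1, n_vec)
```

The size check catches the common mistake of reading a 5-vector file as 4-vectors (or the reverse) when the point count happens not to divide evenly. It cannot catch every mismatch.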

Demo

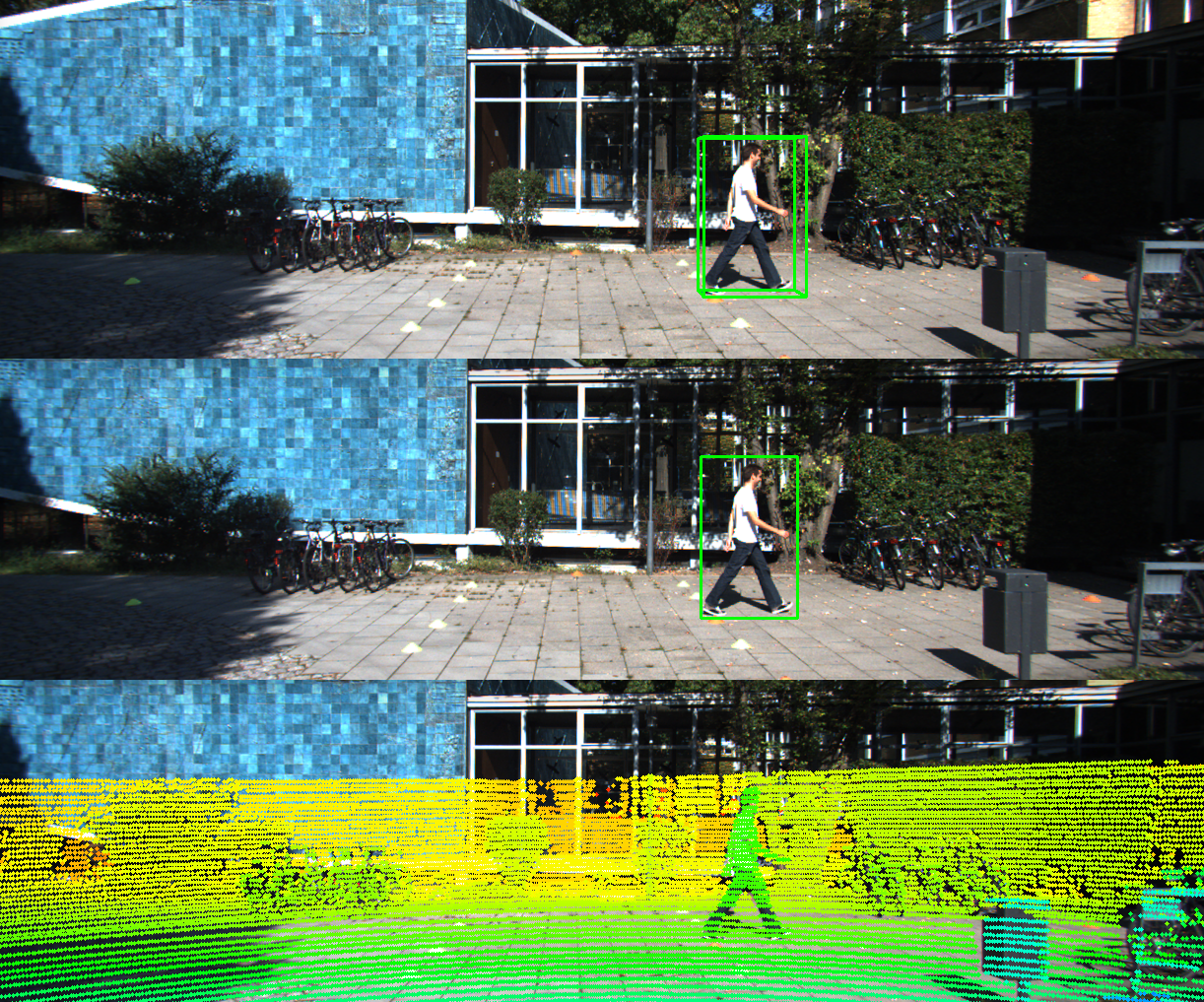

2D, 3D boxes and LiDAR data on Camera image

Credit: @yuanzhenxun

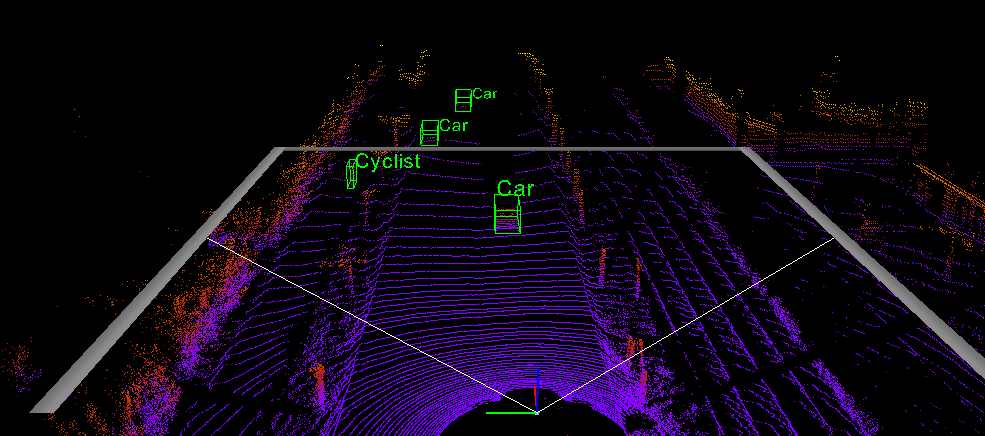

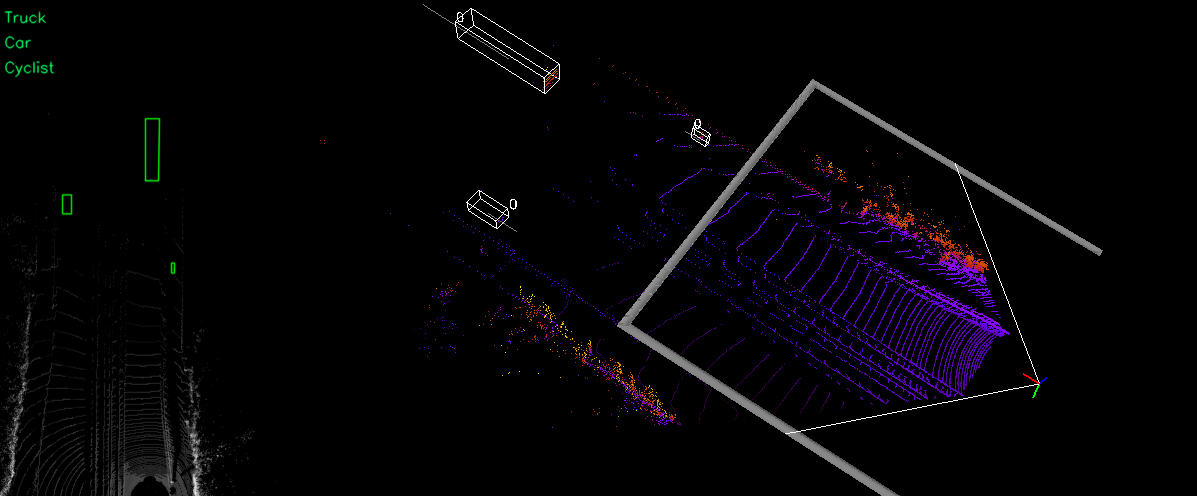

LiDAR birdview and point cloud (3D)

Show Predicted Results

First, map the results in the official KITTI format into the data directory:

./map_pred.sh /path/to/results

python kitti_object.py -p --vis
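In the official KITTI object format, each line of a result file describes one object: type, truncation, occlusion, alpha, 2D box (left, top, right, bottom), 3D dimensions (height, width, length), 3D location in camera coordinates, rotation around the Y axis, and a confidence score. Ground-truth label files use the same layout without the score. The parser below is a minimal sketch of that layout. The class and function names are illustrative and are not the ones used in this repository.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class KittiObject:
    type: str
    truncated: float
    occluded: int
    alpha: float
    bbox: Tuple[float, ...]        # left, top, right, bottom (pixels)
    dimensions: Tuple[float, ...]  # height, width, length (metres)
    location: Tuple[float, ...]    # x, y, z in camera coordinates (metres)
    rotation_y: float
    score: Optional[float]         # present in result files only


def parse_kitti_line(line):
    """Parse one line of a KITTI label (15 fields) or result (16 fields) file."""
    fields = line.split()
    if len(fields) not in (15, 16):
        raise ValueError(f"expected 15 or 16 fields, got {len(fields)}")
    v = [float(x) for x in fields[1:]]
    return KittiObject(
        type=fields[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        bbox=tuple(v[3:7]),
        dimensions=tuple(v[7:10]),
        location=tuple(v[10:13]),
        rotation_y=v[13],
        score=v[14] if len(v) == 15 else None,
    )
```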

Acknowledgement

Code is mainly from f-pointnet and MV3D.
