Joker316701882 / Additive Margin Softmax


Additive-Margin-Softmax

This is an implementation of the paper "Additive Margin Softmax for Face Verification".

The training logic is heavily inspired by Sandberg's FaceNet implementation; check it out if you are interested.

The model structure can be found in ./models/resface.py, and the loss head in AM-softmax.py.
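For reference, the AM-Softmax loss from the paper L2-normalizes both the features and the class weights so that every logit is a cosine, then replaces the target-class logit with s·(cos θ_y − m) and scales the other logits to s·cos θ_j before a standard softmax cross-entropy. The NumPy sketch below only illustrates that formula; it is not the TensorFlow code in AM-softmax.py, and the function name and the defaults s = 30, m = 0.35 are assumptions chosen for illustration.

```python
import numpy as np

def am_softmax_loss(embeddings, weights, labels, s=30.0, m=0.35):
    """Additive margin softmax loss, averaged over the batch (illustrative sketch).

    embeddings: (batch, dim) features produced by the network
    weights:    (dim, num_classes) class weight matrix of the loss head
    labels:     (batch,) integer class ids
    s, m:       scale and additive margin (assumed defaults)
    """
    # L2-normalize features (rows) and class weights (columns) so logits are cosines
    x = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    w = weights / np.linalg.norm(weights, axis=0, keepdims=True)
    cos = x @ w  # (batch, num_classes), values in [-1, 1]

    # Subtract the margin only from the target-class cosine, then scale everything by s
    rows = np.arange(len(labels))
    logits = cos.copy()
    logits[rows, labels] -= m
    logits *= s

    # Numerically stable softmax cross-entropy on the modified logits
    logits -= logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-log_probs[rows, labels].mean())
```

Because the margin is applied only to the target cosine, a sample reaches the same loss as under plain normalized softmax only when its target cosine exceeds the competing cosines by roughly m, which is what pulls same-identity features closer together.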

Usage

Step 1: Align Dataset

See the "align" folder, which is forked entirely from insightface. The default image size is (112,96), and all training faces in this repository share that size. Use the alignment code to align your training data and validation data (such as LFW) first. align_lfw.py can align both the training set and LFW; you can ignore the other scripts such as align_insight and align_dlib.

python align_lfw.py --input-dir [train data dir] --output-dir [aligned output dir]
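Alignment of this kind typically warps each face so that five detected landmarks (two eyes, nose tip, two mouth corners) land on fixed template positions inside the 112x96 crop, using a similarity transform (rotation, uniform scale, translation). The sketch below estimates that transform with the Umeyama least-squares method in NumPy. It is not the code in the align folder: the template coordinates are assumed values (the 96x112 five-point template commonly used for insightface/SphereFace-style alignment), `estimate_similarity` is a hypothetical helper, and landmark detection is left out.

```python
import numpy as np

# Assumed 5-point template for a 112x96 (height x width) crop, as (x, y):
# left eye, right eye, nose tip, left mouth corner, right mouth corner.
REFERENCE_5PTS = np.array([
    [30.2946, 51.6963],
    [65.5318, 51.5014],
    [48.0252, 71.7366],
    [33.5493, 92.3655],
    [62.7299, 92.2041],
])

def estimate_similarity(src, dst):
    """Least-squares similarity transform (Umeyama) mapping src points onto dst.

    Returns a 2x3 matrix M such that dst ~= src @ M[:, :2].T + M[:, 2].
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centered point sets
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)

    # Guard against reflections so R is a proper rotation (det = +1)
    D = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[1, 1] = -1
    R = U @ D @ Vt

    # Uniform scale and translation from the Umeyama closed form
    var_src = (src_c ** 2).sum() / len(src)
    scale = np.trace(np.diag(S) @ D) / var_src
    t = mu_dst - scale * R @ mu_src
    return np.hstack([scale * R, t[:, None]])
```

Given detected landmarks `pts` (a hypothetical 5x2 array in the same order), `M = estimate_similarity(pts, REFERENCE_5PTS)` can then be applied with OpenCV, e.g. `cv2.warpAffine(img, M, (96, 112))`, where the size argument is (width, height).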

Step 2: Train AM-softmax

Read the parse_arguments() function carefully to configure the parameters. If you are new to face recognition, simply run the command below after aligning the dataset; the default settings take care of the rest.

python train.py --data_dir [aligned train data] --random_flip --learning_rate -1 --learning_rate_schedule_file ./data/learning_rate_AM_softmax.txt --lfw_dir [aligned lfw data] --keep_probability 0.8 --weight_decay 5e-4
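In Sandberg's facenet, a negative --learning_rate makes the training script read the rate from --learning_rate_schedule_file instead of using a fixed value; train.py presumably follows the same convention, but check parse_arguments() and the training loop to confirm. The sketch below parses a schedule assumed to use facenet's `epoch: learning_rate` line format. The function name and the example values are hypothetical and are not the contents of ./data/learning_rate_AM_softmax.txt.

```python
def learning_rate_for_epoch(schedule_lines, epoch):
    """Return the learning rate for `epoch` from an `epoch: lr` schedule.

    Assumed format (hypothetical, modeled on facenet schedule files): each
    non-comment line is "<start_epoch>: <learning_rate>", sorted by epoch, and
    text after '#' is ignored. The rate of the last entry whose start_epoch is
    <= epoch applies. Returns None if epoch precedes the first entry.
    """
    learning_rate = None
    for raw in schedule_lines:
        # Drop comments and surrounding whitespace; skip empty lines
        line = raw.split('#', 1)[0].strip()
        if not line:
            continue
        start, rate = (part.strip() for part in line.split(':', 1))
        if int(start) <= epoch:
            learning_rate = float(rate)
        else:
            break  # entries are sorted, so later ones start even later
    return learning_rate


# Hypothetical step schedule: 0.1, then 0.01 from epoch 20, then 0.001 from epoch 30
schedule = """
# epoch: learning rate
0: 0.1
20: 0.01
30: 0.001
""".splitlines()
```

With this example, `learning_rate_for_epoch(schedule, 25)` returns 0.01. A step schedule like this is a common choice for SGD with momentum, where the rate is cut by a constant factor once the loss plateaus.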

Also note that the accuracy reported on LFW is not computed with cross-validation; read the source code for more details. Thanks again to Sandberg for his extraordinary code.
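To make the caveat concrete: if the decision threshold is tuned on the same LFW pairs it is scored on, the accuracy is optimistic compared with a k-fold protocol, where the threshold is picked on k-1 folds and evaluated on the held-out one. The NumPy sketch below contrasts the two. It assumes per-pair similarity scores are already computed, uses simple contiguous folds, and is not the evaluation code in this repo; both function names are hypothetical.

```python
import numpy as np

def best_threshold_accuracy(similarities, is_same):
    """Tune the threshold on the same pairs it is scored on (optimistic estimate)."""
    similarities = np.asarray(similarities, dtype=float)
    is_same = np.asarray(is_same, dtype=bool)
    best_acc, best_t = -1.0, None
    # Every observed score is a candidate threshold: predict "same" if score >= t
    for t in np.unique(similarities):
        acc = float(np.mean((similarities >= t) == is_same))
        if acc > best_acc:
            best_acc, best_t = acc, t
    return best_acc, best_t

def kfold_accuracy(similarities, is_same, n_folds=10):
    """Pick the threshold on n_folds-1 folds, score it on the held-out fold, average."""
    similarities = np.asarray(similarities, dtype=float)
    is_same = np.asarray(is_same, dtype=bool)
    folds = np.array_split(np.arange(len(similarities)), n_folds)
    accs = []
    for k, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != k])
        _, t = best_threshold_accuracy(similarities[train_idx], is_same[train_idx])
        accs.append(np.mean((similarities[test_idx] >= t) == is_same[test_idx]))
    return float(np.mean(accs))
```

On real data the first number is usually at least as high as the second, since the threshold never has to generalize to unseen pairs.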

News

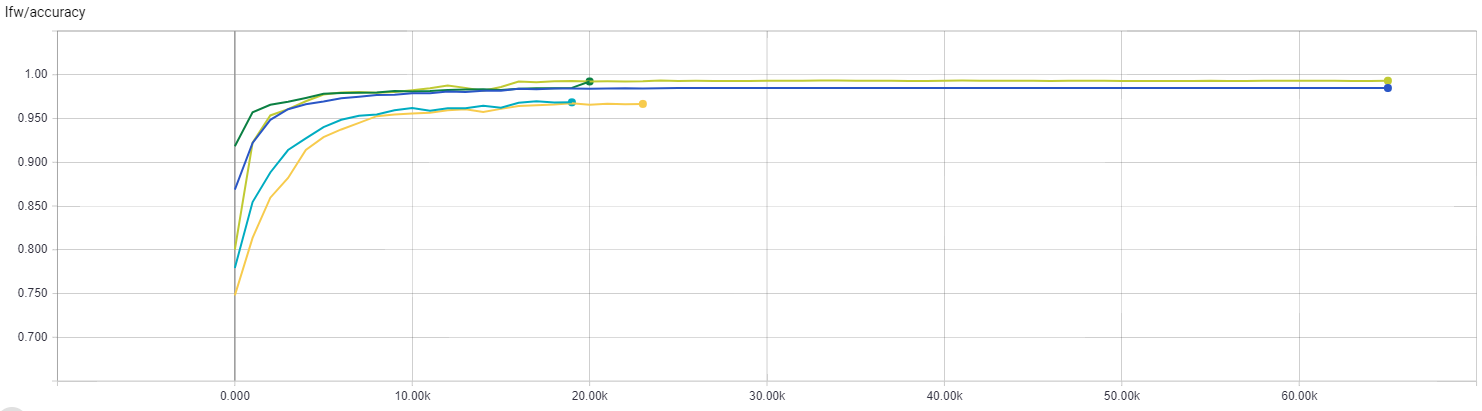

LFW accuracy

Momentum with weight_decay: see ./tfboard/resface20_mom_weightdecay.png

My blog post (in Chinese) about face recognition systems:

https://xraft.github.io/2018/03/21/FaceRecognition/

It includes the experimental details of this repo. Any advice is welcome, so feel free to share yours!