rajarsheem / Libsdae Autoencoder Tensorflow

Licence: mit

A simple Tensorflow based library for deep and/or denoising AutoEncoder.

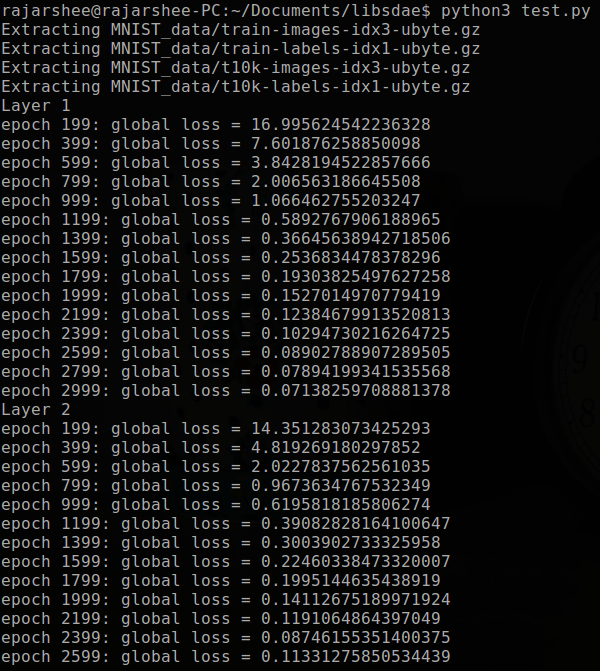

libsdae - deep-Autoencoder & denoising autoencoder

A simple TensorFlow-based library for deep autoencoders and denoising autoencoders. The library follows the scikit-learn style (fit, transform, fit_transform).

Prerequisites & Support

- TensorFlow 1.0 is required.

- Supports both Python 2.7 and 3.4+. Please report it if it doesn't.

Installing

pip install git+https://github.com/rajarsheem/libsdae.git

Usage and small doc

test.ipynb contains a small example that uses both a tiny and a large dataset.

    import numpy as np
    from deepautoencoder import StackedAutoEncoder

    # x: 2-D NumPy array of shape (n_samples, n_features)
    model = StackedAutoEncoder(dims=[5, 6], activations=['relu', 'relu'], noise='gaussian',
                               epoch=[10000, 500], loss='rmse', lr=0.007,
                               batch_size=50, print_step=2000)

    # usage 1 - encoding the same data the model is fitted on
    result = model.fit_transform(x)

    # usage 2 - fitting on one dataset and transforming (encoding) another
    model.fit(x)
    result = model.transform(np.random.rand(5, x.shape[1]))
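To make the shapes concrete, here is a NumPy-only sketch of what a stacked encoder with dims=[5, 6] computes. It is not the library's code: `encode_stack` is a hypothetical helper, the weights are random placeholders instead of trained values, and the 4-feature input is made up for illustration. The point is only that each layer maps the previous layer's output to its own width, so the final encoding should have dims[-1] columns.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def encode_stack(x, dims, rng):
    """Pass x through one randomly initialised ReLU layer per entry in dims.

    Hypothetical helper for illustration only; a trained model would use
    learned weights instead of random ones.
    """
    h = x
    for width in dims:
        w = rng.normal(scale=0.1, size=(h.shape[1], width))
        b = np.zeros(width)
        h = relu(np.dot(h, w) + b)
    return h


rng = np.random.default_rng(0)
x = rng.random((100, 4))                 # 100 examples, 4 features (made up)
encoded = encode_stack(x, dims=[5, 6], rng=rng)
print(encoded.shape)                     # (100, 6)
```

The number of rows is preserved and the number of columns equals the last entry of dims.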

Important points:

- If noise is not given, the model is a plain autoencoder instead of a denoising autoencoder.

- dims refers to the dimensions of the hidden layers (2 layers in this case).

- noise = (optional) 'gaussian' or 'mask-0.4'. mask-0.4 means that 40% of the input features are masked for each example.

- result is the encoded feature representation of x.

- loss = (optional) the reconstruction error to minimise. rmse and softmax with cross entropy are allowed; the default is rmse.

- print_step is the number of steps between two consecutive loss printouts.

- activations is a list with one entry per layer; each entry can be 'sigmoid', 'softmax', 'tanh' or 'relu'.

- batch_size is the number of examples in each training batch.

- While training, the reported global loss is the loss on the whole dataset, not on a single batch.

- epoch is a list giving the number of training iterations for each layer (10000 for the first layer and 500 for the second in the example above).
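The noise and loss options above can be illustrated with a small NumPy sketch. This is not the library's implementation: `add_noise` and `rmse` are hypothetical helpers, the Gaussian standard deviation of 0.1 is an assumed value, and zeroing the masked entries is an assumption about what "masked" means. In a denoising autoencoder the network sees the corrupted input while the loss is measured against the clean input.

```python
import numpy as np


def add_noise(x, noise, rng):
    """Return a corrupted copy of x; x itself is left untouched."""
    if noise is None:
        return x.copy()
    if noise == 'gaussian':
        # Assumed standard deviation of 0.1; the library's value may differ.
        return x + rng.normal(0.0, 0.1, size=x.shape)
    if noise.startswith('mask-'):
        frac = float(noise.split('-')[1])          # 'mask-0.4' -> 0.4
        n_masked = int(round(frac * x.shape[1]))
        corrupted = x.copy()
        for row in corrupted:                      # each row is a view
            idx = rng.choice(x.shape[1], size=n_masked, replace=False)
            row[idx] = 0.0                         # assumption: masked means zeroed
        return corrupted
    raise ValueError('unknown noise: %r' % (noise,))


def rmse(reconstruction, target):
    """Root mean squared error over all entries."""
    return float(np.sqrt(np.mean((reconstruction - target) ** 2)))


rng = np.random.default_rng(0)
x = rng.random((8, 10)) + 1.0      # strictly positive, so zeros mark masked entries
masked = add_noise(x, 'mask-0.4', rng)
print((masked == 0).sum(axis=1))   # 4 masked features in every row
print(rmse(masked, x))             # error introduced by the corruption alone
```

With 10 features and mask-0.4, exactly 4 features per example are masked. Passing `None` as the noise leaves the input unchanged, which corresponds to the plain autoencoder case.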

Citing

- Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion by P. Vincent, H. Larochelle, I. Lajoie, Y. Bengio and P. Manzagol (Journal of Machine Learning Research 11 (2010) 3371-3408)

Contributing

You are free to contribute by opening a pull request. Some suggestions are:

- Variational autoencoders

- Recurrent autoencoders
