ningshixian / Lstm_attention

Attention-based LSTM/Dense layers implemented in Keras

Stars: ✭ 168

Programming Languages

python

139335 projects - #7 most used programming language

Projects that are alternatives to or similar to Lstm attention

datastories-semeval2017-task6

Deep-learning model presented in "DataStories at SemEval-2017 Task 6: Siamese LSTM with Attention for Humorous Text Comparison".

Stars: ✭ 20 (-88.1%)

Mutual labels: lstm, attention-mechanism

keras-deep-learning

Various implementations and projects on CNN, RNN, LSTM, GAN, etc

Stars: ✭ 22 (-86.9%)

Mutual labels: lstm, attention-mechanism

extkeras

Playground for implementing custom layers and other components compatible with keras, with the purpose to learn the framework better and perhaps in future offer some utils for others.

Stars: ✭ 18 (-89.29%)

Mutual labels: lstm, attention-mechanism

Multimodal Sentiment Analysis

Attention-based multimodal fusion for sentiment analysis

Stars: ✭ 172 (+2.38%)

Mutual labels: lstm, attention-mechanism

Image Caption Generator

A neural network to generate captions for an image using CNN and RNN with BEAM Search.

Stars: ✭ 126 (-25%)

Mutual labels: lstm, attention-mechanism

Hierarchical-Word-Sense-Disambiguation-using-WordNet-Senses

Word Sense Disambiguation using Word Specific models, All word models and Hierarchical models in Tensorflow

Stars: ✭ 33 (-80.36%)

Mutual labels: lstm, attention-mechanism

ntua-slp-semeval2018

Deep-learning models of NTUA-SLP team submitted in SemEval 2018 tasks 1, 2 and 3.

Stars: ✭ 79 (-52.98%)

Mutual labels: lstm, attention-mechanism

Datastories Semeval2017 Task4

Deep-learning model presented in "DataStories at SemEval-2017 Task 4: Deep LSTM with Attention for Message-level and Topic-based Sentiment Analysis".

Stars: ✭ 184 (+9.52%)

Mutual labels: lstm, attention-mechanism

Linear Attention Recurrent Neural Network

A recurrent attention module consisting of an LSTM cell which can query its own past cell states by the means of windowed multi-head attention. The formulas are derived from the BN-LSTM and the Transformer Network. The LARNN cell with attention can be easily used inside a loop on the cell state, just like any other RNN. (LARNN)

Stars: ✭ 119 (-29.17%)

Mutual labels: lstm, attention-mechanism

SentimentAnalysis

Sentiment Analysis: Deep Bi-LSTM+attention model

Stars: ✭ 32 (-80.95%)

Mutual labels: lstm, attention-mechanism

Poetry Seq2seq

Chinese Poetry Generation

Stars: ✭ 159 (-5.36%)

Mutual labels: lstm, attention-mechanism

Document Classifier Lstm

A bidirectional LSTM with attention for multiclass/multilabel text classification.

Stars: ✭ 136 (-19.05%)

Mutual labels: lstm, attention-mechanism

automatic-personality-prediction

[AAAI 2020] Modeling Personality with Attentive Networks and Contextual Embeddings

Stars: ✭ 43 (-74.4%)

Mutual labels: lstm, attention-mechanism

Patient2Vec

Patient2Vec: A Personalized Interpretable Deep Representation of the Longitudinal Electronic Health Record

Stars: ✭ 85 (-49.4%)

Mutual labels: lstm, attention-mechanism

Abstractive Summarization

Implementation of abstractive summarization using LSTM in the encoder-decoder architecture with local attention.

Stars: ✭ 128 (-23.81%)

Mutual labels: lstm, attention-mechanism

Eeg Dl

A Deep Learning library for EEG Tasks (Signals) Classification, based on TensorFlow.

Stars: ✭ 165 (-1.79%)

Mutual labels: lstm, attention-mechanism

Rnn For Human Activity Recognition Using 2d Pose Input

Activity Recognition from 2D pose using an LSTM RNN

Stars: ✭ 165 (-1.79%)

Mutual labels: lstm

Amazon Product Recommender System

Sentiment analysis on Amazon Review Dataset available at http://snap.stanford.edu/data/web-Amazon.html

Stars: ✭ 158 (-5.95%)

Mutual labels: lstm

Tensorflow On Android For Human Activity Recognition With Lstms

iPython notebook and Android app that shows how to build LSTM model in TensorFlow and deploy it on Android

Stars: ✭ 157 (-6.55%)

Mutual labels: lstm

Pytorch Kaldi

pytorch-kaldi is a project for developing state-of-the-art DNN/RNN hybrid speech recognition systems. The DNN part is managed by pytorch, while feature extraction, label computation, and decoding are performed with the kaldi toolkit.

Stars: ✭ 2,097 (+1148.21%)

Mutual labels: lstm

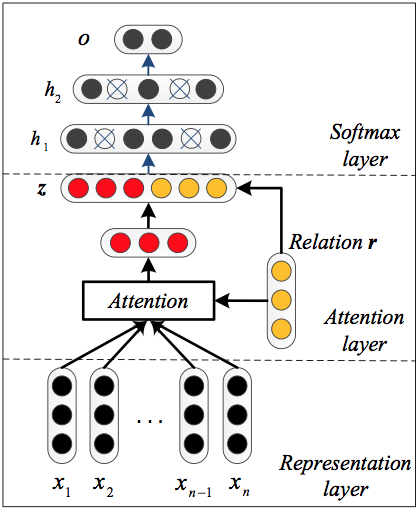

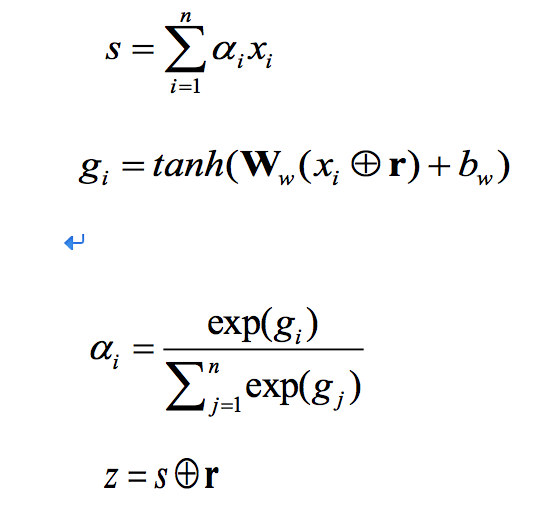

LSTM_Attention

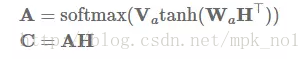

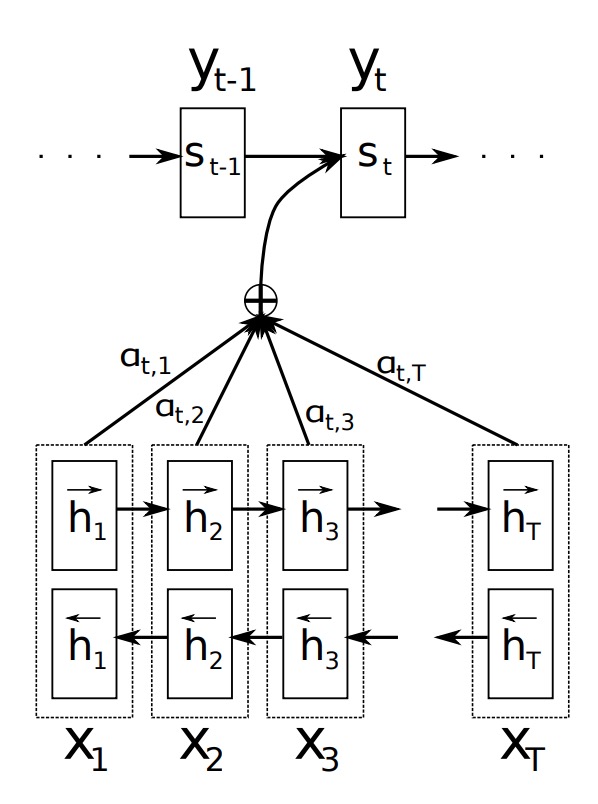

X = Input Sequence of length n.

H = LSTM(X); Note that here the LSTM has return_sequences = True,

so H is a sequence of vectors of length n.

s is the hidden state of the LSTM (h and c)

h is a weighted sum over H:

h = sigma(j = 0 to n-1) alpha(j) * H(j)

The weight alpha(j) for each hj (the j-th vector of H) is computed as follows:

H = [h1,h2,...,hn]

M = tanh(H)

alpha = softmax(w.transpose * M)

h# = tanh(h)

y = softmax(W * h# + b)

J(theta) = negative_log_likelihood + regularization
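The formulas above can be traced in a few lines of NumPy. This is only a minimal sketch, not the repository's Keras code: the sizes, the random values standing in for the LSTM output H, and the helper names `attention_pool` and `classify` are all assumptions made for illustration. H is stored here with one row per time step, so `w.transpose * M` becomes `M @ w`.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over the last axis."""
    z = z - np.max(z, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)


def attention_pool(H, w):
    """Collapse H (n steps, d units) into one vector h using weights alpha (n,)."""
    M = np.tanh(H)            # M = tanh(H)
    alpha = softmax(M @ w)    # alpha = softmax(w.transpose * M), one weight per step
    h = alpha @ H             # h = sum over j of alpha(j) * H(j)
    return h, alpha


def classify(h, W, b):
    """y = softmax(W * h# + b), where h# = tanh(h)."""
    h_sharp = np.tanh(h)
    return softmax(W @ h_sharp + b)


rng = np.random.default_rng(0)
n, d, num_classes = 5, 4, 3              # hypothetical sizes
H = rng.normal(size=(n, d))              # stand-in for LSTM(X) with return_sequences=True
w = rng.normal(size=d)                   # attention parameter vector (assumed shape)
W = rng.normal(size=(num_classes, d))    # output layer weights (assumed shape)
b = np.zeros(num_classes)

h, alpha = attention_pool(H, w)
y = classify(h, W, b)
print(alpha.round(3), y.round(3))
```

The weights `alpha` sum to 1 across the n time steps, so `h` is a convex combination of the LSTM outputs.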

attModel1

GitHub project

Attention_Recurrent

GitHub project

attModel2

GitHub project

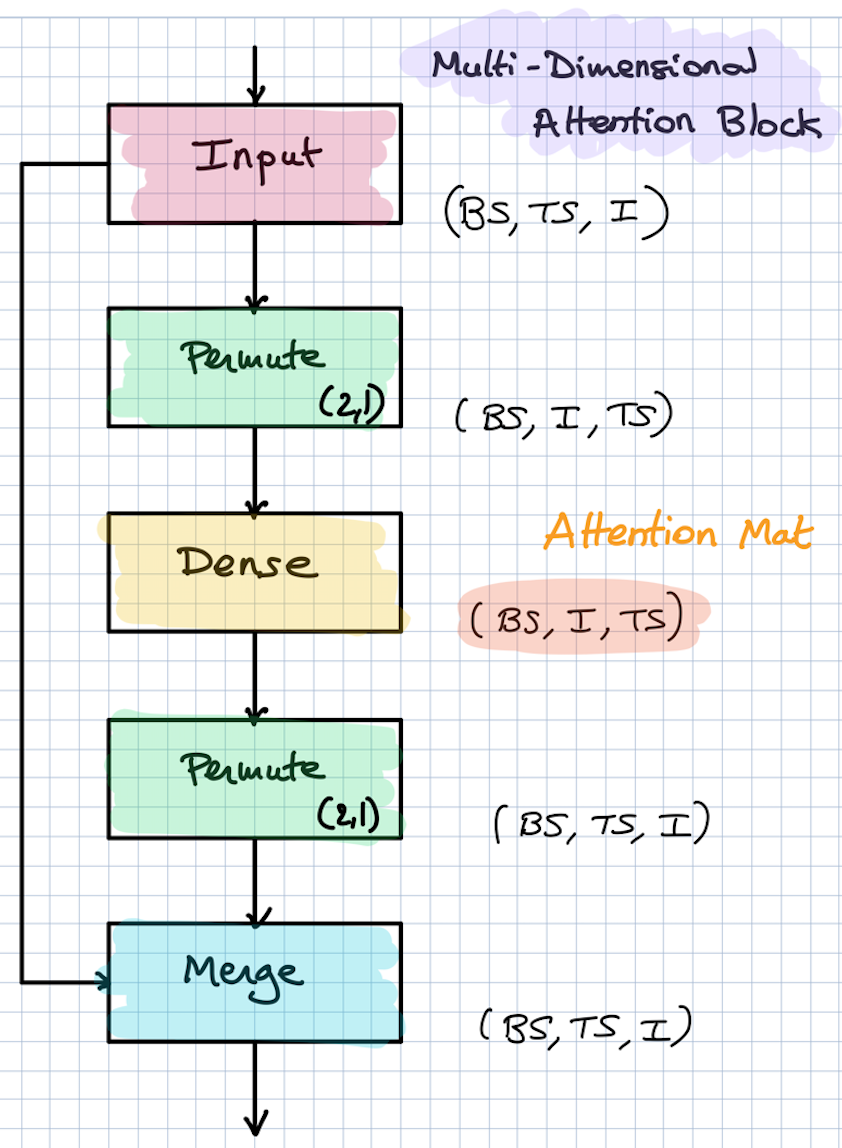

Example: Attention block

Attention defined per time series (each TS has its own attention)
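One way to read "each TS has its own attention" is that every input series gets its own softmax distribution over the time steps. The NumPy sketch below illustrates that reading under stated assumptions: the function name `attention_block`, the square weight matrix `W` of shape (time_steps, time_steps), and the transpose-dense-transpose-multiply layout are illustrative choices and are not confirmed by the source.

```python
import numpy as np


def softmax(z, axis):
    """Numerically stable softmax along the given axis."""
    z = z - np.max(z, axis=axis, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=axis, keepdims=True)


def attention_block(x, W, b):
    """x has shape (time_steps, n_series); returns the reweighted x and the attention map."""
    x_t = x.T                         # (n_series, time_steps): one row per series
    scores = x_t @ W + b              # dense layer applied along the time axis
    a = softmax(scores, axis=-1).T    # (time_steps, n_series); each column sums to 1
    return x * a, a                   # element-wise reweighting of the input


rng = np.random.default_rng(1)
time_steps, n_series = 6, 3           # hypothetical sizes
x = rng.normal(size=(time_steps, n_series))
W = rng.normal(size=(time_steps, time_steps))
b = np.zeros(time_steps)

out, a = attention_block(x, W, b)
print(a.sum(axis=0))                  # one total per series
```

Sharing a single attention vector across all series would instead average `a` over the series axis before the multiplication.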

attModel3

GitHub project

https://github.com/roebius/deeplearning_keras2/blob/master/nbs2/attention_wrapper.py

attModel4

GitHub project

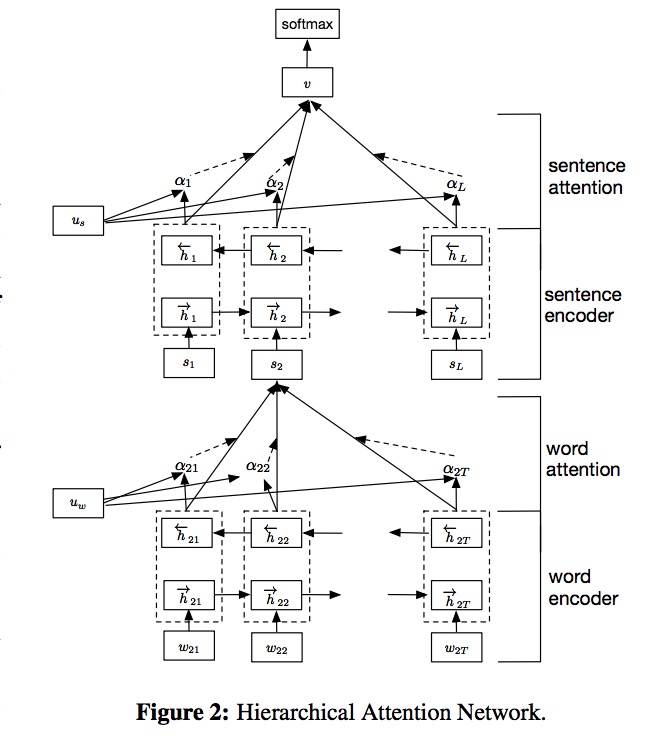

hierarchical-attention-networks

Github:

synthesio/hierarchical-attention-networks

self-attention-networks

Reference
