
Music Translation

PyTorch implementation of the method described in the paper A Universal Music Translation Network.

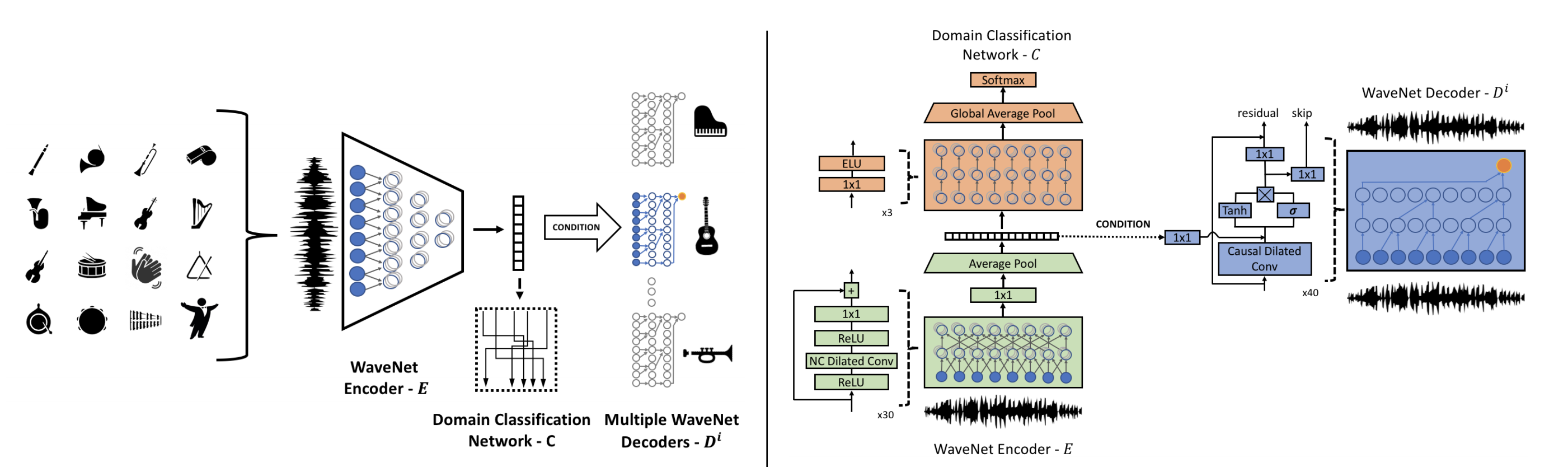

We present a method for translating music across musical instruments and styles. The method is based on unsupervised training of a multi-domain WaveNet autoencoder, with a shared encoder and a domain-independent latent space, trained end-to-end on raw waveforms.
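
Translation works because any input is encoded once into the shared latent space, and choosing a different decoder renders that code in a different domain. The sketch below illustrates only this routing, assuming one decoder per domain (consistent with the decoder IDs taken by the inference scripts). The class and method names are hypothetical, and the toy lambdas stand in for the actual WaveNet encoder and decoders.

```python
from typing import Callable, Dict, List

# Toy types: real inputs would be waveform tensors, not Python lists.
Waveform = List[float]
Latent = List[float]


class MultiDomainAutoencoder:
    """Hypothetical routing sketch: one shared encoder, one decoder per domain."""

    def __init__(self, encoder: Callable[[Waveform], Latent],
                 decoders: Dict[int, Callable[[Latent], Waveform]]) -> None:
        self.encoder = encoder    # shared by every domain
        self.decoders = decoders  # decoder id -> domain-specific decoder

    def translate(self, audio: Waveform, target_domain: int) -> Waveform:
        # Encode into the shared, domain-independent latent space...
        latent = self.encoder(audio)
        # ...then render the same latent code with the target domain's decoder.
        return self.decoders[target_domain](latent)


# Usage with toy stand-ins for the networks:
model = MultiDomainAutoencoder(
    encoder=lambda x: [v * 0.5 for v in x],
    decoders={0: lambda z: [v * 2.0 for v in z],
              1: lambda z: [v * 2.0 + 1.0 for v in z]},
)
print(model.translate([1.0, 2.0], target_domain=1))  # [2.0, 3.0]
```

When the target decoder is the input's own domain, the same call is a plain reconstruction, which is what each decoder is trained to do.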

This repository includes fast-inference kernels written by NVIDIA, modified to match our architecture as detailed in the paper.

Setup

Software

Requirements:

- Linux

- Python 3.7

- PyTorch v1.0.1

- Install Python packages:

      git clone https://github.com/facebookresearch/music-translation.git
      cd music-translation
      pip install -r requirements.txt

- Install the fast-inference WaveNet kernels (requires a Tesla V100 GPU):

      cd src/nv-wavenet
      python setup.py install
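
Because the pinned versions and the V100 requirement are strict, a short pre-flight check can save a failed build. This is a minimal sketch assuming only the requirements listed above matter; the function name check_environment is hypothetical, and detecting a V100 by the substring "V100" in the CUDA device name is an assumption.

```python
import platform
import sys
from typing import List, Optional, Tuple

# Versions taken from the Requirements list above.
REQUIRED_PYTHON = (3, 7)
REQUIRED_TORCH = "1.0.1"


def check_environment(python_version: Tuple[int, int], system: str,
                      torch_version: Optional[str],
                      gpu_name: Optional[str]) -> List[str]:
    """Hypothetical helper: return problems found; an empty list means all checks passed."""
    problems = []
    if system != "Linux":
        problems.append(f"expected Linux, found {system}")
    if tuple(python_version[:2]) != REQUIRED_PYTHON:
        problems.append(f"expected Python 3.7, found {python_version[0]}.{python_version[1]}")
    if torch_version is None:
        problems.append("PyTorch is not installed")
    elif not torch_version.split("+")[0].startswith(REQUIRED_TORCH):
        # startswith() tolerates build suffixes such as "1.0.1.post2"
        problems.append(f"expected PyTorch {REQUIRED_TORCH}, found {torch_version}")
    if gpu_name is None or "V100" not in gpu_name:
        # Assumption: only the nv-wavenet fast-inference kernels need the V100.
        problems.append("no Tesla V100 detected; the nv-wavenet kernels need one")
    return problems


if __name__ == "__main__":
    try:
        import torch
        torch_version = torch.__version__
        gpu = torch.cuda.get_device_name(0) if torch.cuda.is_available() else None
    except ImportError:
        torch_version, gpu = None, None
    for problem in check_environment(sys.version_info[:2], platform.system(), torch_version, gpu):
        print("WARNING:", problem)
```

Run as a script, it prints one warning per mismatch and nothing when the environment matches.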

Data

- Download the MusicNet dataset from here into the musicnet folder.

- Extract specific domains from the MusicNet dataset:

      python src/parse_musicnet.py -i musicnet -o musicnet/parsed

- Split each domain into train/test/val sets:

      for d in musicnet/parsed/*/ ; do python src/split_dir.py -i "$d" -o "musicnet/split/$(basename "$d")"; done

- Preprocess the audio for training:

      python src/preprocess.py -i musicnet/split -o musicnet/preprocessed
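
The split step runs src/split_dir.py once per domain folder and writes each domain to a matching folder under musicnet/split. The following Python sketch mirrors that loop for illustration. The split fractions, the seed, and splitting at the level of whole files are assumptions, not the script's actual settings; split_files and output_dir are hypothetical names.

```python
import random
from pathlib import Path
from typing import Dict, List, Sequence


def split_files(files: Sequence[str], val_frac: float = 0.1, test_frac: float = 0.1,
                seed: int = 0) -> Dict[str, List[str]]:
    """Hypothetical helper: partition one domain's files into train/val/test lists."""
    shuffled = sorted(files)               # sort first so the listing order does not matter
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_val = int(len(shuffled) * val_frac)
    n_test = int(len(shuffled) * test_frac)
    return {
        "val": shuffled[:n_val],
        "test": shuffled[n_val:n_val + n_test],
        "train": shuffled[n_val + n_test:],  # everything left over goes to training
    }


def output_dir(domain_dir: Path, split_root: Path) -> Path:
    """Mirror $(basename "$d"): musicnet/parsed/<domain>/ -> musicnet/split/<domain>."""
    return split_root / domain_dir.name


if __name__ == "__main__":
    # Dry run: prints the planned split sizes per domain, copies nothing.
    parsed_root, split_root = Path("musicnet/parsed"), Path("musicnet/split")
    if parsed_root.is_dir():
        for domain_dir in sorted(p for p in parsed_root.iterdir() if p.is_dir()):
            files = [f.name for f in domain_dir.iterdir() if f.is_file()]
            counts = {name: len(part) for name, part in split_files(files).items()}
            print(output_dir(domain_dir, split_root), counts)
```

Sorting before a seeded shuffle makes the split independent of directory listing order, so rerunning the step cannot move test files into the training set.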

Pretrained Models

Pretrained models are available here. Save the downloaded models under checkpoints/pretrained_musicnet.

Training

We provide instructions for both single-node and multi-node training. Our results were obtained after 3 days of training on 6 nodes, each with 8 GPUs.

Single Node Training

Execute the train script:

./train.sh

Trained models will be saved in the checkpoints/musicnet directory.

Multi-Node Training

For multi-node training, follow the instructions below. NOTE: multi-node training requires one node per dataset.

Execute the train script (on each node):

train_dist.sh <nnodes> <node_rank> <master_address>

The parameters are as follows:

<nnodes> - Number of nodes participating in the training.

<node_rank> - The rank of the current node.

<master_address> - Address of the master node.
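
Given the NOTE above, the number of nodes equals the number of datasets, and each node runs one process per GPU. The sketch below works through that bookkeeping for the 6-node, 8-GPU setup described above (6 × 8 = 48 processes). It assumes train_dist.sh follows the torch.distributed.launch convention, where node 0 is the master and a process's global rank is node_rank × nproc_per_node + local_rank; the helper names and the example address are hypothetical.

```python
from typing import List

GPUS_PER_NODE = 8  # per the training setup described above


def world_size(nnodes: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    # Total number of training processes across all nodes.
    return nnodes * gpus_per_node


def global_ranks(node_rank: int, gpus_per_node: int = GPUS_PER_NODE) -> List[int]:
    # Assumed torch.distributed.launch convention:
    # rank = node_rank * nproc_per_node + local_rank.
    return [node_rank * gpus_per_node + local_rank for local_rank in range(gpus_per_node)]


def launch_commands(num_datasets: int, master_address: str) -> List[str]:
    # One node per dataset, so <nnodes> is the number of datasets;
    # node 0 is assumed to be the master whose address every node passes.
    nnodes = num_datasets
    return [f"train_dist.sh {nnodes} {node_rank} {master_address}"
            for node_rank in range(nnodes)]


if __name__ == "__main__":
    print(world_size(6))  # 48
    for command in launch_commands(6, "10.0.0.1"):  # hypothetical master address
        print(command)
```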

Inference

We provide both interactive and offline generation scripts based on the nv-wavenet CUDA kernels:

- notebooks/Generate.ipynb: interactive generation.

- sample.sh <checkpoint name> "<decoder ids>": samples short segments of audio from each dataset and converts them to each of the domains the method was trained on. Running the script creates a result\DATE directory with a subfolder for each decoder. See src/run_on_files.py for more details.

For example, to use the pretrained models:

sample.sh pretrained_musicnet "0 1 2 3 4 5"
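
The quotes around the decoder IDs keep them together as a single shell argument. The sketch below shows how such an argument can be parsed and mapped to one output subfolder per decoder. It is an illustration only: the helper names are hypothetical, and naming each subfolder after its decoder ID is an assumption, not necessarily the layout sample.sh produces.

```python
from pathlib import Path
from typing import List


def parse_decoder_ids(arg: str) -> List[int]:
    """Hypothetical helper: turn the quoted "<decoder ids>" argument into integers."""
    ids = [int(token) for token in arg.split()]
    if len(set(ids)) != len(ids):
        raise ValueError(f"duplicate decoder ids in {arg!r}")
    return ids


def decoder_output_dirs(result_dir: Path, decoder_ids: List[int]) -> List[Path]:
    # One subfolder per decoder; using the id as the folder name is an assumption.
    return [result_dir / str(decoder_id) for decoder_id in decoder_ids]


if __name__ == "__main__":
    ids = parse_decoder_ids("0 1 2 3 4 5")
    for path in decoder_output_dirs(Path("result"), ids):  # hypothetical result root
        print(path)
```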

In addition, we provide Python-based generation:

- sample_py.sh <checkpoint name> "<decoder ids>": same as sample.sh above.

License

You may find out more about the license here.

Citation

    @inproceedings{musictranslation,
      title = {A Universal Music Translation Network},
      author = {Noam Mor and Lior Wolf and Adam Polyak and Yaniv Taigman},
      booktitle = {International Conference on Learning Representations (ICLR)},
      year = {2019},
    }