koulanurag / Muzero Pytorch

Licence: MIT

Pytorch Implementation of MuZero

Stars: ✭ 129

Programming Languages

python

139335 projects - #7 most used programming language

Projects that are alternatives to or similar to Muzero Pytorch

Torchrl

Highly Modular and Scalable Reinforcement Learning

Stars: ✭ 102 (-20.93%)

Mutual labels: deep-reinforcement-learning

Tetris Ai

A deep reinforcement learning bot that plays tetris

Stars: ✭ 109 (-15.5%)

Mutual labels: deep-reinforcement-learning

Advanced Deep Learning And Reinforcement Learning Deepmind

🎮 Advanced Deep Learning and Reinforcement Learning at UCL & DeepMind | YouTube videos 👉

Stars: ✭ 121 (-6.2%)

Mutual labels: deep-reinforcement-learning

Reinforcement Learning

🤖 Implementations of reinforcement learning algorithms.

Stars: ✭ 104 (-19.38%)

Mutual labels: deep-reinforcement-learning

Deeptraffic

DeepTraffic is a deep reinforcement learning competition, part of the MIT Deep Learning series.

Stars: ✭ 1,528 (+1084.5%)

Mutual labels: deep-reinforcement-learning

Reinforcementlearning Atarigame

PyTorch LSTM RNN for reinforcement learning to play Atari games from OpenAI Universe. It also uses Google DeepMind's Asynchronous Advantage Actor-Critic (A3C) algorithm, which the project describes as superior to and more efficient than DQN. Can play many games.

Stars: ✭ 118 (-8.53%)

Mutual labels: deep-reinforcement-learning

Top Deep Learning

Top 200 deep learning Github repositories sorted by the number of stars.

Stars: ✭ 1,365 (+958.14%)

Mutual labels: deep-reinforcement-learning

Pytorch Trpo

PyTorch Implementation of Trust Region Policy Optimization (TRPO)

Stars: ✭ 123 (-4.65%)

Mutual labels: deep-reinforcement-learning

A3c Pytorch

PyTorch implementation of the Asynchronous Advantage Actor-Critic (A3C) algorithm

Stars: ✭ 108 (-16.28%)

Mutual labels: deep-reinforcement-learning

Deep Rl Tensorflow

TensorFlow implementation of Deep Reinforcement Learning papers

Stars: ✭ 1,552 (+1103.1%)

Mutual labels: deep-reinforcement-learning

Macad Gym

Multi-Agent Connected Autonomous Driving (MACAD) Gym environments for Deep RL. Code for the paper presented in the Machine Learning for Autonomous Driving Workshop at NeurIPS 2019:

Stars: ✭ 106 (-17.83%)

Mutual labels: deep-reinforcement-learning

Easy Rl

Reinforcement learning tutorial in Chinese; read online at: https://datawhalechina.github.io/easy-rl/

Stars: ✭ 3,004 (+2228.68%)

Mutual labels: deep-reinforcement-learning

Deep reinforcement learning

Resources, papers, tutorials

Stars: ✭ 119 (-7.75%)

Mutual labels: deep-reinforcement-learning

Intro To Deep Learning

A collection of materials to help you learn about deep learning

Stars: ✭ 103 (-20.16%)

Mutual labels: deep-reinforcement-learning

Rl Medical

Deep Reinforcement Learning (DRL) agents applied to medical images

Stars: ✭ 123 (-4.65%)

Mutual labels: deep-reinforcement-learning

Deep Reinforcement Learning Notes

Deep Reinforcement Learning Notes

Stars: ✭ 101 (-21.71%)

Mutual labels: deep-reinforcement-learning

Hierarchical Actor Critic Hac Pytorch

PyTorch implementation of Hierarchical Actor Critic (HAC) for OpenAI gym environments

Stars: ✭ 116 (-10.08%)

Mutual labels: deep-reinforcement-learning

A Deep Rl Approach For Sdn Routing Optimization

A Deep-Reinforcement Learning Approach for Software-Defined Networking Routing Optimization

Stars: ✭ 125 (-3.1%)

Mutual labels: deep-reinforcement-learning

Rl Quadcopter

Teach a Quadcopter How to Fly!

Stars: ✭ 124 (-3.88%)

Mutual labels: deep-reinforcement-learning

Drl Portfolio Management

CSCI 599 deep learning and its applications final project

Stars: ✭ 121 (-6.2%)

Mutual labels: deep-reinforcement-learning

muzero-pytorch

PyTorch implementation of MuZero: "Mastering Atari, Go, Chess and Shogi by Planning with a Learned Model", based on the pseudo-code provided by the authors.

Note: This implementation has only been tested on CartPole-v1 and would require modifications (in the config folder) for other environments.

Installation

- Python 3.6, 3.7

- Install the dependencies:

      cd muzero-pytorch
      pip install -r requirements.txt

Usage:

- Train:

      python main.py --env CartPole-v1 --case classic_control --opr train --force

- Test:

      python main.py --env CartPole-v1 --case classic_control --opr test

- Visualize results:

      tensorboard --logdir=<result_dir_path>
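The train command can be repeated once per seed to produce a multi-seed run like the one reported under Training. The following is a minimal sketch, not part of the repository: the helper names `build_train_command` and `run_all` and the `results/seed_<n>` directory layout are illustrative assumptions. Only flags documented in this README are used. Whether the repository already separates results by seed is not stated here, so the sketch gives each seed its own directory.

```python
import subprocess
import sys

SEEDS = [0, 100, 200, 300, 400]  # seeds listed in the Training section


def build_train_command(seed, env="CartPole-v1", case="classic_control"):
    """Return the argument list for one training run (hypothetical helper)."""
    return [
        sys.executable, "main.py",
        "--env", env,
        "--case", case,
        "--opr", "train",
        "--seed", str(seed),
        # Assumed layout: one result directory per seed.
        "--result_dir", "results/seed_{}".format(seed),
        "--force",  # overrides past results
    ]


def run_all(seeds=SEEDS, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for seed in seeds:
        cmd = build_train_command(seed)
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a run fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Running the script as written only prints the five commands; pass `dry_run=False` from inside the `muzero-pytorch` directory to launch them one after another.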

| Required Arguments | Description |
|---|---|
| `--env` | Name of the environment |
| `--case {atari,classic_control,box2d}` | It's used for switching between different domains (default: None) |
| `--opr {train,test}` | Select the operation to be performed |

| Optional Arguments | Description |
|---|---|
| `--value_loss_coeff` | Scale for value loss (default: None) |
| `--revisit_policy_search_rate` | Rate at which the target policy is re-estimated (default: None) (only valid if `--use_target_model` is enabled) |
| `--use_priority` | Uses priority for data sampling in the replay buffer. Also, priority for new data is calculated based on loss (default: False) |
| `--use_max_priority` | Forces max priority assignment for new incoming data in the replay buffer (only valid if `--use_priority` is enabled) (default: False) |
| `--use_target_model` | Use a target model for bootstrap value estimation (default: False) |
| `--result_dir` | Directory path to store results (default: current working directory) |
| `--no_cuda` | Disables CUDA usage (default: False) |
| `--debug` | If enabled, logs additional values (default: False) |
| `--render` | Renders the environment (default: False) |
| `--force` | Overrides past results (default: False) |
| `--seed` | Random seed (default: 0) |
| `--test_episodes` | Evaluation episode count (default: 10) |

Note: `default: None` means the value is loaded from the corresponding config.
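One way such a fallback could work is sketched below. This is an assumption about the mechanism, not the repository's code: the names `CONFIG_DEFAULTS`, `build_parser`, and `resolve` are hypothetical, and the numbers in `CONFIG_DEFAULTS` are placeholders rather than the values in the config folder.

```python
import argparse

# Hypothetical per-domain defaults with placeholder numbers;
# the real values live in the repository's config folder.
CONFIG_DEFAULTS = {
    "classic_control": {
        "value_loss_coeff": 1.0,
        "revisit_policy_search_rate": 0.0,
    },
}


def build_parser():
    """Build a parser for a subset of the arguments documented above."""
    parser = argparse.ArgumentParser(description="MuZero argument sketch")
    parser.add_argument("--env", required=True)
    parser.add_argument("--case", required=True,
                        choices=["atari", "classic_control", "box2d"])
    parser.add_argument("--opr", required=True, choices=["train", "test"])
    # None means "not given on the command line".
    parser.add_argument("--value_loss_coeff", type=float, default=None)
    parser.add_argument("--revisit_policy_search_rate", type=float,
                        default=None)
    return parser


def resolve(args, config_defaults=CONFIG_DEFAULTS):
    """Fill every option left at None from the config of the chosen case."""
    resolved = vars(args).copy()
    for key, value in config_defaults.get(args.case, {}).items():
        if resolved.get(key) is None:
            resolved[key] = value
    return resolved


if __name__ == "__main__":
    cli = ["--env", "CartPole-v1", "--case", "classic_control",
           "--opr", "train", "--value_loss_coeff", "0.5"]
    print(resolve(build_parser().parse_args(cli)))
```

In this sketch a value passed on the command line always wins, and the config is consulted only for options the user left unset.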

Training

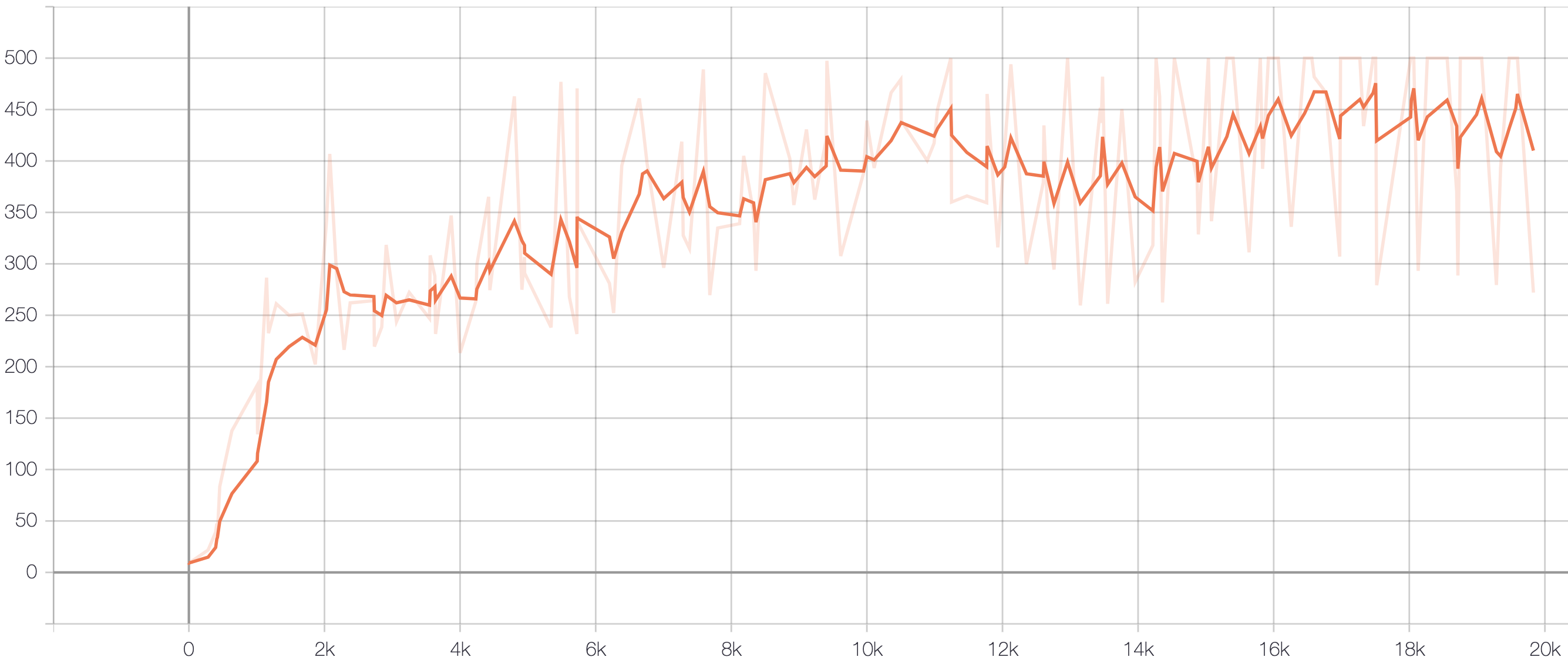

CartPole-v1

- Each curve represents model evaluation over 5 episodes, performed every 100 training steps.

- Each curve is the mean score over 5 runs (seeds: [0, 100, 200, 300, 400]).
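The averaging across seeds can be sketched as a point-by-point mean over the per-seed evaluation curves. This is a minimal illustration using only the standard library: the function name `mean_curve` is hypothetical, and the scores are made up for the example and are not results from the repository.

```python
from statistics import mean


def mean_curve(runs):
    """Average several evaluation curves point by point.

    `runs` maps a seed to its list of evaluation scores, one score per
    evaluation point; all lists must have the same length.
    """
    curves = list(runs.values())
    if not curves:
        raise ValueError("no runs given")
    length = len(curves[0])
    if any(len(curve) != length for curve in curves):
        raise ValueError("all runs must have the same number of points")
    # zip(*curves) groups the scores of all seeds at each evaluation point.
    return [mean(scores) for scores in zip(*curves)]


# Made-up scores for illustration only, not results from the repository.
example_runs = {
    0: [10.0, 50.0, 200.0],
    100: [20.0, 70.0, 180.0],
    200: [30.0, 60.0, 220.0],
}
print(mean_curve(example_runs))  # [20.0, 60.0, 200.0]
```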
