hassony2 / Obman_train

Learning Joint Reconstruction of Hands and Manipulated Objects - Demo, Training Code and Models

Yana Hasson, Gül Varol, Dimitris Tzionas, Igor Kalevatykh, Michael J. Black, Ivan Laptev, Cordelia Schmid, CVPR 2019

Get the code

git clone https://github.com/hassony2/obman_train

cd obman_train

Download and prepare datasets

Download the ObMan dataset

- Request the dataset on the dataset page

- Create a symlink

ln -s path/to/downloaded/obman datasymlinks/obman

- Download ShapeNetCore v2 object meshes from the ShapeNet official website

- Create a symlink

ln -s path/to/ShapeNetCore.v2 datasymlinks/ShapeNetCore.v2

Your data structure should now look like this:

obman_train/
  datasymlinks/ShapeNetCore.v2
  datasymlinks/obman
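Both dataset entries are symbolic links, so a wrong target path often goes unnoticed until a later file-not-found error. The Python sketch below checks the two links up front. It assumes you run it from the obman_train/ root. `check_symlinks` is a hypothetical helper and is not part of the repository.

```python
from pathlib import Path

# Symlinks described above, relative to the obman_train/ root.
EXPECTED_LINKS = ["datasymlinks/obman", "datasymlinks/ShapeNetCore.v2"]


def check_symlinks(root="."):
    """Return a dict mapping each expected link to 'ok', 'missing' or 'dangling'."""
    status = {}
    for rel in EXPECTED_LINKS:
        path = Path(root) / rel
        if not path.is_symlink() and not path.exists():
            status[rel] = "missing"
        elif not path.exists():
            # exists() follows the link, so it is False when the target is gone.
            status[rel] = "dangling"
        else:
            status[rel] = "ok"
    return status


if __name__ == "__main__":
    for rel, state in check_symlinks().items():
        print(f"{rel}: {state}")
```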

Download the First-Person Hand Action Benchmark dataset

- Follow the official instructions to download the dataset

Download model files

- Download the model files:

wget http://www.di.ens.fr/willow/research/obman/release_models.zip

- unzip

unzip release_models.zip

Install python dependencies

- create conda environment with dependencies:

conda env create -f environment.yml

- activate environment:

conda activate obman_train

Install the MANO PyTorch layer

- Follow the instructions from here

Download the MANO model files

- Go to the MANO website

- Create an account by clicking Sign Up and provide your information

- Download Models and Code (the downloaded file should have the format mano_v*_*.zip). Note that all code and data from this download fall under the MANO license.

- Unzip it and copy the content of the models folder into the misc/mano folder

- Your structure should look like this:

obman_train/
  misc/
    mano/
      MANO_LEFT.pkl
      MANO_RIGHT.pkl
  release_models/
    fhb/
    obman/
    hands_only/
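Before launching the demos, you can quickly confirm that the MANO files and the released checkpoints are where the scripts expect them. The sketch below assumes it runs from the obman_train/ root. The checkpoint file names come from the --resume arguments in this README's demo commands. `missing_files` is a hypothetical helper and is not part of the repository.

```python
from pathlib import Path

# Files the setup steps are expected to produce, relative to obman_train/.
# Checkpoint names are taken from the --resume arguments of the demo commands.
REQUIRED_FILES = [
    "misc/mano/MANO_LEFT.pkl",
    "misc/mano/MANO_RIGHT.pkl",
    "release_models/fhb/checkpoint.pth.tar",
    "release_models/obman/checkpoint.pth.tar",
    "release_models/hands_only/checkpoint.pth.tar",
]


def missing_files(root="."):
    """Return the required paths that are not regular files under root."""
    return [rel for rel in REQUIRED_FILES if not (Path(root) / rel).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("MANO files and release models found.")
```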

Launch

Demo

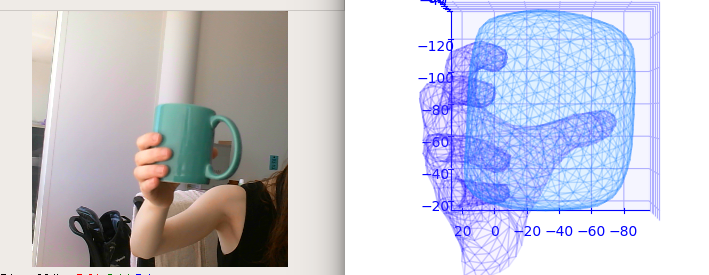

We provide a model trained on the synthetic ObMan dataset

Single image demo

python image_demo.py --resume release_models/obman/checkpoint.pth.tar

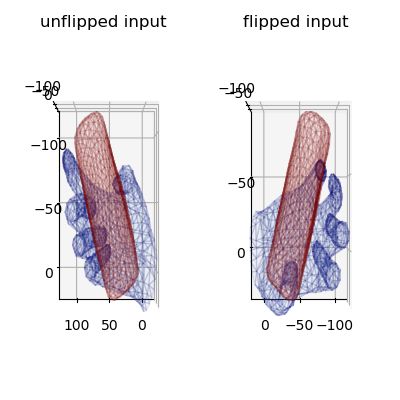

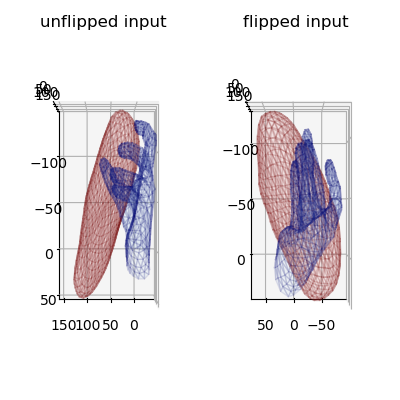

In this demo, both the original and flipped inputs are fed, and the outputs are therefore presented for the input treated as a right and a left hand side by side.

Running the demo should produce the following outputs.
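The demo feeds both the original image and its mirror image. Mirroring a hand horizontally turns a left hand into something that looks like a right hand. The NumPy sketch below illustrates this idea and assumes an H x W x C image array. It also shows how 2D points predicted on the mirrored image can be mapped back. The helper names are hypothetical and are not the functions used in image_demo.py.

```python
import numpy as np


def make_flip_batch(image):
    """Stack an H x W x C image with its horizontal mirror image.

    Index 0 is the original input, index 1 the mirrored one.
    """
    mirrored = image[:, ::-1]  # reverse the column (x) axis
    return np.stack([image, mirrored], axis=0)


def unflip_keypoints(keypoints_2d, image_width):
    """Map (x, y) pixel coordinates predicted on the mirrored image back
    to the original image frame by reflecting x around the vertical axis."""
    restored = np.array(keypoints_2d, dtype=float, copy=True)
    restored[:, 0] = image_width - 1 - restored[:, 0]
    return restored


if __name__ == "__main__":
    img = np.arange(2 * 3 * 3).reshape(2, 3, 3)  # tiny 2x3 "image", 3 channels
    batch = make_flip_batch(img)
    print(batch.shape)  # (2, 2, 3, 3)
    print(unflip_keypoints([[0.0, 1.0], [2.0, 0.0]], image_width=3).tolist())
    # [[2.0, 1.0], [0.0, 0.0]]
```

The reflection `image_width - 1 - x` assumes integer pixel-center coordinates. With a continuous convention where pixel edges lie at 0 and W, the reflection would be `W - x` instead.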

You can also run this demo on data from the First-Person Hand Action Benchmark:

python image_demo.py --image_path readme_assets/images/fhb_liquid_soap.jpeg --resume release_models/fhb/checkpoint.pth.tar

Note that the model trained on the First-Person Hand Action Benchmark strongly overfits to this dataset, and therefore performs poorly on 'in the wild' images.

Video demo

You can test it on a recorded video or live using a webcam by launching:

python webcam_demo.py --resume release_models/obman/checkpoint.pth.tar --hand_side left

Hand side detection is not handled in this pipeline, so you should explicitly indicate with --hand_side whether you want to use the right or the left hand.

Note that the video demo has some lag, which comes from the visualization bottleneck (matplotlib image rendering is quite slow).

Limitations

- This demo doesn't perform hand detection, so the model expects a roughly centered hand.

- As we are deforming a sphere, the reconstructed object always has genus 0 (the topology of a sphere), so objects with holes, such as a mug with a handle, cannot be recovered faithfully.

- The model is trained only on hands holding objects, and therefore doesn't perform well on hands without objects whose poses do not resemble common grasp poses.

- The model is trained on grasping hands only, and therefore struggles with hand poses that are not associated with object handling.

- In addition to these models, we also provide a hand-only model trained on various hand datasets, including our ObMan dataset, that captures a wider variety of hand poses.

- To try it, launch:

python webcam_demo.py --resume release_models/hands_only/checkpoint.pth.tar

- Note that this model also regresses a translation and a scale parameter, which allow the predicted 2D joints to be overlaid on the images according to an orthographic projection model.
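The Euler characteristic of a closed triangle mesh, chi = V - E + F, shows why a deformed sphere cannot gain a hole. For a closed orientable surface, chi = 2 - 2g, where g is the genus. Deforming the sphere only moves vertices and leaves the mesh connectivity unchanged, so chi and the genus stay fixed. The Python sketch below computes the genus of two small meshes built only for illustration: an octahedron, which is topologically a sphere, and a triangulated torus, which is topologically a mug with a handle. It is not code from the repository.

```python
from itertools import product


def genus(num_vertices, faces):
    """Genus of a closed, orientable triangle mesh via chi = V - E + F = 2 - 2g."""
    edges = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edges.add((min(u, v), max(u, v)))  # undirected edge
    chi = num_vertices - len(edges) + len(faces)
    return (2 - chi) // 2


def octahedron_faces():
    # Vertices: 0/1 = +x/-x, 2/3 = +y/-y, 4/5 = +z/-z; one triangle per octant.
    return [(a, b, c) for a, b, c in product((0, 1), (2, 3), (4, 5))]


def torus_faces(n=4, m=4):
    """Triangulate an n x m grid whose rows and columns wrap around (n, m >= 3)."""
    def idx(i, j):
        return (i % n) * m + (j % m)

    faces = []
    for i in range(n):
        for j in range(m):
            v00, v10 = idx(i, j), idx(i + 1, j)
            v01, v11 = idx(i, j + 1), idx(i + 1, j + 1)
            faces += [(v00, v10, v11), (v00, v11, v01)]  # split each quad in two
    return faces


if __name__ == "__main__":
    print("octahedron genus:", genus(6, octahedron_faces()))  # 0, like a sphere
    print("torus genus:", genus(16, torus_faces(4, 4)))  # 1, one hole
```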
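An orthographic (scaled) projection drops depth, then scales the remaining x and y coordinates and shifts them into pixel space: p = s * (X, Y) + t. The NumPy sketch below shows this overlay step. It assumes the model outputs 3D joints of shape (N, 3), a scalar scale s and a 2D translation t in pixels. The exact parameterization used by the hands-only model is an assumption here, for example whether the translation is applied before or after scaling. `project_orthographic` is a hypothetical helper.

```python
import numpy as np


def project_orthographic(joints_3d, scale, trans):
    """Orthographic projection of (N, 3) joints to (N, 2) image coordinates.

    The depth coordinate is discarded; x and y are multiplied by the scalar
    `scale` and shifted by the 2D `trans` (assumed to be in pixels).
    """
    joints_3d = np.asarray(joints_3d, dtype=float)
    return scale * joints_3d[:, :2] + np.asarray(trans, dtype=float)


if __name__ == "__main__":
    joints = np.array([[0.0, 0.0, 0.5],
                       [0.25, -0.5, 0.7]])  # toy joints, arbitrary units
    print(project_orthographic(joints, scale=100.0, trans=[128.0, 128.0]).tolist())
    # [[128.0, 128.0], [153.0, 78.0]]
```

Because depth is ignored, two joints that differ only in their distance to the camera land on the same pixel. This is the usual trade-off of an orthographic model, which avoids having to know the camera intrinsics.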

Training

python traineval.py --atlas_predict_trans --atlas_predict_scale --atlas_mesh --mano_use_shape --mano_use_pca --freeze_batchnorm --atlas_separate_encoder
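The --mano_use_pca flag presumably makes the network predict MANO hand articulation as a few PCA coefficients. The alternative would be the full 45-dimensional axis-angle pose (15 finger joints times 3). The NumPy sketch below illustrates only that decoding step under this assumption. A random orthonormal basis and a zero mean stand in for the `hands_components` and `hands_mean` arrays stored in the MANO model files. `decode_pca_pose` is a hypothetical helper and is not part of the repository or the MANO layer.

```python
import numpy as np

NUM_POSE_PARAMS = 45  # 15 finger joints x 3 axis-angle values


def decode_pca_pose(coeffs, components, mean):
    """Map k PCA coefficients (shape (k,) or (B, k)) to 45-dim finger poses.

    components: (45, 45) basis whose first k rows are used.
    mean: (45,) mean pose added back after the linear combination.
    """
    coeffs = np.asarray(coeffs, dtype=float)
    k = coeffs.shape[-1]
    return coeffs @ components[:k] + mean


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Placeholder basis: a random orthonormal matrix instead of MANO's hands_components.
    basis, _ = np.linalg.qr(rng.normal(size=(NUM_POSE_PARAMS, NUM_POSE_PARAMS)))
    mean = np.zeros(NUM_POSE_PARAMS)  # placeholder for MANO's hands_mean
    pose = decode_pca_pose(rng.normal(size=6), basis, mean)
    print(pose.shape)  # (45,)
```

PCA pose spaces are usually motivated by keeping only the first few components. This restricts predictions to a low-dimensional space of plausible hand articulations. How many components the released models use is not stated in this README.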

Citations

If you find this code useful for your research, consider citing:

@INPROCEEDINGS{hasson19_obman,
  title     = {Learning joint reconstruction of hands and manipulated objects},
  author    = {Hasson, Yana and Varol, G{\"u}l and Tzionas, Dimitris and Kalevatykh, Igor and Black, Michael J. and Laptev, Ivan and Schmid, Cordelia},
  booktitle = {CVPR},
  year      = {2019}
}

Acknowledgements

AtlasNet code

Code related to AtlasNet is in large part adapted from the official AtlasNet repository. Thanks to Thibault for the provided code!

Hand evaluation code

Code for computing hand evaluation metrics, which comes with an easy-to-use interface, was reused from hand3d, courtesy of Christian Zimmermann!

Laplacian regularization loss

Code for the Laplacian regularization, along with precious advice, was provided by Angjoo Kanazawa!

First-Person Hand Action Benchmark dataset

Helpful advice on working with the dataset was provided by Guillermo Garcia-Hernando!