mit-han-lab / Once For All


Once for All: Train One Network and Specialize it for Efficient Deployment [arXiv] [Slides] [Video]

```bibtex
@inproceedings{
  cai2020once,
  title={Once for All: Train One Network and Specialize it for Efficient Deployment},
  author={Han Cai and Chuang Gan and Tianzhe Wang and Zhekai Zhang and Song Han},
  booktitle={International Conference on Learning Representations},
  year={2020},
  url={https://arxiv.org/pdf/1908.09791.pdf}
}
```

[News] First place in the CVPR 2020 Low-Power Computer Vision Challenge, CPU detection and FPGA tracks.

[News] OFA-ResNet50 is released.

[News] The hands-on tutorial of OFA is released!

[News] OFA is available via pip! Run `pip install ofa` to install the whole OFA codebase.

[News] First place in the 4th Low-Power Computer Vision Challenge, both classification and detection tracks.

[News] First place in the 3rd Low-Power Computer Vision Challenge, DSP track at ICCV’19, using the Once-for-All network.

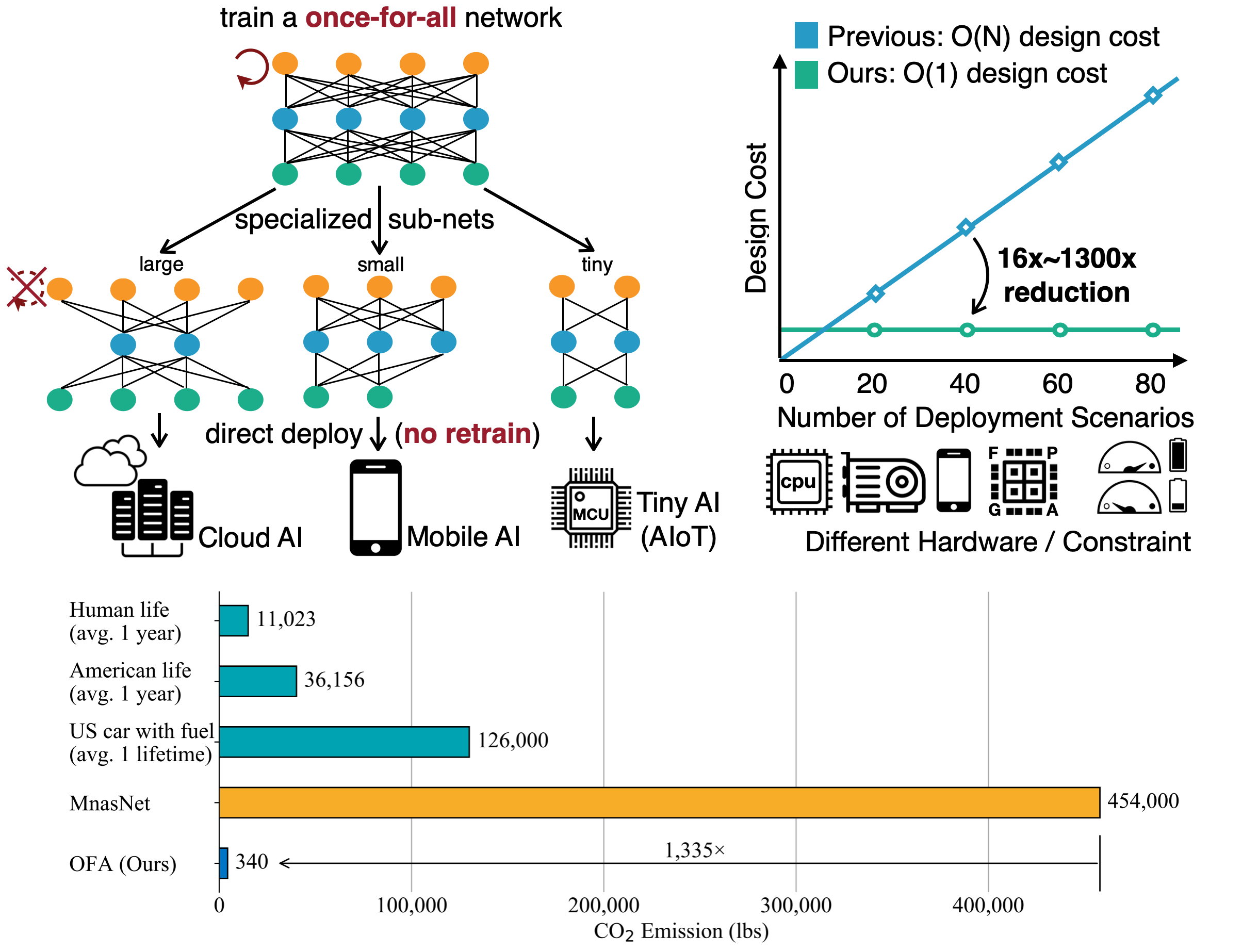

Train once, specialize for many deployment scenarios

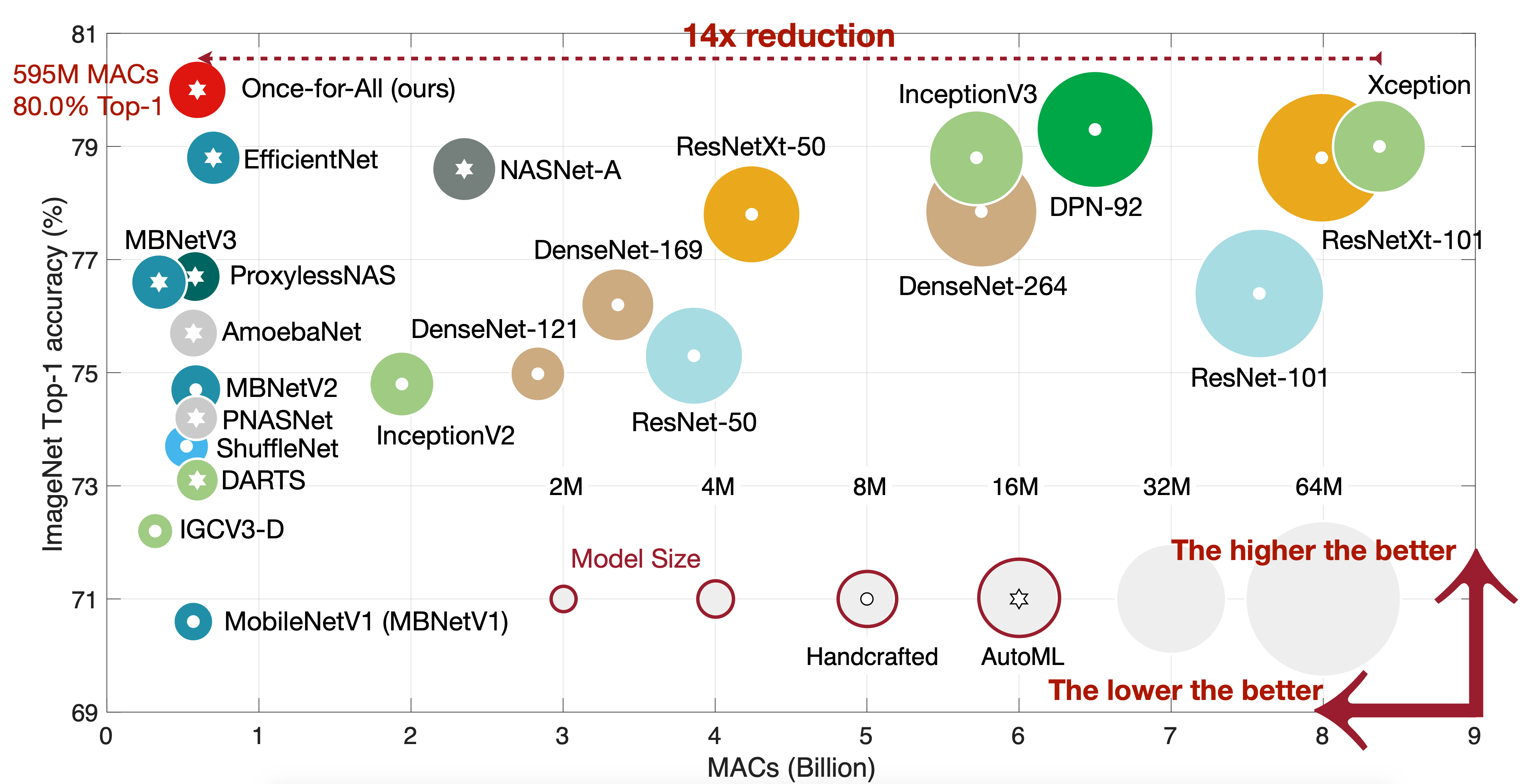

80% top-1 ImageNet accuracy under the mobile setting

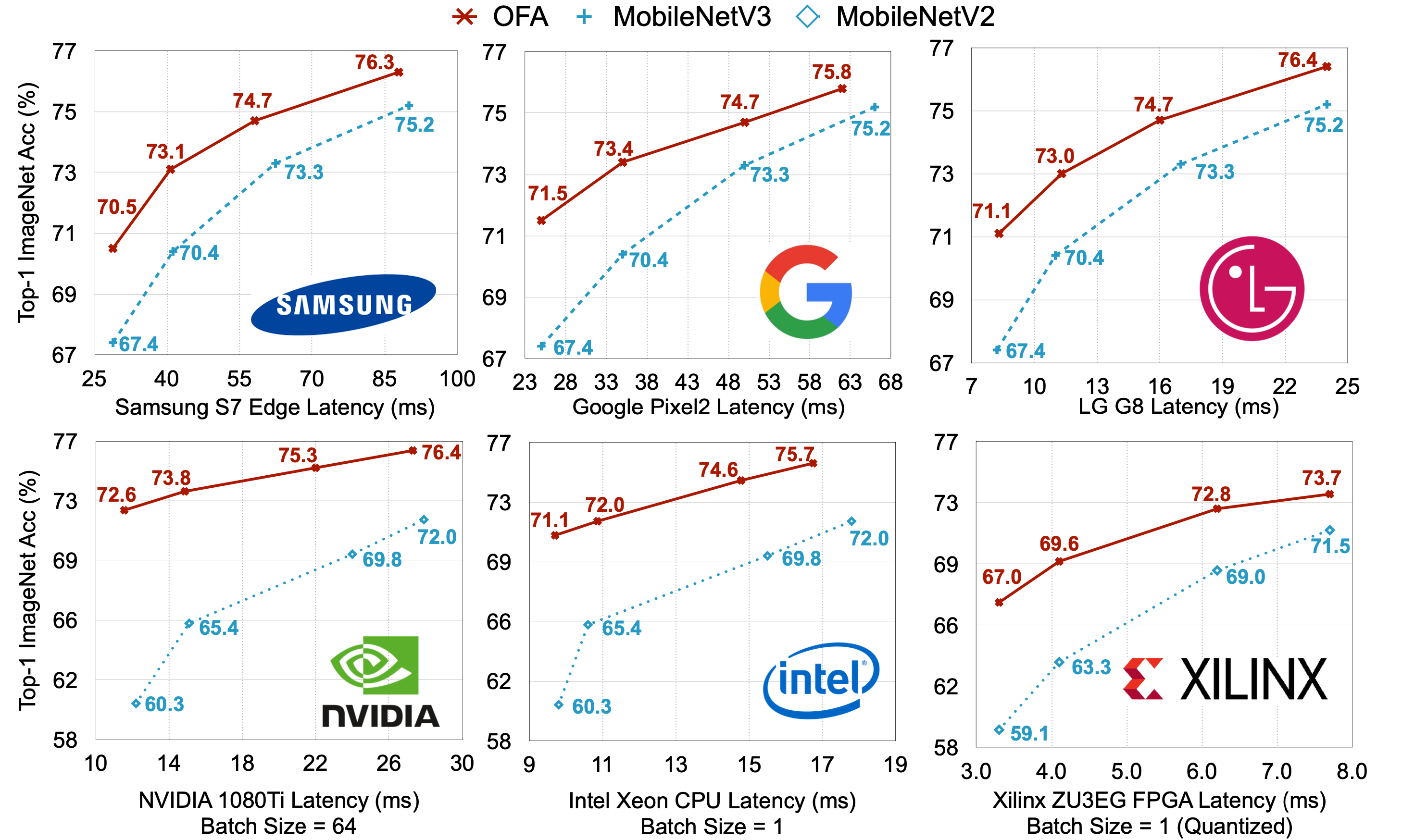

Consistently outperforms MobileNetV3 on diverse hardware platforms

OFA-ResNet50 [How to use]

How to use / evaluate OFA Networks

Use

""" OFA Networks.

Example: ofa_network = ofa_net('ofa_mbv3_d234_e346_k357_w1.0', pretrained=True)

"""

from ofa.model_zoo import ofa_net

ofa_network = ofa_net(net_id, pretrained=True)

# Randomly sample sub-networks from OFA network

ofa_network.sample_active_subnet()

random_subnet = ofa_network.get_active_subnet(preserve_weight=True)

# Manually set the sub-network

ofa_network.set_active_subnet(ks=7, e=6, d=4)

manual_subnet = ofa_network.get_active_subnet(preserve_weight=True)

If the script above fails to download the pretrained weights, you can download them manually from Google Drive and place them under `$HOME/.torch/ofa_nets/`.
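The `sample_active_subnet()` and `set_active_subnet(ks=7, e=6, d=4)` calls select a sub-network from the design space listed in the table below. The sketch that follows mimics that selection in plain Python so it runs without PyTorch or the `ofa` package. It assumes the layout described in the OFA paper (5 elastic stages with at most 4 blocks each); `sample_config`, `fixed_config`, and `active_blocks` are hypothetical helpers, not the library's internals.

```python
import random
from typing import Dict, List

# Design space of the MobileNetV3-based OFA network (values from the table below)
KERNEL_SIZES = [3, 5, 7]
EXPAND_RATIOS = [3, 4, 6]
DEPTHS = [2, 3, 4]
NUM_STAGES = 5  # assumption: 5 elastic stages, each with at most max(DEPTHS) blocks
MAX_BLOCKS = NUM_STAGES * max(DEPTHS)


def sample_config(rng: random.Random) -> Dict[str, List[int]]:
    """Hypothetical stand-in for sample_active_subnet(): independent random choices
    of kernel size and expand ratio per block, and of depth per stage."""
    return {
        "ks": [rng.choice(KERNEL_SIZES) for _ in range(MAX_BLOCKS)],
        "e": [rng.choice(EXPAND_RATIOS) for _ in range(MAX_BLOCKS)],
        "d": [rng.choice(DEPTHS) for _ in range(NUM_STAGES)],
    }


def fixed_config(ks: int, e: int, d: int) -> Dict[str, List[int]]:
    """Hypothetical stand-in for set_active_subnet(ks=..., e=..., d=...):
    each scalar is broadcast to every block (or to every stage, for depth)."""
    if ks not in KERNEL_SIZES or e not in EXPAND_RATIOS or d not in DEPTHS:
        raise ValueError("value outside the OFA design space")
    return {"ks": [ks] * MAX_BLOCKS, "e": [e] * MAX_BLOCKS, "d": [d] * NUM_STAGES}


def active_blocks(config: Dict[str, List[int]]) -> int:
    """Number of blocks that actually run: the first d blocks of each stage."""
    return sum(config["d"])


largest = fixed_config(ks=7, e=6, d=4)
print(active_blocks(largest))  # 20
random_cfg = sample_config(random.Random(0))
print(active_blocks(random_cfg))
```

Keeping the per-block lists at full length even when a stage uses fewer blocks means the same configuration can be re-used when depth changes; only the first `d` entries of each stage matter.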

Evaluate

```shell
python eval_ofa_net.py --path 'Your path to imagenet' --net ofa_mbv3_d234_e346_k357_w1.0
```

| OFA Network | Design Space | Resolution | Width Multiplier | Depth | Expand Ratio | Kernel Size |
|---|---|---|---|---|---|---|
| ofa_resnet50 | ResNet50D | 128 - 224 | 0.65, 0.8, 1.0 | 0, 1, 2 | 0.2, 0.25, 0.35 | 3 |
| ofa_mbv3_d234_e346_k357_w1.0 | MobileNetV3 | 128 - 224 | 1.0 | 2, 3, 4 | 3, 4, 6 | 3, 5, 7 |
| ofa_mbv3_d234_e346_k357_w1.2 | MobileNetV3 | 160 - 224 | 1.2 | 2, 3, 4 | 3, 4, 6 | 3, 5, 7 |
| ofa_proxyless_d234_e346_k357_w1.3 | ProxylessNAS | 128 - 224 | 1.3 | 2, 3, 4 | 3, 4, 6 | 3, 5, 7 |
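The MobileNetV3 and ProxylessNAS IDs in this table encode their design space: `d234` lists the depth choices, `e346` the expand ratios, `k357` the kernel sizes, and `w1.0` the width multiplier. The sketch below decodes such an ID and counts the architectures in the space, ignoring resolution. `parse_net_id` and `count_subnets` are hypothetical helpers, and the count assumes 5 elastic stages whose blocks choose kernel size and expand ratio independently (the layout described in the OFA paper, not stated in this README).

```python
import re
from typing import Dict, List, Union

ID_PATTERN = re.compile(
    r"^ofa_(?P<arch>[a-z0-9]+)_d(?P<d>\d+)_e(?P<e>\d+)_k(?P<k>\d+)_w(?P<w>[\d.]+)$"
)


def parse_net_id(net_id: str) -> Dict[str, Union[str, float, List[int]]]:
    """Hypothetical helper: decode IDs such as 'ofa_mbv3_d234_e346_k357_w1.0'.
    Each digit is one choice, so only single-digit options are supported."""
    match = ID_PATTERN.match(net_id)
    if match is None:
        raise ValueError(f"unrecognized OFA network id: {net_id}")
    return {
        "arch": match["arch"],
        "depths": [int(c) for c in match["d"]],
        "expand_ratios": [int(c) for c in match["e"]],
        "kernel_sizes": [int(c) for c in match["k"]],
        "width_mult": float(match["w"]),
    }


def count_subnets(space: dict, num_stages: int = 5) -> int:
    """Count architectures, assuming num_stages stages with elastic depth and
    an independent (kernel size, expand ratio) choice for every active block."""
    per_block = len(space["kernel_sizes"]) * len(space["expand_ratios"])
    per_stage = sum(per_block ** d for d in space["depths"])
    return per_stage ** num_stages


space = parse_net_id("ofa_mbv3_d234_e346_k357_w1.0")
print(space["depths"], space["kernel_sizes"], space["width_mult"])  # [2, 3, 4] [3, 5, 7] 1.0
print(f"{count_subnets(space):.2e}")  # 2.18e+19
```

Under these assumptions each stage has 9^2 + 9^3 + 9^4 = 7371 options, giving roughly 2 × 10^19 sub-networks in total. `ofa_resnet50` does not follow this naming scheme, so the parser rejects it.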

How to use / evaluate OFA Specialized Networks

Use

""" OFA Specialized Networks.

Example: net, image_size = ofa_specialized('[email protected][email protected][email protected]', pretrained=True)

"""

from ofa.model_zoo import ofa_specialized

net, image_size = ofa_specialized(net_id, pretrained=True)

If the script above fails to download the pretrained weights, you can download them manually from Google Drive and place them under `$HOME/.torch/ofa_specialized/`.
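`ofa_specialized` returns the network together with the input resolution `image_size` it was specialized for, so evaluation images must be brought to that size. A common ImageNet evaluation convention, assumed here rather than taken from this README, is to resize the shorter side to `ceil(image_size / 0.875)` and then center-crop to `image_size`. The sketch computes those sizes and the crop box in plain Python; `eval_geometry` is a hypothetical helper.

```python
import math
from typing import Tuple


def eval_geometry(width: int, height: int, image_size: int,
                  crop_ratio: float = 0.875
                  ) -> Tuple[Tuple[int, int], Tuple[int, int, int, int]]:
    """Return the resized (width, height) and the (left, top, right, bottom)
    center-crop box for an image of the given size (assumed convention)."""
    resize_to = int(math.ceil(image_size / crop_ratio))  # target shorter side
    scale = resize_to / min(width, height)
    new_w, new_h = round(width * scale), round(height * scale)
    left = (new_w - image_size) // 2
    top = (new_h - image_size) // 2
    return (new_w, new_h), (left, top, left + image_size, top + image_size)


size, box = eval_geometry(width=500, height=375, image_size=224)
print(size, box)  # (341, 256) (58, 16, 282, 240)
```

The same arithmetic applies to any `image_size` a specialized network reports; only the ratio between the resize and crop sizes is fixed.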

Evaluate

```shell
python eval_specialized_net.py --path 'Your path to imagenet' --net [email protected][email protected][email protected]
```

How to train OFA Networks

```shell
mpirun -np 32 -H <server1_ip>:8,<server2_ip>:8,<server3_ip>:8,<server4_ip>:8 \
    -bind-to none -map-by slot \
    -x NCCL_DEBUG=INFO -x LD_LIBRARY_PATH -x PATH \
    python train_ofa_net.py
```

or

```shell
horovodrun -np 32 -H <server1_ip>:8,<server2_ip>:8,<server3_ip>:8,<server4_ip>:8 \
    python train_ofa_net.py
```
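Both commands launch 32 training processes: four servers with 8 slots each (typically one per GPU), so `-np` must equal the total number of slots listed in `-H`. The sketch below assembles that host list and process count for the `horovodrun` form; the IP addresses are placeholders and `build_launch_command` is a hypothetical helper that only builds the argument list without running anything.

```python
from typing import List, Sequence


def build_launch_command(servers: Sequence[str], gpus_per_server: int = 8,
                         script: str = "train_ofa_net.py") -> List[str]:
    """Return a horovodrun argument list with one process per GPU slot."""
    if not servers or gpus_per_server < 1:
        raise ValueError("need at least one server and one GPU per server")
    hosts = ",".join(f"{ip}:{gpus_per_server}" for ip in servers)
    num_procs = len(servers) * gpus_per_server  # -np must match the total slot count
    return ["horovodrun", "-np", str(num_procs), "-H", hosts, "python", script]


servers = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]  # placeholder IPs
cmd = build_launch_command(servers)
print(" ".join(cmd))
# horovodrun -np 32 -H 10.0.0.1:8,10.0.0.2:8,10.0.0.3:8,10.0.0.4:8 python train_ofa_net.py
```

On a machine with Horovod installed, the returned list could be passed to `subprocess.run(cmd, check=True)`; deriving `-np` from the server list avoids the two values drifting apart when servers are added or removed.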

Introduction Video

Hands-on Tutorial Video

Requirements

- Python 3.6+

- PyTorch 1.4.0+

- ImageNet Dataset

- Horovod
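A minimal sketch for confirming the Python and PyTorch minimums above (Horovod and the ImageNet data are not checked). Version strings need numeric comparison because plain string comparison gets them wrong: `"1.10.0" < "1.4.0"` is `True` for strings. `version_tuple` and `meets_minimum` are hypothetical helpers, not part of the `ofa` package, and the PyTorch check runs only if `torch` can be imported.

```python
import re
import sys
from typing import Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """'1.4.0+cu101' -> (1, 4, 0): drop local build tags, keep numeric parts."""
    parts = []
    for piece in version.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed: str, required: str) -> bool:
    """Compare versions numerically, padding with zeros so '1.4' equals '1.4.0'."""
    a, b = version_tuple(installed), version_tuple(required)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


print("Python 3.6+:", sys.version_info >= (3, 6))
try:
    import torch
    print("PyTorch 1.4.0+:", meets_minimum(torch.__version__, "1.4.0"))
except ImportError:
    print("PyTorch is not installed")
```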

Related work on automated and efficient deep learning:

ProxylessNAS: Direct Neural Architecture Search on Target Task and Hardware (ICLR’19)

AutoML for Architecting Efficient and Specialized Neural Networks (IEEE Micro)

AMC: AutoML for Model Compression and Acceleration on Mobile Devices (ECCV’18)

HAQ: Hardware-Aware Automated Quantization (CVPR’19, oral)