
Open-SESAME

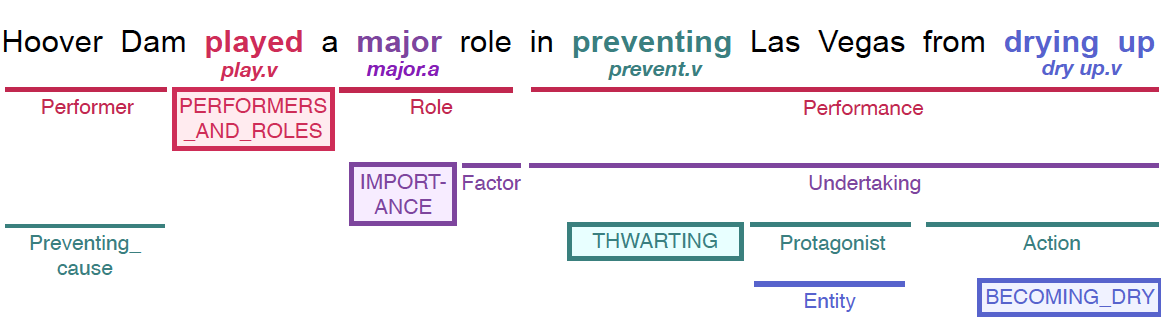

A frame-semantic parser for automatically detecting FrameNet frames and their frame-elements from sentences. The model is based on softmax-margin segmental recurrent neural nets, described in our paper Frame-Semantic Parsing with Softmax-Margin Segmental RNNs and a Syntactic Scaffold. An example of a frame-semantic parse is shown below
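To make the task concrete, the sketch below represents one frame-semantic parse as plain Python data: a target, the lexical unit it instantiates, the frame it evokes, and labeled frame-element spans. The sentence, the Commerce_buy frame and its Buyer and Goods frame elements are an illustrative FrameNet example chosen for this sketch, not Open-SESAME output, and the class names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class FrameElement:
    """A labeled argument span; start and end are inclusive token indices."""
    label: str
    start: int
    end: int


@dataclass
class FrameParse:
    """One target with its lexical unit, evoked frame and frame elements."""
    target: Tuple[int, int]          # inclusive token span of the target
    lexical_unit: str                # FrameNet-style "lemma.pos" name
    frame: str
    elements: List[FrameElement] = field(default_factory=list)

    def describe(self, tokens: List[str]) -> List[str]:
        """Render each frame element as 'Label: text' for inspection."""
        return [
            f"{fe.label}: {' '.join(tokens[fe.start:fe.end + 1])}"
            for fe in self.elements
        ]


tokens = ["Sam", "bought", "a", "car", "."]
parse = FrameParse(
    target=(1, 1),
    lexical_unit="buy.v",
    frame="Commerce_buy",
    elements=[FrameElement("Buyer", 0, 0), FrameElement("Goods", 2, 3)],
)
print(parse.frame, parse.describe(tokens))
# Commerce_buy ['Buyer: Sam', 'Goods: a car']
```

A sentence can contain several targets, so a full parse of it would be a list of such records, one per target.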

Installation

This project is built on python==3.7.9 and the DyNet library. Additionally, it uses some packages from NLTK.

$ pip install dynet==2.0.3

$ pip install nltk==3.5

$ python -m nltk.downloader averaged_perceptron_tagger wordnet
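A small sketch, using only the standard library, that confirms the pinned versions above are the ones Python actually sees. The PINNED mapping mirrors the pip commands, the function name is hypothetical, and the check does not cover the NLTK data packages. On Python 3.7 it assumes the importlib_metadata backport is installed, since importlib.metadata only joined the standard library in 3.8.

```python
"""Check that the pinned dependencies from the installation steps are present."""
from typing import Dict

try:  # Python 3.8+ ships importlib.metadata in the standard library
    from importlib.metadata import PackageNotFoundError, version
except ImportError:  # Python 3.7: fall back to the importlib_metadata backport
    from importlib_metadata import PackageNotFoundError, version

PINNED = {"dynet": "2.0.3", "nltk": "3.5"}


def version_mismatches(pinned: Dict[str, str]) -> Dict[str, str]:
    """Map each package whose installed version differs from its pin to what was found."""
    problems = {}
    for package, wanted in pinned.items():
        try:
            found = version(package)
        except PackageNotFoundError:
            found = "not installed"
        if found != wanted:
            problems[package] = found
    return problems


if __name__ == "__main__":
    mismatches = version_mismatches(PINNED)
    for package, found in mismatches.items():
        print(f"{package}: expected {PINNED[package]}, found {found}")
    if not mismatches:
        print("All pinned packages found.")
```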

Data Preprocessing

This codebase only handles data in the XML format used by FrameNet. The default version is FrameNet 1.7, but the codebase is backward compatible with versions 1.6 and 1.5.

As a first step, the data is preprocessed into a more readable format.

- Clone the repository.

$ git clone https://github.com/swabhs/open-sesame.git

$ cd open-sesame/

- Create a directory for the data, $DATA, containing the (extracted) FrameNet version 1.7 data. The data should be under $DATA/fndata-1.7/.

- This project also uses pretrained GloVe word embeddings of 100 dimensions, trained on 6B tokens. Download and extract them under $DATA/embeddings_glove/.

- Optionally, modify the configuration in configurations/global_config.json if you decide to use a different version of FrameNet, different pretrained embeddings, and so on.

- In this repository, data is stored in a format similar to CoNLL 2009, but with BIO tags, which is easier to read than the original XML format. See sample CoNLL formatting here. Preprocess the data by executing:

$ python -m sesame.preprocess

The above script writes the train, dev and test files in the required format into the data/neural/fn1.7/ directory. A large fraction of the annotations are either incomplete or inconsistent; such annotations are discarded, but they are logged in preprocess-fn1.7.log along with the respective error messages. To include exemplars, add the --exemplar option to the above command.
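BIO tags mark each token as beginning (B), inside (I) or outside (O) a labeled span. The sketch below converts frame-element spans to per-token BIO tags and back, for a five-token sentence such as "Sam bought a car .". The B-/I- prefix convention, the inclusive span ends and the function names are assumptions for illustration; they may not match the exact tag strings Open-SESAME writes.

```python
from typing import List, Optional, Tuple

Span = Tuple[str, int, int]  # (label, start, end), end inclusive


def spans_to_bio(num_tokens: int, spans: List[Span]) -> List[str]:
    """Convert non-overlapping labeled spans into one BIO tag per token."""
    tags = ["O"] * num_tokens
    for label, start, end in spans:
        if not 0 <= start <= end < num_tokens:
            raise ValueError(f"span ({start}, {end}) is out of range")
        tags[start] = f"B-{label}"
        for i in range(start + 1, end + 1):
            tags[i] = f"I-{label}"
    return tags


def bio_to_spans(tags: List[str]) -> List[Span]:
    """Recover (label, start, end) spans from BIO tags; a stray I- tag opens a span."""
    spans: List[Span] = []
    current: Optional[List] = None  # [label, start, end] of the open span
    for i, tag in enumerate(tags):
        if tag.startswith("I-") and current is not None and current[0] == tag[2:]:
            current[2] = i  # extend the open span
            continue
        if current is not None:  # any other tag closes the open span
            spans.append((current[0], current[1], current[2]))
            current = None
        if tag.startswith(("B-", "I-")):
            current = [tag[2:], i, i]
    if current is not None:
        spans.append((current[0], current[1], current[2]))
    return spans


tags = spans_to_bio(5, [("Buyer", 0, 0), ("Goods", 2, 3)])
print(tags)                # ['B-Buyer', 'O', 'B-Goods', 'I-Goods', 'O']
print(bio_to_spans(tags))  # [('Buyer', 0, 0), ('Goods', 2, 3)]
```

The B- prefix matters when two spans with the same label are adjacent: without it, their boundary could not be recovered from the tags.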

Training

Frame-semantic parsing involves target identification, frame identification and argument identification; each step is trained independently of the others. Details can be found in our paper and in the sections below.

To train a model, execute:

$ python -m sesame.$MODEL --mode train --model_name $MODEL_NAME

The possible values of $MODEL are listed below. Training saves the model that performs best on the validation data to logs/$MODEL_NAME/best-$MODEL-1.7-model. The same directory also holds a configurations.json file with the current model configuration.

If training gets interrupted, it can be restarted from the last saved checkpoint by specifying --mode refresh.
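The train, refresh and test invocations differ only in --mode, so a small helper can assemble them. This sketch builds the argument lists from the command pattern above and the documented output path logs/$MODEL_NAME/best-$MODEL-1.7-model. It assumes FrameNet 1.7 and a working directory at the repository root; the function names and the model name my-frameid are hypothetical.

```python
import sys
from pathlib import Path
from typing import List

MODELS = ("targetid", "frameid", "argid")
MODES = ("train", "refresh", "test")


def sesame_command(model: str, mode: str, model_name: str) -> List[str]:
    """Build the `python -m sesame.<model>` argument list for one stage."""
    if model not in MODELS:
        raise ValueError(f"unknown model {model!r}; expected one of {MODELS}")
    if mode not in MODES:
        raise ValueError(f"unknown mode {mode!r}; expected one of {MODES}")
    return [sys.executable, "-m", f"sesame.{model}",
            "--mode", mode, "--model_name", model_name]


def best_model_path(model: str, model_name: str) -> Path:
    """Where training saves the best model on validation data (FN 1.7)."""
    return Path("logs") / model_name / f"best-{model}-1.7-model"


if __name__ == "__main__":
    # Print the commands for one model; pass any of them to
    # subprocess.run(cmd, check=True) to execute it.
    for mode in MODES:
        print(" ".join(sesame_command("frameid", mode, "my-frameid")))
    print("Best model:", best_model_path("frameid", "my-frameid"))
```

Using sys.executable keeps the commands on the same interpreter (and virtual environment) that runs the helper.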

Pre-trained Models

The downloaded archives need to be placed in the base directory of the repository. On extraction, they create a logs/ directory containing pretrained models for target identification, frame identification using gold targets, and argument identification using gold targets and frames.

Note: There is a known open issue where the pretrained models do not replicate the reported performance on a different machine. It is recommended to train and test from scratch, which replicates the performance reported below within a small margin of error.

| Task | FN 1.5 Dev | FN 1.5 Test | FN 1.5 Models | FN 1.7 Dev | FN 1.7 Test | FN 1.7 Models |
|---|---|---|---|---|---|---|
| Target ID | 79.85 | 73.23 | Download | 80.26 | 73.25 | Download |
| Frame ID | 89.27 | 86.40 | Download | 89.74 | 86.55 | Download |
| Arg ID | 60.60 | 59.48 | Download | 61.21 | 61.36 | Download |

Test

The models for target identification, frame identification and argument identification need to be executed in that order. To test a given model, execute:

$ python -m sesame.$MODEL --mode test --model_name $MODEL_NAME

The output, in a CoNLL 2009-like format, is written to logs/$MODEL_NAME/predicted-1.7-$MODEL-test.conll. For frame and argument identification, the output is also written in the frame-elements file format to logs/$MODEL_NAME/predicted-1.7-$MODEL-test.fes.
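To inspect the predicted .conll files, a generic reader is often enough. This sketch assumes the usual CoNLL conventions (one token per line, tab-separated columns, a blank line between blocks) and deliberately does not assume what each column means; check a sample output file for the actual column layout. The function name is hypothetical.

```python
import sys
from typing import Iterable, List


def read_conll_blocks(lines: Iterable[str]) -> List[List[List[str]]]:
    """Group CoNLL-style lines into blocks, each a list of token rows (columns)."""
    blocks: List[List[List[str]]] = []
    current: List[List[str]] = []
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():  # a blank line closes the current block
            if current:
                blocks.append(current)
                current = []
            continue
        current.append(line.split("\t"))
    if current:  # the file may not end with a blank line
        blocks.append(current)
    return blocks


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python read_conll.py logs/<model_name>/predicted-1.7-<model>-test.conll
    with open(sys.argv[1], encoding="utf-8") as handle:
        blocks = read_conll_blocks(handle)
    print(len(blocks), "blocks;", sum(len(b) for b in blocks), "token rows")
```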

1. Target Identification

$MODEL = targetid

Targets are identified by a bidirectional LSTM model that takes into account the FrameNet lexical unit index. This model is not described in the paper. Moreover, FN 1.7 exemplars cannot be used for target identification.
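One way a lexical unit index can constrain target identification is to consider only tokens whose lemma and part of speech match a FrameNet LU name such as buy.v. The sketch below illustrates that filtering step under this assumption; it is not the BiLSTM itself. The miniature index, the Penn Treebank to FrameNet POS mapping and the function name are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical miniature LU index: FrameNet-style "lemma.pos" names -> evoked frames.
LU_INDEX: Dict[str, Set[str]] = {
    "buy.v": {"Commerce_buy"},
    "purchase.v": {"Commerce_buy"},
}

# Assumed mapping from Penn Treebank tag prefixes to FrameNet LU POS suffixes.
PTB_TO_FN = {"VB": "v", "NN": "n", "JJ": "a", "RB": "adv"}


def candidate_targets(tagged: List[Tuple[str, str, str]]) -> List[Tuple[int, str]]:
    """Return (token index, LU name) for tokens whose lemma.pos is in the index.

    Each input item is (token, lemma, Penn Treebank POS tag).
    """
    candidates = []
    for i, (_token, lemma, ptb_tag) in enumerate(tagged):
        fn_pos = PTB_TO_FN.get(ptb_tag[:2])  # e.g. "VBD" -> "VB" -> "v"
        if fn_pos is None:
            continue
        lu_name = f"{lemma.lower()}.{fn_pos}"
        if lu_name in LU_INDEX:
            candidates.append((i, lu_name))
    return candidates


tagged = [("Sam", "Sam", "NNP"), ("bought", "buy", "VBD"), ("a", "a", "DT"),
          ("car", "car", "NN"), (".", ".", ".")]
print(candidate_targets(tagged))  # [(1, 'buy.v')]
```

A learned model can then decide which of these candidates are actually used as targets in context.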

2. Frame Identification

$MODEL = frameid

Frame identification is based on a bidirectional LSTM model. Targets and their respective lexical units need to be identified before this step. At test time, an example-wise analysis is logged in the model directory. Exemplars can be used for frame identification by passing the --exemplar flag during training, but they do not help; in fact, they reduce performance to 88.17.
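Because the lexical unit is known before this step, a natural design, and the assumption made in this sketch, is to pick the best-scoring frame only among the frames that LU can evoke. The scores would come from the BiLSTM; here they are a hypothetical dictionary, and the frame names are placeholders.

```python
from typing import Dict, Iterable


def pick_frame(scores: Dict[str, float], allowed: Iterable[str]) -> str:
    """Return the highest-scoring frame among those the lexical unit can evoke.

    Frames outside `allowed` are ignored even if they score higher.
    """
    allowed_scores = {frame: scores[frame] for frame in allowed if frame in scores}
    if not allowed_scores:
        raise ValueError("no scored frame is allowed for this lexical unit")
    return max(allowed_scores, key=allowed_scores.get)


# Hypothetical model scores; Frame_C scores highest but the LU cannot evoke it.
scores = {"Frame_A": 0.2, "Frame_B": 0.5, "Frame_C": 0.9}
print(pick_frame(scores, allowed=["Frame_A", "Frame_B"]))  # Frame_B
```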

3. Argument (Frame-Element) Identification

$MODEL = argid

Argument identification is based on a segmental recurrent neural net, used as the baseline in the paper. Before this step, targets and their respective lexical units need to be identified, and the frames corresponding to those LUs predicted. At test time, an example-wise analysis is logged in the model directory. Exemplars can be used for argument identification by passing the --exemplar flag during training.
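A segmental model scores whole spans rather than single tokens, so decoding searches over segmentations of the sentence. Below is a minimal semi-Markov dynamic program under stated assumptions: every token belongs to exactly one labeled segment, segments are at most max_len tokens long, and a hypothetical score(start, end, label) function stands in for the RNN's span scores. It shows the decoding idea only; it is not Open-SESAME's implementation and omits the softmax-margin training objective.

```python
from typing import Callable, List, Sequence, Tuple

Segment = Tuple[int, int, str]  # (start, end exclusive, label)


def segmental_viterbi(
    n: int,
    labels: Sequence[str],
    score: Callable[[int, int, str], float],
    max_len: int,
) -> Tuple[float, List[Segment]]:
    """Find the highest-scoring segmentation of n tokens into labeled segments."""
    if max_len < 1:
        raise ValueError("max_len must be at least 1")
    best = [float("-inf")] * (n + 1)  # best[j]: best score covering tokens [0, j)
    back: List[Segment] = [(0, 0, "")] * (n + 1)
    best[0] = 0.0
    for end in range(1, n + 1):
        for start in range(max(0, end - max_len), end):
            for label in labels:
                candidate = best[start] + score(start, end, label)
                if candidate > best[end]:
                    best[end] = candidate
                    back[end] = (start, end, label)
    # Follow back-pointers from the end of the sentence to recover the segments.
    segments: List[Segment] = []
    position = n
    while position > 0:
        segment = back[position]
        segments.append(segment)
        position = segment[0]
    segments.reverse()
    return best[n], segments


# Toy scores for "Sam bought a car .": two rewarded FE spans, free single-token "O".
TOY = {(0, 1, "Buyer"): 2.0, (2, 4, "Goods"): 3.0}


def toy_score(start: int, end: int, label: str) -> float:
    if (start, end, label) in TOY:
        return TOY[(start, end, label)]
    return 0.0 if (label == "O" and end - start == 1) else -1.0


print(segmental_viterbi(5, ["O", "Buyer", "Goods"], toy_score, max_len=3))
# (5.0, [(0, 1, 'Buyer'), (1, 2, 'O'), (2, 4, 'Goods'), (4, 5, 'O')])
```

The cost is O(n · max_len · |labels|) score calls, which is why segment length is usually capped.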

Prediction on unannotated data

Predicting targets, frames and arguments on unannotated data requires pretrained models. The input must be a file containing one sentence per line. The following steps produce the full frame-semantic parse of the sentences:

$ python -m sesame.targetid --mode predict \
    --model_name fn1.7-pretrained-targetid \
    --raw_input sentences.txt

$ python -m sesame.frameid --mode predict \
    --model_name fn1.7-pretrained-frameid \
    --raw_input logs/fn1.7-pretrained-targetid/predicted-targets.conll

$ python -m sesame.argid --mode predict \
    --model_name fn1.7-pretrained-argid \
    --raw_input logs/fn1.7-pretrained-frameid/predicted-frames.conll

The resulting frame-semantic parses will be written to logs/fn1.7-pretrained-argid/predicted-args.conll in the same CoNLL 2009-like format.
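The three prediction commands form a chain in which each stage reads the previous stage's output. The sketch below assembles that chain from the model names and file paths given above and runs the stages in order with subprocess. It assumes it is launched from the repository root with the pretrained models extracted under logs/; the function names are hypothetical.

```python
import subprocess
import sys
from typing import List

# (module, pretrained model name, file the stage writes), as in the commands above.
STAGES = [
    ("targetid", "fn1.7-pretrained-targetid", "predicted-targets.conll"),
    ("frameid", "fn1.7-pretrained-frameid", "predicted-frames.conll"),
    ("argid", "fn1.7-pretrained-argid", "predicted-args.conll"),
]


def prediction_commands(sentences_file: str) -> List[List[str]]:
    """Build the predict commands, feeding each stage the previous stage's output."""
    commands = []
    input_path = sentences_file
    for module, model_name, output_file in STAGES:
        commands.append([sys.executable, "-m", f"sesame.{module}", "--mode", "predict",
                         "--model_name", model_name, "--raw_input", input_path])
        input_path = f"logs/{model_name}/{output_file}"
    return commands


def run_pipeline(sentences_file: str) -> str:
    """Run all three stages in order, stop at the first failure, return the final output path."""
    for command in prediction_commands(sentences_file):
        subprocess.run(command, check=True)
    _, model_name, output_file = STAGES[-1]
    return f"logs/{model_name}/{output_file}"
```

For example, run_pipeline("sentences.txt") would return logs/fn1.7-pretrained-argid/predicted-args.conll once all three stages succeed; check=True makes a failing stage raise instead of silently feeding a missing file to the next one.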

Contact and Reference

For questions and usage issues, please contact [email protected]. If you use open-sesame for research, please cite our paper as follows:

@article{swayamdipta:17,
  title={{Frame-Semantic Parsing with Softmax-Margin Segmental RNNs and a Syntactic Scaffold}},
  author={Swabha Swayamdipta and Sam Thomson and Chris Dyer and Noah A. Smith},
  journal={arXiv preprint arXiv:1706.09528},
  year={2017}
}

Copyright [2018] [Swabha Swayamdipta]