will4906 / Patentcrawler

Licence: apache-2.0

Scrapy patent crawler (no longer maintained)

Stars: ✭ 114

Programming Languages

python


Projects that are alternatives of or similar to Patentcrawler

Icrawler

A multithreaded crawler framework with many built-in image crawlers.

Stars: ✭ 629 (+451.75%)

Mutual labels: crawler, scrapy

Universityrecruitment Ssurvey

Using rigorous data to answer the question: "What kinds of companies recruit at what kinds of universities?"

Stars: ✭ 30 (-73.68%)

Mutual labels: crawler, data

Taiwan News Crawlers

Scrapy-based Crawlers for news of Taiwan

Stars: ✭ 83 (-27.19%)

Mutual labels: crawler, scrapy

Scrapy Azuresearch Crawler Samples

Scrapy as a Web Crawler for Azure Search Samples

Stars: ✭ 20 (-82.46%)

Mutual labels: crawler, scrapy

Terpene Profile Parser For Cannabis Strains

Parser and database to index the terpene profile of different strains of Cannabis from online databases

Stars: ✭ 63 (-44.74%)

Mutual labels: crawler, scrapy

Scrapy Redis

Redis-based components for Scrapy.

Stars: ✭ 4,998 (+4284.21%)

Mutual labels: crawler, scrapy

Dotnetcrawler

DotnetCrawler is a straightforward, lightweight web crawling/scraping library for Entity Framework Core output, based on .NET Core. The library is designed like other strong crawler libraries such as WebMagic and Scrapy, but is extensible for your custom requirements. Medium link: https://medium.com/@mehmetozkaya/creating-custom-web-crawler-with-dotnet-core-using-entity-framework-core-ec8d23f0ca7c

Stars: ✭ 100 (-12.28%)

Mutual labels: crawler, scrapy

Haipproxy

💖 Highly available distributed IP proxy pool, powered by Scrapy and Redis

Stars: ✭ 4,993 (+4279.82%)

Mutual labels: crawler, scrapy

Easy Scraping Tutorial

Simple but useful Python web scraping tutorial code.

Stars: ✭ 583 (+411.4%)

Mutual labels: crawler, scrapy

Scrapple

A framework for creating semi-automatic web content extractors

Stars: ✭ 464 (+307.02%)

Mutual labels: crawler, scrapy

Ghcrawler

Crawl GitHub APIs and store the discovered orgs, repos, commits, ...

Stars: ✭ 293 (+157.02%)

Mutual labels: crawler, data

Crawlab

Distributed web crawler admin platform for managing spiders, regardless of language or framework.

Stars: ✭ 8,392 (+7261.4%)

Mutual labels: crawler, scrapy

Scrapoxy

Scrapoxy hides your scraper behind a cloud. It starts a pool of proxies to send your requests. Now, you can crawl without thinking about blacklisting!

Stars: ✭ 1,322 (+1059.65%)

Mutual labels: crawler, scrapy

PatentCrawler

Patent crawler

See the wiki for usage instructions.

Config

- The configuration file is config/config.ini.

- At a minimum, username and password must be filled in.
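As a minimal sketch of what "fill in username and password" implies, the snippet below parses an INI file with Python's standard `configparser` and fails early if either value is missing. The README only names the file path and the two keys; the section name `[account]` and the `load_credentials` helper are hypothetical, so check the project's actual config.ini for the real layout.

```python
import configparser

# Hypothetical layout: the README only says config/config.ini needs
# username and password; the section name "account" is an assumption.
SAMPLE_INI = """
[account]
username = your_username
password = your_password
"""


def load_credentials(text, section="account"):
    """Parse INI text and return (username, password); raise if either is missing or empty."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    if not parser.has_section(section):
        raise KeyError(f"missing [{section}] section")
    username = parser.get(section, "username", fallback="").strip()
    password = parser.get(section, "password", fallback="").strip()
    if not username or not password:
        raise ValueError("username and password must both be set")
    return username, password


if __name__ == "__main__":
    print(load_credentials(SAMPLE_INI))
```

To read the real file instead of the sample string, use `parser.read("config/config.ini", encoding="utf-8")` in place of `read_string`.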

Environment

- pip install -r requirements.txt

- CAPTCHAs are recognized with a k-nearest neighbors (KNN) algorithm; see https://github.com/will4906/CaptchaRecognition for details.

- The proxy module uses https://github.com/jhao104/proxy_pool
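The CAPTCHA recognizer is described only as k-nearest neighbors, so the following is a generic KNN sketch rather than the CaptchaRecognition implementation. It assumes each character has already been segmented into a small grayscale grid; the `binarize` threshold, the flattened 0/1 feature vectors, Euclidean distance, and `k=3` are all illustrative assumptions.

```python
import math
from collections import Counter


def binarize(pixels, threshold=128):
    """Flatten a 2-D grid of grayscale values (0-255) into a 0/1 tuple; dark pixels become 1."""
    return tuple(1 if p < threshold else 0 for row in pixels for p in row)


def knn_predict(train, sample, k=3):
    """Return the majority label among the k training vectors nearest to `sample`.

    `train` is a list of (feature_tuple, label) pairs; distance is Euclidean.
    """
    nearest = sorted((math.dist(features, sample), label) for features, label in train)[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Toy 3x3 glyphs standing in for segmented CAPTCHA characters (hypothetical data).
    train = [
        ((1, 1, 1, 1, 0, 1, 1, 1, 1), "0"),
        ((1, 1, 1, 1, 0, 1, 1, 1, 0), "0"),
        ((0, 1, 0, 0, 1, 0, 0, 1, 0), "1"),
        ((0, 1, 0, 1, 1, 0, 0, 1, 0), "1"),
    ]
    glyph = [[0, 0, 0], [0, 255, 0], [255, 0, 0]]
    print(knn_predict(train, binarize(glyph)))  # prints 0
```

Sorting every training sample is simple and adequate for small labeled sets; for larger ones, `heapq.nsmallest(k, ...)` avoids the full sort.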
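Since PatentCrawler is built on Scrapy and draws proxies from jhao104/proxy_pool, one plausible wiring is a downloader middleware that sets `request.meta["proxy"]`, which Scrapy's built-in `HttpProxyMiddleware` honors. This is a hedged sketch, not the project's own code: it assumes a proxy_pool instance is listening on `127.0.0.1:5010` and that `/get/` returns JSON with a `"proxy"` field (recent proxy_pool releases do this; older ones returned plain text). `ProxyPoolMiddleware` and `fetch_proxy` are hypothetical names.

```python
import json
import urllib.request

# Assumption: a local proxy_pool instance serving its HTTP API at this address.
PROXY_POOL_URL = "http://127.0.0.1:5010/get/"


def fetch_proxy(url=PROXY_POOL_URL, opener=urllib.request.urlopen):
    """Ask the proxy pool for one proxy and return it as "host:port"."""
    with opener(url, timeout=5) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    return payload["proxy"]  # assumes the JSON response format


class ProxyPoolMiddleware:
    """Hypothetical Scrapy downloader middleware routing requests through pooled proxies."""

    def __init__(self, fetch=fetch_proxy):
        self.fetch = fetch

    def process_request(self, request, spider):
        # Respect a proxy already chosen elsewhere; otherwise pull one from the pool.
        if "proxy" not in request.meta:
            request.meta["proxy"] = "http://" + self.fetch()
        return None  # let Scrapy continue processing the request
```

To enable it, register it in `settings.py` with an order below 750 so it runs before `HttpProxyMiddleware`, for example `DOWNLOADER_MIDDLEWARES = {"myproject.middlewares.ProxyPoolMiddleware": 740}` (the module path is illustrative).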

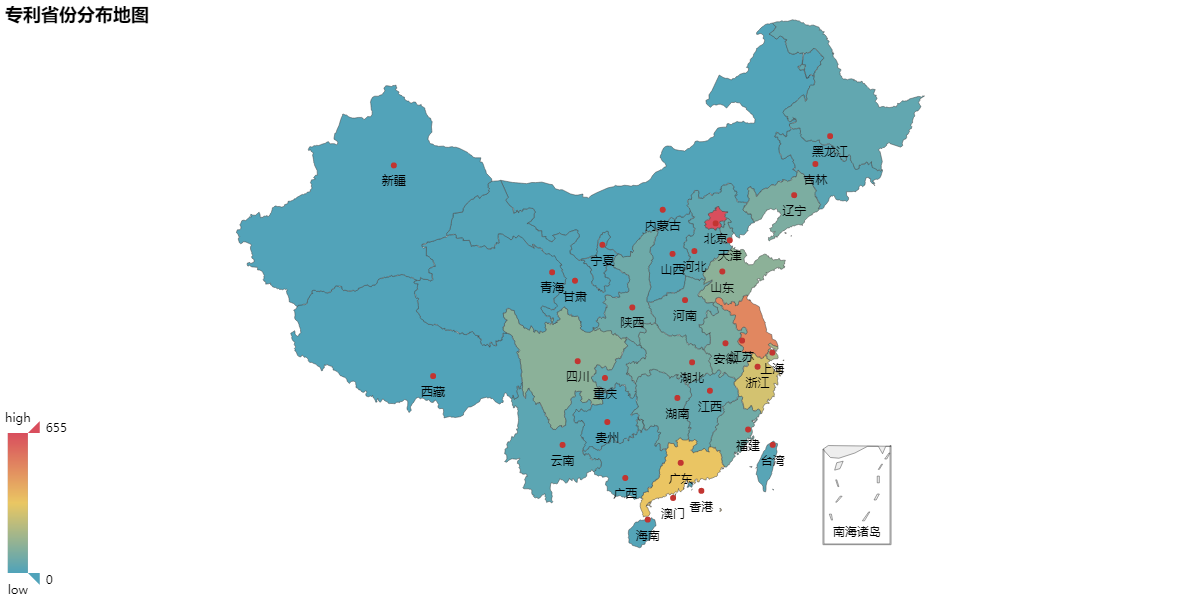

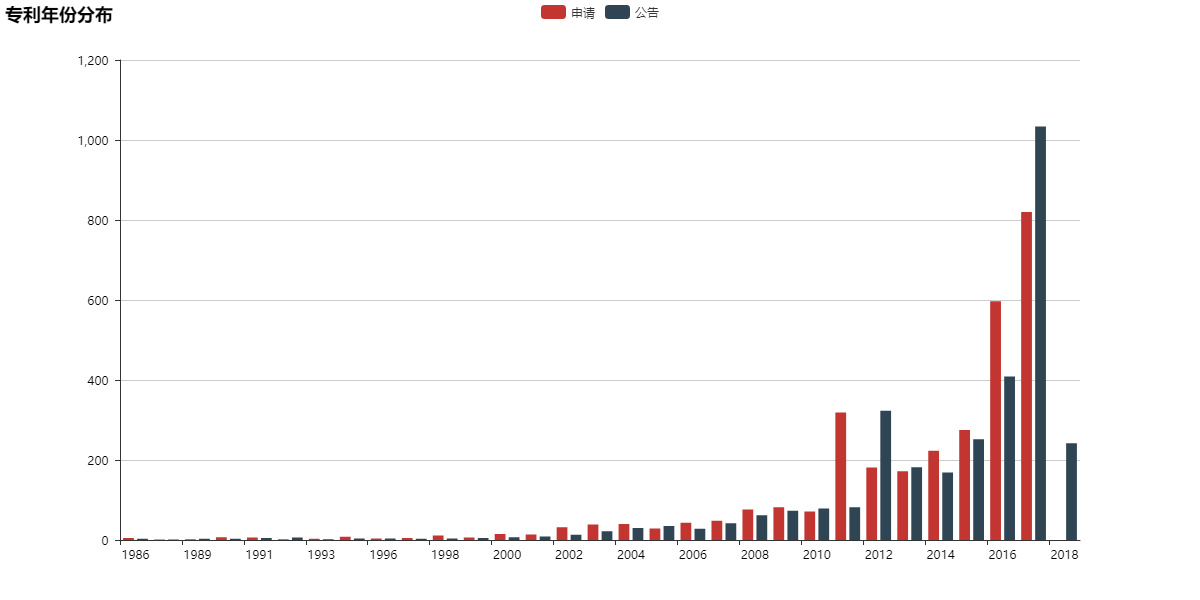

Data Visualization

License

PatentCrawler is released under the Apache 2.0 license.

Copyright 2017 willshuhua.me.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this file except in compliance with the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Thanks for your support

| Donate | |
| --- | --- |
| WeChat | Alipay |
