
Pluralistic Image Completion

ArXiv | Project Page | Online Demo | Video(demo)

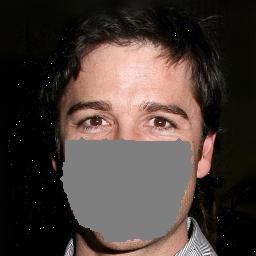

This repository implements the training, testing and editing tools for "Pluralistic Image Completion" by Chuanxia Zheng, Tat-Jen Cham and Jianfei Cai at NTU. Given one masked image, the proposed Pluralistic model can generate multiple diverse, plausible results with varied structure, color and texture.

Editing example

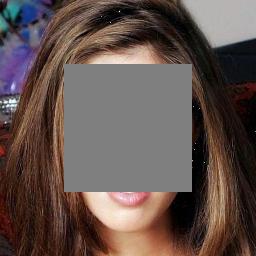

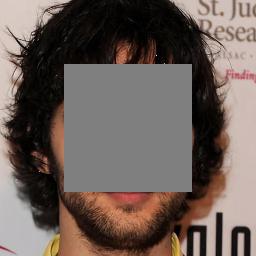

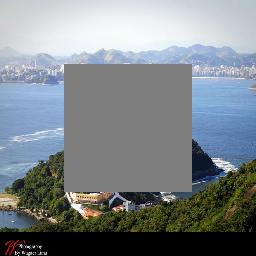

Example results


Example completion results of our method on images of faces (CelebA), buildings (Paris), and natural scenes (Places2) with center masks (masks shown in gray). For each group, the masked input image is shown on the left, followed by sampled results from our model without any post-processing. The results are diverse and plausible.

More results are available on the project page.

Getting started

Installation

This code was tested with PyTorch 0.4.0, CUDA 9.0, Python 3.6 and Ubuntu 16.04.

- Install PyTorch 0.4, torchvision, and other dependencies from http://pytorch.org

- Install the Python libraries visdom and dominate for visualization:

pip install visdom dominate

- Clone this repo:

git clone https://github.com/lyndonzheng/Pluralistic

cd Pluralistic

Datasets

- face dataset: 24183 training images and 2824 test images from CelebA, using the algorithm of Growing GANs to obtain the high-resolution CelebA-HQ dataset

- building dataset: 14900 training images and 100 test images from Paris

- natural scenery: original training and val images from Places2

- object: original training images from ImageNet

Training

- Train a model (default: random regular and irregular holes):

python train.py --name celeba_random --img_file your_image_path

- Set --mask_type in options/base_options.py for different training masks. A --mask_file path is needed for external irregular masks, such as the irregular mask datasets provided by Liu et al. and Karim Iskakov.

- To view training results and loss plots, run

python -m visdom.server

and open the URL http://localhost:8097 in a browser.

- Trained models will be saved under the checkpoints folder.

- More training options can be found in the options folder.
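The default training masks are random regular and irregular holes. As a minimal sketch of the regular case only, assuming a binary mask in which 1 marks a missing pixel, a random rectangular hole could be generated as below. The function name random_rectangle_mask and the min_frac/max_frac size limits are hypothetical illustrations, not the repository's own mask code; irregular and external (--mask_file) masks are not covered.

```python
import random


def random_rectangle_mask(height, width, min_frac=0.1, max_frac=0.5, rng=None):
    """Return a height x width binary mask (1 = hole, 0 = known pixel) with one
    axis-aligned rectangular hole. Hole sides are drawn between min_frac and
    max_frac of the image size (hypothetical limits)."""
    rng = rng or random.Random()
    # Sample the hole size, keeping at least one pixel per side.
    hole_h = rng.randint(max(1, int(height * min_frac)), max(1, int(height * max_frac)))
    hole_w = rng.randint(max(1, int(width * min_frac)), max(1, int(width * max_frac)))
    # Sample the top-left corner so the hole stays fully inside the image.
    top = rng.randint(0, height - hole_h)
    left = rng.randint(0, width - hole_w)
    mask = [[0] * width for _ in range(height)]
    for y in range(top, top + hole_h):
        for x in range(left, left + hole_w):
            mask[y][x] = 1
    return mask


if __name__ == "__main__":
    mask = random_rectangle_mask(256, 256, rng=random.Random(0))
    print("hole pixels:", sum(map(sum, mask)))
```

Passing a seeded random.Random makes the sampled hole reproducible, which is convenient when comparing runs.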

Testing

- Test the model

python test.py --name celeba_random --img_file your_image_path

- Set --mask_type in options/base_options.py to test various masks. A --mask_file path is needed for mask type 3 (external irregular masks).

- By default, results are saved under the results folder. Set --results_dir to save them to a different path.
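The test options named above can be mirrored in a small argparse parser to see how they fit together. This is a hypothetical sketch: the real options are defined in the options folder and may use different types and defaults. Here --mask_type is assumed to take one or more integer codes, of which only 3 (external irregular mask) is documented in this README.

```python
import argparse


def build_test_parser():
    """Hypothetical parser mirroring the test options named in this README.
    The real definitions live in the options folder and may differ."""
    parser = argparse.ArgumentParser(description="Pluralistic test options (sketch)")
    parser.add_argument("--name", required=True, help="experiment name, e.g. celeba_random")
    parser.add_argument("--img_file", required=True, help="path to the test images")
    # Assumed: one or more integer mask type codes; 3 = external irregular mask.
    parser.add_argument("--mask_type", type=int, nargs="+", default=None,
                        help="mask type code(s) to test")
    parser.add_argument("--mask_file", default=None,
                        help="path to external irregular masks")
    parser.add_argument("--results_dir", default="./results/",
                        help="directory where results are saved")
    return parser


def check_options(opt):
    """Reject mask type 3 (external irregular mask) without a --mask_file path."""
    mask_types = opt.mask_type or []
    if 3 in mask_types and not opt.mask_file:
        raise ValueError("--mask_type 3 needs a --mask_file path")
    return opt
```

For example, check_options(build_test_parser().parse_args(["--name", "celeba_random", "--img_file", "imgs", "--mask_type", "3", "--mask_file", "masks"])) accepts the options, while omitting --mask_file raises a ValueError.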

Pretrained Models

Download the pre-trained models using the following links and put them under the checkpoints/ directory.

- center_mask models: CelebA_center | Paris_center | Places2_center | Imagenet_center

- random_mask models: CelebA_random | Paris_random | Places2_random | Imagenet_random

The main novelty of this project is producing multiple diverse, plausible results for a single masked image. The center_mask models are trained on 256x256 images with 128x128 center holes; because so much information is missing, their outputs are highly diverse. The random_mask models are trained with random regular and irregular holes, so their diversity varies with the mask size and the image background.
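For the center_mask setting, the 128x128 hole sits at offset (256 - 128) / 2 = 64 in both directions, so it covers rows and columns 64 through 191. A minimal sketch, assuming a single-channel image stored as a list of rows and the convention 1 = hole, that builds this mask and grays out the hole for display. The helper names and the gray level 128 are assumptions, not taken from the repository.

```python
def center_mask(size=256, hole=128):
    """Return a size x size binary mask (1 = hole) with a centered hole x hole square."""
    start = (size - hole) // 2  # 64 for the default 256 / 128 setting
    return [[1 if start <= y < start + hole and start <= x < start + hole else 0
             for x in range(size)]
            for y in range(size)]


def show_masked(image, mask, gray=128):
    """Replace hole pixels of a single-channel image with a gray value for display."""
    return [[gray if m else p for p, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]
```

With the defaults, sum(map(sum, center_mask())) is 128 * 128 = 16384 hole pixels, one quarter of the image.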

GUI

Download the pre-trained models from Google Drive and put them under the checkpoints/ directory.

- Install PyQt5 for the GUI:

pip install PyQt5

Basic usage is:

python -m visdom.server

python ui_main.py

The buttons in the GUI:

- Options: Select the model and the corresponding dataset for editing.

- Bush Width: Modify the brush width for free_form masks.

- draw/clear: Draw a free_form or rectangle mask for a random model, or clear all mask regions for a new input.

- load: Choose an image from a directory.

- random: Randomly load an image for editing from the datasets.

- fill: Fill the holes and show the results on the right.

- save: Save the inputs and outputs to a directory.

- Original/Output: Switch between showing the original and the output image.
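The Bush Width setting controls how thick each free_form stroke is. A minimal sketch of how one straight brush stroke could be rasterized into a binary hole mask, assuming a round brush whose diameter is width and the convention 1 = hole. draw_stroke is a hypothetical helper, not the drawing code in ui_main.py.

```python
def draw_stroke(mask, p0, p1, width):
    """Mark as hole (1) every pixel within width / 2 of the segment p0 -> p1.
    Points are (x, y) tuples; mask is a list of rows and is modified in place."""
    (x0, y0), (x1, y1) = p0, p1
    radius = width / 2.0
    dx, dy = x1 - x0, y1 - y0
    seg_len2 = dx * dx + dy * dy
    for y in range(len(mask)):
        for x in range(len(mask[0])):
            # Project the pixel onto the segment, clamping to its endpoints.
            if seg_len2 == 0:
                t = 0.0
            else:
                t = max(0.0, min(1.0, ((x - x0) * dx + (y - y0) * dy) / seg_len2))
            px, py = x0 + t * dx, y0 + t * dy
            # Inside the brush if the distance to the closest point is <= radius.
            if (x - px) ** 2 + (y - py) ** 2 <= radius * radius:
                mask[y][x] = 1
    return mask
```

A free-form mask is then just a sequence of such strokes between consecutive mouse positions; a larger width removes more of the image and, per the notes on diversity, tends to allow more varied completions.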

The steps are as follows:

1. Select a model from 'Options'.

2. Click the 'random' or 'load' button to get an input image.

3. If you chose a random model, click the 'draw/clear' button to draw a free_form mask.

4. If you chose a center model, the center mask is already provided.

5. Click the 'fill' button to get multiple results.

6. Click the 'save' button to save the results.
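Clicking 'fill' yields several completions for one input. A minimal sketch of that idea, assuming a stand-in generate(image, mask, seed) function in place of the real network, and assuming that known pixels are copied from the input while hole pixels come from each generated sample. This is a common inpainting convention; this README does not say whether the GUI composites results this way.

```python
def composite(image, generated, mask):
    """Keep known pixels from image and take hole pixels (mask == 1) from generated."""
    return [[g if m else p for p, g, m in zip(img_row, gen_row, mask_row)]
            for img_row, gen_row, mask_row in zip(image, generated, mask)]


def fill_multiple(image, mask, generate, num_samples=3):
    """Call a stand-in generator with a different seed per sample and
    composite each output with the known pixels of the input."""
    return [composite(image, generate(image, mask, seed), mask)
            for seed in range(num_samples)]
```

Varying only the seed while keeping the input and mask fixed is what makes the results differ, mirroring how one masked image maps to many plausible completions.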

Editing Example Results

- Results (original, input, output) for object removal


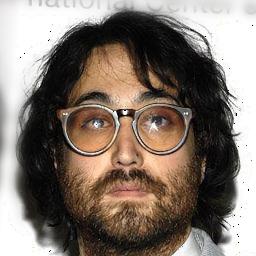

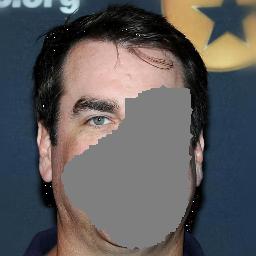

- Results (input, output) for face editing. When the left or right half of the face is masked, the diversity is small, because the short+long term attention layer copies information from the other side. When the top or bottom half of the face is masked, the diversity is large.
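One simple, illustrative way to quantify "small" versus "large" diversity is the average pairwise mean absolute pixel difference between samples generated for the same input. This is an assumption for illustration only, not the evaluation metric used in the paper; images are assumed to be single-channel lists of rows of equal size.

```python
from itertools import combinations


def mean_abs_diff(a, b):
    """Mean absolute per-pixel difference between two equally sized images."""
    total = sum(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))
    count = sum(len(r) for r in a)
    return total / count


def diversity(samples):
    """Average mean_abs_diff over all unordered pairs of samples (0.0 if fewer than two)."""
    pairs = list(combinations(samples, 2))
    if not pairs:
        return 0.0
    return sum(mean_abs_diff(a, b) for a, b in pairs) / len(pairs)
```

Under this measure, a half-face mask whose completions mostly copy the visible side would score close to 0, while top or bottom masks with varied completions would score higher.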


Next

- Free-form masks for various datasets

- Higher resolution image completion

License

This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License.

This software is for educational and academic research purposes only. If you wish to obtain a commercial royalty-bearing license for this software, please contact the authors.

Citation

If you use this code for your research, please cite our paper.

@inproceedings{zheng2019pluralistic,
  title={Pluralistic Image Completion},
  author={Zheng, Chuanxia and Cham, Tat-Jen and Cai, Jianfei},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  pages={1438--1447},
  year={2019}
}